S.M.A.R.T., smartmontools, and drive monitoring

Working Smart

Is S.M.A.R.T. Useful?

With S.M.A.R.T. attributes, you would think that you could predict failure. For example, if the drive is running too hot, then it might be more susceptible to failure; if the number of bad sectors is increasing quickly, you might think the drive would soon fail. Perhaps you can use the attributes with some general models of drive failure to predict when drives might fail and work to minimize the damage by moving data off the drives before they do fail.

Although a number of people subscribe to using S.M.A.R.T. to predict drive failure, doing so has proved difficult in practice. Google published a study [5] that examined more than 100,000 drives of various types for correlations between failure and S.M.A.R.T. values. The disks were consumer-grade SATA and PATA drives with speeds from 5,400rpm to 7,200rpm and capacities from 80GB to 400GB. The data was collected over an eight-month window.

In the study, the researchers monitored the S.M.A.R.T. attributes of the population of drives, along with which drives failed. Google chose the word "fail" to mean that the drive was not suitable for use in production, even if the drive tested "good" (sometimes the drive would test well but immediately fail in production). The researchers concluded:

Our analysis identifies several parameters from the drive's self monitoring facility (SMART) that correlate highly with failures. Despite this high correlation, we conclude that models based on SMART parameters alone are unlikely to be useful for predicting individual drive failures. Surprisingly, we found that temperature and activity levels were much less correlated with drive failures than previously reported.

Despite the overall message that they had difficulty developing correlations, the researchers did find some interesting trends.

- Google agrees with the common view that failure rates are known to be highly correlated with drive models, manufacturers, and age. However, when they normalized the S.M.A.R.T. data by the drive model, none of the conclusions changed.

- There was quite a bit of discussion about the correlation between S.M.A.R.T. attributes and failure rates. One of the best summaries in the paper is: "Out of all failed drives, over 56% of them have no count in any of the four strong S.M.A.R.T. signals, namely scan errors, reallocation count, offline reallocation, and probational count. In other words, models based only on those signals can never predict more than half of the failed drives."

- Temperature effects are interesting. High temperatures start affecting older drives (three to four years or older), but low temperatures can also increase the failure rate of drives, regardless of age.

- A section of the final paragraph of the paper bears repeating: "We find, for example, that after their first scan error, drives are 39 times more likely to fail within 60 days than drives with no such errors. First errors in reallocations, offline reallocations, and probational counts are also strongly correlated to higher failure probabilities. Despite those strong correlations, we find that failure prediction models based on S.M.A.R.T. parameters alone are likely to be severely limited in their prediction accuracy, given that a large fraction of our failed drives have shown no S.M.A.R.T. error signals whatsoever. This result suggests that S.M.A.R.T. models are more useful in predicting trends for large aggregate populations than for individual components. It also suggests that powerful predictive models need to make use of signals beyond those provided by S.M.A.R.T."

- The researchers tried to sum up all the observed factors that contributed to drive failure, such as errors or temperature, but these factors still failed to account for about 36% of the drive failures.

The paper provides good insight into the failure rate of a large population of drives. As mentioned previously, drive failure correlates somewhat with scan errors, but that correlation doesn't account for all failures: A large fraction of the failed drives showed no S.M.A.R.T. error signals at all. The final paragraph of the paper also notes that "… SMART models are more useful in predicting trends for large aggregate populations than for individual components." However, this should not keep you from watching the S.M.A.R.T. error signals and attributes to track the history of the drives in your systems. Because some correlation does seem to exist between scan errors and drive failure, a first error might be a useful trigger in your environment for making copies of critical data or shortening the interval between backups.
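
As a rough illustration of watching for first errors, the following Python sketch compares two snapshots of raw error counters for one drive and reports any counter that has just become nonzero. The function name `first_errors`, the snapshot dictionaries, and the choice of attribute names in `WATCHED` are assumptions made for this example; the paper does not map its signals to specific smartctl attribute names, and the names your drives report may differ.

```python
# Assumed mapping: smartctl attribute names that roughly correspond to the
# reallocation, probational, and offline reallocation counts in the paper.
# Attribute names vary by drive model, so adjust this tuple for your hardware.
WATCHED = (
    "Reallocated_Sector_Ct",
    "Current_Pending_Sector",
    "Offline_Uncorrectable",
)


def first_errors(previous, current, watched=WATCHED):
    """Return the watched counters that went from zero (or absent) to nonzero."""
    flagged = []
    for name in watched:
        before = previous.get(name, 0)
        now = current.get(name, 0)
        if before == 0 and now > 0:
            flagged.append(name)
    return flagged


# Hypothetical raw counts collected on two consecutive days.
yesterday = {"Reallocated_Sector_Ct": 0, "Current_Pending_Sector": 0}
today = {"Reallocated_Sector_Ct": 0, "Current_Pending_Sector": 8}

for name in first_errors(yesterday, today):
    print(f"{name} is nonzero for the first time: consider an extra backup")
```

Keep in mind that an empty result says little about the health of an individual drive, because more than half of the failed drives in the study showed no count in any of the strong signals.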

GUI Interface to S.M.A.R.T. Data

In HPC systems, you want quick answers from monitoring tools so you can understand the general status of your systems. You can get them by writing some simple scripts around the smartctl tool. In fact, it's a simple thing you can do to improve the gathering of data on system status (Google used Bigtable, their NoSQL database [6], along with MapReduce to process all of their S.M.A.R.T. data). However, remembering all the command options and looking at rows of text can be a chore sometimes. If your systems are small enough, such as a workstation, or if you need to get down and dirty with a single node, then GSmartControl [7] is a wonderful GUI tool for interacting with S.M.A.R.T. data.
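
For the scripting route, a minimal Python sketch might look like the following. It assumes the usual table layout printed by `smartctl -A` (ten or more whitespace-separated columns, with the raw value in the tenth column); the function names `parse_attributes` and `read_attributes` are made up for this example, and the column layout can differ for some drives and controllers.

```python
import subprocess
import sys


def to_int(field):
    """Convert a column to int when it is purely numeric, else keep the text."""
    return int(field) if field.isdigit() else field


def parse_attributes(text):
    """Parse the attribute table printed by `smartctl -A` into a dict."""
    attrs = {}
    for line in text.splitlines():
        fields = line.split()
        # Attribute rows start with a numeric ID and have at least 10 columns.
        if len(fields) < 10 or not fields[0].isdigit():
            continue
        attrs[fields[1]] = {
            "id": int(fields[0]),
            "value": to_int(fields[3]),
            "worst": to_int(fields[4]),
            "thresh": to_int(fields[5]),
            # Only the first token of the raw column is kept.
            "raw": to_int(fields[9]),
        }
    return attrs


def read_attributes(device):
    """Run `smartctl -A` on a device (usually requires root) and parse it."""
    # smartctl's exit status is a bitmask and can be nonzero even when the
    # attribute table was printed, so the return code is not checked here.
    result = subprocess.run(
        ["smartctl", "-A", device], capture_output=True, text=True, check=False
    )
    return parse_attributes(result.stdout)


if __name__ == "__main__":
    # Usage (hypothetical script name): python smart_attrs.py /dev/sda /dev/sdb
    for dev in sys.argv[1:]:
        for name, attr in read_attributes(dev).items():
            print(dev, name, attr["raw"])
```

The output is one line per device and attribute, which is easy to append to a logfile or feed into another monitoring tool.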

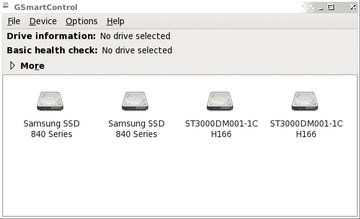

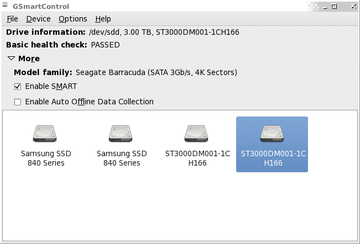

I won't spend much time talking about GSmartControl or how to install it, but I do want to show some simple screenshots of the tool. Figure 1 shows the devices it can monitor. You can see that this system has four storage devices. Selecting a device (/dev/sdd) and clicking More shows more information (Figure 2).

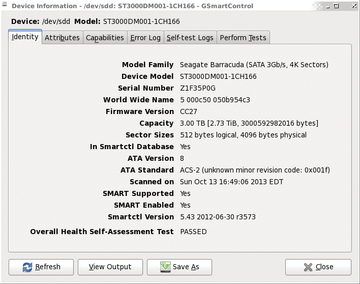

You can see that S.M.A.R.T. is enabled on the device and that it passed the basic health check. If you double-click the device icon, you get much more information (Figure 3). This dialog box has a myriad of details and functions available. The tabs supply information about attributes, tests, logs, and so on, and you can launch tests from the Perform Tests tab.

Summary

S.M.A.R.T. is an interesting technology: It provides a standard way for the operating system to communicate with drives, but the actual information in the drives, the attributes, is non-standard. Some of the information is fairly similar across manufacturers and drives, allowing you to gather some common information. However, because S.M.A.R.T. attributes are not standard, smartmontools might not know about your particular drive (or RAID card). It may take some work to get it to understand the attributes of your particular drive.

S.M.A.R.T. can be an asset for administrators and home users. Probably its best role is to watch the history of storage devices. Simple scripts allow you to query your drives and collect that information, either by itself or as part of a monitoring system, such as Ganglia. If you want a more GUI-oriented approach, GSmartControl can be used for many of the smartctl command-line options.

Infos

- [1] S.M.A.R.T.: http://en.wikipedia.org/wiki/S.M.A.R.T.

- [2] smartmontools: http://sourceforge.net/apps/trac/smartmontools/wiki

- [3] CentOS: http://www.centos.org/

- [4] Ganglia: http://ganglia.sourceforge.net/

- [5] Pinheiro, E., W.-D. Weber, and L.A. Barroso. "Failure Trends in a Large Disk Drive Population." In: Proceedings of the 5th USENIX Conference on File and Storage Technologies (FAST'07) (USENIX, 2007), 13p, http://tinyurl.com/8678qan

- [6] NoSQL databases: http://nosql-database.org/

- [7] GSmartControl: http://gsmartcontrol.berlios.de/home/index.php/en/Home
