
Vagrant, Serf, Packer, and Consul create and manage development environments

Strong Quad

Consul

Usually, a developer tells an application which machine hosts the database, but the program could instead ask Consul [9]. This service provides information about which services are currently available and where they are running; in other words, it is used for service discovery. Consul also offers failure detection by monitoring the registered services and the systems they run on. For example, the tool raises an alert if a web server repeatedly returns an HTTP error status instead of 200 (OK) or suddenly runs out of free main memory. Administrators can define appropriate rules to describe the necessary checks. When Consul detects a problem, it can automatically redirect requesting applications to another server that is still running. The web application would then not even notice that the database on the primary server had failed.
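The interplay between health checks and service discovery can be illustrated with a small in-memory model. This is a hypothetical sketch, not Consul's API: the `Registry` class, the `FAILURE_THRESHOLD` of three consecutive failures, and the addresses are all assumptions chosen for illustration. It only shows the principle that an instance with failing checks disappears from discovery results, so clients automatically end up on a healthy server.

```python
from dataclasses import dataclass, field

# Hypothetical toy model, NOT Consul's API: it only illustrates how
# failing health checks remove an instance from discovery results.
FAILURE_THRESHOLD = 3  # assumed number of consecutive failures before an instance is dropped


def http_check_passed(status: int) -> bool:
    """Treat any 2xx response as a passing HTTP check."""
    return 200 <= status < 300


@dataclass
class Instance:
    address: str
    consecutive_failures: int = 0

    @property
    def healthy(self) -> bool:
        return self.consecutive_failures < FAILURE_THRESHOLD


@dataclass
class Registry:
    services: dict = field(default_factory=dict)

    def register(self, service: str, address: str) -> None:
        self.services.setdefault(service, []).append(Instance(address))

    def report_check(self, service: str, address: str, passed: bool) -> None:
        """Record one check result; a pass resets the failure counter."""
        for inst in self.services.get(service, []):
            if inst.address == address:
                inst.consecutive_failures = 0 if passed else inst.consecutive_failures + 1

    def discover(self, service: str) -> list:
        """Return only the addresses of instances whose checks are passing."""
        return [i.address for i in self.services.get(service, []) if i.healthy]


if __name__ == "__main__":
    reg = Registry()
    reg.register("db", "10.0.0.1:5432")  # hypothetical primary
    reg.register("db", "10.0.0.2:5432")  # hypothetical replica
    for _ in range(FAILURE_THRESHOLD):
        reg.report_check("db", "10.0.0.1:5432", passed=False)
    print(reg.discover("db"))  # ['10.0.0.2:5432']
```

An application that always asks `discover("db")` instead of using a hard-coded host switches to the replica without any change to its own code.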

Finally, Consul offers a key/value store. Applications in particular can use this mini-database to store their configuration data, for example, as JSON documents. Distributed applications can also exchange data via the key/value store and thus, for example, elect a master. Queries go through an HTTP REST-style interface.
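A minimal sketch of storing and reading configuration through the key/value HTTP interface, assuming a Consul agent on `127.0.0.1` with its default HTTP port 8500. Keys live under the `/v1/kv/` path; a `PUT` stores the request body as the value, and a `GET` returns a JSON array whose `Value` fields are base64-encoded. The key name `app/config` and the configuration contents are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: a Consul agent listens locally on its default HTTP port 8500.
CONSUL = "http://127.0.0.1:8500"


def kv_url(key: str, base: str = CONSUL) -> str:
    """URL of a key in Consul's KV HTTP API (/v1/kv/<key>)."""
    return f"{base}/v1/kv/{key}"


def decode_kv_response(body: str) -> dict:
    """Map the JSON array returned by GET /v1/kv/<key> to {key: value}.

    Consul returns values base64-encoded; a key without a value has Value null.
    """
    result = {}
    for entry in json.loads(body):
        raw = entry.get("Value")
        result[entry["Key"]] = base64.b64decode(raw).decode() if raw is not None else None
    return result


def put_config(key: str, config: dict, base: str = CONSUL) -> None:
    """Store a configuration dict as a JSON document (needs a running agent)."""
    req = urllib.request.Request(kv_url(key, base), data=json.dumps(config).encode(), method="PUT")
    with urllib.request.urlopen(req) as resp:
        resp.read()


def get_config(key: str, base: str = CONSUL) -> dict:
    """Read the JSON document back; urlopen raises HTTPError (404) if the key is missing."""
    with urllib.request.urlopen(kv_url(key, base)) as resp:
        return json.loads(decode_kv_response(resp.read().decode())[key])
```

With an agent running, `put_config("app/config", {"db": "10.0.0.2"})` followed by `get_config("app/config")` returns the same dictionary. Consul itself treats the value as opaque bytes; the JSON encoding is purely the application's choice.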

Agent Exchange

Consul itself comprises several components. The basis is one or more Consul servers that store information about the current services and answer requests from applications. HashiCorp recommends running three to five Consul servers per data center so that the cluster survives the failure of individual servers. The Consul servers independently form a cluster and elect a leader that is responsible for coordination. If you run multiple data centers, you can set up a separate cluster in each one. If a Consul server cannot answer an application request itself, it forwards the request to the other clusters and returns their response to the application.
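The recommendation of three to five servers follows from the way Consul servers reach agreement: they use the Raft consensus protocol, which needs a majority (quorum) of servers to elect a leader and commit changes. The following arithmetic is a sketch of that majority rule, not Consul code. It shows why odd numbers are preferred: four servers tolerate no more failures than three.

```python
def quorum(servers: int) -> int:
    """Majority of servers Raft needs to elect a leader and commit writes."""
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """How many servers can fail while a majority is still reachable."""
    return servers - quorum(servers)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} servers: quorum {quorum(n)}, tolerates {failure_tolerance(n)} failure(s)")
```

Three servers survive one failure, five survive two; beyond that, each additional server adds replication traffic without much practical gain.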

Moreover, administrators can run additional Consul instances on other computers that simply forward all requests to the right Consul server. Among other things, this approach is designed to simplify load balancing. Confusingly, HashiCorp refers to these lower-ranking Consul instances as clients. Applications can query a Consul server directly or take a detour via a Consul client.

Like Packer, Consul is written in Go and is available under the Mozilla Public License 2.0. The source code is available from GitHub [10], and ready-made packages exist for Windows, Mac OS X, and Linux. Just as with Packer, each package is a ZIP archive that contains only a command-line program. This program starts an agent that runs either as a Consul server or as a Consul client, depending on the parameters you pass in.
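To make the "one binary, two roles" idea concrete, the following sketch only assembles the command lines; it does not execute anything. The options `-server`, `-bootstrap-expect` (the number of servers to wait for before electing a leader), `-data-dir`, and `-retry-join` are real `consul agent` options, but the data directory and IP addresses are hypothetical, and you should check `consul agent -h` for the options your version supports.

```python
import shlex


def consul_agent_args(server: bool, data_dir: str, join: list, expect: int = 3) -> list:
    """Build the argv for one `consul agent` invocation.

    With -server (plus -bootstrap-expect) the agent acts as a Consul server;
    without it, the same binary runs as a client that forwards requests.
    """
    args = ["consul", "agent", f"-data-dir={data_dir}"]
    if server:
        args += ["-server", f"-bootstrap-expect={expect}"]
    # Addresses of existing cluster members to join (hypothetical IPs below).
    args += [f"-retry-join={addr}" for addr in join]
    return args


if __name__ == "__main__":
    print(shlex.join(consul_agent_args(True, "/opt/consul", ["10.0.0.1", "10.0.0.2"])))
    print(shlex.join(consul_agent_args(False, "/opt/consul", ["10.0.0.1"])))
```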

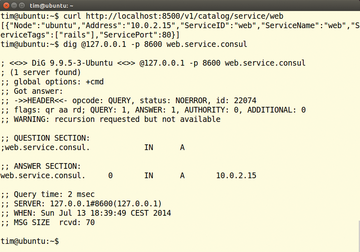

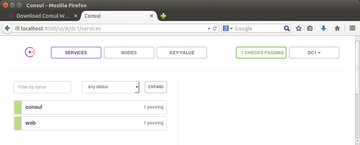

You can query a Consul server either via its HTTP interface or via its built-in DNS server (Figure 4), and HashiCorp offers a simple user interface in the form of a web application (Figure 5).

Figure 4: The two commands retrieve information about the web service. The first command uses the HTTP interface, the second the Consul server's built-in DNS server.


Figure 5: The Consul web application gives developers a quick overview of failed and working services. Everything is OK here.

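The two lookups in Figure 4 can also be done from a program. This sketch assumes a local agent on the default HTTP port 8500; the catalog endpoint `/v1/catalog/service/<name>` returns one JSON object per instance, where `ServiceAddress` is empty if the service simply uses its node's `Address`. The DNS interface (by default on port 8600) answers names of the form `<name>.service.consul`. The sample data in the usage below is hypothetical.

```python
import json
import urllib.request

# Assumption: a Consul agent listens locally on its default HTTP port 8500.
CONSUL = "http://127.0.0.1:8500"


def catalog_url(service: str, base: str = CONSUL) -> str:
    """HTTP catalog endpoint listing all instances of a service."""
    return f"{base}/v1/catalog/service/{service}"


def dns_name(service: str) -> str:
    """Name the built-in DNS server resolves (default domain "consul")."""
    return f"{service}.service.consul"


def endpoints(body: str) -> list:
    """Extract (address, port) pairs from a catalog response.

    An empty ServiceAddress means the service uses its node's Address.
    """
    return [(e.get("ServiceAddress") or e["Address"], e["ServicePort"]) for e in json.loads(body)]


def lookup(service: str, base: str = CONSUL) -> list:
    """Query a running agent over HTTP and return the service's endpoints."""
    with urllib.request.urlopen(catalog_url(service, base)) as resp:
        return endpoints(resp.read().decode())
```

Note that the catalog lists every registered instance; to get only instances whose checks pass, Consul's health endpoint or the DNS interface, which filters out failing instances, is the better fit.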

Serf

If a production web server fails, operators of online stores quickly lose money. To keep this from happening, you can turn to Serf [11] for help (see also the "Overlap" box). The tool monitors the computing nodes in a cluster.

Overlap

The functions of the four tools overlap in places. For example, both Consul and Serf can detect failures and initiate appropriate countermeasures. In fact, the Consul agents even use the Serf library to communicate with each other. However, Serf targets computer nodes, whereas Consul focuses on services. Consul also takes over some functions of other systems, such as Chef, Puppet, or Nagios. Several pages in the Consul documentation [13] explain the exact differences between the individual tools.

As soon as a node fails, Serf runs an action defined by the administrator. For example, if a web server goes on strike, Serf can automatically notify the upstream load balancer, which then distributes the load across the remaining web servers.
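The administrator-defined action is typically an event handler script that Serf runs when cluster membership changes (registered with the agent's `-event-handler` option). The following handler is a sketch under stated assumptions: it relies on Serf passing the event type (such as `member-join`, `member-leave`, or `member-failed`) in the `SERF_EVENT` environment variable and the affected members on stdin, one per line with tab-separated name, address, role, and tags; verify this format against the Serf documentation for your version. The load-balancer pool and its addresses are hypothetical stand-ins for the real balancer configuration.

```python
import os
import sys


def parse_members(stdin_text: str) -> list:
    """Extract member addresses from Serf's stdin lines.

    Assumed format: name<TAB>address<TAB>role<TAB>tags, one member per line.
    """
    addresses = []
    for line in stdin_text.splitlines():
        fields = line.split("\t")
        if len(fields) >= 2:
            addresses.append(fields[1])
    return addresses


def update_pool(pool: set, event: str, stdin_text: str) -> set:
    """Hypothetical load-balancer pool update for one Serf event."""
    members = set(parse_members(stdin_text))
    if event in ("member-failed", "member-leave"):
        return pool - members  # stop sending traffic to nodes that are gone
    if event == "member-join":
        return pool | members  # start using new nodes
    return set(pool)  # other events leave the pool unchanged


if __name__ == "__main__" and "SERF_EVENT" in os.environ:
    # Only runs when Serf invokes the script; a real handler would read and
    # rewrite the load balancer's configuration instead of this fixed set.
    current_pool = {"10.0.0.5", "10.0.0.6"}
    new_pool = update_pool(current_pool, os.environ["SERF_EVENT"], sys.stdin.read())
    print(sorted(new_pool))
```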

Serf itself is designed as a distributed system: You first need to install a Serf agent on each computer node. These agents monitor their respective neighbors in the cluster. Should one of the agents fail, the monitoring agent informs the surviving agents in the cluster, which in turn inform their neighbors.

The Serf documentation compares the approach to a zombie invasion: It starts with one zombie, which then infects all the other healthy people. In this way, all the agents in a cluster hear about the failure within a very short time.
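The zombie-style spread can be simulated in a few lines. This is a deliberately simplified push-gossip model, not Serf's actual protocol (which builds on SWIM and adds probing and suspicion mechanisms); the fanout of three peers per round and the random seed are arbitrary assumptions. It shows how quickly news of a failure reaches every agent when each informed agent passes it on to a few random peers.

```python
import random


def gossip_rounds(n: int, fanout: int = 3, seed: int = 1) -> int:
    """Rounds until news of a failure reaches all n agents under simple push gossip.

    Each round, every agent that already knows picks `fanout` random agents
    and tells them; picking itself or an already informed agent is wasted.
    """
    rng = random.Random(seed)
    informed = {0}  # agent 0 detected the failure: the "first zombie"
    rounds = 0
    while len(informed) < n:
        newly_told = set()
        for _ in informed:
            newly_told.update(rng.sample(range(n), min(fanout, n)))
        informed |= newly_told
        rounds += 1
    return rounds


if __name__ == "__main__":
    for size in (10, 100, 1_000, 10_000):
        print(f"{size:>6} agents: {gossip_rounds(size)} rounds")
```

Because the number of informed agents multiplies each round, the round count grows roughly with the logarithm of the cluster size, and no single agent has to contact every other one.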

The procedure also has the advantage that it scales easily and efficiently to large clusters. The agents communicate via a gossip protocol that sends UDP messages, so administrators need to open the corresponding ports in their firewalls. Finally, each agent needs only 5 to 10MB of main memory.
