Comparing Ceph and GlusterFS

Shared storage systems GlusterFS and Ceph compared

Introducing Ceph Components

Ceph essentially consists of the actual object store, which may be familiar to some readers under its old name RADOS (Reliable Autonomic Distributed Object Store), and several interfaces for users. The object store, in turn, consists of two core components. The first is the Object Storage Device, or OSD: Each individual disk that belongs to a Ceph cluster is an OSD. OSDs are the data silos in Ceph that ultimately store the binary objects. Clients communicate directly with OSDs to upload data to the cluster. In the background, OSDs also handle tasks such as replication on their own.

The second component is the monitoring server (MON), which exists so that clients and OSDs alike always know which OSDs currently belong to the cluster: MONs maintain lists of all existing monitoring servers and OSDs and pass them to clients and OSDs on request. Moreover, MONs enforce a cluster-wide quorum: If a Ceph cluster breaks apart, only the partition backed by a majority of the MON servers can remain active; otherwise, a split-brain situation could arise.
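The majority rule described above can be illustrated with a minimal sketch. The function name `has_quorum` and its parameters are hypothetical and are not part of any Ceph API; the sketch only shows the arithmetic of a strict majority, not how Ceph's monitors actually elect a quorum.

```python
def has_quorum(reachable_mons: int, total_mons: int) -> bool:
    """Return True if a partition sees a strict majority of all MONs.

    Hypothetical helper for illustration only; not a Ceph API.
    """
    if total_mons <= 0:
        raise ValueError("total_mons must be positive")
    if not 0 <= reachable_mons <= total_mons:
        raise ValueError("reachable_mons must be between 0 and total_mons")
    # Strict majority: more than half of all configured monitors.
    return 2 * reachable_mons > total_mons


if __name__ == "__main__":
    # Five MONs split 3/2: only the larger partition stays active.
    print(has_quorum(3, 5), has_quorum(2, 5))
    # Four MONs split 2/2: neither side has a majority.
    print(has_quorum(2, 4))
```

The second case hints at why an odd number of MONs is the usual choice: With an even number, a clean split down the middle leaves no partition with a majority.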

What is decisive for understanding how Ceph works is that none of the aforementioned components is central; all components in Ceph must work in a decentralized manner. No OSD is assigned a special priority, and the MONs are also equals among themselves. The cluster can be expanded with more OSDs or MONs without regard to the number of existing computers or the layout of the OSDs. Whether the work in Ceph is handled by three servers with 12 hard drives or 10 servers with completely different disks of different sizes does not matter for the functions the cluster provides. Because of the unified storage principle promoted by Inktank, users of the storage system always see the same interface regardless of what the object store is doing in the background.

Three interfaces in Ceph are important: CephFS is a Linux filesystem driver that lets you access Ceph storage like a normal filesystem. The RBD (RADOS Block Device) provides access to RADOS via a compatibility interface for block devices, and the RADOS Gateway offers RESTful storage in Ceph that is accessible with Amazon S3 or OpenStack Swift clients. Together, the Ceph components form a complete storage system that, in theory, is quite impressive. But is this the case in reality?

The Opponent: GlusterFS

GlusterFS has a turbulent history. The original goals were simple management and administration as well as independence from classical vendors. The focus, however, has since expanded considerably, but more on that later. In its early days, GlusterFS was positioned as a replacement for NAS (Network Attached Storage) systems. GlusterFS abstracts away from conventional storage media by inserting an additional layer between the storage and the user. In the background, the software uses traditional block-based filesystems to store the data. Files are the smallest administrative unit in GlusterFS: A one-to-one equivalence exists between the files stored by the user and the files that arrive on the back end.

GlusterFS Components

Bricks form the foundation of the storage solution. Basically, these are Linux computers with free disk space. The space can be made up of partitions but ideally consists of complete hard drives or even RAID arrays. The number of storage devices available per brick or the number of servers involved plays only a minor role. These bricks are joined together in a trust relationship. GlusterFS groups their local directories to form a common namespace, which at this stage is still a rather coarse construction and only partially usable.

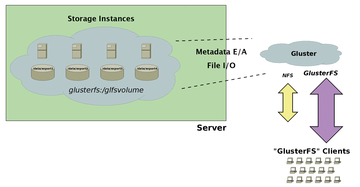

This is where GlusterFS's second important component comes into play: the translators. These are small modules that give the shared space a particular property, for example, POSIX compatibility or distribution of data in the background. The user rarely deals with the translators directly. The properties of the GlusterFS storage can be specified in the admin shell; in the background, the software assembles the corresponding translators. The result is a GlusterFS volume (Figure 1). One of GlusterFS's special features is its metadata management: Unlike other shared storage solutions, it has no dedicated metadata servers or entities. Users can choose from various interfaces for storing their data on GlusterFS.
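To make the idea of stacked translators and server-less metadata more concrete, the following toy model may help. It is only a sketch under stated assumptions: The class names `PosixBrick` and `Distribute` are hypothetical and not GlusterFS code, and the modulo-based placement is a deliberate simplification; real GlusterFS uses its own hashing and layout scheme. The point is merely that a file's location can be computed from its name, so no central metadata server has to be asked.

```python
import hashlib


class PosixBrick:
    """Toy stand-in for a brick: keeps whole files in a dict (illustrative)."""

    def __init__(self, name):
        self.name = name
        self.files = {}

    def write(self, path, data):
        self.files[path] = data

    def read(self, path):
        return self.files[path]


class Distribute:
    """Toy translator layered on top of bricks (hypothetical, simplified).

    It picks exactly one child per file by hashing the file name, so every
    client can locate a file without consulting a metadata server.
    """

    def __init__(self, children):
        if not children:
            raise ValueError("at least one child is required")
        self.children = list(children)

    def _pick(self, path):
        # Deterministic hash of the path; the modulo step is a simplification.
        digest = hashlib.sha256(path.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % len(self.children)
        return self.children[index]

    def write(self, path, data):
        self._pick(path).write(path, data)

    def read(self, path):
        return self._pick(path).read(path)


if __name__ == "__main__":
    bricks = [PosixBrick("brick1"), PosixBrick("brick2"), PosixBrick("brick3")]
    volume = Distribute(bricks)
    for i in range(6):
        volume.write(f"/data/file{i}.txt", f"content {i}".encode())
    for brick in bricks:
        print(brick.name, sorted(brick.files))
```

Because files are stored whole on a single brick, the sketch also mirrors the one-to-one relationship between user files and back-end files mentioned earlier.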
