Photo by Geran de Klerk on Unsplash

Detecting security threats with Apache Spot

On the Prowl

Every year, cybercrime causes damage estimated at $450 billion [1]. The average cost per incident has risen by around 200 percent over the past five years, with no end in sight. The Herjavec Group even predicts annual losses of several trillion dollars by 2021 [2].

Under the auspices of the Apache Software Foundation, industry giants such as Accenture, Cloudera, Cloudwick, Dell, Intel, and McAfee have joined forces and are trying to solve the problem with state-of-the-art technology. In particular, machine learning (ML) and the latest data analysis techniques are intended to improve the detection of potential risks, quantify possible data loss, and support the response to attacks. Apache Spot [3] uses big data and modern ML components to improve the detection and analysis of security problems. Apache Spot 1.0 has been available for download since August 2017, and you can easily install a demo version using a Docker container.

Detection of Unknown Threats

Traditional deterministic, predominantly signature-based threat detection methods often fail. Apache Spot, on the other hand, is a powerful aggregation tool that uses data from a variety of sources and a self-learning algorithm to search for suspicious patterns and network behavior. According to the Apache Spot team, several billion events per day can be analyzed in the environment, if the hardware allows it, which means the processing capacity is significantly greater than that of previous security information and event management (SIEM) systems. Whether the system processes data from networks, Internet applications, or Internet of Things (IoT) environments is irrelevant because of their identical technological bases. The most important tasks include identifying risky network traffic and unknown cyberthreats (almost in real time). Apache Spot also offers a modular and scalable architecture that can be customized to meet your needs.

Traditional deterministic approaches to intrusion detection cannot cope with the amount of data transferred daily over the Internet or with ever more complex data schemas. The number of threat vectors resulting from mobility, social media, and virtualized cloud networks does not make things any easier. For example, it takes an average of 146 days (98-197 days, depending on the industry) to uncover data breaches [4]. This critical risk window needs to be closed.

Apache Spot uses an innovative approach to identify new and previously unknown threats. The system supports fraud detection with visualizations and, in particular, identifies compromised credentials and policy violations. Spot also provides compliance monitoring and makes network and endpoint behavior visible. Its strength lies in detecting malicious behavior patterns of zero-day threats, including undocumented ones.

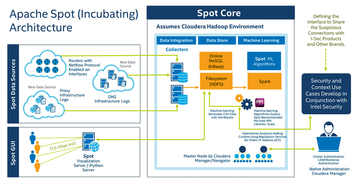

Apache Spot collects network traffic data and DNS logfile records. The correlated data is then forwarded to a Hadoop cluster running on the Cloudera Enterprise Data Hub (Figure 1). The analysis tools and algorithms for ML contributed by Intel are used to detect, evaluate, and respond to suspicious actions. The process of reassessment is continuous and generates risk profiles that support the administrator in evaluating, researching, and conducting forensics. The decisive factor is the detection of previously unknown security problems on different levels. The use of intelligent learning mechanisms also promises to reduce false positives significantly.

Figure 1: The Apache Spot architecture [5] shows how the system collects and evaluates traffic data from arbitrary sources and searches for anomalies and previously unknown attack patterns.

Ensuring Safety

In contrast to traditional security tools, Spot does not rely on historical information such as known attack signatures but instead learns from the monitored network data itself. According to Intel, network data is checked for hidden and deep-seated patterns that indicate possible dangers. The environment runs on Linux operating systems with Java support. Apache Spot is based on four core components:

- Parallel Data Ingestion Framework. The Spot system runs on a Cloudera Enterprise Data Hub and uses an optimized open source decoder to load network flow and DNS data into a Hadoop system. The decoded data is stored in several searchable formats.

- ML. Apache Spot uses a combination of Apache Spark, Kudu, and optimized C code to run scalable learning algorithms. The ML component filters suspicious traffic from normal traffic and characterizes the behavior of network traffic for optimization and further network analysis.

- Operational Analytics. This component includes noise filtering, whitelisting, and heuristics to identify the most likely patterns that indicate security threats, which reduces the number of false positives and non-threatening indicators.

- Interactive Visual Dashboard. Control and monitoring are handled through a web interface.
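The noise filtering and whitelisting step in the list above can be pictured with a minimal Python sketch. It is not Spot's own code: the `ScoredEvent` type, the `filter_events` function, the threshold, and the sample addresses are all hypothetical and only illustrate how whitelisted destinations and unremarkable events might be dropped before an analyst sees the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredEvent:
    """Hypothetical record: one network event plus the probability
    the ML stage assigned to it (lower means more unusual)."""
    src_ip: str
    dst: str
    score: float


def filter_events(events, whitelist, max_score):
    """Drop events whose destination is whitelisted or whose score is
    above max_score, then list the most unusual events first."""
    kept = [e for e in events
            if e.dst not in whitelist and e.score <= max_score]
    return sorted(kept, key=lambda e: e.score)


# Illustrative data only (documentation address ranges).
events = [
    ScoredEvent("10.0.0.5", "updates.example.com", 0.0004),
    ScoredEvent("10.0.0.7", "203.0.113.9", 0.0002),
    ScoredEvent("10.0.0.8", "198.51.100.4", 0.35),
    ScoredEvent("10.0.0.9", "192.0.2.77", 0.003),
]
shortlist = filter_events(events, {"updates.example.com"}, max_score=0.01)
for event in shortlist:
    print(event.src_ip, event.dst, event.score)
```

In this sketch the whitelist removes a known update server even though its score is low, which is the kind of false positive the component is meant to suppress.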

Functionality is based on resource, security, and data management. As experience over the past few years has shown, signature-based and rule-based security solutions are no longer sufficient to protect against new cyberattacks. Apache Spot seemingly closes this gap and takes the discovery of suspicious connections and previously unrecognized attacks to a new level.

Data Collection and Intelligence

The quality of a security solution depends in particular on the underlying data, but generally speaking, more data does not necessarily mean more security. The core problem lies rather in data acquisition and evaluation. The Spot team not only promises service availability of almost 100 percent without data loss, but also faster and more scalable functionality. Data collection is handled by special collector daemons that run in the background and collect relevant information from filesystem paths. These collectors detect new files generated by network tools, or data created previously, and translate them into a human-readable format with the use of tshark and the NFDUMP tools.
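The collector behavior described above, watching a filesystem path and handing each new file to a decoder, might look roughly like the following Python sketch. The function names and the polling approach are assumptions for illustration, not Spot's implementation; the `decode` callback stands in for a call to tshark or nfdump, which is deliberately left out here.

```python
import os
import tempfile


def find_new_files(watch_dir, seen):
    """Return files in watch_dir that have not been reported before
    and remember them in the caller-supplied set `seen`."""
    new_paths = []
    for name in sorted(os.listdir(watch_dir)):
        path = os.path.join(watch_dir, name)
        if os.path.isfile(path) and path not in seen:
            seen.add(path)
            new_paths.append(path)
    return new_paths


def collect_once(watch_dir, seen, decode):
    """One polling pass: decode every file that appeared since the
    last pass. `decode` is a hypothetical stand-in for the decoder."""
    return [decode(path) for path in find_new_files(watch_dir, seen)]


# Demo with a temporary directory and a trivial "decoder".
with tempfile.TemporaryDirectory() as watch_dir:
    seen = set()
    open(os.path.join(watch_dir, "capture-001.pcap"), "wb").close()
    first = collect_once(watch_dir, seen, os.path.basename)
    open(os.path.join(watch_dir, "capture-002.pcap"), "wb").close()
    second = collect_once(watch_dir, seen, os.path.basename)

print(first, second)
```

A real daemon would run such a pass in a loop or react to filesystem notifications; the sketch only shows that each file is picked up exactly once.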

After data transformation, the collectors store the data in the Hadoop distributed filesystem (HDFS) in the original format for forensic analysis and in Apache Hive in Avro and Parquet formats so that it can be queried with SQL. Files with a size of more than 1MB are forwarded to Kafka; smaller files are streamed via Kafka to Spark Streaming. Kafka stores the data transmitted by the collectors so that it is available to the Spot workers, which read, analyze, and store the data in Hive tables. This procedure prepares the raw data for processing by the ML engine.

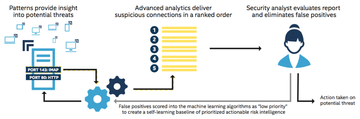

Apache Spot's ML component includes routines to analyze suspicious connections. Latent Dirichlet allocation (LDA) can discover hidden semantic structures in a collection of documents. LDA is a three-tier Bayesian model that Spot applies to network traffic. The network log entries are converted into words by aggregation and discretization, and the model is then fitted to IP addresses, the words in their log entries, and the resulting patterns of network activity. Spot assigns an estimated probability to each network log entry and flags the events with the lowest probabilities as "suspicious" for further analysis (Figure 2).

Figure 2: Apache Spot evaluates suspicious connections and events and presents the list on the dashboard for review by a specialist.
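To make the conversion of log entries into words more concrete, here is a small Python sketch of discretization. The field names, bin edges, and word layout are assumptions chosen for illustration; Spot's actual word construction may differ.

```python
# Assumed bin edges for transferred bytes (illustrative values).
BYTE_EDGES = (1_000, 100_000, 10_000_000)


def bucket(value, edges):
    """Return the index of the first edge that value does not exceed,
    or len(edges) if value is larger than every edge."""
    for index, edge in enumerate(edges):
        if value <= edge:
            return index
    return len(edges)


def port_class(port):
    """Keep well-known ports as they are; lump all others together."""
    return str(port) if port < 1024 else "high"


def flow_to_word(flow):
    """Collapse one flow record into a single discrete token."""
    hour_bin = flow["hour"] // 6  # four six-hour blocks per day
    size_bin = bucket(flow["bytes"], BYTE_EDGES)
    return (f"{port_class(flow['dst_port'])}_{flow['proto']}"
            f"_t{hour_bin}_b{size_bin}")


flows = [
    {"src_ip": "10.0.0.5", "dst_port": 443, "proto": "TCP",
     "hour": 14, "bytes": 52_000},
    {"src_ip": "10.0.0.5", "dst_port": 51515, "proto": "UDP",
     "hour": 3, "bytes": 48_000_000},
]
words = [flow_to_word(flow) for flow in flows]
print(words)  # ['443_TCP_t2_b1', 'high_UDP_t0_b3']
```

Once every record is reduced to a token like this, each IP address can be treated as a document made up of words, which is the form a topic model such as LDA expects.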

Apache Spot has a number of analysis functions that can be used to identify suspicious or unlikely network events on the monitored network. The determined events are subject to further investigations. For this purpose, the system uses anomaly detection to ascertain typical and atypical network behavior and to generate a behavior model for each IP address from the results.
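The idea of a behavior model per IP address can be sketched in Python with a deliberately simple stand-in: smoothed word frequencies per address instead of the LDA model Spot uses. All names and the sample data are hypothetical; the point is only that events that are improbable for a given address rise to the top.

```python
from collections import Counter, defaultdict


def build_profiles(history):
    """Count how often each IP address produced each word.
    `history` is an iterable of (ip, word) pairs."""
    profiles = defaultdict(Counter)
    for ip, word in history:
        profiles[ip][word] += 1
    return profiles


def word_probability(profiles, vocab_size, ip, word, alpha=1.0):
    """Laplace-smoothed estimate of how likely `word` is for `ip`.
    Unknown addresses and unseen words get a small nonzero value."""
    counts = profiles.get(ip, Counter())
    total = sum(counts.values())
    return (counts[word] + alpha) / (total + alpha * vocab_size)


# Illustrative history: one web client and one host doing DNS.
history = ([("10.0.0.5", "443_TCP")] * 98
           + [("10.0.0.5", "53_UDP")] * 2
           + [("10.0.0.9", "53_UDP")] * 50)
new_events = [("10.0.0.5", "443_TCP"),
              ("10.0.0.5", "high_UDP"),
              ("10.0.0.9", "53_UDP")]

profiles = build_profiles(history)
vocab = {word for _, word in history} | {word for _, word in new_events}

# Most atypical (lowest probability) events first.
ranked = sorted(
    new_events,
    key=lambda ev: word_probability(profiles, len(vocab), *ev))
print(ranked[0])  # ('10.0.0.5', 'high_UDP')
```

The same word can be normal for one address and atypical for another, which is why the model is kept per IP address rather than for the network as a whole.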

Content analysis is based on ML technologies and can analyze NetFlow, DNS, and HTTP proxy logfiles. In Apache Spot terminology, logfile entries are called "network events." Spot analyzes suspicious content with the help of natural language processing, extracts the relevant information from log entries, and uses it to build a "topic model."
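How a topic model turns into a score for a network event can be shown with a short Python sketch. In an LDA-style model, the probability of a word for a given document (here, an IP address) is the sum over topics of the topic's weight for that address times the word's probability within the topic. The topic weights and word distributions below are made-up numbers for illustration, not output from Spot.

```python
def event_probability(topic_mix, topic_words, word):
    """p(word | ip) = sum over topics k of
    p(topic k | ip) * p(word | topic k)."""
    return sum(weight * words.get(word, 0.0)
               for weight, words in zip(topic_mix, topic_words))


# Hypothetical fitted model with two topics.
topic_words = [
    {"443_TCP": 0.70, "80_TCP": 0.29, "high_UDP": 0.01},  # web traffic
    {"53_UDP": 0.90, "443_TCP": 0.09, "high_UDP": 0.01},  # name lookups
]
topic_mix = {
    "10.0.0.5": [0.9, 0.1],  # mostly web traffic
    "10.0.0.9": [0.2, 0.8],  # mostly name lookups
}

events = [("10.0.0.5", "443_TCP"),
          ("10.0.0.5", "high_UDP"),
          ("10.0.0.9", "53_UDP")]
scores = {ev: event_probability(topic_mix[ev[0]], topic_words, ev[1])
          for ev in events}

# The event with the lowest probability is the most suspicious.
most_suspicious = min(scores, key=scores.get)
print(most_suspicious, round(scores[most_suspicious], 4))
```

Fitting the topic weights and word distributions is the expensive part and is left out here; the sketch covers only the scoring step that follows.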
