OpenShift by Red Hat continues to evolve

Live Cell Therapy

Authentication with LDAP

User administration is not one of the most popular topics among admins, because it is very complex and connected not only to IT, but to many other aspects of a company. Sales, billing, and technology ideally use the same data when it comes to managing those who use commercial IT services.

As early as the summer of 2016, OpenShift offered an impressive list of mechanisms for dealing with authentication. The classic approach, deployed for user authentication in most setups, is OAuth.

The challenge is that the user data that OAuth validates has to come from somewhere. An LDAP directory is a typical source of such data. Whether you have a private OpenShift installation involving only internal employees or a public installation that is also used by customers, LDAP makes it easy to manage this data.

The first version of OpenShift release cycle 3 already offered LDAP as a possible source of user data. However, OpenShift 3.0 could only load user accounts from LDAP; existing group assignments were simply swept under the carpet. What sounds inconsequential could thwart a successful project: Because OpenShift handles the assignment of permissions internally by groups, a combination of LDAP and OpenShift 3.0 forced you to maintain two user directories, keeping the user list in LDAP and the group memberships in OpenShift.

The developers have put an end to this nonsense: OpenShift can now synchronize the groups created in LDAP with its own group directory. In practical terms, you just need to create an account once in LDAP and add it to the required groups for it to have the appropriate authorizations for the desired projects in OpenShift.
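To make the idea of group synchronization more concrete, the following minimal sketch shows how LDAP group entries could be translated into OpenShift-style `Group` objects. It is an illustration under stated assumptions, not the code OpenShift itself runs: the directory entries, the helper names `uid_from_dn` and `ldap_groups_to_openshift`, and the `apiVersion` string are assumptions, and the DN parsing is deliberately naive. In a real cluster, the synchronization is typically triggered with the `oc adm groups sync` command and a sync configuration file rather than with custom code.

```python
from typing import Dict, List


def uid_from_dn(dn: str) -> str:
    """Return the value of the first RDN, e.g. 'alice' for 'uid=alice,ou=people,...'.

    Naive on purpose: escaped commas or multi-valued RDNs are not handled.
    """
    first_rdn = dn.split(",", 1)[0]
    return first_rdn.split("=", 1)[1].strip()


def ldap_groups_to_openshift(ldap_groups: List[Dict]) -> List[Dict]:
    """Translate LDAP group entries into OpenShift-style Group objects."""
    groups = []
    for entry in ldap_groups:
        groups.append({
            # Assumption: the API group/version differs between OpenShift releases.
            "apiVersion": "user.openshift.io/v1",
            "kind": "Group",
            "metadata": {"name": entry["cn"]},
            "users": sorted(uid_from_dn(dn) for dn in entry.get("member", [])),
        })
    return groups


# Hypothetical directory content, for illustration only.
example_ldap_groups = [
    {
        "cn": "webshop-devs",
        "member": [
            "uid=bob,ou=people,dc=example,dc=com",
            "uid=alice,ou=people,dc=example,dc=com",
        ],
    },
    {"cn": "webshop-ops", "member": ["uid=carol,ou=people,dc=example,dc=com"]},
]

for group in ldap_groups_to_openshift(example_ldap_groups):
    print(group["metadata"]["name"], group["users"])
```

The point of the sketch is the direction of the data flow: LDAP remains the single source of truth, and the group objects in OpenShift are derived from it instead of being maintained by hand.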

Image Streams and HA

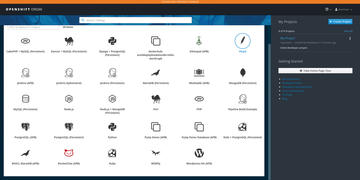

Various Platform-as-a-Service (PaaS) offerings will also be easier to install in the future. OpenShift takes its prebuilt images from image streams, and the developers have considerably expanded the selection in current OpenShift versions, so that Rocket.Chat or WordPress can now be rolled out automatically (Figure 2).

As beautiful as the colorful world of cloud-ready applications and stateless design may be, these solutions are still subject to elementary IT laws. Kubernetes as the engine of OpenShift comes with a few components that can be a genuine single point of failure if they malfunction. One example is the Kubernetes Master: It operates the central key-value store in the form of Etcd, which contains the entire platform configuration. Central components such as the scheduler or the Kubernetes API also run on the master node. In short, if the master fails, Kubernetes is in big trouble.

Several approaches are possible to eliminate this problem. The classical method would be to make the central master components highly available with the Pacemaker [5] cluster manager, which would mean the required services restart on another node if a master node fails. In previous versions of OpenShift, Red Hat pursued precisely this concept, not least because most of the Pacemaker developers are on its payroll.

In fact, this solution involves many unnecessary complexities. Kubernetes has implicit high-availability (HA) functions. For example, the Etcd key-value store has its own cluster mode and is not fazed even by large setups. The situation is similar with the other components, which, thanks to Etcd features, can run easily on many hosts at the same time.
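The reason Etcd's cluster mode removes the single point of failure can be shown with a little arithmetic. Etcd uses the Raft consensus protocol, in which a majority of members (the quorum) must be available for the cluster to accept writes. The following sketch computes the quorum and the number of member failures a cluster of a given size can survive; the function names are hypothetical and chosen only for this illustration.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with the given number of members."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Number of members that may fail while a majority is still available."""
    return members - quorum(members)


for size in (1, 3, 4, 5):
    print(f"{size} members: quorum {quorum(size)}, "
          f"tolerates {tolerated_failures(size)} failure(s)")
```

A single master tolerates no failure at all, whereas three members survive the loss of one and five survive the loss of two. A fourth member adds no resilience over three, which is why odd cluster sizes are the usual choice.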

The OpenShift developers have probably also noticed that it makes more sense to use these implicit HA capabilities. More recent OpenShift versions only use the native method for HA; Pacemaker is completely out of the equation.

One small restriction is that the native HA method has no influence on components that are part of the setup but not part of Kubernetes. For example, if you run an HA cluster with HAProxy on the basis of Pacemaker to distribute load across the various Kubernetes API instances, you still need to run that part of the setup with Pacemaker. Within Kubernetes itself, however, Pacemaker is a thing of the past.

Persistent Storage

Storage is just as unpopular as authentication in large setups. Nevertheless, persistent storage is a requirement that must somehow be met in clouds, and OpenShift is ultimately nothing but a cloud. No matter how well the customer's setups are geared to stateless operation, in the end, there is always some point at which data has to be stored persistently. Even a PaaS environment with web store software requires at least one database with persistent storage.

However, building persistent storage for clouds is not as easy as for conventional setups. Classical approaches fail once a setup grows beyond a certain size. For scalable storage, several solutions, such as GlusterFS [6] or Ceph [7], already exist, but they require appropriate support on the client side.

The good news for admins is that, in the past few months, the OpenShift developers have massively increased their efforts in the area of persistent storage. You can use the persistent volume (PV) framework to assign volumes to PaaS instances, and on the back end, the PV framework can now talk to a variety of different solutions, including GlusterFS and Ceph – both in-house solutions from Red Hat – and VMware vStorage or the classic NFS.

Of course, these volumes integrate seamlessly with OpenShift: Once the administrator has configured the respective storage back end, users can simply assign themselves corresponding storage volumes within their quotas. In the background, OpenShift then operates the containers with the correct Kubernetes pod definitions.
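What a user's request for storage and the resulting pod definition might look like can be sketched with two Kubernetes objects: a `PersistentVolumeClaim` that asks for a volume of a given size, and a pod that mounts the claimed volume. The following Python sketch builds both as plain dictionaries and prints them as JSON. All concrete values (the claim name `shop-db-data`, the image `example/database:latest`, the mount path, and the helper names) are hypothetical and serve only as an illustration; which back end ultimately provides the volume depends on what the administrator has configured.

```python
import json


def make_claim(name: str, size: str, access_mode: str = "ReadWriteOnce") -> dict:
    """Build a PersistentVolumeClaim that requests a volume of the given size."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
        },
    }


def make_pod(name: str, image: str, claim_name: str, mount_path: str) -> dict:
    """Build a pod whose single container mounts the claimed volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            # The pod refers to the claim by name, not to a specific back end.
            "volumes": [{
                "name": "data",
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


# Hypothetical names and values, for illustration only.
claim = make_claim("shop-db-data", "5Gi")
pod = make_pod("shop-db", "example/database:latest", "shop-db-data", "/var/lib/data")

print(json.dumps(claim, indent=2))
print(json.dumps(pod, indent=2))
```

The pod references only the claim, so the same definition should work regardless of whether GlusterFS, Ceph, or NFS sits behind it. That indirection is what lets users request storage without knowing anything about the storage back end.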
