Proxmox virtualization manager

Cloudless

Comprehensive User Management

User management matters in any virtualization solution, because more than one person usually needs access to the central management tool. For example, the accounting department regularly wants to know which clients use which services, and not every administrator should hold every authorization: Someone who creates and manages VMs does not necessarily need the ability to create new users.

Proxmox takes this into account with comprehensive, role-based authorization management. A role is a collection of specific authorizations that you can combine at will. Rights management in Proxmox is granular: You can stipulate what each role can do, right down to the level of individual commands.
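
The idea of a role as a freely combinable set of authorizations can be sketched in a few lines of Python. This is a minimal model for illustration only: The role names and the privilege strings are assumptions chosen to resemble typical VM and user management permissions, not a listing of the roles Proxmox actually ships.

```python
# Toy model of role-based authorization.
# Role names and privilege strings are illustrative assumptions.
ROLES = {
    "VMOperator": {"VM.Allocate", "VM.PowerMgmt", "VM.Console", "VM.Audit"},
    "UserAdmin": {"User.Modify"},
    "Auditor": {"VM.Audit", "Sys.Audit"},
}


def privileges_for(assigned_roles):
    """Return the union of all privileges granted by the given roles."""
    granted = set()
    for role in assigned_roles:
        # Unknown roles grant nothing.
        granted |= ROLES.get(role, set())
    return granted


def is_allowed(assigned_roles, privilege):
    """Check whether any of the assigned roles grants the privilege."""
    return privilege in privileges_for(assigned_roles)


if __name__ == "__main__":
    # A VM operator may create VMs but may not manage users.
    print(is_allowed(["VMOperator"], "VM.Allocate"))
    print(is_allowed(["VMOperator"], "User.Modify"))
```

In this model, the administrator who only manages VMs simply never receives a role that contains the user management privilege.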

Additionally, Proxmox VE can obtain user data from multiple sources. Pluggable authentication modules (PAM) are the classic choice on Linux, and Proxmox also has its own user management, if needed. If you have a working LDAP directory or an Active Directory (AD) domain, either can serve as a source for accounts without changing anything in rights management.
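
Because accounts can come from several sources, each user has to be tied to the source that authenticates it. Proxmox VE does this by writing user IDs in the form `name@realm`, with `pam` and `pve` as the built-in realms. The following sketch splits such an ID; the helper name `parse_user_id` and the realm `corp-ad` are hypothetical and only serve the example.

```python
from typing import NamedTuple


class UserId(NamedTuple):
    name: str
    realm: str


def parse_user_id(user_id):
    """Split a 'name@realm' string into its two parts.

    The last '@' separates the realm, so names that themselves
    contain an '@' (as e-mail style logins do) still parse.
    """
    name, sep, realm = user_id.rpartition("@")
    if not sep or not name or not realm:
        raise ValueError("expected 'name@realm', got %r" % user_id)
    return UserId(name, realm)


if __name__ == "__main__":
    # 'pam' and 'pve' are built-in realms; 'corp-ad' is a made-up AD realm.
    for uid in ("root@pam", "alice@pve", "bob@corp-ad"):
        print(parse_user_id(uid))
```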

Users from these sources can also be assigned roles. To make sure that nothing goes wrong at login, Proxmox also supports two-factor authentication: In addition to time-based one-time passwords, a key-fob-style YubiKey can also be used.
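
To illustrate what a time-based one-time password is, the following sketch implements the generic TOTP algorithm from RFC 6238 with the Python standard library. It shows the general technique, not Proxmox's own code, and the shared secret in the example is the well-known test value from the RFC rather than anything you should use in production.

```python
import hashlib
import hmac
import struct
import time


def totp(secret, at_time=None, step=30, digits=6):
    """Compute an RFC 6238 one-time password (HMAC-SHA1 variant).

    secret  -- shared secret as bytes
    at_time -- Unix timestamp; defaults to the current time
    step    -- validity period of one code in seconds
    digits  -- length of the resulting code
    """
    if at_time is None:
        at_time = time.time()
    # Number of complete time steps since the Unix epoch.
    counter = int(at_time) // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low nibble of the last byte
    # selects which four bytes of the digest become the code.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Test secret from RFC 6238, Appendix B.
    print(totp(b"12345678901234567890"))
```

Server and client compute the same code from the shared secret and the current time, so the code is only valid for one short time step.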

Through its API, Proxmox also offers the ability to equip servers that are part of the cluster with firewall rules. The technology is based on Netfilter (i.e., iptables) and lets you enforce access rules on the individual hosts. Although Proxmox comes with a predefined set of rules, you can adapt and modify them at will.
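
Conceptually, an iptables-style rule set is an ordered list that is checked from top to bottom, with the first matching rule deciding what happens to the packet. The sketch below models only that principle; the `Rule` class, the addresses, and the rule set are assumptions for the example and do not reflect the Proxmox rule format or its predefined rules.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str  # "ACCEPT" or "DROP"
    source: str  # source network in CIDR notation
    dport: int   # destination TCP port


def decide(rules, src_ip, dport, default="DROP"):
    """Return the action of the first rule that matches, else the default."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if addr in ipaddress.ip_network(rule.source) and dport == rule.dport:
            return rule.action
    return default


if __name__ == "__main__":
    # Hypothetical policy: only the management network may reach
    # SSH (22) and the web interface (8006).
    rules = [
        Rule("ACCEPT", "10.0.0.0/24", 22),
        Rule("ACCEPT", "10.0.0.0/24", 8006),
        Rule("DROP", "0.0.0.0/0", 22),
    ]
    print(decide(rules, "10.0.0.5", 22))
    print(decide(rules, "192.168.1.9", 22))
```

Because evaluation stops at the first match, the order of the rules matters: A broad DROP rule placed first would shadow the more specific ACCEPT rules.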

Ceph Integration

One of the biggest challenges in VM operations has always been providing redundant storage; without it, high availability is unattainable. Different solutions are on the market, such as the distributed replicated block device (DRBD), which is developed by Linbit, another Vienna-based company, and which has made a name for itself with two-node clusters in particular.

Proxmox has supported DRBD as a plugin for a while, but the developers have now shifted their attention to another solution: Ceph. This distributed object store, which became well known in the OpenStack world, now has many fans outside the cloud, too. Proxmox fully integrates Ceph into Proxmox VE, which means it does more than just attach an existing Ceph cluster as external storage.

Proxmox VE can also install its own Ceph cluster, which you manage with the usual Proxmox tools. Proxmox covers all stages of Ceph deployment: The monitoring servers, which enforce the cluster quorum in Ceph and provide all clients with information about the state of the cluster, can be rolled out on individual servers at the push of a button, and hard drives can be declared object storage devices (OSDs) in the same way. The OSDs are where Ceph ultimately stores the data, broken down into small segments.
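
Quorum here means that a strict majority of the monitoring servers must be able to reach each other before the cluster accepts changes. The following sketch shows the arithmetic behind that rule; the function names are made up for the example.

```python
def has_quorum(total_monitors, reachable_monitors):
    """A strict majority of all monitors must be reachable."""
    return reachable_monitors > total_monitors // 2


def tolerated_failures(total_monitors):
    """Number of monitors that may fail without losing the majority."""
    return (total_monitors - 1) // 2


if __name__ == "__main__":
    for total in (1, 2, 3, 4, 5):
        print(total, "monitors tolerate", tolerated_failures(total), "failure(s)")
```

The numbers show why monitors are usually deployed in odd counts: A fourth monitor tolerates no more failures than three do.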

The scope and quality of the Ceph integration in Proxmox VE are remarkable: The cluster setup in the Proxmox GUI is easy to use and works well. If necessary, you can even adjust individual parameters of the cluster (e.g., to improve performance).

Because the best storage is useless if the VMs don't actually store anything on it, Proxmox integrates Ceph RADOS block devices (RBDs). When you launch a VM, you can tell Proxmox to base it on a new RBD volume; RBD can be selected as a storage back end, just like other storage types.

The simple fact that Proxmox suggests operating a hyperconverged setup makes some users in smaller environments frown. "Hyperconverged" means that the VMs run on the same machines that provide hard drive space for Ceph.

Inktank, the company behind Ceph (part of Red Hat since 2014), regularly advises against relying on such a setup: Ceph's controlled replication under scalable hashing (CRUSH) algorithm is resource hungry. If one node in a Ceph setup fails, restoring the missing replicas generates heavy load on the other Ceph nodes and can slow down the virtual systems running on the same servers.
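
A rough back-of-the-envelope calculation shows why recovery hits small clusters hardest. The sketch assumes that data is spread evenly across all nodes, that the surviving nodes share the recovery work equally, and that enough nodes remain to re-create every replica; real placement depends on the CRUSH map, so treat the numbers as an estimate only.

```python
def recovery_share_gib(nodes, data_per_node_gib):
    """Estimate how much data each surviving node must re-replicate.

    Simplification: the failed node's data is split evenly across
    the remaining nodes.
    """
    if nodes < 2:
        raise ValueError("need at least two nodes to recover from a failure")
    return data_per_node_gib / (nodes - 1)


if __name__ == "__main__":
    for nodes in (4, 11):
        share = recovery_share_gib(nodes, 1000)
        print("%d nodes: about %.0f GiB per surviving node" % (nodes, share))
```

With 1,000GiB per node, each survivor in a four-node cluster has to absorb roughly a third of the failed node's data, compared with a tenth in an eleven-node cluster, and in a hyperconverged setup that work competes directly with the VMs.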

What's New in Version 5.0

Version 5.0 contains several important new features. Importing VMs from other hypervisor types is now far easier than before. The developers have also overhauled the base system: Proxmox VE is based on Debian GNU/Linux, and the new version 5.0 builds on the only recently released Debian 9. Unlike stock Debian, however, Proxmox delivers its product with a modified 4.10 kernel.

As of Proxmox 5.0, the developers deliver their own Debian packages for Ceph. Until now, they had relied on the packages officially provided by Inktank.

The developers' main goal is to provide Proxmox customers with bug fixes more quickly than the official Inktank packages do. Only time will tell if they manage to do so: Ceph is not a small solution anymore, but a complex beast. It will undoubtedly be interesting to see how an external service provider without Ceph core developers manages to be faster than the manufacturer.

Either way, Ceph RBD will be the de facto standard for distributed storage in Proxmox VE 5.0. Plans for the near future include taking advantage of the upcoming Ceph LTS version 12.2, aka Luminous: The BlueStore back end for OSDs, which this LTS release includes, is said to be significantly faster than the previous approach based on XFS. However, Inktank has not yet released the new version.

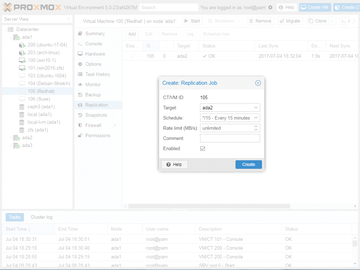

According to the developers, the most important new feature in Proxmox VE 5.0 by far is the new replication stack based on ZFS, which lets you create asynchronous replicas of storage volumes in Proxmox. Proxmox states that this procedure "minimizes" data loss in the event of malfunctions; as always with asynchronous replication, however, data written after the last completed replication run does not survive a failure. If you want to use the feature, you can configure volume-based replication in the Proxmox GUI or at the command line (Figure 5).
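
The data-loss window of asynchronous replication can be made concrete with a small simulation. The sketch assumes that replication runs at a fixed interval and completes instantly; the 15-minute interval and the write times are example values, and the function names are made up for the illustration.

```python
def last_sync_before(failure_time, interval, start=0):
    """Time of the last replication run at or before the failure."""
    if failure_time < start:
        raise ValueError("failure occurs before the first replication run")
    completed_runs = (failure_time - start) // interval
    return start + completed_runs * interval


def lost_writes(write_times, last_sync_time, failure_time):
    """Writes made after the last sync and up to the failure are lost."""
    return [t for t in write_times if last_sync_time < t <= failure_time]


if __name__ == "__main__":
    interval = 15                 # minutes between replication runs (example)
    writes = [5, 20, 31, 36, 40]  # minutes at which the VM wrote data
    failure = 37                  # minute at which the source node fails
    sync = last_sync_before(failure, interval)
    print("last replica taken at minute", sync)
    print("writes missing from the replica:", lost_writes(writes, sync, failure))
```

The shorter the replication interval, the smaller the window, but it never shrinks to zero the way it would with synchronous replication.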
