Intel’s powerful new Xeon Phi co-processor

The Xeon Phi accelerator card from Intel takes an unusual approach: Instead of GPUs, the Xeon Phi features a cluster of CPUs for easier programming.

In the high-performance computing field, an increasing number of users have turned to GPU computing, wherein a host computer copies data to the graphics card, which then returns a result. This procedure is especially helpful for applications that repeatedly run the same operation against a large volume of data. A GPU can play to its strength, performing a large number of computations, each of which processes one data element. GPUs can process some types of calculations (such as mining bitcoins) orders of magnitude more efficiently than CPUs.

This performance advantage comes at a price. The programming model, and thus the programming procedure, differs fundamentally from that of CPUs. As a consequence, existing programs cannot run directly on GPUs. Although the OpenCL parallel programming framework tries to hide and abstract as many of these differences as possible, developers still need to be aware of the differences between coding for CPUs and GPUs.

This problem is one of the reasons Intel decided to look for an intermediate path, introducing the Xeon Phi accelerator at the beginning of this year. The Xeon Phi, which is based on x86 technology, has received more attention in recent months, mainly because it is inside the world’s fastest supercomputer – the Tianhe-2; in fact, the 48,000 Xeon Phi cards built into the Tianhe-2 help it deliver nearly twice the raw performance of the second-place contender: the GPU-based Cray Titan. Here, I discuss the Xeon Phi card and show how it is different.

Single- to Multiple- to Many-Core

In 2005, Intel reached a dead end with its NetBurst microarchitecture and buried the decades-old dogma that higher clock speeds mean more computing power. Since then, the company has increased the performance of its processors despite only modest changes to clock speeds by improving the microarchitecture and relying on multicore technology.

To take full advantage of additional processing power, developers need to adapt their programs for multiple-core systems. Intel launched the Tera-scale program to develop programming methods for future multicore and many-core architectures. As a first result of the Tera-scale research program, Intel introduced new hardware in 2007: the Teraflops Research Chip, also known as Polaris. Polaris included 80 simple cores and achieved a performance of 19.4GFLOPS per watt with a total capacity of 400GFLOPS. Just for comparison’s sake: The then state-of-the-art Core 2 Quad processor managed only 0.9GFLOPS per watt with a total capacity of 85GFLOPS.

Unfortunately, the Polaris was extremely difficult to program and was never available as a commercial product – only five people ever wrote software for the chip. Intel’s next step was to develop the Single-Chip Cloud Computer (SCC, code-named: Rock Creek). The processor included 48 cores (24 units, each with two cores), which were largely identical to the cores of the Pentium-S processors and communicated with each other via a high-speed network connection and four DDR3 memory channels. Intel manufactured a few hundred SCCs and distributed them to its own labs, as well as to research institutions worldwide.

The SCC was capable of acting as a cluster on a chip, booting a separate Linux instance on each of the 48 cores. A 48-core machine with a single operating system instance failed, mainly because the SCC did not ensure cache coherency on the hardware side, unlike current commercial processors. In other words, changes to the data in one core’s cache were not automatically propagated to caches of the other cores. Thus, efficient use necessitated different programming concepts and far-reaching changes to the operating system – or even a custom operating system.
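The effect of missing hardware cache coherency can be illustrated with a toy model. The following sketch is an assumption-laden simplification, not a description of the actual SCC hardware: the `Core` class, its methods, and the address `0x10` are all hypothetical names chosen for the illustration. It shows how one core keeps reading a stale value until software explicitly writes back and invalidates the cached copies.

```python
# Toy model: two cores with private write-back caches over shared memory.
# Illustration only; this does not model the real SCC cache hierarchy.

class Core:
    def __init__(self, memory):
        self.memory = memory      # shared main memory (a dict)
        self.cache = {}           # private cache: address -> value

    def read(self, addr):
        # Serve from the private cache if present, otherwise fetch from memory.
        if addr not in self.cache:
            self.cache[addr] = self.memory[addr]
        return self.cache[addr]

    def write(self, addr, value):
        # Write-back policy: only the private cache is updated.
        self.cache[addr] = value

    def flush(self, addr):
        # Without hardware coherency, software must write the value back.
        self.memory[addr] = self.cache[addr]

    def invalidate(self, addr):
        # Software must also drop a possibly stale cached copy.
        self.cache.pop(addr, None)


memory = {0x10: 1}
core_a, core_b = Core(memory), Core(memory)

core_b.read(0x10)                 # core B caches the old value 1
core_a.write(0x10, 42)            # core A updates only its own cache
stale = core_b.read(0x10)         # core B still sees the old value

core_a.flush(0x10)                # explicit write-back by software
core_b.invalidate(0x10)           # explicit invalidation by software
fresh = core_b.read(0x10)         # core B now fetches the new value

print(stale, fresh)               # prints "1 42"
```

On a cache-coherent processor, the hardware performs the equivalent of the `flush` and `invalidate` steps automatically, which is why an unmodified operating system can span all cores.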

Larrabee’s Heritage

Starting in 2007, Intel tried to develop its own powerful GPU, which it code-named Larrabee. Unlike many GPUs, Larrabee would not consist of many special-purpose computing units but of numerous modified Pentium processors (P54C) that ran x86 code. The first-generation Larrabee was never launched on the market, probably because it was not powerful enough to compete with NVidia and AMD/ATI.

However, armed with the additional experience gained from the Tera-scale program, Intel decided to push on with the Larrabee project in the form of an accelerator card for HPC that would compete with NVidia’s Tesla GPUs.

Initial prototypes went to research institutions to test the card’s usability. The result is an accelerator card, code-named Knights Corner, which has been available commercially as the Xeon Phi since early 2013.

Architecture

The Xeon Phi is available as a PCI Express card in configurations that differ with respect to the number of available cores (57, 60, or 61), memory size (6, 8, or 16GB), clock speed (1053, 1100, or 1238MHz), and cooling concept (active or passive).

The basic architecture is the same for all cards: Like the Larrabee, the Xeon Phi’s CPU cores are based on first-generation Pentium (P54C) technology. The architecture supports 64-bit and floating-point instructions (x87) and a vector unit with 32 512-bit registers, with support for processing 16 single-precision floating-point numbers or 32-bit integers in parallel. Additionally, each core is multithreaded four times so that a 7100 series Xeon Phi with 61 cores can run up to 244 threads at the same time.
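The lane and thread counts quoted above follow from simple arithmetic. The short sketch below only recomputes them from the figures given in the text; the variable names are made up for the illustration.

```python
# All figures are taken from the architecture description in the text.
VECTOR_REGISTER_BITS = 512
ELEMENT_BITS = 32            # single-precision float or 32-bit integer
THREADS_PER_CORE = 4
CORE_COUNTS = (57, 60, 61)

# Number of 32-bit elements that fit into one vector register.
lanes = VECTOR_REGISTER_BITS // ELEMENT_BITS

# Hardware threads per card for each available core count.
threads = {cores: cores * THREADS_PER_CORE for cores in CORE_COUNTS}

print(lanes)     # 16
print(threads)   # {57: 228, 60: 240, 61: 244}
```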

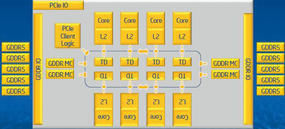

The cores each have a 64KB L1 cache and a 512KB L2 cache and are interconnected by a ring bus. Unlike most multiple-core processors, the Xeon Phi provides no shared cache between the cores; however, in contrast to the SCC, the Xeon Phi supports hardware-based cache coherency. Up to eight GDDR5 memory controllers use two channels to connect the memory to the ring bus (Figure 1), to which the PCIe interface is also connected.

Besides the processor and memory, the Xeon Phi accelerator card also has sensors for monitoring temperature and power consumption. A system management controller makes these readings accessible to both the Xeon Phi processor and the host system. The controller can also manage the processor, for example, to force a reboot of the card. Because the card does not have any input and output options of its own, all data must flow through the PCIe interface and the system management bus. Physically, the card is about the same size (and uses the same sort of heat sinks) as a high-performance graphics card, but without the display outputs.

Operating System

Because the Xeon Phi has full-fledged cores, not just highly optimized special-purpose computing units, it can run its own operating system. Intel leverages this ability to manage the board’s resources and simplify software development.

When the host computer boots, the Xeon Phi first appears as a normal PCI device; the processor on the board is inactive. To activate the card, the host system’s system management controller helps load an initrd image with a built-in BusyBox into the Xeon Phi’s memory.

The Linux kernel used on the Xeon Phi differs only slightly from an ordinary x86 kernel; the necessary adjustments are comparable to those for an ARM image. After the image is transferred, the processor is started, and Linux is booted on the card for the first time. The coprocessor either uses initrd directly as the root filesystem, or it loads a filesystem from the host computer into the card’s memory, or it uses NFS to retrieve a filesystem.

Data Exchange

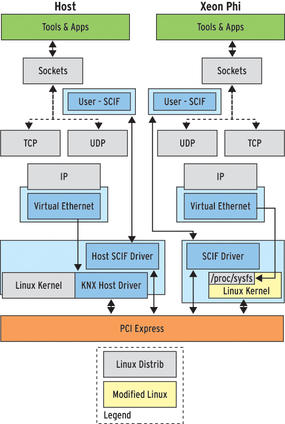

Because the card only communicates with the outside world through the PCIe interface, you might be wondering how it implements communication with the host computer and other components. In principle, the PCIe bus allows the host system to write data to the memory of an expansion card. Conversely, expansion cards can also write to the memory of the host computer. However, writing directly to the memory of the host system is extremely awkward for an application programmer because this kind of low-level data transfer usually only takes place at the driver level. Intel therefore provides the Symmetric Communications Interface (SCIF), a library that includes an easy-to-use interface for low-level data transfer at the memory level. SCIF is the most efficient way of exchanging data between the host computer and the Xeon Phi card, and it also provides a means for transferring the root filesystem to the memory of the card.

Networking via KVM

Intel has implemented additional data exchange options. The most important of these options integrates the card into a network. Intel uses the Virtio framework, among other things, for network access. Virtio provides virtual Ethernet interfaces on both the host system and the card’s operating system, with the data traveling across the PCIe bus. The Ethernet interfaces operate in typical Linux style. In other words, the virtual Ethernet interface on the host operating system can connect to a physical port on the host computer and the Linux running on the card can join the local network via the virtual network interface (Figure 2).

Following the same principle, Intel has also implemented a virtual serial port and a virtual block device. The virtual serial port is designed to transfer the boot log, debug messages, and other status information to the host computer. The block device is actually intended to provide the Linux swap space on the card, but if you modify the init scripts supplied by Intel appropriately, it also provides a root filesystem and thus basically a fourth option for booting the card.

Build Problems

Xeon Phi developers are likely to have two questions in particular:

- Are special steps necessary for compiling source code on the card?

- How can I use the card’s resources as efficiently as possible?

If you blindly follow Intel’s marketing claims, special programming steps are not a problem because the Xeon Phi consists of x86 cores. However, the Xeon Phi cores differ significantly from those used by conventional x86 processors. Both the vector units and the associated registers are different, and the cores lack MMX and all later SIMD extensions. In other words, they cannot handle MMX, SSE, or AVX instructions, nor do they have the registers introduced with these instruction sets.

These limitations are a problem because, ever since the introduction of the SSE instruction set, both Intel and AMD have recommended using it or its successors for floating-point calculations and discourage computations with the x87 unit. However, apart from its own vector instructions, the accelerator card only understands x87 instructions for floating-point work. This problem is one of the reasons why you cannot easily use, say, a GNU toolchain. Although Intel has developed a patch for the GNU assembler and the GCC compiler to support compiling software for the Xeon Phi card, GCC has no support for the vector unit, because generating code for it would require extensive optimization work in the compiler. To use the vector unit, developers need a proprietary compiler by Intel.

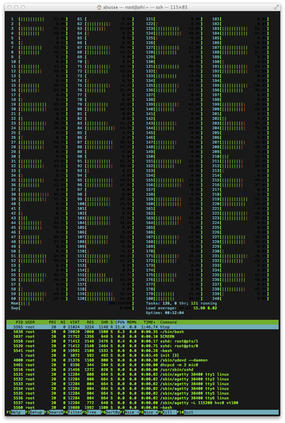

Developers have several options for fully exploiting the computing power of the card. Because the card is an independent system that uses Linux as its operating system and only requires the resources of the host computer for input and output, you can run programs on it as you would on any other computer. A programmer can therefore use the usual methods, such as POSIX threads or OpenMP, to write and execute parallelized programs (Figure 3).
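The decomposition behind such thread-parallel programs can be sketched in a few lines. The example below is written in Python for brevity and is only an analogy: on the card itself, the same pattern would typically be expressed in C with OpenMP or POSIX threads, and in CPython the threads do not actually execute this arithmetic in parallel. The function names and the choice of a sum of squares as the workload are assumptions made for the illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum_of_squares(chunk):
    # Each worker runs the same operation on its own slice of the data.
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    # Split the input into roughly equal contiguous chunks, one per worker.
    size = max(1, -(-len(data) // workers))   # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Reduction step: combine the per-worker partial results.
        return sum(pool.map(partial_sum_of_squares, chunks))


data = list(range(1000))
total = parallel_sum_of_squares(data, workers=4)
print(total)   # 332833500
```

The structure (split the data, apply the same operation per chunk, reduce the partial results) is what an OpenMP `parallel for` loop with a reduction clause expresses in a single annotation.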

You should be aware that the Xeon Phi has relatively little memory, considering the number of cores. On average, a card from the 5100 series only has 35MB of RAM for each thread, compared with several hundred megabytes for each thread on current server systems. Because of the limited amount of memory, it makes sense to operate the Xeon Phi as an accelerator unit in interaction with the host machine or other machines on the network. Several options are available for implementing this interaction. SCIF, which I referred to earlier, provides a convenient approach to exchanging data between the card and the host computer. Thus, the host can outsource certain parts of the computation to the Xeon Phi. For even more convenience, developers can use the Message Passing Interface (MPI) to hand over computations. This approach is feasible because the Xeon Phi, to oversimplify things, looks just like another computer on the network with a large number of cores. Finally, an OpenCL compiler can outsource computations to the card.
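The memory-per-thread figure can be checked with a quick calculation. The sketch below assumes that a 5100-series card has 8GB of memory and 60 cores; both values are assumptions picked from the configuration options listed in the architecture section, not figures stated for that series in this article.

```python
# Assumed 5100-series configuration (not stated explicitly in the article).
MEMORY_GB = 8
CORES = 60
THREADS_PER_CORE = 4   # from the architecture description

threads = CORES * THREADS_PER_CORE
memory_mb = MEMORY_GB * 1024
mb_per_thread = memory_mb / threads

print(threads, round(mb_per_thread, 1))   # 240 34.1
```

Under these assumptions, each hardware thread gets roughly 34MB, which is in line with the figure of about 35MB quoted above.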

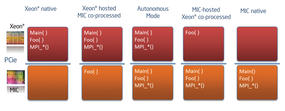

Because the Xeon Phi is partly a standalone system, the reverse path is also possible: Work can transfer from the Xeon Phi to the host computer or another computer on the network. Figure 4 shows the options for distributing the workload.

Xeon Phi vs. GPU

What makes the Xeon Phi card worthwhile? The Kepler generation of NVidia Tesla cards offers about three times the raw performance in floating-point operations per second (FLOPS) for a slightly higher price, which makes the Xeon Phi vastly inferior in terms of value for money.

On closer inspection, however, the Xeon Phi has two things going for it: First, it supports the MPI programming model, which has been around for close to 20 years, whereas OpenCL is only five years old. One could argue that MPI programmers are more experienced and will therefore find it easier to write better programs. Second, thanks to its PC-related architecture, the Xeon Phi is capable of running existing software with significantly fewer modifications.

Outlook

Intel has already announced the successor to the Xeon Phi, which currently goes by the name Knights Landing. The platform will expand to include the AVX-512 instruction set, which conventional processors by Intel will probably also use in the future. AVX-512 is also intended to provide better compatibility with SSE and AVX. Whether this also applies to the Xeon Phi, only time will tell.

Far more interesting is that Intel’s Knights Landing not only will be an expansion card but also will serve as a standalone platform or processor. This new technology thus takes a first step away from supporting a specific application profile toward a general solution for servers with highly parallel applications. Finally, Xeon Phi components might eventually make inroads into the desktop market, which would make the Xeon Phi a harbinger for a new generation of many-core systems.