Managing Virtual Infrastructures with oVirt 3.1

Version 3.1 of the oVirt management platform for virtual infrastructures has recently become available. On Fedora 17, the new release is easy to install and deploy.

Some years ago, Red Hat caused displeasure in the Linux community because the graphical administration tool for its own virtualization solution, RHEV, required a Windows machine. This Microsoft legacy resulted from Red Hat's 2008 acquisition of Qumranet, the Israeli company behind the KVM hypervisor, along with what was at the time a nearly finished desktop virtualization product based on Windows.

Bye-Bye Windows

Although the Red Hat developers ported all parts of the management component, RHEV-M, from C# to Java in RHEV 3.0, use of the Administrator Console for RHEV still officially required a Windows machine with Internet Explorer 7 or greater because the oVirt-based front end had not yet mastered all of its predecessor’s features.

Since Red Hat handed over oVirt to the community under the Apache Open Source license, development of the software has continued under the umbrella of the oVirt project, with contributions by SUSE, Canonical, Cisco, IBM, Intel, and others. Now, oVirt has the potential to do battle with commercial solutions by VMware, Citrix, and Microsoft as a powerful, free, alternative cloud management platform that is by no means limited to managing Red Hat-based infrastructures.

The oVirt project is based on many technologies developed by Red Hat with the kernel-based virtual machine (KVM) and the libvirt virtualization API. The first final and stable oVirt version (3.0) is contained as a technology preview in Red Hat’s commercial virtualization solution RHEV 3.0 that became available in January of this year.

RHEV Setup

An installation of RHEV for servers typically consists of one or more hypervisor hosts (RHEV-H), a management system (RHEV-M) that manages either RHEV- or RHEL-based hypervisor systems, a host for the administration console, and a PC (Windows or Linux) for the user portal. In addition, you need one or more storage systems (SAN, iSCSI).

The respective minimum and recommended hardware requirements are listed on Red Hat’s promotional page when you download the evaluation version of RHEV 3. Red Hat suggests a minimal setup for test purposes that consists of an Enterprise Virtualization Manager Server (RHEV-M) with a local ISO domain and a hypervisor host. According to Red Hat, the former should be, if possible, a RHEL6 system with quad-core processor, 16GB of RAM, 50GB of local disk space, and a 1Gb NIC, which provides the ISO domain mentioned earlier.

As a client for the Admin Console, Red Hat recommends a Windows 7 system. This just leaves the mini-hypervisor, RHEV-H, for the virtualization host. RHEV-H can be downloaded as a 172MB evaluation version from Red Hat, but according to Red Hat, the host can also run RHEL 6 (and thus also CentOS 6) or Fedora 17. The hardware recommended by Red Hat is a dual-core server with 16GB of RAM, 50GB of disk space, and a 1Gb NIC. However, the CPU must be a 64-bit processor with a mandatory virtualization extension: either AMD-V or Intel VT.
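The CPU requirement can be checked before you install anything. The following is a minimal sketch, assuming a Linux host that exposes its CPU feature flags in /proc/cpuinfo; the function names are hypothetical and not part of oVirt. It relies on the usual flag names: lm for 64-bit long mode, vmx for Intel VT, and svm for AMD-V.

```python
def cpu_flags(cpuinfo_text):
    """Return the feature flags of the first CPU listed in /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def virtualization_support(cpuinfo_text):
    """Map the hypervisor requirements named in the text to CPU flags."""
    flags = cpu_flags(cpuinfo_text)
    return {
        "64bit": "lm" in flags,      # long mode, i.e., a 64-bit CPU
        "intel_vt": "vmx" in flags,  # Intel VT
        "amd_v": "svm" in flags,     # AMD-V
    }


def is_suitable_hypervisor(cpuinfo_text):
    """True if the CPU is 64-bit and offers either Intel VT or AMD-V."""
    support = virtualization_support(cpuinfo_text)
    return support["64bit"] and (support["intel_vt"] or support["amd_v"])
```

On the prospective hypervisor, you would pass in the content of /proc/cpuinfo, for example is_suitable_hypervisor(open("/proc/cpuinfo").read()). Note that a flag in this list only shows that the CPU offers the extension; it can still be switched off in the firmware setup.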

In contrast to RHEV 2.2, RHEV 3 is no longer based on RHEL 5, but on RHEL 6, which offers a number of advantages KVM-wise. For example, guests can use up to 64 virtual CPU cores and up to 2TB of memory. Additionally, the RHEL 6 kernel supports all current KVM technologies, such as KSM (kernel samepage merging), VHostNet, transparent huge pages (THP), and x2APIC.

One further component is missing in the RHEV virtualization toolbox: “RHEV for Desktops,” which is an add-on for RHEV server that supports a virtual desktop infrastructure (VDI). Communication between a client or thin client and a RHEV-virtualized desktop operating system is handled by the Spice protocol developed by Qumranet, which Red Hat has now improved so that, for example, users can run any USB 1.1/2.0 device on a client, with Spice passing it through to the remote virtualized desktop operating system.

Multi-Component Glue

The new oVirt version 3.1 is available on the oVirt project homepage. The package contains the JBoss application server 7.0 as the foundation for the oVirt engine, the KVM hypervisor, an SDK written in Python, an admin and a user portal as web applications, and the components required to create the libvirt/VDSM nodes (hosts). Each oVirt node has its own hypervisor, its own storage pool, and its own disk images.

oVirt nodes communicate through a stack comprising libvirt and the oVirt host agent (VDSM) with the oVirt engine, which ultimately manages the array of computers. The virtual guest systems use oVirt guest agents written in Python to tell the oVirt engine, among other things, which IP address they use, what applications are installed on them, and how much memory they need.

Other components include the Java-based web interface for setting up, configuring, and managing hypervisors and guest systems; the user portal, with which users log on to the guest systems; and, finally, the REST API that lets your own programs or scripts tap into the oVirt engine. Additionally, a command-line interface is available. The back end of the oVirt engine stores all of the data and configurations in a PostgreSQL database and authenticates users via OpenLDAP or Active Directory. Independent of RHEV and Red Hat, oVirt aims to establish itself as a free alternative to VMware and Citrix for “orchestrating” cloud and virtualization environments.
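To give an idea of how your own scripts can tap into the REST API, here is a minimal Python sketch that prepares an authenticated request. Several details are assumptions rather than facts from this article: the /api entry point, the vms collection, the admin@internal user name, and the port depend on your installation, and the helper name build_api_request is hypothetical.

```python
import base64
import urllib.request


def build_api_request(host, path, user, password, port=443):
    """Prepare (but do not send) a GET request against the engine's REST API.

    The URL layout https://<host>:<port>/api/<path> is an assumption;
    adjust it to match your engine.
    """
    url = "https://{0}:{1}/api/{2}".format(host, port, path.lstrip("/"))
    credentials = "{0}:{1}".format(user, password).encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    request = urllib.request.Request(url)
    # HTTP Basic authentication with the engine user and password
    request.add_header("Authorization", "Basic " + token)
    # Ask for an XML representation of the resource
    request.add_header("Accept", "application/xml")
    return request
```

A script would then send the request with urllib.request.urlopen(request) and parse the XML in the response. Because engine-setup generates its own certificates, the client typically has to be told to trust the engine's CA certificate before the HTTPS connection succeeds.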

What’s New in oVirt 3.1

For those who tested oVirt 3.0 on CentOS 6.3 or Fedora 17, the new features in the current version 3.1 will be of interest. For example, the oVirt engine now supports Red Hat’s Directory Server and IBM’s Tivoli Directory Server for authentication. Additionally, oVirt 3.1 can create and clone live snapshots and supports hotplugging for virtual machines. It can also mount external block devices on virtual machines as local disks, and you can configure storage clusters with oVirt 3.1.

A particularly interesting feature is the new installation mode “All-In-One,” which allows administrators to configure a single Fedora host as both management machine and hypervisor. Further innovations include support for mounting any POSIX-compliant filesystem, importing virtual machines, integration of the above-mentioned Python SDK, and the command-line interface. Developers will also look forward to the extensions to the REST API with JSON and session support.

oVirt on Fedora

In this article, I’ll demonstrate the basic principle of deploying the back end on a management machine. Detailed documentation of the solution is available online. The oVirt engine presupposes a Java Runtime Environment and the JBoss 7 application server. Because oVirt stores all of its data in a database, the setup also requires a PostgreSQL database.

Fedora 17 and CentOS 6.3 include the first stable oVirt version 3.0 out of the box. The recently published second stable oVirt version 3.1 can be installed on Fedora 17 via the Fedora – x86_64 Test Updates package source. Fedora 17 then offers the new oVirt version 3.1 as an update (Figure 1).

A direct upgrade of an installed oVirt version 3.0 is not possible on Fedora. As stated in the release notes for the current version, you must remove the previously installed oVirt v3.0, exporting any existing virtual machines beforehand, then update the package repositories in Fedora 17, update to oVirt 3.1, and re-import the virtual machines. The Fedora version announced for November 18 should include oVirt 3.1 by default.

Alternatively, you can set up the package sources required for oVirt 3.1 by installing the project's release package with Yum:

yum localinstall http://ovirt.org/releases/ovirt-release-fedora.noarch.rpm

oVirt itself can then be installed in the form of a series of packages belonging to the framework via the package manager. For example, the engine core is found in the ovirt-engine package. Installing this with yum install ovirt-engine automatically installs the other required packages, such as the oVirt Engine Backend and the Configuration Tool for Open Virtualization Manager. The following packages are also installed: Database Scripts for Open Virtualization Manager (which writes the required tables and configurations to the PostgreSQL database), ISO Upload Tool for Open Virtualization Manager, Log Collector for Open Virtualization Manager, Notification Service for Open Virtualization Manager Tools, and Open API for Red Hat Enterprise Virtualization Manager.

The other mandatory packages are Setup and Upgrade Scripts for Open Virtualization Manager and Common Libraries for Open Virtualization Manager Tools. The JBoss application server, the JRE (OpenJDK version 1.7.0), the PostgreSQL database (9.1.3), and various other components, such as VDSM, are also automatically installed. If you install oVirt on the hypervisor host, you only need the ovirt-node* packages.

Start Your Engines

The ovirt-engine-setup-3.1.0-1.FC17 package provides the engine-setup script, which you need to run as root. The script itself requires at least 2GB of free memory. If you have already used engine-setup for installing and testing oVirt 3.0, you must first run engine-cleanup to remove any remains of the old installation, close any open database connections, delete tables, and so on.

The script prompts for a number of important parameters, including the password for the administrator (admin), the web application (Admin Portal), and the database. It shows you the respective default values in square brackets, which you can accept by pressing Enter. Additionally, engine-setup asks you for your company name and the default storage back end. You have a choice of iSCSI, Fibre Channel, and NFS. NFS is good for a quick test run, and the script offers to create an NFS share as an ISO domain on the local server, where you can specify the desired mountpoint.

The script then configures the iptables firewall and lists all of the settings before it starts on the oVirt setup. During this step, it also generates the required certificates and creates the database tables. Incidentally, you can also pass parameters to engine-setup directly; running engine-setup --help provides information about this.

Afterward, the oVirt interface is available on http://<management server> and, optionally, via HTTPS. Note that the oVirt framework requires at least 4GB of RAM; otherwise, the web interface will not start.
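Because the memory requirements are easy to overlook, a small pre-flight check can save a failed setup run. The following Python sketch parses text in the format of /proc/meminfo and compares it with the two figures mentioned in this article (4GB of RAM for the framework, 2GB of free memory for engine-setup). Reading "free memory" as the MemFree field is an assumption, and the function names are hypothetical.

```python
KB_PER_GB = 1024 * 1024


def parse_meminfo(meminfo_text):
    """Parse /proc/meminfo-style text into a dict of field name -> kilobytes."""
    values = {}
    for line in meminfo_text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            values[key.strip()] = int(parts[0])
    return values


def meets_memory_requirements(meminfo_text, min_total_gb=4, min_free_gb=2):
    """Check total and free memory against thresholds given in GB."""
    info = parse_meminfo(meminfo_text)
    total_ok = info.get("MemTotal", 0) >= min_total_gb * KB_PER_GB
    free_ok = info.get("MemFree", 0) >= min_free_gb * KB_PER_GB
    return total_ok and free_ok
```

On the management machine, you would call meets_memory_requirements(open("/proc/meminfo").read()). The kernel reports slightly less than the installed RAM as MemTotal, so on a machine with exactly 4GB, a somewhat lower threshold such as min_total_gb=3.5 is the more realistic test.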

Now you can log in to the User Portal, Administrator Portal, or Reports Engine. The account for the Admin Portal is admin with the password set during the configuration.

Data Center

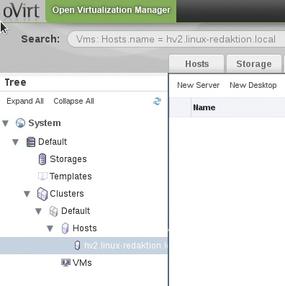

In the navigation area on the left, starting with the Default data center, you can see the administrable resources of the virtualized infrastructure, consisting of data centers, clusters, storage, and disks, as well as hosts, pools, templates, and VMs (Figure 2).

A complex role and authorization schema allows the administrator to delegate administrative tasks. The entry point for this is the “Configure” link at the top left (Figure 3).

A host can be added with a click on New in the Hosts tab, although adding hosts only makes sense once the appropriate conditions, such as storage for the virtual disks, ISOs, and so on, are met.

Configuring the Firewall

In addition to the IP address (or FQDN) of the host, the dialog expects a symbolic name and a password. The host must also be accessible via SSH.
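The same three pieces of information could also be handed to the engine by a script through the REST API. The following Python sketch only builds the XML document for such a request. The element names (host, name, address, root_password) are assumptions about the API's host representation, not something confirmed in this article, and build_host_xml is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def build_host_xml(name, address, root_password):
    """Build an XML description of a host from the values the dialog asks for.

    The element names are assumptions; check them against the API
    documentation of your engine before use.
    """
    host = ET.Element("host")
    ET.SubElement(host, "name").text = name              # symbolic name
    ET.SubElement(host, "address").text = address        # IP address or FQDN
    ET.SubElement(host, "root_password").text = root_password
    return ET.tostring(host, encoding="unicode")
```

A script would send the resulting document in the body of an authenticated POST request with the Content-Type application/xml. ElementTree escapes special characters such as & or < in the password, which is the main reason not to assemble the XML by string concatenation.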

If the hypervisor is a freshly installed Fedora 17 system, as in this example, you will not be able to add the host in oVirt at first because the connection setup to the host fails. If you don’t know the appropriate iptables rule off the top of your head, the quickest workaround in a test environment is to stop iptables as root on the hypervisor like this:

systemctl stop iptables.service
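Before you retry the dialog, you can verify from the management machine whether the SSH port on the hypervisor is reachable at all. This is a minimal Python sketch using only the standard library; the function name port_is_open and the default timeout are arbitrary choices for illustration.

```python
import socket


def port_is_open(host, port=22, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures
        return False
```

If port_is_open("hypervisor.example.com") returns False (the hostname is a placeholder), either a firewall is still in the way or the SSH daemon is not running on the host. A True result only shows that the port accepts connections, not that the login with the root password will succeed.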

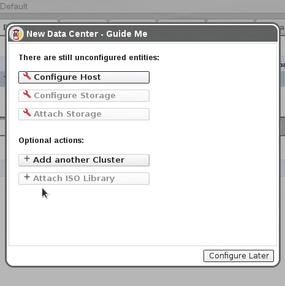

Many dialogs in oVirt start with a click on Guide Me, a wizard that lets you, for example, connect to an existing ISO library or storage (Figure 4).

To create a virtual machine, select the target host in the desired cluster in the navigation frame on the left-hand side; then, in the right-hand panel, open the Virtual Machines tab and click on New Server or New Desktop.

Conclusions

Thanks to oVirt, the Red Hat Enterprise Virtualization solution makes up ground on the well-established solutions by VMware, Citrix, and Microsoft’s System Center 2012. Because of the open license, it also supports self-built but professional solutions for the management of virtual environments. Unfortunately, oVirt is not even officially used in RHEV 3, at least not in terms of the admin front end. However, with Fedora 17, you can already see how Red Hat envisions a future without .NET and Active Directory. The next RHEV version will finally do without a Windows administration computer.