RAM Revealed

Virtualized systems drive up RAM requirements. Storage access is faster when excess RAM is used as a page cache, and having enough RAM helps avoid the dreaded performance killer: swapping. We take a look at the current crop of RAM.

Random Access Memory (RAM) has always been used by processors and computer systems to store the programs currently being executed along with their data. Back in the 1980s, operating systems started to use excess RAM as a page cache for caching disk access.

The technology in use since the start of the millennium is Double Data Rate Synchronous Dynamic Random Access Memory (DDR-SDRAM). In contrast to its predecessor, SDRAM, DDR-SDRAM transfers data on both the rising and falling edges of the clock signal, thus doubling the data rate at the same clock frequency.
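The effect of transferring on both clock edges can be illustrated with a little arithmetic. The following is a minimal sketch, assuming a 64-bit data bus per channel (the non-ECC width) and theoretical peak rates only; the function name and parameters are made up for this illustration.

```python
def peak_transfer_rate_mb_s(io_clock_mhz: float,
                            bus_width_bits: int = 64,
                            transfers_per_clock: int = 2) -> float:
    """Theoretical peak transfer rate of one memory channel in MB/s.

    transfers_per_clock is 1 for single data rate SDRAM and 2 for
    DDR-SDRAM, which transfers on the rising and the falling edge.
    """
    mega_transfers_per_s = io_clock_mhz * transfers_per_clock
    return mega_transfers_per_s * bus_width_bits / 8  # 8 bits per byte


if __name__ == "__main__":
    # Same 133 MHz clock, once with one and once with two transfers per cycle
    print(peak_transfer_rate_mb_s(133, transfers_per_clock=1))
    print(peak_transfer_rate_mb_s(133))
    # DDR3-1600 runs its I/O clock at 800 MHz
    print(peak_transfer_rate_mb_s(800))
```

Real-world throughput stays below these figures because of refresh cycles, access latencies, and controller overhead.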

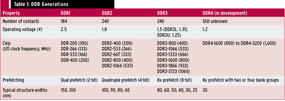

DDR-SDRAM has reached the third generation now, and work is in progress on the fourth (Table 1).

Current systems use DDR3, but because servers and desktops are typically deployed for three to five years, many datacenters and offices still use DDR2 RAM. Initial prototypes of DDR4 also exist. However, because the energy efficiency of DDR3 continues to improve and Load-Reduced (LR)-DIMMs will support even larger DDR3 memory configurations in the future, analysts don’t anticipate a significant breakthrough for DDR4 until 2014.

Error Correction

No matter which generation of DDR you look at, you always have a choice between legacy memory modules and memory modules with error correction. The latter include an Error-Correcting Code (ECC) mechanism and are mainly found in server applications. The benefits are obvious: Because of the integrated Hamming code, ECC memory modules can identify and correct 1-bit errors, and 2-bit errors are at least identified.
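The principle of correcting 1-bit errors and detecting 2-bit errors can be shown at small scale. The sketch below is a toy example, not the code used on real modules: it protects only four data bits with a Hamming(7,4) code plus one overall parity bit, whereas ECC DIMMs protect 64 data bits with eight check bits. The function names and the bit layout are assumptions chosen for this illustration.

```python
from typing import List, Optional, Tuple


def encode(data: List[int]) -> List[int]:
    """Encode 4 data bits into an 8-bit codeword.

    Index 0 holds an overall parity bit; indices 1..7 form a Hamming(7,4)
    word with check bits at positions 1, 2, and 4.
    """
    code = [0] * 8
    code[3], code[5], code[6], code[7] = data
    code[1] = code[3] ^ code[5] ^ code[7]
    code[2] = code[3] ^ code[6] ^ code[7]
    code[4] = code[5] ^ code[6] ^ code[7]
    code[0] = sum(code[1:]) % 2  # makes the parity of all 8 bits even
    return code


def decode(code: List[int]) -> Tuple[str, Optional[List[int]]]:
    """Return a status string and the data bits (None if uncorrectable)."""
    syndrome = 0
    for position in range(1, 8):
        if code[position]:
            syndrome ^= position  # XOR of the positions of all set bits
    overall_parity = sum(code) % 2

    fixed = list(code)
    if syndrome == 0 and overall_parity == 0:
        status = "ok"
    elif overall_parity == 1:
        # Odd number of flipped bits: treat as a single-bit error.
        # The syndrome is the position of the flipped bit
        # (0 means the overall parity bit itself).
        fixed[syndrome] ^= 1
        status = "corrected"
    else:
        # Parity is even but the syndrome is not zero: two bits flipped.
        return "double-bit error detected", None
    return status, [fixed[3], fixed[5], fixed[6], fixed[7]]


if __name__ == "__main__":
    word = encode([1, 0, 1, 1])
    word[5] ^= 1          # simulate a single flipped bit
    print(decode(word))
```

Flipping any one bit of a codeword yields the status `corrected` together with the original data; flipping any two bits yields the double-bit status without data.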

Both the mainboard and the processor need to support ECC; if they do not, ECC modules will still run but without error correction. Error-correcting modules can be identified visually by counting the soldered memory chips. Whereas legacy Dual Inline Memory Modules (DIMMs) typically have eight memory chips on one side, ECC DIMMs house a ninth memory chip. ECC modules are slightly more expensive than the legacy modules, but the enhanced capability is definitely worth the price in server operations.

Buffer Chips

Desktop computers and servers with one CPU socket use what are known as unbuffered DIMMs, which means the memory controller accesses the memory chips directly. This setup allows for a good price/performance ratio, but because the signals travel directly from the memory controller to each memory chip, the maximum amount of memory is restricted. This explains why dual-CPU systems use different memory modules that contain buffer chips or registers, thus extending the limits. If you look at the dual-CPU systems in the Intel Core microarchitecture (Xeon 5100, 5200, 5300, and 5400), you will see that they all used fully buffered DIMMs (FB-DIMMs) on the basis of DDR2.

On the downside, the Advanced Memory Buffer (AMB) that an FB-DIMM also features will increase energy requirements and produce more heat – this explains why the manufacturers fitted cooling fins to the memory modules.

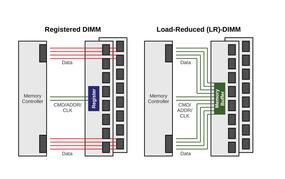

The Nehalem/Westmere microarchitecture that followed (Xeon 5500 and 5600) replaced FB-DIMMs with registered DIMMs on the basis of DDR3. The CMD/ADDR/CLK signal lines run through the register chip, but not the actual data connections. This means considerably reduced energy requirements compared with FB-DIMMs. LR-DIMMs (Figure 1), which are just around the corner, take this one step further.

LR-DIMMs have an Isolation Memory Buffer (iMB) through which all the signal lines run, but the iMB doesn’t use a special signal protocol (like the AMB on FB-DIMMs); it works just like normal registered DIMMs. Thus, the iMB hardly increases power consumption. Because all of the signal lines run through the iMB on LR-DIMMs, the electrical load on the memory controller is lower compared with registered DIMMs. From the memory controller’s point of view, LR-DIMMs act like single-rank modules. The dual-CPU systems in the Sandy Bridge microarchitecture expected in 2012 will be the first to support LR-DIMMs.

Rank

DIMMs always have a number of memory chips soldered onto them. The individual chips can use four (x4), eight (x8), or 16 (x16) signal lines. The signal lines on the memory controller are directly connected to the memory chips in the case of unbuffered DIMMs, so x4 chips are disqualified here – they can only be used in combination with buffer chips.

The memory area uniquely addressable by the memory controller has 64 (non-ECC) or 72 (ECC) signal lines and is referred to as a rank. For non-ECC memory modules, a rank can thus comprise either eight x8 chips, or four x16 chips. Memory modules populated with a single rank are referred to as single-rank modules and modules with two ranks as dual-rank modules. A single dual-rank DIMM puts the same load on the memory bus as two single-rank DIMMs.
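The chip counts per rank follow directly from dividing the rank width by the chip width. Here is a minimal sketch of that arithmetic; the function name is made up for this illustration, and it assumes every chip in a rank has the same width.

```python
def chips_per_rank(chip_width_bits: int, ecc: bool = False) -> int:
    """Number of identical memory chips needed to fill one rank."""
    rank_width_bits = 72 if ecc else 64
    if rank_width_bits % chip_width_bits != 0:
        raise ValueError(
            f"x{chip_width_bits} chips do not divide a "
            f"{rank_width_bits}-bit rank evenly"
        )
    return rank_width_bits // chip_width_bits


if __name__ == "__main__":
    print(chips_per_rank(8))             # non-ECC rank built from x8 chips
    print(chips_per_rank(16))            # non-ECC rank built from x16 chips
    print(chips_per_rank(8, ecc=True))   # ECC rank built from x8 chips
    print(chips_per_rank(4, ecc=True))   # ECC rank built from x4 chips
```

The ECC result for x8 chips matches the ninth chip visible on ECC DIMMs.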

In desktop applications, memory controllers support up to four ranks per memory channel, which is equivalent to two dual-rank DIMMs. Desktop mainboards with two memory controllers (dual-channel) thus typically have four RAM slots. Some boards have six slots; however, they only support one dual-rank DIMM in combination with two single-rank DIMMs per channel. Boards with three memory controllers (triple-channel) typically still have four RAM slots: two slots for channel A and one slot each for channels B and C.
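The rank limit per channel lends itself to a simple check. The following sketch assumes the four-ranks-per-channel limit stated above for desktop controllers; the function and constant names are hypothetical, and real boards may impose further restrictions listed in their manuals.

```python
from typing import List

MAX_RANKS_PER_CHANNEL = 4  # desktop limit named in the text


def fits_channel(dimm_ranks: List[int],
                 max_ranks: int = MAX_RANKS_PER_CHANNEL) -> bool:
    """Check whether the DIMMs on one channel stay within the rank limit.

    dimm_ranks lists the rank count of each DIMM on the channel,
    for example [2, 2] for two dual-rank modules.
    """
    return sum(dimm_ranks) <= max_ranks


if __name__ == "__main__":
    print(fits_channel([2, 2]))      # two dual-rank DIMMs
    print(fits_channel([2, 1, 1]))   # one dual-rank plus two single-rank DIMMs
    print(fits_channel([2, 2, 2]))   # three dual-rank DIMMs exceed the limit
```

This also shows why a three-slot channel only accepts one dual-rank DIMM next to two single-rank DIMMs: both layouts add up to four ranks.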

Green RAM

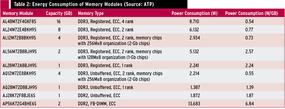

Besides the move from FB-DIMMs to registered DIMMs and LR-DIMMs, technology has also made progress in reducing energy consumption. For example, the operating voltage dropped from 2.5V for DDR1 to 1.8V for DDR2 and 1.5V for DDR3. DDR3L (1.35V) and DDR3U (1.25V) are two further reductions before DDR4 drops to 1.2V. Continuous miniaturization of the chip manufacturing process also offers benefits in terms of energy: DDR1 started at 150nm; the structure widths dropped in both DDR2 and DDR3, and the first DDR4 prototypes are based on a 30nm manufacturing process. All told, these measures reduce the energy requirements per gigabyte of RAM from just under 7 watts for ECC DDR2 FB-DIMMs to a current figure of 0.54 watts for ECC DDR3 Registered DIMMs (Table 2).
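To put the per-gigabyte figures into perspective, the sketch below scales them to a whole memory configuration. The 48GB capacity is a hypothetical example, and because the text gives the FB-DIMM figure as "just under 7 watts," the value of 7.0 used here is an upper bound.

```python
# Power per gigabyte as quoted in the text (watts)
WATTS_PER_GB = {
    "ECC DDR2 FB-DIMM": 7.0,           # "just under 7 watts": upper bound
    "ECC DDR3 registered DIMM": 0.54,
}


def memory_power_watts(module_type: str, installed_gb: int) -> float:
    """Estimated power draw of the installed RAM in watts."""
    return WATTS_PER_GB[module_type] * installed_gb


if __name__ == "__main__":
    installed_gb = 48  # hypothetical server configuration
    for module_type in WATTS_PER_GB:
        watts = memory_power_watts(module_type, installed_gb)
        print(f"{module_type}: {watts:.1f} W for {installed_gb} GB")
```

With these inputs, the DDR3 registered configuration needs less than a tenth of the power of the FB-DIMM configuration.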

The Author

Werner Fischer has been a technology specialist with Thomas-Krenn.AG and editor in chief of the Thomas Krenn wikis since 2005. His work focuses on hardware monitoring, virtualization, I/O performance, and high availability.