Spartan Camp

If you believe Red Hat’s marketing hype, the company has no less than revolutionized data storage with version 2.1 of its Storage Server. The facts tell a rather different story.

Software Defined Storage (SDS) is gaining popularity within the IT community. An SDS system is similar to a Storage Area Network (SAN) system, except, rather than operating on specialized, proprietary hardware systems, an SDS uses software to handle the storage on commodity hardware.

This emphasis on commodity hardware – and more transparent software systems – means the customer can avoid the expense and annoyance of the vendor lock-in associated with so many SAN solutions. In 2013, Red Hat introduced an SDS product designed to give users a viable alternative to a SAN: Red Hat Storage Server (RHSS) (see Figures 1 and 2).

Functionally, RHSS is modeled on classic storage systems: In the background, data resides in block storage, while on the customer-facing side, the device exports it via various interfaces, such as Fibre Channel, iSCSI, NFS, or Samba. Of course, you can easily implement all of these functions based on free software.

OpenStack Cloud Storage

Red Hat must have clearly understood that it would be very difficult to earn a penny on yet another SAN-like product without touting some kind of additional benefits. The company says RHSS actually extends the capabilities of classical storage systems, pointing to “seamless scalability” and “perfect connections to the cloud.”

If you read between the lines of the marketing bulletins, you will recognize the customer pool Red Hat intends to fish in with RHSS: big data, massively scalable storage – in short, cloud storage. Red Hat’s PR apparatchiks place special emphasis on the good OpenStack connectivity of RHSS version 2.1, which was released in September.

This turn toward OpenStack is interesting because Red Hat seemed to sleep through the OpenStack hype for a long time and did not enter the OpenStack development scramble until late in the game. Red Hat has apparently seen the light, and the company now claims to be the “top committer” to OpenStack.

Old Friends on the RHEL Base

When we looked at RHSS 2.1 in the lab, we quickly recognized it as a collection of old friends. Red Hat’s own operating system for enterprise customers, RHEL, provides the underpinnings for the system.

This stands to reason because Enterprise Linux forms the basis of almost all of Red Hat’s enterprise products. However, an additional component is needed to make RHSS into a modern and powerful tool.

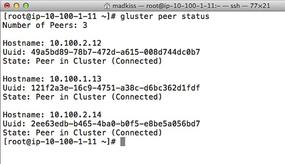

Enter GlusterFS (Figures 3 and 4).

Red Hat acquired Gluster late in 2011 and assimilated its only product, GlusterFS. Shortly after, Red Hat revamped many parts of the project, added release and feature management, and set its own developers to work on new features for distributed storage.

At first glance, the GlusterFS in RHSS seems to be the standard version, that is, the one that is also publicly available on the GlusterFS website. But you can assume that RHSS will take priority in internal development and that fixes will appear in RHSS before they trickle down into the official GlusterFS tree.

RHSS inherits all the features of GlusterFS: At least two nodes are necessary for redundant storage, and redundancy in turn opens up many storage scenarios.

GlusterFS can use translators to manage replicated (i.e., mirrored) volumes and volumes with striping. Last but not least, GlusterFS also supports distributed storage. You can even combine storage modes; for instance, a distributed, replicated volume is no problem for GlusterFS.
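To make the distributed, replicated case more concrete, here is a toy Python model of file placement in such a volume. It assumes that bricks are grouped into replica sets in the order they are listed, and it uses a stand-in hash (the first four bytes of MD5) split into equal ranges. GlusterFS’s distribute translator uses its own hash function and per-directory layout ranges, so the brick a given file lands on here will not match a real cluster. The brick names are hypothetical.

```python
import hashlib


def replica_sets(bricks, replica):
    """Group bricks into consecutive replica sets, in the order they were listed."""
    if replica < 1 or len(bricks) % replica != 0:
        raise ValueError("brick count must be a multiple of the replica count")
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


def place(filename, bricks, replica=2):
    """Return the replica set (list of bricks) that would store `filename`.

    Toy model only: a stand-in 32-bit hash is split into equal ranges,
    one per replica set; real GlusterFS uses its own hash and layouts.
    """
    sets = replica_sets(bricks, replica)
    h = int.from_bytes(hashlib.md5(filename.encode("utf-8")).digest()[:4], "big")
    span = 2**32 // len(sets)
    return sets[min(h // span, len(sets) - 1)]


# Hypothetical four-brick volume with two-way replication:
# (node1, node2) and (node3, node4) form the two replica sets.
bricks = ["node1:/bricks/b1", "node2:/bricks/b1",
          "node3:/bricks/b1", "node4:/bricks/b1"]
for name in ["report.pdf", "backup.tar", "notes.txt"]:
    print(name, "->", place(name, bricks))
```

Each file is written to every brick of exactly one replica set (mirroring), while different files spread across the sets (distribution), so losing one node in a set still leaves a full copy on its partner.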

Client Front Ends

GlusterFS has seen some major developments in recent months. In addition to the native FUSE client, it also offers an NFS front end that supports NFSv3 access. In RHSS, Red Hat additionally makes it possible to export existing GlusterFS volumes via a preinstalled Samba, so that even Windows hosts can access them.

Unfortunately, Red Hat has not integrated the GlusterFS VFS module for Samba (of which a beta version already exists) into RHSS. This potential improvement would let Samba access GlusterFS volumes directly through the libgfapi library instead of exporting a locally mounted FUSE filesystem.
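On the client side, the three front ends described above come down to different mount commands. The following Python helper only builds the argument lists and runs nothing. The server name, volume name, and mount point are hypothetical, and the Samba share name `gluster-<volume>` is an assumption about how RHSS names its exports, so check the share list on your own system.

```python
def mount_command(kind, server, volume, mountpoint, user=None):
    """Build (but do not run) a mount command for a GlusterFS volume.

    kind: "fuse" (native client), "nfs" (Gluster's built-in NFSv3 server)
    or "smb" (Samba export; share name assumed to be gluster-<volume>).
    """
    if kind == "fuse":
        return ["mount", "-t", "glusterfs", f"{server}:/{volume}", mountpoint]
    if kind == "nfs":
        # The Gluster NFS server speaks only NFSv3, so pin the version.
        return ["mount", "-t", "nfs", "-o", "vers=3",
                f"{server}:/{volume}", mountpoint]
    if kind == "smb":
        opts = f"username={user}" if user else "guest"
        return ["mount", "-t", "cifs", f"//{server}/gluster-{volume}",
                mountpoint, "-o", opts]
    raise ValueError(f"unknown front end: {kind}")


for kind in ("fuse", "nfs", "smb"):
    print(" ".join(mount_command(kind, "rhss1", "vol0", "/mnt/vol0", user="alice")))
```

A Windows host would instead map the same share (here `\\rhss1\gluster-vol0`) as a network drive; the CIFS mount simply reaches it from a Linux machine.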

Object Access

Red Hat says its Storage Server has an object access feature. The term is quite cloudy and refers to the trend of storage services natively providing a RESTful interface through which users can upload files.

In other words, object access is designed to make RHSS a full-fledged alternative to Amazon’s S3 or Google Drive by letting users upload files via a web interface. Again, Red Hat sticks to its GlusterFS guns and relies on the UFO approach: Unified File and Object (UFO) storage describes a technique in which the Swift proxy from OpenStack’s Swift object store (Figure 5) stores data on GlusterFS in the background, instead of on Swift’s own object servers. Red Hat claims to have contributed significantly to this patch and to have helped stabilize it and integrate the required functionality into GlusterFS.

In RHSS, Red Hat supplies a suitably patched GlusterFS and a prepared OpenStack Swift module, so users can access data directly over the OpenStack Swift protocol with a client such as Cyberduck.
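Under the hood, the Swift protocol is plain HTTP: An object is uploaded with a PUT request to a URL of the form `/v1/<account>/<container>/<object>`, authenticated by an `X-Auth-Token` header. The sketch below uses only Python’s standard library to build (not send) such a request. The endpoint, account name, and token are hypothetical; in the UFO setup, the Swift account is assumed to correspond to a GlusterFS volume, but check the RHSS documentation for the exact mapping and for the authentication step that yields the token.

```python
import urllib.parse
import urllib.request


def swift_put(endpoint, account, container, obj, data, token):
    """Build a Swift 'create object' request; does not send it."""
    # quote() keeps '/' by default, so pseudo-directories in object names survive.
    path = "/".join(urllib.parse.quote(p) for p in ("v1", account, container, obj))
    return urllib.request.Request(
        f"{endpoint.rstrip('/')}/{path}",
        data=data,
        method="PUT",
        headers={"X-Auth-Token": token},
    )


# Hypothetical proxy endpoint, account, and token.
req = swift_put("http://rhss-proxy:8080", "AUTH_vol0", "photos",
                "2013/holiday.jpg", b"...jpeg bytes...", "tk-example")
print(req.get_method(), req.full_url)
# To actually upload: urllib.request.urlopen(req)
```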

Geo-Replication

Red Hat goes to great lengths in its advertising to emphasize the geo-replication feature. Geo-replication is intended to expand GlusterFS with an option for multi-site failover scenarios. If a complete data center fails, say, because of flooding, the data remains available, because the software has already used geo-replication to copy everything at the Gluster level to another data center.

A simple failover would ensure that incoming requests are forwarded to the backup data center, where a setup based on the current data would carry on serving up the platform’s capabilities as if nothing had happened.

Again, geo-replication is a core GlusterFS function that RHSS has simply inherited. Unfortunately, the geo-replication feature has an unpleasant side effect: A follow-the-sun scenario is not possible with GlusterFS geo-replication.

After the data center fails over to a new location, the storage at the old site needs to be completely resynchronized with the new site, as if the data at the “old” site had become physically unusable, even if that is not the case. Despite this problem, geo-replication is a useful feature for disaster recovery.
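The one-way nature of geo-replication can be illustrated with a toy model: The master site periodically pushes new or changed files to the slave site, comparing checksums so that unchanged data is not copied again. This is a simplification under stated assumptions (each site is modeled as a Python dict mapping paths to file contents), not a description of how GlusterFS implements geo-replication internally.

```python
import hashlib


def digest(data):
    """Checksum used to detect changed files without comparing full contents."""
    return hashlib.sha256(data).hexdigest()


def sync_once(master, slave):
    """One asynchronous, one-way pass: make `slave` mirror `master`.

    Returns the sorted list of paths that had to be copied.
    """
    copied = []
    for path, data in master.items():
        if path not in slave or digest(slave[path]) != digest(data):
            slave[path] = data
            copied.append(path)
    for path in [p for p in slave if p not in master]:
        del slave[path]  # deletions on the master propagate as well
    return sorted(copied)


master = {"db/a.dat": b"v1", "db/b.dat": b"v1"}
slave = {}
print(sync_once(master, slave))  # first pass copies every file
master["db/a.dat"] = b"v2"
print(sync_once(master, slave))  # later passes copy only what changed
```

Nothing in this model ever flows from slave to master. If the slave site takes over and accepts writes after a failover, the old master has no record of what changed in the meantime, so the safe way back is a complete resynchronization, which is the limitation noted above.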

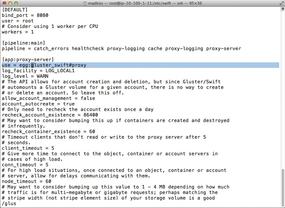

People disagree on the crucial issue of whether colorful GUIs are necessary or merely annoying. The management tools of classical SAN storage systems are very popular; in fact, the vendors of these devices cite their sophisticated management tools as an argument for the superiority of their own technology.

Hardcore admins, however, are most likely to feel at home at the command line and have very little use for graphical tools. For them, RHSS is the perfect solution; a graphical configuration tool is not included with the storage server out of the box. The Administration Guide for RHSS is almost 200 pages long and explains in detail how to use and configure certain functions; however, all of this happens exclusively at the command line. RHSS is thus targeted at experienced storage professionals who prefer to use the keyboard.
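For readers who have not used it, the command-line workflow centers on the `gluster` tool. The Python sketch below only assembles a typical command sequence for a four-node, two-way replicated (that is, distributed-replicated) volume and prints it rather than executing it. Host names, brick paths, and the volume name are hypothetical.

```python
def setup_commands(volume, hosts, brick_dir, replica=2):
    """Return the gluster CLI calls to build a distributed-replicated volume.

    Assumes the commands run on hosts[0], so only the other hosts are probed.
    Consecutive bricks form a replica set, so list hosts such that each set
    spans different machines.
    """
    if len(hosts) % replica != 0:
        raise ValueError("host count must be a multiple of the replica count")
    cmds = [["gluster", "peer", "probe", h] for h in hosts[1:]]
    bricks = [f"{h}:{brick_dir}" for h in hosts]
    cmds.append(["gluster", "volume", "create", volume,
                 "replica", str(replica), *bricks])
    cmds.append(["gluster", "volume", "start", volume])
    cmds.append(["gluster", "volume", "info", volume])
    return cmds


for cmd in setup_commands("vol0", ["node1", "node2", "node3", "node4"], "/bricks/b1"):
    print(" ".join(cmd))
```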

OpenStack Compatibility

Red Hat has pushed on with the development of various OpenStack components in the past few months so that they also support GlusterFS. GlusterFS integration in Nova, OpenStack’s compute component, for example, originated at Red Hat’s headquarters in Raleigh, as did the patch for QEMU that allows GlusterFS to serve as a storage back end for virtual machines without complex mount operations. Then there’s Cinder, which now also has a GlusterFS back end. You’ll find more about the modules and their Gluster integration on the OpenStack website.

Thanks to all these features, Gluster acts as a storage jack-of-all-trades for OpenStack with direct access to the major components. Red Hat’s commitment to OpenStack integration is evidenced by the detailed documentation on the topic of “RHSS and OpenStack” in the RHSS guide (Figure 6).
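As an example of what the Cinder integration looks like in practice, the GlusterFS driver is enabled in `cinder.conf` and pointed at a shares file that lists one `server:/volume` entry per line. The Python sketch below generates both with `configparser`. The option names follow the Havana-era Cinder GlusterFS driver as we understand it; the file paths, host, and volume names are hypothetical, so verify everything against the documentation for your OpenStack release and merge the keys into your existing configuration rather than replacing it.

```python
import configparser
import io


def cinder_gluster_config(shares_path):
    """Return cinder.conf text enabling the GlusterFS volume driver."""
    cfg = configparser.ConfigParser()
    cfg["DEFAULT"] = {
        "volume_driver": "cinder.volume.drivers.glusterfs.GlusterfsDriver",
        "glusterfs_shares_config": shares_path,
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


def shares_file(entries):
    """One GlusterFS share per line, in server:/volume form."""
    return "".join(f"{server}:/{volume}\n" for server, volume in entries)


print(cinder_gluster_config("/etc/cinder/glusterfs_shares"))
print(shares_file([("rhss1", "cinder-vol")]))
```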

But Who Is Going to Pay for That?

After a few days of testing with a temporary license, the price list for RHSS finally reached our lab – prompting some incredulous frowns from the test team, who initially believed there must be some kind of misunderstanding. According to the list, a two-node cluster with support on weekdays costs no less than 9,000 Euros, while the same cluster with 24x7 support is priced at 14,000 Euros. Realistically, however, no one will operate cloud storage with only two nodes; assuming you have four storage hosts, a one-year license with 24x7 support will cost 26,000 Euros. And, if you move to a three-year contract, you will pay almost 70,000 Euros for the same system. Mind you: The prices are only for licensing the Red Hat Storage Server. The required hardware is likely to send the price of the solution skyrocketing. The documentation does not reveal whether Red Hat also has aggressive discount strategies similar to its competitors.
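Breaking the quoted list prices down per node and per year makes them easier to weigh against a hardware budget. The figures below are the ones from the price list; the three-year total is taken as 70,000 Euros, a slight overestimate of “almost 70,000,” and hardware is excluded throughout.

```python
def per_node_year(total_eur, nodes, years):
    """License cost per storage node per year, in Euros (hardware excluded)."""
    return total_eur / (nodes * years)


# (description, total price in Euros, nodes, contract years) from the price list
quotes = [
    ("2 nodes, weekday support, 1 year", 9_000, 2, 1),
    ("2 nodes, 24x7 support, 1 year", 14_000, 2, 1),
    ("4 nodes, 24x7 support, 1 year", 26_000, 4, 1),
    ("4 nodes, 24x7 support, 3 years", 70_000, 4, 3),  # "almost 70,000"
]
for label, total, nodes, years in quotes:
    print(f"{label}: {per_node_year(total, nodes, years):,.0f} EUR per node-year")
```

This works out to 4,500 Euros per node and year with weekday support and 6,500 to 7,000 Euros with 24x7 support; even the three-year contract still comes to about 5,800 Euros per node and year.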

Against the backdrop of these prices, it seems questionable whether any advantage remains for RHSS customers compared with traditional SANs, although Red Hat marketing documents claim that RHSS 2.1 “can generate up to 52 percent in storage system savings and an additional 20 percent in operational savings.” These figures clearly represent a comparison with conventional, proprietary SAN products, rather than a home-grown solution.

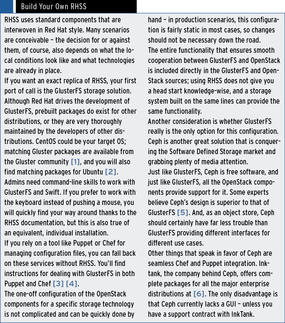

Has Red Hat simply overstepped the mark? Although the company has put some work directly into developing the components that are now integral parts of the RHSS, these components are available for free without RHSS. Admins can even combine them in a similar way, thus creating a kind of DIY RHSS (see the box titled “Build Your Own RHSS”) without thousands of dollars of capital outlay.


A DIY storage server obviously differs from a real RHSS in one pertinent respect: RHSS comes with a support contract, so the admin can ask Red Hat for help in case of difficulty. Because Red Hat now owns GlusterFS, the core component of RHSS, support customers effectively receive help directly from the upstream developers. It doesn’t get any better than this! Still, a cautious buyer should keep in mind that Red Hat’s support focuses on the software, whereas the hefty cost of SAN support covers the complete system of proprietary hardware and software.

If you want to take a closer look at RHSS before shelling out all this money, you can take it for a test drive. Red Hat offers AWS-hosted VMs designed to give you an impression of RHSS’s capabilities (Figures 1 and 2). A mouse click starts a complete RHSS setup, comprising six storage nodes, on which the tester can then try out the individual GlusterFS and Swift functions. A very detailed manual available through the test drive GUI explains how this works.

All told, testers can put RHSS through its paces for 15 hours, divided into three separate test runs, each lasting no longer than five hours. Registering for the test can take some time; applications for the test must be vetted manually. The Linux Magazine editorial team was granted access about 12 hours after applying (only a fraction of the announced 48-hour waiting period).

One benefit of the test drive is that Red Hat provides VMs for the server components, as well as Windows- and Linux-based clients. Linux uses FUSE to support local GlusterFS mounts, and the Windows client uses SMB to access GlusterFS files.

Conclusions

RHSS is technically sound work. Built on RHEL, it gives users a combination of GlusterFS and OpenStack Swift. The fact that Red Hat has invested a large amount of work in those very services is to the company’s credit; both RHSS and the projects themselves have benefited considerably from these improvements, and GlusterFS has become much better in terms of quality in the past year and a half. But hard work alone does not appear to justify the price that Red Hat is asking for its product. In fact, it is questionable whether Red Hat could ever extend RHSS to the point where a price of some 9,000 Euros would be justified for a system with two nodes. Apart from GlusterFS, Swift, and Samba, the product does not currently appear to contain any added-value components, let alone anything that could be viewed as a unique selling point, such as an easy-to-use configuration interface.

You could set up a similar cloud-based storage cluster for OpenStack with Ubuntu or SUSE. The difference is basically the support that Red Hat includes in the price. Now that GlusterFS belongs to Red Hat, that support actually comes from upstream developers.

Despite the high cost, RHSS is still less expensive than operating a SAN with specialized hardware. Red Hat is clearly targeting customers who would otherwise have bought a SAN and are forced to purchase a support contract due to internal requirements.