Die Hard

NIST has chosen the Keccak algorithm as the new cryptographic hash standard, but in real life, many users are still waiting to move to its predecessor, SHA-2.

Cryptographic hash functions are an important building block for secure communications on the Internet, and much has changed in recent years. MD5 and SHA-1, which have been in use for many years, turned out not to be as secure as originally thought. The Flame virus, which was discovered on many computers in the Middle East in May 2012, exploited an MD5 vulnerability to install itself via the Windows Update service.

Following these cryptographic breakthroughs, the National Institute of Standards and Technology (NIST) decided to launch a competition for the development of a new hash standard. In October 2012, the winner was chosen: the Keccak algorithm, which was developed by a team of Belgian scientists. This is the second competition of this type: The symmetric encryption algorithm AES, which is found in nearly every cryptographic application today, was selected in 2000 in the course of a similar competition.

So, what do cryptographic hash functions do? To put it simply, they convert input of arbitrary length to output of a fixed length. The output is typically written as a hex number: for example, the MD5 hash of the word “Hello” is 8b1a9953c4611296a827abf8c47804d7. To be secure, a cryptographic hash function needs to fulfill two conditions:

- Irreversible: It must be extremely difficult to find input that reproduces a given hash.

- Collision resistant: It must be extremely difficult to find two inputs that generate the same output.
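The fixed-length property can be checked with a few lines of Python. This is a minimal sketch that assumes only the standard hashlib module; the helper name md5_hex is made up for this illustration. It reproduces the MD5 value quoted above and shows that the digest stays 32 hex characters long regardless of the input size.

```python
import hashlib


def md5_hex(text: str) -> str:
    """Return the MD5 digest of a UTF-8 encoded string as a hex string.

    md5_hex is a hypothetical helper name used only in this sketch.
    """
    return hashlib.md5(text.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # Inputs of very different lengths all map to 32 hex characters.
    for text in ("Hello", "Hello" * 1000, ""):
        digest = md5_hex(text)
        print(f"{len(text):5d} input chars -> {len(digest)} hex chars: {digest}")
```

MD5 appears here only because the text uses it as an example; it should not be chosen for new applications.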

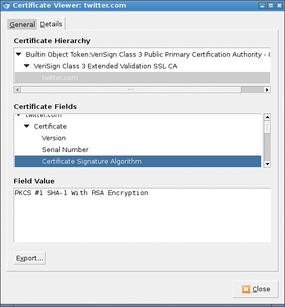

Cryptographic hash functions are used for many purposes, the most important being digital signatures. Signature methods such as RSA do not sign the entire input, but only the hash of the input. Hash functions are used in many other applications, too. For example, secure protocols such as SSL/TLS are complex constructs that use hash functions as building blocks for different purposes.
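The "hash first, then sign" idea can be sketched with textbook RSA. The following toy example is an illustration under loud assumptions: the key is built from two small, well-known Mersenne primes, there is no padding scheme, and the function names are invented for this sketch. Real signature software uses vetted libraries and standardized padding instead.

```python
import hashlib

# Toy key material (assumption for illustration only): two known Mersenne
# primes. The key is far too small and too structured for real use.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent; needs Python 3.8+


def digest_as_int(message: bytes) -> int:
    """Hash the message with SHA-256 and reduce the digest modulo N."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Sign only the hash of the message, not the message itself."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Recompute the hash and compare it with the value in the signature."""
    return pow(signature, E, N) == digest_as_int(message)


if __name__ == "__main__":
    sig = sign(b"pay 10 euros")
    print(verify(b"pay 10 euros", sig))    # same message, same hash
    print(verify(b"pay 1000 euros", sig))  # different message, different hash
```

Because the signature covers only the hash, anyone who can produce a second message with the same hash can reuse the signature for it. This is why collisions in the hash function translate directly into forged signatures.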

MD5 Broken, SHA-1 Endangered

In 2004, a team led by Chinese scientist Xiaoyun Wang first demonstrated a practical collision in the MD5 function by producing two input files that resulted in the same MD5 hash. The warning signs had been there much longer, however: As early as 1996, the late Professor Hans Dobbertin from Bochum, Germany, warned of vulnerabilities in MD5.
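What a collision looks like can be illustrated with a deliberately weakened hash. The sketch below is not the attack by Wang's team, which exploited the internal structure of full MD5; it simply brute-forces a collision on a SHA-256 digest truncated to a few bits, an assumption chosen so the search finishes in moments. The function names are hypothetical.

```python
import hashlib


def truncated_sha256(data: bytes, bits: int) -> int:
    """Return the first `bits` bits of the SHA-256 digest as an integer."""
    digest = int.from_bytes(hashlib.sha256(data).digest(), "big")
    return digest >> (256 - bits)


def find_collision(bits: int = 24):
    """Try counter-based inputs until two of them share a truncated digest."""
    seen = {}  # truncated digest -> first message that produced it
    counter = 0
    while True:
        message = f"message-{counter}".encode("ascii")
        tag = truncated_sha256(message, bits)
        if tag in seen:
            return seen[tag], message
        seen[tag] = message
        counter += 1


if __name__ == "__main__":
    first, second = find_collision(24)
    print(first, second, hex(truncated_sha256(first, 24)))
```

With only 24 bits of output, a collision typically turns up after a few thousand attempts. For an intact hash function with hundreds of output bits, the same brute-force search is out of reach, so practical collisions require a structural weakness like the one found in MD5.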

The Chinese researchers were not content just to attack MD5. In 2005, Wang’s team improved known attacks on the SHA-1 standard, which was regarded as secure at the time. Although these attacks remain theoretical – nobody has been able to cause a real collision up to the present day – one thing was clear: SHA-1 was not secure for the long term.

This just left the previously little-used hash functions of the SHA-2 family, by the names of SHA-224, SHA-256, SHA-384, and SHA-512. Again, however, some cryptographers saw issues looming; the methods that SHA-2 uses differ only slightly from those used by MD5 and SHA-1.
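The numbers in the SHA-2 names are the output lengths in bits. A short sketch, assuming Python's standard hashlib module and a hypothetical helper named digest_bits, makes this visible:

```python
import hashlib

# Names as hashlib spells them; the family members listed in the text.
SHA2_FAMILY = ("sha224", "sha256", "sha384", "sha512")


def digest_bits(name: str) -> int:
    """Return the output length of the named hash function in bits."""
    return hashlib.new(name).digest_size * 8


if __name__ == "__main__":
    for name in SHA2_FAMILY:
        print(f"{name}: {digest_bits(name)} bits")
```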

So, NIST announced that it would be developing a new hash standard and launched a competition to do so. The idea was for the world’s leading cryptographers to submit proposals for the new standard. The proposals were discussed at several conferences and investigated for potential vulnerabilities. Besides the obvious reasons, calls to pay more attention to the subject in general had become more insistent. Cryptographic hash functions had been ignored by researchers for too long.

In all, 64 proposals were submitted for the competition, and these were subsequently reduced in several rounds to 14 and then 5 finalists. The candidates that made it into round two were then allowed to make minor improvements to their algorithms to respond to potential criticism.

The competition did not just lead to the development of several new hash functions; it also improved the cryptographers’ understanding of them. One important finding in the competition was that the SHA-2 functions are much better than many people thought. A couple of weeks before the final decision, well-known cryptographer Bruce Schneier thus proposed ending the competition without a winner.

None of the finalists was considerably better than SHA-512; some were slightly faster, but not so much faster that they would justify creating a new standard. Schneier himself had produced one of the most promising candidates for SHA-3, along with a group of other scientists, in the form of the Skein hash function. Regardless of how the competition ended, Schneier recommended that application developers should stick with SHA-512, at least for the time being, because the algorithm was proven and minutely investigated.

But Schneier’s words fell on stony ground. On October 2, 2012, NIST announced the winner: Keccak, developed by a Belgian team of scientists, is to become the new SHA-3 standard. For co-developer Joan Daemen, this was a major success. He had already worked on the Rijndael encryption algorithm, which later became the AES standard.

The reason that NIST gives for its decision is that Keccak’s internal structure is very different from that of the existing SHA-2 functions. If problems do arise with SHA-2 in the future, there will still be an alternative that does not suffer from the same issues. At the end of the day, Bruce Schneier was happy with the decision. Despite his previous criticism, he emphasized that he was not at all worried about Keccak’s security and that the decision was a good one.

NIST has still not published the final SHA-3 standard, which means that users are advised to avoid SHA-3 for the time being. Because multiple variants of the Keccak algorithm could be used, it will not become clear until after the standard is published which parameters the function needs to be used with.

SHA-1 Use Widespread

In other words, the message is still unchanged: Secure applications should continue to use SHA-2. Although the issues with SHA-1 have been known for seven years, however, the move away from it is far from complete. SHA-1 is still very widespread, and MD5 has by no means disappeared.

In 2008, a team of researchers presented an attack against MD5 signatures at 25C3, the Chaos Computer Club’s annual congress. This attack allowed them to create a rogue CA certificate that appeared to be issued by a certificate authority trusted by all common browsers. It was not until after this attack that certificate authorities were persuaded to stop using MD5. Instead of moving directly to SHA-2, however, most of them switched to SHA-1, which is also a potentially vulnerable algorithm.

The interesting thing is that an attack of this kind should not be possible, even with the insecure MD5 function. SSL certificate authorities add a randomly generated serial number to the certificate. For the attack to succeed, the attackers need to create two certificates with an identical MD5 hash, so they have to know in advance exactly what data the authority will add to the certificate. However, when investigating the RapidSSL CA, the researchers discovered that it incremented the serial number instead of generating a random number. Because the certificates were fairly cheap, the attackers could afford to request several of them, guessing the serial number each time, and the attack succeeded after several attempts.
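The difference between the two ways of assigning serial numbers can be sketched as follows. This is a simplified model under stated assumptions, not RapidSSL's actual issuing code: the class SequentialSerials, the functions random_serial and predict_after, and the starting value 1000 are all hypothetical.

```python
import secrets


class SequentialSerials:
    """Model of a CA that simply counts upward (the vulnerable behavior)."""

    def __init__(self, start: int = 1000):
        self._next = start

    def issue(self) -> int:
        serial = self._next
        self._next += 1
        return serial


def random_serial(bits: int = 64) -> int:
    """Model of a CA that draws each serial from a secure random source."""
    return secrets.randbits(bits)


def predict_after(observed_serial: int, certificates_in_between: int) -> int:
    """Attacker's guess for a future serial issued by a counting CA."""
    return observed_serial + certificates_in_between + 1


if __name__ == "__main__":
    ca = SequentialSerials()
    observed = ca.issue()   # attacker buys a certificate and reads the serial
    ca.issue()              # one certificate is issued to another customer
    target = ca.issue()     # attacker's next certificate
    print(predict_after(observed, 1) == target)
    print(random_serial())  # nothing comparable can be predicted here
```

With a counter, the attacker only has to estimate how many certificates other customers will request in the meantime. With a 64-bit random serial, the content of the signed certificate cannot be known in advance, so a pair of colliding certificates cannot be prepared.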

An attack on MD5 with real-world implications was subsequently revealed in 2012. The Flame virus contained a valid Microsoft code signature and was thus able to propagate via the Windows Update Service. The precise details of the attack are unknown, but cryptographers assume that the Flame programmers have knowledge of more MD5 vulnerabilities that they have not yet revealed.

Move to SHA-2 Slow

In 2011, more or less all SSL certificates were signed with a SHA-1 signature (Figure 1).

It was not until 2012 that the first certification authorities started to move to SHA-2. The problem here was backward compatibility. If you asked a certification authority, you would likely receive the answer that Windows XP, which did not support SHA-2 signatures prior to Service Pack 3, was still commonly in use. Compatibility issues of this kind are likely to increase in the future. After all, who wants to lock older smartphones out of their web service just to improve security? (See the “Using SHA-2” box for more.)

The situation is even more difficult when it comes to transmission protocols. The legacy but still popular SSL standard version 3 only supports MD5 and SHA-1 signatures. The successors of SSL, TLS 1.0 and 1.1, do not improve on this; only TLS 1.2 supports newer algorithms based on SHA-2.

On the server side, you need version 1.0.1 of OpenSSL to enable TLS 1.2, for example, on an Apache web server. However, the only browser to support TLS 1.2 thus far is Opera. This means that server administrators can enable the new, safer standard, but it will go virtually unused. Disabling the legacy algorithms is therefore out of the question for the time being. Disabling MD5 signatures, however, is something that you can (and should) do today.

You can enable TLS 1.2 – given OpenSSL 1.0.1 and a recent 2.2 or 2.4 version of the Apache web server – using the SSLProtocol configuration option. Listing 1 provides a potential server configuration.

Listing 1: Apache Configuration

SSLProtocol -SSLv2 +SSLv3 +TLSv1 +TLSv1.1 +TLSv1.2
SSLCipherSuite TLSv1:SSLv3:!SSLv2:HIGH:MEDIUM:!LOW:!MD5
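To check from the client side which protocol version a server actually negotiates, a short Python sketch can help. It assumes Python 3.7 or later built against a reasonably recent OpenSSL; the function names tls12_context and negotiated_version are made up for this example, and the host name in the usage lines is a placeholder.

```python
import socket
import ssl
from typing import Optional


def tls12_context() -> ssl.SSLContext:
    """Build a client context that refuses anything older than TLS 1.2."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def negotiated_version(host: str, port: int = 443,
                       timeout: float = 5.0) -> Optional[str]:
    """Connect to host:port and return the negotiated protocol name."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with tls12_context().wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


if __name__ == "__main__":
    # "www.example.org" is a placeholder; substitute your own server.
    print(negotiated_version("www.example.org"))
```

If the server does not offer TLS 1.2 or later, the handshake fails with an ssl.SSLError instead of silently falling back to an older protocol.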

Conclusions

You are unlikely to encounter security problems with SHA-1 today, but the experience with MD5 teaches us that migration from vulnerable algorithms can take a long time. Despite the fact that security issues had been known since 1996, it was not until the Flame virus successfully attacked the MD5 signatures of the Windows Update Service in 2012 – 16 years later – that many authorities began to address the problem.

Initial collisions are likely to be reported for SHA-1 in the next few years. However, the type of collision is important for the success of an attack: The problems with MD5 did not become relevant until attackers succeeded in creating meaningful data, such as certificates, with a collision. Still, it did not take long for the problems with MD5 to become evident, and the attacks soon became more serious.

The Author

Hanno Böck is a freelance journalist and volunteer developer with Gentoo Linux. He also works for the web hosting provider schokokeks.org, which relies entirely on free software for its service offerings.