OpenMP

The powerful OpenMP parallel do directive creates parallel code for your loops.

The HPC world is racing toward Exascale, resulting in systems with a very large number of cores and accelerators. To take advantage of this computational power, users have to get away from serial code by writing parallel code as much as possible. Why run 100,000+ serial jobs on an Exascale machine when you can accomplish that with cloud computing? OpenMP is one of the programming technologies that can help you write parallel code.

OpenMP has been around for about 21 years for Fortran and C/C++ programming. Its directives tell the compiler how to create parallel code in certain regions of a program. If the compiler understands the directives, it creates parallel code to fulfill them. Directives have proven to be a good approach to easy parallelization, so OpenACC borrowed this approach (see previous articles).

Directives just look like comments to a compiler that doesn't understand them, so you can keep one source tree for both the original code and the parallel code. Simply changing a compiler flag builds the code for the original code version or for a parallel code version.

Initially, OpenMP focused solely on CPUs. Shared Memory Processing (SMP) systems, in which each processor (core) could “see” all of the others, were coming online. OpenMP provided a very convenient way to write parallel code that could run on all of the processors in the SMP system or a subset of them. As time went on, people integrated MPI (Message Passing Interface) with OpenMP for running code on distributed collections of SMP nodes (e.g., a cluster of four-core processors).

With the ever-increasing demand for more computation, companies developed HPC “accelerators,” which are general non-CPU processors such as GPUs (graphics processing units), DSPs (digital signal processors), FPGAs (field-programmable gate arrays), and other specialized processors. Fairly quickly, GPUs jumped into the lead, offering hundreds if not thousands of lightweight threads. With the help of various tools, users could write code that executed on the GPUs and produced massive speedups for many applications.

Starting with OpenMP 4.0, the OpenMP standard added directives that targeted GPUs and has expanded on this capability with the recent release of OpenMP 5.0.

In this first of several articles introducing OpenMP, I want to get you to take your serial Fortran or C/C++ code and start porting it to parallel processing. To make this as simple as possible, in this and the next two articles I explain a very small number of directives you can use to parallelize your code. Only the most used or easiest to use directives are discussed to illustrate that you don’t need all of the directives and clauses in the standard; just a small handful will get you started porting your code and can result in real performance gains. These articles won’t be extensive because you can find many tutorials online.

OpenMP

OpenMP has a fairly long history in the HPC world. It was started in 1996 by several vendors, each with their own SMP, to create vendor-neutral shared memory parallelism. OpenMP uses pragmas in the form of comments to tell the compiler how it should process the code. With a vendor-neutral approach to SMP, code could be moved easily to other compilers or other operating systems and rebuilt. If the compiler wasn't OpenMP aware, then it would just ignore the comments and build serial code, allowing one code base to be used for serial or parallel code.

OpenMP has matured over the years, steadily adding new features to the standard:

- 1996 – Architecture Review Board (ARB) formed by several vendors implementing their own directives for SMP.

- 1997 – OpenMP 1.0 for C/C++ and Fortran added support for parallelizing loops across threads.

- 2000, 2002 – Version 2.0 of Fortran, C/C++ specifications released.

- 2005 – Version 2.5 combined both specs into one.

- 2008 – Version 3.0 added support for tasking.

- 2011 – Version 3.1 improved support for tasking.

- 2013 – Version 4.0 added support for offloading (and more).

- 2015 – Version 4.5 improved support for offloading targets (and more).

OpenMP is designed for multiprocessor/multicore shared memory machines exclusively using threads. It offers explicit, but not automatic, parallelism, giving the user full control. As previously mentioned, control is achieved by the use of directives (pragmas) embedded in the code.

The OpenMP API has three parts: compiler directives, run-time library routines, and environment variables. The compiler directives are used for such things as spawning a parallel region of code, dividing blocks of code per thread, distributing loop iterations among threads, and synchronizing work among the threads.

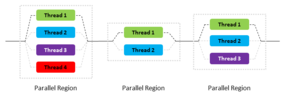

In general, OpenMP operates on a fork-and-join model of parallel execution. As shown in Figure 1, application execution proceeds from left to right. Initially the application starts with a single thread, the master thread shown in black. The application then hits a parallel region in the code and the master thread creates a “team” of parallel threads. This is the “fork” phase. The portion of the code in the parallel region is then executed in parallel, where the master thread is one of the threads (the other threads are shown in light gray). When the parallel region ends, the threads in the team synchronize and only the master thread continues; this is the “join” phase.

The OpenMP run-time library has routines for various purposes, such as setting and querying the number of threads, querying unique thread IDs, and querying the thread pool size.

OpenMP environment variables allow you to control execution of parallel code at run time, including setting the number of threads, specifying how loop iterations are divided, and enabling or disabling dynamic threads.

Classically, the run-time portion of OpenMP assigns a thread to each available core on the system (I cover GPUs in a later article), which can be controlled by OpenMP directives in the code or environment variables. The important thing to remember is that OpenMP will use all of the cores if you let it.

OpenMP Directives

Specifying the OpenMP directives in your code is straightforward (Table 1). In Fortran, the directives are specially formatted comments; in C, they are pragmas. In both cases, a compiler that doesn't understand OpenMP simply ignores them.

Table 1: Specifying OpenMP Directives

| Fortran | C |
| --- | --- |
| `!$OMP` or `!$omp` | `#pragma omp` |

Parallel Region

The first step in porting code to OpenMP is to use the fundamental OpenMP parallel construct to define the parallel region (Table 2). This directive creates a team of threads for the parallel region. After the directive, each thread executes the code, including any subroutines or functions. The end directive synchronizes all threads.

Table 2: Defining the Parallel Region

| Fortran | C |
| --- | --- |
| `!$omp parallel`<br>`...`<br>`!$omp end parallel` | `#pragma omp parallel`<br>`{`<br>`  ...`<br>`}` |

The parallel region, delimited by omp parallel and end parallel, has a couple of restrictions: It must be contained within a single routine of the code, and the code inside the parallel region must be structured. You cannot jump into or out of a parallel region (i.e., no GOTO commands that cross its boundary).

Each thread in the team is assigned a unique ID (thread ID), generally starting with zero. These thread IDs can be used for any purpose the user desires. For example, if a parallel region seems to be running slowly, you can use the thread ID to identify whether a thread is running slower than the others.

Some clauses that I will discuss in a later article can be added to the parallel directive to allow more flexibility or to allow easier coding for certain patterns. The focus of this and subsequent articles is to use just a few directives, so you can start porting serial code to OpenMP.

Notice in Table 3 that you can nest parallel regions. The first parallel region will use N threads (N cores), and in the second (nested) parallel region, each of those N threads becomes the master of its own team of N threads. Therefore, you can have as many as N × N = N^2 threads running at once. If you don't control the number of threads per parallel region carefully, you can generate more than one thread per core, perhaps inhibiting performance. In some cases, this is a desired behavior, because some processors have cores that can accommodate more than one thread at a time. Just be careful not to overload cores with too many threads.

Table 3: Nesting Parallel Regions

| Fortran | C |
| --- | --- |
| `!$omp parallel`<br>`...`<br>`!$omp parallel`<br>`...`<br>`!$omp end parallel`<br>`...`<br>`!$omp end parallel` | `#pragma omp parallel`<br>`{`<br>`  ...`<br>`  #pragma omp parallel`<br>`  {`<br>`    ...`<br>`  }`<br>`  ...`<br>`}` |

Parallelizing Loops with Work-Sharing Constructs

The parallel region spawns a team of threads that each do work, which is how the performance of an application can be improved (spreading the work across multiple cores). The work-sharing constructs must be placed inside the parallel regions. If you don't do this, then only one thread is used. In general, the work-sharing construct is not capable of spawning new threads. Only the omp parallel directive can do that (more on this in a future article).

Each thread in the team has its own data, although cooperating threads can share data (termed “shared” data). Some directives can perform reductions within the team and copy data to the threads.

In general, because OpenMP is based on the SMP model, most variables are shared between the threads by default. For example, in Fortran this could include COMMON blocks or MODULES. In C, file scope variables and static variables are shared. On the other hand, the index variable of a parallelized loop is private to each thread, as is any variable declared inside the parallel region in C.

The directive pairs in Table 4 allow you to break a loop across threads in the team. OpenMP splits the loop iterations as evenly as possible across all of the threads in the team without overlap. Because a, b, and c are shared, nothing is copied when the threads are created (i.e., the fork portion of the OpenMP model); each thread simply reads and writes its own portion of the arrays in place.

Table 4: Breaking a Loop Across Threads

| Fortran | C |
| --- | --- |
| `!$omp parallel do`<br>`do i = 1, N`<br>`  a(i) = b(i) + c(i)`<br>`end do`<br>`!$omp end parallel do` | `#pragma omp parallel for`<br>`for (i = 0; i < n; i++) {`<br>`  a[i] = b[i] + c[i];`<br>`}` |

Once the code inside the region finishes, the threads wait for one another at the end of the region (i.e., the synchronization part of the OpenMP model); then, the team is disbanded and the master thread continues with the next instructions.

To gain the most performance from your code, you want to put as much work as possible in the parallel region. If the amount of work is too small, the parallel version could take as long as or longer than the serial code, because creating the threads, dividing up the iterations, and synchronizing at the end of the parallel loop can take more time than simply running a serial section of code.

Nested Loops

Assume you have nested loops in your code as shown in Table 5, and try to determine where you would put your parallel region and loop directive for these nested loops. To give each thread the most work (j*k = 100 inner iterations for every value of i), you would probably put the parallel region around the outside loop (Table 6); otherwise, each thread has less work (fewer loop iterations), and you aren't taking advantage of parallelism. To convince yourself, put the parallel do directive around the innermost loop, and you’ll see that the parallel region is created and destroyed 100 times, each time running only the 10 iterations of the innermost loop.

Table 5: Serial Code with Loops

| Fortran | C |
| --- | --- |
| `do i = 1, 10`<br>`  do j = 1, 10`<br>`    do k = 1, 10`<br>`      A(i,j,k) = i * j * k`<br>`    end do`<br>`  end do`<br>`end do` | `for (i = 0; i < 10; i++) {`<br>`  for (j = 0; j < 10; j++) {`<br>`    for (k = 0; k < 10; k++) {`<br>`      A[i][j][k] = i * j * k;`<br>`    }`<br>`  }`<br>`}` |

Table 6: Parallel Code with Loops

| Fortran | C |
| --- | --- |
| `!$omp parallel do`<br>`do i = 1, 10`<br>`  do j = 1, 10`<br>`    do k = 1, 10`<br>`      A(i,j,k) = i * j * k`<br>`    end do`<br>`  end do`<br>`end do`<br>`!$omp end parallel do` | `#pragma omp parallel for`<br>`for (int i = 0; i < 10; i++) {`<br>`  for (int j = 0; j < 10; j++) {`<br>`    for (int k = 0; k < 10; k++) {`<br>`      A[i][j][k] = i * j * k;`<br>`    }`<br>`  }`<br>`}` |

Getting Started

With the one directive omp parallel do, you can take advantage of great sources of parallelism in your loops and possibly improve the performance of your code. Before jumping in, you should be prepared to take several steps.

First, create one or more “baseline” cases with your code before using OpenMP directives. The baseline cases can help you check that your code is getting the correct answers once you start porting. One way to do this is to embed a test in your code that checks the answers, as is done in HPL (high-performance Linpack), which is used for the TOP500 benchmark. At the end, the test tells you whether the answers are correct. Another approach I tend to use is to write the code results to a file for the baseline case and then compare those with the results once you start porting, as I explained in the last OpenACC article. The exact same approach can be used with OpenMP.

Second, put timer functions throughout the code that get the wall clock time when called. At a minimum, you can use one at the beginning of the code and one at the end. The difference between the two is generally called the wall clock or run time for the application. If you are interested in understanding how the directives affect the execution time for different parts of your code, you can put timers around those parts. Do as much as you want, but don’t go too crazy.

Third, optionally use a profiler that can tell you how much total time is used in each routine of the code. Several profilers are available – primarily those that came with the compiler you are using.

Fourth, run the serial code with the timers or use the profiler to get a stack rank of which routines took the most time. These are the routines you want to target first because they take the most time. I discussed this process in the last OpenACC article, and the same procedure can be used with OpenMP.

I invite you to go forth and create parallel code with omp parallel do. If you want to get really crazy, you can mix OpenMP and OpenACC on your code. (I double dog dare you.)