How Persistent Memory Will Change Computing

Persistent memory is non-volatile data storage in DIMM format, so losing power doesn't mean losing data. What is it, and how can you use it?

The same data storage hierarchy has been in use for many years, and it seems to work quite well. However, a new kind of data storage is coming that will disrupt this hierarchy and force developers, users, and admins to rethink how and where they store data. The general term for this storage is NVRAM (Non-Volatile Random Access Memory). In this article, I focus on something reasonably new – and most probably disruptive – called persistent memory (PM).

Looking back at the history of processors, you can see that layers of caching were added to improve performance. The first level of data storage is the CPU registers, which have the highest bandwidth and lowest latency of any storage in the system. However, the amount of storage is very small because of cost (the registers are part of the CPU). Before caches, data had to be retrieved either directly from main memory (DRAM) or from disk storage, resulting in slow data access and, therefore, slow performance.

To improve performance, caching was added to processors. Between the registers and main memory, Level 1 (L1), Level 2 (L2), and even L3 and L4 caches have been added. Typically, the L1 cache is part of each processor core and can store more data than the registers, but it is slower and has higher latency than the registers.

The L2 cache is a bit larger than the L1 cache, but it is also a bit slower and has higher latency. Sometimes it is shared across two or more cores. The L3 cache, the next level after L2, is slower yet and has higher latency than the L2 cache. Typically, the L3 cache is shared by all cores on the processor.

Below the processor caches sits DRAM, or main memory. It can be fairly large, but again it has lower performance and higher latency than the caches. I hope you are noticing a trend.

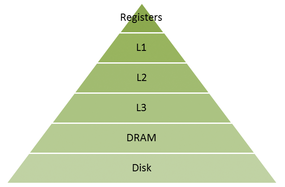

All the registers, caches, and memory are volatile. If power is lost, the data stored in them is lost. On the other hand, storage that can survive a loss of power is typically disk or flash. The data in the system is written to or read from this storage layer so it can be used at a later time. This level of data storage has the largest capacity, the slowest performance, and the highest latency in the data hierarchy up to this point. Moreover, it is typically centralized outside the system, a very important distinction. Figure 1 presents the levels of data storage starting with the registers at the top and moving down the pyramid to disk storage.

The top of the pyramid has the fastest storage (highest throughput and lowest latency). As you move down the pyramid, data storage capacity increases – as indicated by the width of the level – while the cost per gigabyte and the performance both decrease compared with the level above. The data storage is also volatile until you reach the final disk layer (which can be flash storage, too). This layer allows you to lose power without losing data, but the trade-off is that, although this layer has the largest capacity and the best cost per gigabyte, it is also the slowest and has the highest latency.

An issue developers face every day is how to move data efficiently through these storage layers so that applications can run with the best possible performance. Often, the data movement is controlled by the code generated by the compiler and code embedded within the system. That is, you don't have a great deal of direct control over data movement. However, that might be a good thing, because it would be a huge amount of work to write application code to move data up and down the hierarchy.

Where Does Persistent Memory Fit?

NVRAM is simply data storage that does not lose data if the system loses power. The most common example is flash memory. Flash has been available for a while, but the storage is outside the system and has worse performance than conventional DRAM. Persistent memory, on the other hand, sits in the system DIMM slots but doesn't lose data if power is lost.

Efforts to create PM have been made in the past, but the latest innovation, as typified by Intel and Micron's 3D XPoint PM [1], is part of the system. Most commonly, the data storage is put into the DRAM slots. If the power is removed, the data stored in the PM will be there when power is restored (this is where the term "persistent" comes from).

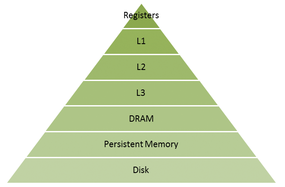

In the data storage hierarchy, persistent memory sits between DRAM and disk storage, as shown in Figure 2. Generally, persistent memory doesn't have the same performance as DRAM, meaning that it is a bit slower and has slightly higher latency, but again, it doesn't lose data if power is lost. Moreover, it will likely have more capacity than DRAM (i.e., terabytes instead of gigabytes).

Before PM, the "gap" between disk storage and DRAM was very large. Persistent memory, however, is much closer to DRAM performance than to disk or flash performance, so the large gap now lies between disk storage and PM rather than directly below DRAM. This technology will change the data storage hierarchy.

Instead of having non-volatile storage only at the bottom, with the largest capacity but the slowest performance, non-volatile storage is now very close to DRAM in terms of performance. Systems using PM will force people to think about how and where they store data. Should you write applications that use PM exclusively and only use DRAM as a "cache"? Or maybe you can use both PM and DRAM to get a very large pool of addressable memory or even use PM as storage? As you can see, PM will be very disruptive to how we think about programming, where we store data, and how we move data around the system.

As with every new technology, PM has positives and negatives (Table 1). With these points in mind, I'll talk about possible ways you might use PM.

Persistent Memory as Memory

The Wikipedia article on 3D XPoint [1] states that it is bit addressable, which means you can read and write it as if it were memory. In terms of capacity, persistent memory will be roughly an order of magnitude larger than DRAM. At the extreme, you can buy systems with 1-2TB of memory, but you probably won't buy many of them because of cost. Typical compute nodes are in the 64-256GB range. Persistent memory will be on the order of several terabytes at first, so it's roughly an order of magnitude larger than the memory in a typical node.

This extra capacity presents an opportunity. You could use it as memory that is slower than DRAM. If memory bandwidth is not a bottleneck for your application, then this could be a great way to increase the memory capacity at a lower price than DRAM. Given the memory capacity, you also might be willing to sacrifice a little memory bandwidth performance for much larger memory capacity. The final decision will most likely depend on how much performance is lost going from DRAM to PM.
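
One way to picture memory-style access to persistent storage is a memory-mapped file. The following is only a minimal sketch, not a description of how any particular PM product is programmed. It assumes the PM is exposed as a file on a filesystem mounted at the hypothetical path `/mnt/pmem`; the helper names `open_region` and `bump_counter` are made up for illustration. On ordinary storage, the same code still runs, but the mapping goes through the page cache instead of directly to the media.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical mount point for a filesystem backed by persistent memory.
PMEM_DIR = "/mnt/pmem"
REGION_SIZE = 4096  # one page is enough for this demonstration


def open_region(path, size=REGION_SIZE):
    """Map a file into the address space, creating it if needed."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.ftruncate(fd, size)  # a new file is zero filled
        return mmap.mmap(fd, size)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed


def bump_counter(path):
    """Increment a 64-bit counter stored at offset 0 and return the new value."""
    region = open_region(path)
    try:
        (count,) = struct.unpack_from("<Q", region, 0)  # read from the mapping
        struct.pack_into("<Q", region, 0, count + 1)     # write to the mapping
        region.flush()  # ask the OS to write the update back to the file
        return count + 1
    finally:
        region.close()


if __name__ == "__main__":
    # Fall back to an ordinary temporary directory when no PM mount exists.
    usable = os.path.isdir(PMEM_DIR) and os.access(PMEM_DIR, os.W_OK)
    base = PMEM_DIR if usable else tempfile.mkdtemp()
    path = os.path.join(base, "counter.bin")
    print("counter is now", bump_counter(path))
```

Because the counter lives in the mapped file rather than in process memory, running the script again against the same file continues counting where the previous run stopped.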

In the past, an effort was made to create SSDs that fit in DRAM slots, with the hope of greatly increasing memory capacity while keeping the price down. What most users found was that the decrease in memory bandwidth (memory performance) was much larger than they anticipated. As a result, "SSD memory" didn't really go anywhere, because application performance was adversely affected to the point of frustrating users.

However, PM, like Intel's 3D XPoint, is much faster than SSDs and has much lower latency. Consequently, it could be a good trade-off between performance, cost, and memory capacity. The only real way to know is to test your applications on systems with PM.

Persistent Memory for Filesystems

You can also treat PM as storage [2], so you can use it as raw storage or create a filesystem on it. Using PM for storage is basically the same idea as using a RAM disk, with one very important difference: When the power is turned off, the data is not lost. To the operating system, the PM looks like conventional block storage, and a filesystem can be built using that block storage. The two most obvious ways to deploy a filesystem (storage) on PM in servers are to use it as local scratch space (local filesystem) or as part of a distributed filesystem (parallel or not).

In both cases, realize that you are taking very fast storage (almost as fast as DRAM) and layering software on top of it. This adds latency and reduces bandwidth into and out of the PM. I like to say that you are "sullying the hardware with software," but to create a usable storage system, you are forced to do this. Ideally, you want some sort of filesystem that is very lightweight and imposes little effect on performance.

An important point to think about in this regard is POSIX. If it takes a somewhat large amount of software to allow a POSIX filesystem to use PM, resulting in much larger latencies, is it worth it? POSIX gives you compatibility and a common interface, so you can easily move applications from system to system. If the alternative is a specific library that has to be linked to your application that allows you to take advantage of the amazing performance, and you lose easy compatibility, forcing you to rewrite the I/O portions of your application, is it worth it? The ultimate answer is that it depends on your applications; however, think about the price you would be willing to pay in terms of performance to gain POSIX compatibility.

Using PM for local storage could be a big win for applications that write out local temporary files. Examples include out-of-core solvers that need to write temporary data to storage (e.g., finite element methods (FEM) or databases). Using PM as really fast local storage allows you to take existing applications and improve their performance quickly. However, you also have the option of using PM as extra memory and not using an out-of-core algorithm if the problem can fit into total memory, including PM. Of course, some problems won't fit into memory, requiring some sort of local storage.
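
The scratch-space idea can be sketched in a few lines. This is an illustration under stated assumptions, not a real solver: the mount point `/mnt/pmem_scratch` is hypothetical, as are the helper names `scratch_dir` and `out_of_core_sum`, and the "computation" is just a sum. The point is that an application that already spills temporary data to files only needs to be told where the fast scratch directory is.

```python
import array
import os
import tempfile

# Hypothetical mount point for a filesystem built on persistent memory.
PMEM_SCRATCH = "/mnt/pmem_scratch"


def scratch_dir():
    """Prefer the PM-backed scratch directory; otherwise use the system default."""
    if os.path.isdir(PMEM_SCRATCH) and os.access(PMEM_SCRATCH, os.W_OK):
        return PMEM_SCRATCH
    return tempfile.gettempdir()


def out_of_core_sum(chunks, read_items=1024):
    """Spill chunks of doubles to a scratch file, then stream them back to sum them."""
    item_size = array.array("d").itemsize
    with tempfile.TemporaryFile(dir=scratch_dir()) as spill:
        # Write phase: each chunk goes to the scratch file instead of staying in RAM.
        for chunk in chunks:
            array.array("d", chunk).tofile(spill)
        # Read phase: bring back only read_items values at a time.
        spill.seek(0)
        total = 0.0
        while True:
            data = spill.read(read_items * item_size)
            if not data:
                break
            block = array.array("d")
            block.frombytes(data)
            total += sum(block)
        return total


if __name__ == "__main__":
    print(out_of_core_sum([[1.0, 2.0], [3.0, 4.5]]))
```

If the whole problem fits into DRAM plus PM used as memory, the spill file becomes unnecessary, which is the alternative described above.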

You can also use the PM in servers to create a distributed filesystem. Immediately, parallel filesystems like Lustre, OrangeFS, or GPFS spring to mind. However, you need to consider carefully what you are about to do. If you use PM as part of a distributed filesystem and a server loses power, you don't lose any data, but you could lose access to the data. Unless the filesystem employs RAID or replication across servers, you won't be able to access the data on that server until power is restored.

Additionally, you have to consider moving data from the network interface to PM via the filesystem. With PM, you have extremely fast storage, and the I/O bottleneck might just have moved to the network interface or somewhere else in the system. Moving the bottleneck is inevitable, but you need to be ready for it to appear somewhere else in the system.
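
A back-of-the-envelope way to see the bottleneck move is to treat the data path as a chain of stages whose sustained throughput is limited by the slowest one. In the sketch below, every bandwidth value is a hypothetical placeholder chosen only for illustration, not a measurement of any real network, filesystem, or PM device, and the `bottleneck` helper is made up for this example.

```python
def bottleneck(stages):
    """Return the (name, bandwidth) pair of the slowest stage in a data path."""
    return min(stages.items(), key=lambda item: item[1])


# Hypothetical bandwidths in GB/s, for illustration only.
disk_path = {"network": 5.0, "filesystem": 20.0, "storage": 0.5}
pm_path = {"network": 5.0, "filesystem": 20.0, "storage": 30.0}

for label, path in (("disk-backed", disk_path), ("PM-backed", pm_path)):
    name, bandwidth = bottleneck(path)
    print(f"{label}: limited by {name} at {bandwidth} GB/s")
```

With these made-up numbers, swapping the storage stage for something much faster leaves the network as the limiting stage, which is the situation the paragraph above warns about.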

Performance

The performance of PM is always under discussion, particularly in the case of 3D XPoint, because it is so close to release. In this case, performance has always been discussed in general terms:

- 1,000 times the performance of NAND flash

- 1,000 times the endurance of NAND flash

- 10 times the density of DRAM

- A price between flash and DRAM

In addition to the DIMM form factor, Intel is going to release 3D XPoint under the Optane brand in the form of SSDs. Recently, Intel gave a demonstration of these SSDs at the OpenWorld conference, hosted by Oracle.

The results were summarized in an article online [3].

Brian Krzanich, CEO at Intel, talked about Optane and finally gave the world some performance numbers, although they are for the Optane SSD and not the Optane DIMMs. Krzanich was demoing on what looked like a 1U two-socket server from Oracle that had two Intel Xeon E5v3 "Haswell" processors. One processor used an Intel P3700 SSD and a prototype Optane SSD. Both drives were connected to the system using NVMe [4] links to improve performance. The size of the P3700 SSD was not given, but by referencing Newegg [5], the following details were found:

- Capacity: 400GB

- Price: $909 (as of the writing of this article)

- Up to 450,000 4K random read IOPS

- Up to 75,000 4K random write IOPS

- Maximum sequential read: up to 2,700MBps

- Maximum sequential write: up to 1,080MBps
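
To put the listed figures on a common scale, the random IOPS numbers can be converted to throughput and the price to a cost per gigabyte. This is simple arithmetic on the values quoted above; the only assumptions are that "4K" means 4,096 bytes and that 1MB means 10^6 bytes, and the function name `iops_to_mbps` is made up for this example.

```python
# Figures quoted above for the 400GB Intel P3700.
CAPACITY_GB = 400
PRICE_USD = 909
READ_IOPS = 450_000
WRITE_IOPS = 75_000

# Assumptions: "4K" is 4,096 bytes and 1MB is 10**6 bytes.
BLOCK_BYTES = 4096
BYTES_PER_MB = 10**6


def iops_to_mbps(iops, block_bytes=BLOCK_BYTES):
    """Convert an IOPS figure into the equivalent throughput in MBps."""
    return iops * block_bytes / BYTES_PER_MB


random_read_mbps = iops_to_mbps(READ_IOPS)    # about 1,843MBps
random_write_mbps = iops_to_mbps(WRITE_IOPS)  # about 307MBps
price_per_gb = PRICE_USD / CAPACITY_GB        # about $2.27 per GB

print(f"4K random read:  {random_read_mbps:.1f} MBps")
print(f"4K random write: {random_write_mbps:.1f} MBps")
print(f"Price per GB:    ${price_per_gb:.2f}")
```

Under these assumptions, the peak 4K random read rate corresponds to roughly two-thirds of the quoted maximum sequential read throughput, and the random write rate to under a third of the sequential write maximum.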

Two benchmarks or tests were shown, although the details of the tests were not given. Both tests compared the IOPS and latency using the P3700 SSD versus the Optane SSD. The results are summarized in Table 2.

Because the data path to and from the drives is the same (NVMe), the source of the differences is mostly in the drives themselves. The Optane drive is clearly much faster than the current P3700 SSD. Based on these results, I can't wait for the DIMM performance numbers to come out!

Summary

Persistent memory holds the promise of non-volatile data storage with performance almost comparable to DRAM at a lower cost than DRAM. A key attribute of PM is that if the system loses power for some reason, the data stored in the PM (e.g., on DIMMs containing the PM) is not lost, as it would be with DRAM.

Persistent memory fits into a system's data storage hierarchy above permanent storage, such as disk and flash drives, but below DRAM. The non-volatile nature of PM means that it bridges the gap between storage that is either on the bus or outside the system and DRAM that is inside the system. This could be a really disruptive development for data storage. Be sure to keep an eye on it.

- [1] 3D XPoint: https://en.wikipedia.org/wiki/3D_XPoint

- [2] Layton, J., and Barton, E. "Fast Forward Storage & IO": http://storageconference.us/2014/Presentations/Panel3.Layton.pdf

- [3] Intel Shows Off 3D XPoint Memory Performance: http://www.nextplatform.com/2015/10/28/intel-shows-off-3d-xpoint-memory-performance/

- [4] NVMe: https://en.wikipedia.org/wiki/NVM_Express

- [5] Intel P3700 SSD: http://www.newegg.com/Product/Product.aspx?Item=N82E16820167232&cm_re=Intel_P3700_SSD-_-20-167-232-_-Product