© gortan123, 123RF.com

Review: Accelerator card by OCZ for ESX server

Turbocharger for VMs

OCZ advertises its VXL storage acceleration software with crowd-pulling claims: It works without special agents for applications on any operating system, reduces traffic to and from the SAN by up to 90 percent, and allows up to 10 times as many virtual machines per ESX host as would be possible without a cache. The ADMIN test team decided to take a closer look.

The product consists of an OCZ Z-Drive R4 solid-state storage card, which occupies a PCI Express slot in the virtualization host, and the associated software. The software takes the form of a virtual machine that runs on the ESX server you want to accelerate; this component handles the actual caching.

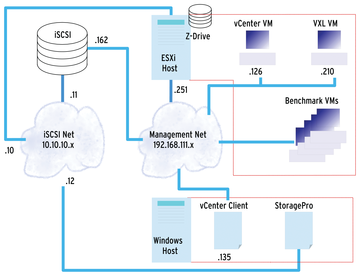

In addition, a Windows application is used to manage the cache settings. The virtual machine runs Linux and starts a software tool called VXL. Both the Windows application (which, incidentally, can also run in a virtual machine) and the VXL virtual machine need access to a separate iSCSI network that connects the storage to be accelerated to the virtualization host (Figure 1). The administrator communicates with the ESX server, its VMs, and the VXL components over a second network reserved for management.
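To make the two-network layout easier to follow, here is a minimal Python sketch that records which component sits on which network. It is only an illustration of the setup described above, not part of the OCZ software: the component names, the `Component` class, and the `members` helper are all hypothetical, and placing the SAN on the iSCSI network only and the administrator's workstation on the management network only is an assumption.

```python
from dataclasses import dataclass

# Network labels are illustrative names, not VXL configuration keys.
ISCSI = "iscsi"
MGMT = "management"


@dataclass(frozen=True)
class Component:
    name: str
    networks: frozenset


# Membership as described in the text; the last two entries are assumptions.
COMPONENTS = (
    Component("ESX host", frozenset({ISCSI, MGMT})),
    Component("VXL virtual machine", frozenset({ISCSI, MGMT})),
    Component("Windows management application", frozenset({ISCSI, MGMT})),
    Component("SAN storage", frozenset({ISCSI})),                # assumption
    Component("Administrator workstation", frozenset({MGMT})),   # assumption
)


def members(network):
    """Return the names of all components attached to the given network."""
    return [c.name for c in COMPONENTS if network in c.networks]


if __name__ == "__main__":
    for net in (ISCSI, MGMT):
        print(net, "->", ", ".join(members(net)))
```

A listing like this is a quick way to check a planned installation: both VXL components must appear on the iSCSI network and on the management network before the cache can be configured.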

Knowing How

I'll start by saying that the software configuration is

...