How Linux and Beowulf Drove Desktop Supercomputing

Open source software and tools, the Beowulf Project, and communities changed the face of high-performance computing.

Just about every Linux user has a gut feeling for what open source means, especially in regard to what they do and what it means to them. The rise of free software and open source software has had a profound effect on nearly every technology in existence, especially on high-performance computing. In this article, I discuss some history of free and open source software and focus on two key projects: Linux and Beowulf.

Begin at the Beginning

An argument can be made that the open source software movement started with the birth of the Internet (as ARPANET) in 1969. This development allowed computer systems to communicate: to share, send, and receive data. To accomplish this, software standards had to be developed, and each system had to follow them. Proprietary computer and software companies would have to follow these standards if their systems or tools were to work with those of their competitors. The track record of competing companies adhering to a software standard had been abysmal; therefore, an argument can be made that the best, or only, way for companies to follow a standard is through open source software.

Free Software

TeX, the typesetting tool first released in 1978, is probably the first large-scale software to be released under a permissive license. The project was started, and is still led, by Donald Knuth, one of the shining stars of computer science. In developing TeX, he adhered to two principles: “… to allow anybody to produce high-quality books with minimal effort, and to provide a system that would give exactly the same results on all computers, at any point in time.” To achieve these two goals, he released TeX with a very permissive license and indicated that the source code of TeX is in the public domain.

The story of open source effectively starts with GNU. Although it might not be the first open source set of software tools, it is close to the beginning. More importantly, it is where the concept of free software started. GNU (an acronym for GNU’s Not Unix) was launched in September 1983 by Richard Stallman with the initial goal of writing a Unix-like operating system. Stallman’s tenet was “software should be free.” He wanted users to be free to read the source code and study it. They could also share the software, modify it, and release their modified software. To GNU, “free” does not refer to the price of software, but rather to a matter of liberty. The GNU project explains its use of free as: “... you should think of ‘free’ as in ‘free speech’, not as in ‘free beer’.”

Stallman, if you didn’t know, has uncompromising opinions and ethics; he has defined himself as a force of nature and carried that drive into GNU. Although he’s not the sole author of software within GNU, he has created a formidable project with a massive number of Unix-like tools written under the GNU umbrella. Many Linux tools are GNU tools (e.g., GCC and Octave). The GNU tools took Linux from just a kernel to a complete operating system.

In October 1985, Stallman set up the Free Software Foundation (FSF), a non-profit organization created to support the free software movement, which includes the “… universal freedom to study, distribute, create, and modify computer software … .”

Up to the mid-1990s, FSF primarily used its funds to pay software developers to write free (in terms of liberty and not price) software for the GNU project. From the mid-1990s to today, FSF mostly works on legal and structural issues for the free software movement and community.

Open Source Software

Open Source Software (OSS) is a bit different from free software. Generally, it is defined as, “… a type of computer software in which source code is released under a license in which the copyright holder grants users the rights to use, study, change, and distribute the software to anyone and for any purpose … .” Although different from the free software movement, its roots definitely started there. In 1997, Eric Raymond published an essay, “The Cathedral and the Bazaar” (later published as a book), which was based on his experiences in the open source project Fetchmail, as well as his observations of the Linux kernel development process.

The essay was a factor in Netscape Communications Corporation’s decision to release their Netscape Communicator Internet Suite as free software, which in turn caused a group of people to hold a strategy session to discuss open source software development – in particular, how they felt the open development process would fare relative to other processes. Note that the emphasis was on the software development model.

This strategy session then led to the founding of the Open Source Initiative (OSI) in 1998. Although related to the free software movement, it chose to use the phrase “open source” to “… dump the moralizing and confrontational attitude that has been associated with ‘free software’ … ” (Maher, Jennifer Helene. Software Evangelism and the Rhetoric of Morality: Coding Justice in a Digital Democracy. Taylor & Francis, 2015, p. 69). Very quickly, the term “open source” was adopted by a wide range of people, including Linus Torvalds.

Licenses

Open source remains strong to this day through a variety of licenses. An interesting aspect of open source licenses is that the software is usually released for free in terms of cost, but that is not always the case. The most common open source licenses are those that the OSI has approved according to its Open Source Definition (OSD).

You might be familiar with some of the most common licenses, such as the Apache License, BSD 3-Clause License, GNU General Public License (GPL, a “copyleft” license, a term that plays on “copyright”), GNU Lesser General Public License (LGPL), and MIT License. These aren’t the only licenses, but they are commonly used. Notice that open source licenses can include free licenses, such as GPL and LGPL.

A quick glance at free versus open source software might result in the conclusion that they are the same. Although very close, they have important differences. Free software emphasizes the “free as in speech” aspect of software; open source software emphasizes the development model (i.e., open collaboration for all).

Many people don’t know that FSF holds the copyrights on many GNU tools, such as GCC. As a result, it can enforce the copyleft requirements of the GPL when copyright infringement occurs on that software.

Open Source Observations

Free and open source licenses are important in general, and in particular to desktop supercomputers. Using free/open source licensing allows millions of people to study, learn, and even contribute to a project in the form of direct coding, porting, testing, or documentation. Moreover, some companies pay people to work on free and open source software, but a very large percentage of contributors are volunteers who want to work on a specific project. Whereas small focused teams paid to write specific software might be an expedient solution, an open source project can contribute to better, perhaps more secure, code and can even result in faster development because people are motivated by something other than getting paid: something that they personally feel is important, interesting, and fun.

Linux

Although most of you will know at least some of the history of Linux, I think it’s fairly important to review the history quickly, particularly in the context of the 1990s into the early 2000s. Without going back to the Multics operating system (OS), I’ll start with efforts to create PC versions of Unix.

In 1987, Professor Andrew Tanenbaum created MINIX, a Unix-like OS that he used for teaching operating systems. At that time, it was a 16-bit OS, although 32-bit processors were already available. Even though MINIX was not ported to 32-bit and was typecast as “educational,” it had a great effect on those wanting Unix on PC processors.

Torvalds began a project in 1991 to write a kernel for his hardware, a 32-bit Intel 80386 processor. He used MINIX and GCC as the development platform, and this initial project grew into an OS kernel, which he announced on August 25, 1991, on the comp.os.minix newsgroup.

Very quickly, components were added to this kernel, including those from the GNU project. I remember when networking was added because there was a great deal of excitement on the newsgroups. In 1992, the X Window System was ported to Linux, supplying a GUI. From then on, Linux usage exploded.

The success of Linux is based on a few factors, all of which combined to launch Linux as an operating system: (1) Its license was free (open source), allowing a veritable army of coders to contribute. (2) Unix had been in use for a long time at universities and research institutes. (3) Computer science students had been learning MINIX for years and writing Unix code. (4) The GNU project had created a great deal of code for Unix-like operating systems.

Beowulf

Beowulf clusters were born from the simple need for a commodity-based cluster system designed as a cost-effective alternative to large supercomputers. Jim Fischer at NASA Goddard created a project plan for the High-Performance Computing and Communications (HPCC)/Earth and Space Sciences project that included “a task for development of a prototype scalable workstation and leading a mass buy procurement for scalable workstations for all the HPCC projects.” The word “workstation” carries a very specific meaning, in that it is designed for individual users. Therefore, although perhaps not explicitly stated, the goal was to create a desktop cluster for use by individuals.

In 1994, on the basis of this project and task, Thomas Sterling and Don Becker built the first Beowulf cluster. It was a modest system consisting of 16 i486DX4 processors and 10Mbps Ethernet. The processors were too fast for a single 10Mbps Ethernet connection, so Becker, an author of Ethernet drivers for Linux, modified his Ethernet drivers for channel bonding two Ethernet interfaces to act as one. The initial system cost roughly $50,000.
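
To make the channel bonding idea concrete, here is a minimal Python sketch. It is not Becker's driver code: it assumes a simple round-robin policy (the article does not say which policy the original drivers used), and the names `bond_round_robin`, `aggregate_mbps`, and `LINK_MBPS` are hypothetical. It illustrates how alternating frames across two 10Mbps links spreads the load so that the pair can act as one faster link.

```python
from itertools import cycle

LINK_MBPS = 10  # per-interface speed from the article (10Mbps Ethernet)


def bond_round_robin(frames, interfaces):
    """Assign each frame to the next interface in turn (round-robin).

    Hypothetical helper for illustration only; returns a dict mapping
    each interface name to the list of frames it would transmit.
    """
    queues = {name: [] for name in interfaces}
    for frame, name in zip(frames, cycle(interfaces)):
        queues[name].append(frame)
    return queues


def aggregate_mbps(interfaces, link_mbps=LINK_MBPS):
    """Idealized upper bound: bonded links add their bandwidths."""
    return len(interfaces) * link_mbps


if __name__ == "__main__":
    # Six frames alternate between the two bonded interfaces.
    print(bond_round_robin(range(6), ["eth0", "eth1"]))
    print(aggregate_mbps(["eth0", "eth1"]), "Mbps (ideal)")
```

The 20Mbps figure for two links is an idealized upper bound; the sketch ignores protocol overhead and frame ordering, which a real driver has to handle.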

Sterling and Becker developed more systems, as did others. Within a year, the original components of the first Beowulf cost $28,000; other systems had moved on to faster Ethernet connections and faster processors, snowballing into larger systems with faster networks and faster processors with more cores.

One thing all of these systems had in common was that the software tools used in Beowulf clusters were free, open source, or both. They all used Linux (there were exceptions, of course, but these were small in number). The combination of free and open source software, Linux, and now Beowulf started driving clusters very, very quickly.

To clarify, there is no such thing as “Beowulf software.” Beowulf clusters are designed to be extremely flexible. You can use whatever software tools help you solve your problems. Some of these tools were developed with Beowulf clusters in mind, but their sum does not equal Beowulf software. Truly, Beowulf clusters comprise Linux, additional software tools and projects, best practices, tutorials, and a community whose members are willing to help each other.

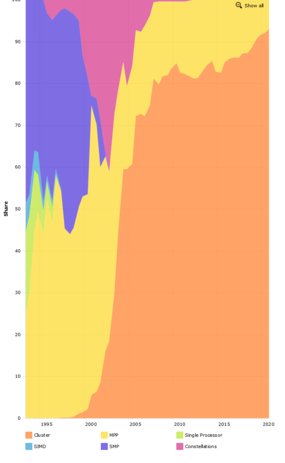

By the end of the 1990s, 28 clusters were in the TOP500. Figure 1 (source: TOP500 Development Over Time Statistics) shows a history of the system architectures on the list. Notice how quickly clusters dominated the list.

Summary

Open source software development has had a huge effect on high-performance computing (HPC, i.e., supercomputing). Beginning with the free software movement, epitomized by the GNU project, high-quality source code was available for use, study, modification, and extension, which led to even better software and techniques that improved HPC.

After the establishment of the free software movement, the open source movement developed, which is a bit different in that it is focused on a development model, not just fulfilling the definition of “free.” The movement showed the world that publishing source code can have a great effect on the quality of software and the resulting economic impact.

From these movements, a complete and powerful operating system was born: Linux. When Linux first came out, it was thought that it wouldn’t last very long or have much of an effect, but it’s safe to say that opinion was wrong. Linux is now arguably the dominant operating system for servers, particularly in HPC. By November 2017, 100% of the systems on the TOP500 list ran a Linux or Linux-family operating system.

Equally important to Linux in the HPC world is the Beowulf cluster. This project used commodity components coupled with Linux, other software tools, best practices, and a thriving community willing to help and share to create HPC systems that were orders of magnitude better than the previous generation of monolithic centralized supercomputers. Beowulf clusters then became the dominant HPC architecture, as evidenced by the TOP500 list.

An important aspect of Beowulf clusters is that their origin was targeted at workstations. The focus was on putting more performance in the hands of individual users and moving away from centralized single systems. Keep this in mind for the next article in the series.

Additional Resources

Levy, Steven. Hackers: Heroes of the Computer Revolution. Anchor Press/Doubleday, 1984

Raymond, Eric. The Cathedral and the Bazaar. O’Reilly Media, 1999

Wikipedia History of Linux

Digital Ocean concise history of Linux

Beowulf on the NASA website

ClusterMonkeyHPC (Doug Eadline) history of Beowulf clusters