NVDIMM and the Linux Kernel

Non-volatile dual in-line memory modules will provide storage as fast as RAM and keep its content through a reboot. The Linux kernel is already geared to handle the new technology and can even serve the modules up as block devices.

Many databases, such as Berkeley DB, Oracle TimesTen, Apache Derby, and MySQL Cluster, can be configured to run completely in their host's main memory. SAP HANA is even an in-memory-only database. Such products are used when you need a quick response despite high workload. Classic high-performance computing also likes to keep work data in RAM on the node so that CPUs do not have to wait too long for data from mass storage.

Because RAM content is always lost in a shutdown or failure, such systems need to be backed up periodically to a non-volatile medium, a process that is not especially reliable in practice and can slow the entire system. Non-volatile RAM would therefore be valuable, and it is coming to market soon as the non-volatile DIMM (NVDIMM). The role model was the buffer cache memory on RAID controllers, which keeps its content when the system is switched off to ensure the integrity of the data.

In December 2015, the JC-45 subcommittee of the JEDEC industry association published specifications for persistent memory modules that fit the sockets for DDR4 SDRAM DIMMs. However, motherboards need a BIOS that supports the modules. JEDEC envisages two approaches: NVDIMM-N backs up the content of its DRAM in case of a power outage and restores the data when the voltage is restored. NVDIMM-F behaves like an SSD attached to the memory bus.

Useful Hybrids

NVDIMM is a wanderer between two worlds. The modules are easily mistaken for DDR4 modules (Figure 1); only a backup capacitor (often larger), batteries in some cases, and a flash chip reveal their special nature. When the server shuts down, the capacitors or batteries keep the module powered until its content has been completely written to the flash chips. Newer technologies even promise to remove this step by making flash fast enough for direct use as main memory.

A typical use of NVDIMMs, besides the in-memory databases mentioned earlier, would be high-performance computing. Linux developers adapted the kernel to enable DMA transfers to and from NVDIMM – for example, to exchange data faster within a cluster using InfiniBand.

Not every data center needs to keep terabyte-scale databases in memory or calculate the volume of underground gas deposits; however, NVDIMMs can also help with setups that simply want to run storage caching using Bcache or dm-cache. Then, the NVDIMM hardware would act as a faster and safer buffer between the kernel's RAM buffer cache and slow, but large, hard drives or SSDs.

The Nearer, the Faster

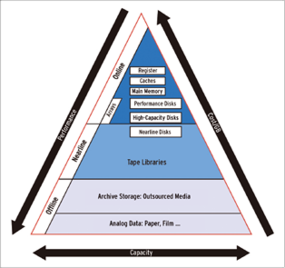

The traditional storage pyramid (Figure 2) shows the speed differences between technologies. In the case of DRAM, memory cells of capacitors are arranged to form a matrix, which the memory controller can address quickly by columns and rows. The controller is architecturally very close to the CPU and is connected to it via a fast parallel bus.

The CPU, however, typically addresses SSDs via serial attached SCSI (SAS) or serial ATA (SATA). These protocols need their own host bus adapters, which are usually connected to the CPU via PCI Express. Even if you avoid going through an additional chip, as is the case with NVMe or SCSI PQI, the kernel still needs to packetize the data in SCSI or NVMe packets, store the data in main memory, inform the target DMA controller (SSD or NVMe card) of the storage areas and initiate the DMA transfer (for the terminology, see Table 1).

Table 1: Glossary

| Acronym | Explanation |
|---|---|
| DIMM | Dual In-line Memory Module. |
| DMA | Direct Memory Access: A method of transferring data between main memory and hardware without the help of the CPU. |
| DRAM | Dynamic Random Access Memory: Volatile memory consisting of capacitors and typically used as main memory in PCs and servers. |
| HBA | Host Bus Adapter: Hardware interface that connects a computer to a bus or a network system. The term is commonly used, especially in the SCSI world, to describe the adapters that connect to the hard drive or the SAN (Storage Area Network). |
| NVDIMM | Non-Volatile Dual In-line Memory Module: Very fast DIMM memory for use either as main memory or as a mass storage device. |
| NVMe | Non-Volatile Memory Express: Flash memory connected via PCI (Peripheral Component Interconnect) Express, InfiniBand, or Fibre Channel with lower latency and higher throughput compared with traditional SSDs. |
| SCSI PQI | SCSI PCIe Queueing Interface. |

But wait! There's more. After successfully completing the transfer, an interrupt notifies the driver so that it can initiate the next transmission. These transfers must be the correct size, because a disk is a block device that only processes data of a specified package size, typically 512 bytes or 4096 bytes for large hard drives and flash media.

Block devices are at a disadvantage by design when it comes to handling large numbers of very small data units. Operating systems respond with counterstrategies: One is the page cache, which sits between the filesystem and the kernel's block I/O layer and keeps frequently used data in main memory. Another is the I/O scheduler, which tries to order and time access to the hardware as favorably as possible.

Low Latency and High Granularity

For some applications, these strategies are not enough. Hardware developers are therefore increasingly attempting to shift mass storage closer to the CPU. The popular statement by Microsoft Research employee Jim Gray puts it in a nutshell: "Tape is Dead, Disk is Tape, Flash is Disk, RAM Locality is King." Whereas earlier technologies moved storage ever closer to the memory controller, the new approach attaches mass storage directly to the memory controller, in parallel with main memory.

Having mass storage parallel to the main memory offers several advantages. For one, it unsurprisingly reduces latency significantly, that is, the delay between issuing an I/O request to the filesystem and receiving the first data. For another, block size becomes more or less irrelevant: Whereas access to classical block devices relies on fixed, fairly large blocks, NVDIMM storage is addressable byte- or word-wise, just like RAM. In practice, however, the even faster CPU caches determine main memory access behavior, so operations effectively have cache-line granularity.
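The difference in granularity can be put into numbers with a small sketch. The helper name `bytes_moved` is hypothetical, and the 64-byte cache line is an assumption that is typical for current x86 CPUs but not stated in this article; the 512- and 4096-byte sizes are the block sizes mentioned earlier.

```python
def bytes_moved(offset, length, unit):
    """Bytes transferred when the range [offset, offset + length) is
    updated on a medium that only moves whole units of `unit` bytes."""
    if length <= 0:
        return 0
    first = offset // unit                 # first unit touched
    last = (offset + length - 1) // unit   # last unit touched
    return (last - first + 1) * unit


if __name__ == "__main__":
    # An 8-byte update at byte offset 100, for three unit sizes.
    # 64 bytes is an assumed cache-line size, not a value from the article.
    for name, unit in [("512-byte block", 512),
                       ("4096-byte block", 4096),
                       ("64-byte cache line", 64)]:
        moved = bytes_moved(100, 8, unit)
        print(f"{name:>18}: {moved} bytes moved for an 8-byte update")
```

Under these assumptions, the same 8-byte update moves a full 4096-byte block on a block device but only a single cache line on byte-addressable memory; an update that straddles a unit boundary moves two units in either case.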

New Options

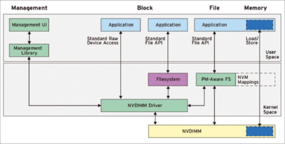

The access semantics differ enormously between NVDIMM and block-oriented devices such as SSDs and hard drives. NVDIMMs are connected like memory, and they behave similarly; the Linux kernel addresses them via RAM-oriented load/store operations. Drivers can also turn them into ultra-fast block devices, which are addressed via read/write operations. Together, the two access paths open up new possibilities for custom programs and the kernel.
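The two access styles can be contrasted from user space with a short sketch. It uses an ordinary temporary file as a stand-in (an assumption: on real hardware the file would live on a filesystem backed by an NVDIMM), and the function name `access_demo` is hypothetical. The point is only the difference in semantics: explicit read/write calls versus direct stores into a memory mapping.

```python
import mmap
import os
import tempfile


def access_demo(size=4096):
    """Write and read the same file twice: once with read/write system
    calls, once with load/store access through a memory mapping."""
    # A temporary file stands in for a file on an NVDIMM-backed filesystem.
    with tempfile.NamedTemporaryFile() as f:
        fd = f.fileno()
        os.ftruncate(fd, size)

        # Block-style semantics: explicit calls that copy via a buffer.
        os.pwrite(fd, b"block", 0)
        via_read = os.pread(fd, 5, 0)

        # Memory-style semantics: map the file and touch bytes directly.
        with mmap.mmap(fd, size) as m:
            m[0:5] = b"store"          # a plain store into the mapping
            via_load = bytes(m[0:5])   # a plain load from the mapping
            m.flush()                  # ask the kernel to write back
        return via_read, via_load


if __name__ == "__main__":
    print(access_demo())  # (b'block', b'store')
```

On a regular filesystem, the mapping is still served from the page cache; the sketch therefore shows the programming model, not the performance of real NVDIMM hardware.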

The only filesystems that use direct access (DAX) at the moment are XFS and ext4. They can bypass the classic kernel request semantics for block devices and communicate directly with the NVDIMM modules, thus eliminating the detour through classical RAM; the CPU also no longer needs to handle DMA completion IRQs.

Not with RAID

The approach has one disadvantage: Because the filesystems address storage directly, the option of duplicating data in the style of software RAID is no longer available. If you need to store your data redundantly, you cannot use DAX. The software RAID drivers never see the data, because it is ideally created and saved directly on the NVDIMMs, which removes the copy step from DRAM to the NVDIMMs.

If you also want to bypass the kernel, then check out the NVM Library (NVML) project. The library for C and C++ abstracts the specifics of NVDIMMs for the programmer and thus supports direct access to the hardware. This is especially suitable for back-end databases that require the speed of in-memory databases as well as a short startup time. The records already exist here and do not need to be copied into main memory. Figure 3 provides an overview of the software architecture of Linux NVDIMM support.

Integrity in the Arena

If the integrity of your data is more important to you than speed, you need to check out the NVDIMM Block Translation Table (BTT) subsystem in the kernel. BTT describes the method for accessing NVDIMMs atomically, in the manner typical of SCSI devices. The method either completes operations or it doesn't – without any in-between states.

If a BTT resides on the NVDIMM, the kernel divides the available storage space into areas of up to 512GB, known as arenas. Each arena starts with the 4KB arena info block. Each arena ends with the BTT map and the BTT flog (a portmanteau of "free list" and "log"), as well as a 4KB copy of the arena info block. In between, the actual data can be found.

The BTT map is a simple table that translates a logical block address (LBA) to the internal blocks of the NVDIMM. The BTT flog is a list that maintains the free blocks on the NVDIMM. Any write access to a BTT-managed NVDIMM first goes to a free block from the flog. Linux only updates the BTT map if the write was successful. If a power failure occurs during the write process, the old version of the data remains valid, because the BTT map still points to it.
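The write path just described can be illustrated with a toy model. This is a simplified sketch, not the kernel's on-media format: the class `ToyBttArena`, its fields, and the `fail_before_commit` switch are hypothetical names invented for illustration, and the free list holds only a single spare block.

```python
class ToyBttArena:
    """Toy model of a BTT arena: data blocks, an LBA map, and a free list."""

    def __init__(self, n_lbas, block_size=512):
        self.block_size = block_size
        # One spare internal block beyond the externally visible LBAs.
        self.blocks = [bytes(block_size) for _ in range(n_lbas + 1)]
        self.map = list(range(n_lbas))   # LBA -> internal block number
        self.free = [n_lbas]             # stand-in for the flog's free list

    def read(self, lba):
        return self.blocks[self.map[lba]]

    def write(self, lba, data, fail_before_commit=False):
        if len(data) != self.block_size:
            raise ValueError("writes must cover exactly one block")
        # Step 1: write to a free block; the mapped block stays untouched.
        new = self.free.pop()
        self.blocks[new] = bytes(data)
        if fail_before_commit:
            # Simulated power failure: the map was never updated, so the
            # half-finished block simply remains on the free list.
            self.free.append(new)
            return False
        # Step 2: commit by switching the map entry; the old block is freed.
        old = self.map[lba]
        self.map[lba] = new
        self.free.append(old)
        return True


if __name__ == "__main__":
    arena = ToyBttArena(n_lbas=4)
    arena.write(2, b"A" * 512)
    arena.write(2, b"B" * 512, fail_before_commit=True)
    print(arena.read(2)[:1])  # b'A'
```

Because a reader only ever follows the map, it sees either the complete old block or the complete new block, which is the all-or-nothing behavior the BTT is meant to provide.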

Testing with Linux

You can test NVDIMM on a Linux system as of kernel 4.1, but versions 4.6 or later are recommended. If you like, you can emulate NVDIMM hardware without the physical hardware using Qemu, or you can assign part of main memory to the NVDIMM subsystem by adding the memmap parameter to the kernel command line in the boot manager. Without appropriate hardware, write operations in this area are naturally not persistent.

Listing 1: Showing Memory with dmesg

    # dmesg | grep BIOS-e820
    [ 0.000000] e820: BIOS-provided physical RAM map:
    [ 0.000000] BIOS-e820: [mem 0x0000000000000000-0x000000000005efff] usable
    [ 0.000000] BIOS-e820: [mem 0x000000000005f000-0x000000000005ffff] reserved
    [ 0.000000] BIOS-e820: [mem 0x0000000000060000-0x000000000009ffff] usable
    [ 0.000000] BIOS-e820: [mem 0x00000000000a0000-0x00000000000fffff] reserved
    [ 0.000000] BIOS-e820: [mem 0x0000000000100000-0x0000000077470fff] usable
    [ 0.000000] BIOS-e820: [mem 0x0000000077471000-0x00000000774f1fff] reserved
    [ 0.000000] BIOS-e820: [mem 0x00000000774f2000-0x0000000078c82fff] usable
    [ 0.000000] BIOS-e820: [mem 0x0000000078c83000-0x000000007ac32fff] reserved
    [ 0.000000] BIOS-e820: [mem 0x000000007ac33000-0x000000007b662fff] ACPI NVS
    [ 0.000000] BIOS-e820: [mem 0x000000007b663000-0x000000007b7d2fff] ACPI data
    [ 0.000000] BIOS-e820: [mem 0x000000007b7d3000-0x000000007b7fffff] usable
    [ 0.000000] BIOS-e820: [mem 0x000000007b800000-0x000000008fffffff] reserved
    [ 0.000000] BIOS-e820: [mem 0x00000000fed1c000-0x00000000fed1ffff] reserved
    [ 0.000000] BIOS-e820: [mem 0x0000000100000000-0x0000000c7fffffff] usable

A dmesg command shows free areas (Listing 1). Areas marked usable can be used by the NVDIMM driver. The last line shows a usable area of 46GiB that extends from the 4GiB mark to the 50GiB mark. Adding the kernel parameter

memmap=16G!4G

reserves 16GiB of RAM starting at 4GiB (0x0000000100000000) and hands it over to the NVDIMM driver. Once you have modified the kernel command line and rebooted the system, you will see an entry similar to Listing 2 in your kernel log. The storage is clearly divided: The kernel has tagged 0x0000000100000000 to 0x00000004ffffffff (4-20GiB) as persistent (type 12). The /dev/pmem0 device shows up after loading the driver. Now, working as root, you can type

mkfs.xfs /dev/pmem0

to create an XFS filesystem and mount as usual with:

mount -o dax /dev/pmem0 /mnt

Note that the mount option here is dax, which enables the aforementioned DAX functionality.

Listing 2: NVDIMM Memory After memmap

    [ 0.000000] user: [mem 0x0000000000000000-0x000000000005efff] usable
    [ 0.000000] user: [mem 0x000000000005f000-0x000000000005ffff] reserved
    [ 0.000000] user: [mem 0x0000000000060000-0x000000000009ffff] usable
    [ 0.000000] user: [mem 0x00000000000a0000-0x00000000000fffff] reserved
    [ 0.000000] user: [mem 0x0000000000100000-0x0000000075007017] usable
    [ 0.000000] user: [mem 0x0000000075007018-0x000000007500f057] usable
    [ 0.000000] user: [mem 0x000000007500f058-0x0000000075010017] usable
    [ 0.000000] user: [mem 0x0000000075010018-0x0000000075026057] usable
    [ 0.000000] user: [mem 0x0000000075026058-0x0000000077470fff] usable
    [ 0.000000] user: [mem 0x0000000077471000-0x00000000774f1fff] reserved
    [ 0.000000] user: [mem 0x00000000774f2000-0x0000000078c82fff] usable
    [ 0.000000] user: [mem 0x0000000078c83000-0x000000007ac32fff] reserved
    [ 0.000000] user: [mem 0x000000007ac33000-0x000000007b662fff] ACPI NVS
    [ 0.000000] user: [mem 0x000000007b663000-0x000000007b7d2fff] ACPI data
    [ 0.000000] user: [mem 0x000000007b7d3000-0x000000007b7fffff] usable
    [ 0.000000] user: [mem 0x000000007b800000-0x000000008fffffff] reserved
    [ 0.000000] user: [mem 0x00000000fed1c000-0x00000000fed1ffff] reserved
    [ 0.000000] user: [mem 0x0000000100000000-0x00000004ffffffff] persistent (type 12)
    [ 0.000000] user: [mem 0x0000000500000000-0x0000000c7fffffff] usable
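The address arithmetic behind Listings 1 and 2 can be checked with a few lines of Python. The sketch below is only an illustration: the helper names `parse_size`, `memmap_range`, and `parse_region` are hypothetical, and the parser handles only the `memmap=SIZE!START` form used in this article. The two log lines are copied from the listings.

```python
import re

GIB = 2**30
UNITS = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}
REGION = re.compile(r"\[mem (0x[0-9a-f]+)-(0x[0-9a-f]+)\] (.+)$")


def parse_size(text):
    """Convert a size such as '16G' or '0x1000' into bytes."""
    unit = text[-1].upper()
    if unit in UNITS:
        return int(text[:-1], 0) * UNITS[unit]
    return int(text, 0)


def memmap_range(param):
    """Return the inclusive (first, last) address for memmap=SIZE!START."""
    key, _, value = param.partition("=")
    if key != "memmap" or "!" not in value:
        raise ValueError("expected memmap=SIZE!START")
    size_text, start_text = value.split("!", 1)
    start = parse_size(start_text)
    return start, start + parse_size(size_text) - 1


def parse_region(line):
    """Return (first, last, type) for one memory map line of the kernel log."""
    match = REGION.search(line.strip())
    if match is None:
        return None
    return int(match.group(1), 16), int(match.group(2), 16), match.group(3)


if __name__ == "__main__":
    usable = parse_region(
        "[ 0.000000] BIOS-e820: [mem 0x0000000100000000-0x0000000c7fffffff] usable")
    pmem = parse_region(
        "[ 0.000000] user: [mem 0x0000000100000000-0x00000004ffffffff] persistent (type 12)")

    # Size of the last usable region in Listing 1, in GiB.
    print((usable[1] - usable[0] + 1) / GIB)             # 46.0
    # The memmap parameter yields exactly the persistent range in Listing 2.
    print(memmap_range("memmap=16G!4G") == pmem[:2])     # True
```

The same calculation helps when choosing your own values: the reserved range has to lie completely inside a region that the firmware reports as usable.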

Speed Test

If you own NVDIMMs and want to check out the speed advantage, all you need is a short test with the Unix dd tool. In Listing 3, dd copies 4GiB from the /dev/zero device to the PMEM mount point. The oflag=direct flag bypasses the kernel's buffer cache and thus taps into the power of the physical NVDIMMs (here, pre-production modules from HP). The same test on the hard drive of the host returns values of around 50MBps (over a test period of around 80 seconds).

Listing 3: Benchmarking

    $ dd if=/dev/zero of=/mnt/test.dat oflag=direct bs=4k count=$((1024*1024))
    1048576+0 records in
    1048576+0 records out
    4294967296 bytes (4.3 GB, 4.0 GiB) copied, 4.55899 s, 942 MB/s
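The figures in Listing 3 can be cross-checked with simple arithmetic. The following sketch recomputes the throughput that dd reports and estimates how long the same amount of data takes at the hard drive rate quoted above; the function name `throughput_mb_s` is hypothetical, and MB is taken as 10^6 bytes, as dd does.

```python
def throughput_mb_s(n_bytes, seconds):
    """Throughput in MB/s, with 1 MB = 10**6 bytes as used by dd."""
    return n_bytes / seconds / 1e6


# Values taken from Listing 3: 1024*1024 records of 4KiB each.
N_BYTES = 4096 * 1024 * 1024
NVDIMM_SECONDS = 4.55899
HDD_MB_S = 50.0   # approximate hard drive rate quoted in the text

nvdimm_mb_s = throughput_mb_s(N_BYTES, NVDIMM_SECONDS)
hdd_seconds = N_BYTES / (HDD_MB_S * 1e6)

if __name__ == "__main__":
    print(f"NVDIMM: {nvdimm_mb_s:.0f} MB/s")
    print(f"Hard drive at 50 MB/s: about {hdd_seconds:.0f} s")
    print(f"Speedup: roughly {nvdimm_mb_s / HDD_MB_S:.0f}x")
```

The result agrees with the 942 MB/s in the listing and puts the hard drive run in the range of 80 to 90 seconds, which fits the test period mentioned above.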

Availability

NVDIMMs will probably go on sale to the general public in 2017. To make the Linux kernel suitable for the fast modules, the hardware industry is handing samples to dedicated kernel developers such as those at SUSE Labs. The results will benefit all distributions (upstream), which will then gradually ship the appropriate kernel and drivers. Four distributions can already handle NVDIMMs:

- openSUSE Tumbleweed

- openSUSE Leap 42.2

- SUSE Linux Enterprise 12 SP2

- Fedora 24

During our research, we found no evidence of Debian and Ubuntu following suit, but this will most likely happen before the year is out.

The Authors

Johannes Thumshirn works at SUSE Linux GmbH as a Linux kernel developer for storage, especially NVMe and NVDIMM, as well as traditional storage technologies such as FC/FCoE, SCSI, and SAS.

Markus Feilner is a Linux specialist from Regensburg, Germany. He has worked with Linux as an author, trainer, consultant, and journalist since 1994. The Conch diplomat, Minister of the Universal Life Church, and Jedi Knight today heads the documentation team at SUSE in Nuremberg, Germany.