Pymp – OpenMP-like Python Programming

Applying OpenMP techniques to Python code.

Ever since Python was created, users have been looking for ways to achieve multiprocessing with threads, which the Python global interpreter lock (GIL) prevents. One common approach to getting around the GIL is to run computationally intensive code outside of Python with tools such as Cython and ctypes. You can even use F2PY with compiled C functions.

All of the previously mentioned tools “bypass” Python and rely on a compiled language to provide threaded multiprocessing elements with an interface to Python. What is really needed is either a way to perform threaded processing or a form of multiprocessing in Python itself. A very interesting tool for this purpose is Pymp, a Python-based method of providing OpenMP-like functionality.

OpenMP

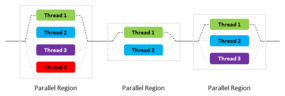

OpenMP employs a few principles in its programming model. The first is that everything takes place in threads. The second is the fork-join model, which comprises parallel regions in which one or more threads can be used (Figure 1).

Only a single thread (the master thread) exists before the parallel region of the OpenMP program. When the parallel region is encountered, the master thread creates a team of parallel threads. The code in this parallel region is executed in parallel among the various team threads.

When the threads complete their code in the parallel region, they synchronize and terminate, with only the master thread remaining. Inside the parallel region threads typically share data, and all of the threads can access this shared data at the same time.

The process of forking threads in a parallel region, joining the data back to the master thread, and terminating the other threads can be done many times in a single program, although you don’t want to do it too often because of the need to create and destroy the threads.

Pymp

Because the goal of Pymp is to bring OpenMP-like functionality to Python, Pymp and Python should naturally share some concepts. A single master thread forks into multiple threads, sharing data and then synchronizing (joining) and destroying the threads.

As with OpenMP applications, when Pymp Python code hits a parallel region, processes – termed child processes – are forked and are in a state that is nearly the same as the “master process.” Note that these are forked processes and not threads, as is typical with OpenMP applications. As for the shared memory, according to the Pymp website, “… the memory is not copied, but referenced. Only when a process writes into a part of the memory [does] it gets its own copy of the corresponding memory region. This keeps the processing overhead low (but of course not as low as for OpenMP threads).”

As the parallel region ends (the “join” phase), all of the child processes exit so that only the master process continues. Any data structures from the child processes are synchronized using either shared memory or a manager process and the pickle protocol via the multiprocessor module. This module has an API similar to the threading module and supports spawning processes.

As with OpenMP, Pymp numbers the child processes with thethread_num variable. The master process has thread_num 0.

With OpenMP, you define a parallel region. In Fortran and C the regions are defined by the directives in Listing 1. Pymp has no way to mark the parallel region. The Pymp website recommends you use a pymp.range or pymp.xrange statement, or even an if-else statement. Doing so achieves the same expected behavior.

Listing 1: Defining a Parallel Region

| Fortran | C |

!$omp parallel ... !$omp end parallel |

#pragma omp parallel

{

...}

#pragma end parallel

|

From the website, example code might look like:

with pymp.Parallel(4) as p:

for sec_idx in p.xrange(4):

if sec_idx == 0:

p.print('Section 0')

elif sec_idx == 1:

p.print('Section 1')

...The first statement in the code outline defines the parallel construct.

As with OpenMP code, you can control various aspects of Pymp code with environment variables (e.g., with the OpenMP variables that begin with OMP), or you can use Pymp versions that begin with PYMP. The mapping is pretty straightforward:

- PYMP_NESTED/OMP_NESTED

- PYMP_THREAD_LIMIT/OMP_THREAD_LIMIT

- PYMP_NUM_THREADS/OMP_NUM_THREADS

The first variable is a binary: TRUE or FALSE. The second variable can be used to set a limit on the number of threads. The third variable is a comma-separated list of the number of threads per nesting level. If only one value is specified, it is used for all levels.

Other aspects to Pymp are explained on the website, including:

- Schedules

- Variable scopes

- Nested Loops

- Exceptions

- Conditional parallelism

- Reductions

- Iterables

This article is too short to cover these topics, but if you are interested, the GitHub site briefly explains them, and you can create some simple examples for further exploration.

Installing Pymp

Although I use Anaconda, I also use Pip when needed. When I examined both Anaconda and Pip for Pymp, the latest version they both had was 0.01, although the last version released was 0.4.2 on September 7, 2018; therefore, I installed Pymp according to the instructions on the website.

After downloading and exploding the .tar.gz or .zip source file and moving to the directory created, I ran the command,

$ python setup.py develop

which builds Pymp. Fortunately, the Pymp codebase is fairly small, so the build goes very fast.

Note that the website proposes using the latest master branch from the Git repo if you want the absolute latest version of Pymp.

Simple Introductory Code

To illustrate how to code with Pymp, the sample code in Listing 2 from the website begins with basic Python code. To keep things simple, this is a serial code with a single array. Listing 3 is the Pymp version of the same code.

Listing 2: Python Code

from __future__ import print_function

ex_array = np.zeros((100,), dtype='uint8')

for index in range(0, 100):

ex_array[index] = 1

print('Yay! {} done!'.format(index))Listing 3: Pymp Code

from __future__ import print_function

import pymp

ex_array = pymp.shared.array((100,), dtype='uint8')

with pymp.Parallel(4) as p:

for index in p.range(0, 100):

ex_array[index] = 1

# The parallel print function takes care of asynchronous output.

p.print('Yay! {} done!'.format(index))The first change to the serial code is creating a shared array with a pymp method. The next change is to add the statement creating the number of processes (with pymp.Parallel(4) as p). Remember that these are forked processes and not threads.

The final action is to change the range function to p.range(0, 100). According to the Pymp website, this is the same as using the static schedule.

The approach illustrated in these code samples bypasses the GIL in favor of the operating system’s fork method. From the GitHub site, “Due to the copy-on-write strategy, this causes only a minimal overhead and results in the expected semantics.” Note that using the system fork operation excludes Windows because it lacks a fork mechanism.

Laplace Solver Example

The next example, the common Laplace solver, is a little more detailed. The code is definitely not the most efficient – it uses loops – but I hope it illustrates how to use Pymp. For the curious, timings are included in the code. Listing 4 is the Python version, and the Pymp version of the code is shown in Listing 5. Changed lines in are marked with arrows (-->**<---).

Listing 4: Python Laplace Solver

import numpy

from time import perf_counter

nx = 1201

ny = 1201

# Solution and previous solution arrays

sol = numpy.zeros((nx,ny))

soln = sol.copy()

for j in range(0,ny-1):

sol[0,j] = 10.0

sol[nx-1,j] = 1.0

# end for

for i in range(0,nx-1):

sol[i,0] = 0.0

sol[i,ny-1] = 0.0

# end for

# Iterate

start_time = perf_counter()

for kloop in range(1,100):

soln = sol.copy()

for i in range(1,nx-1):

for j in range (1,ny-1):

sol[i,j] = 0.25 * (soln[i,j-1] + soln[i,j+1] + soln[i-1,j] + soln[i+1,j])

# end j for loop

# end i for loop

#end for

end_time = perf_counter()

print(' ')

print('Elapsed wall clock time = %g seconds.' % (end_time-start_time) )

print(' ')Listing 5: Pymp Laplace Solver

--> import pymp <--

from time import perf_counter

nx = 1201

ny = 1201

# Solution and previous solution arrays

--> sol = pymp.shared.array((nx,ny)) <--

--> soln = pymp.shared.array((nx,ny)) <--

for j in range(0,ny-1):

sol[0,j] = 10.0

sol[nx-1,j] = 1.0

# end for

for i in range(0,nx-1):

sol[i,0] = 0.0

sol[i,ny-1] = 0.0

# end for

# Iterate

start_time = perf_counter()

--> with pymp.Parallel(6) as p: <--

for kloop in range(1,100):

soln = sol.copy()

for i in p.range(1,nx-1):

for j in p.range (1,ny-1):

sol[i,j] = 0.25 * (soln[i,j-1] + soln[i,j+1] + soln[i-1,j] + soln[i+1,j])

# end j for loop

# end i for loop

# end kloop for loop

# end with

end_time = perf_counter()

print(' ')

print('Elapsed wall clock time = %g seconds.' % (end_time-start_time) )

print(' ')To show that Pymp is actually doing what it is supposed to do, Table 1 shows the timings for various numbers of cores. Notice that the total time decreases as the number of cores increases, as expected.

Table 1: Pymp Timings

| No. of Cores | Total Time (sec) |

|---|---|

| Base (serial) | 165.0 |

| 1 | 94.0 |

| 2 | 42.0 |

| 4 | 10.9 |

| 6 | 5.0 |

Summary

Ever since Python was created, people have been trying to achieve multithreaded computation. Several tools were created to do computations outside of and integrate with Python.

Over time, parallel programming approaches have become standards. OpenMP is one of the original standards and is very popular in C/C++ and Fortran programming, so a large number of developers know and use it in application development. However, OpenMP is used with compiled, not interpreted, languages.

Fortunately, the innovative Python Pymp tool was created. It is an OpenMP-like Python module that uses the fork mechanism of the operating system instead of threads to achieve parallelism. As illustrated in the examples in this article, it’s not too difficult to port some applications to Pymp.