Warewulf Cluster Manager – Howlingly Great

The Warewulf stateless cluster tool is scalable and highly configurable, and it eases the installation, management, and monitoring of HPC clusters.

A plethora of cluster tools are out there to help people get started provisioning, managing, and monitoring HPC clusters. One of the best approaches is to use stateless compute nodes (commonly called diskless nodes), because they can make configuration and administration much easier in many ways. In this article, I present Warewulf, one of the original and most widely used stateless cluster tools.

A common question from people just starting out with high-performance computing (HPC) clusters is: Which cluster tool should I use? Although you have a number of choices, I always argue for stateless tools. By design, stateless cluster tools help ensure that the image is consistent across all nodes in the cluster, which simplifies administration: debugging becomes trivially easy, even across many nodes, and node roles and operating system images are easy to change for replacement and testing. Plus, it can be argued that they are slightly cheaper than stateful tools because you don’t need disks in each node. Moreover, stateless approaches are often described as easy to use, fast, and easy to manage because the images are so small.

In this article, I want to discuss the tool I have been using for a long time: Warewulf. It pioneered many of the stateless methods that other tools use today and is considered the standard stateless open source toolkit for clustering. It is primarily a stateless provisioning and management tool, but it can also provision stateful nodes (i.e., install the operating system onto disks in the compute nodes). It is simple, automates the process, and is very scalable. In this four-part series on using Warewulf in production clusters, I’ll start by discussing how to install Warewulf on a master node and statelessly boot compute nodes.

Introduction

HPC clusters focus on computation – typically running applications that span a number of systems, as opposed to database clusters or high-availability clusters, which are something totally different. In this article, I focus on what people describe as “beowulf” clusters. Throughout the rest of this article, I’ll use the term “cluster” to mean HPC clusters. Classically, clusters focus on scientific computing, such as computational fluid dynamics (CFD), weather modeling, solid mechanics, molecular modeling, bioinformatics, and so on, but clusters have become increasingly popular for a variety of other purposes, such as visualization, analyzing the voting records of the US Supreme Court, archeology, social analysis, and – a current buzzword – “big data.” These are just a few of the many applications that use clusters for computational needs.

A wide variety of toolsets are available for building HPC clusters. They range from pretty simple to fairly complex to use, from open source to commercial, and from stateless to stateful. In my opinion, the best cluster tools are stateless; that is, the nodes don’t maintain their state between reboots. The common term for a stateless cluster is “diskless,” but the more accurate description is stateless: The nodes don’t retain any knowledge of state between reboots, and nodes can have disks and still be considered stateless.

One of the most popular cluster tools for provisioning and management is Warewulf. It has been in use on many clusters for many years, primarily as a stateless cluster tool, but it can handle stateful clusters as well. Warewulf is very easy to use, very flexible, and very fast at imaging nodes. The latest version of Warewulf, the 3.x series, has new and updated capabilities that make things even easier and more flexible.

Warewulf supports the traditional model of a cluster master node that does the management and provisioning, and a set of compute nodes that do the computations. As mentioned earlier, this is the first of a four-part article series on using Warewulf in production clusters. In the second part, I will demonstrate a method for adding user accounts, add some very useful utilities, and add a “hybrid” capability to Warewulf that combines the stateless OS with important NFS-mounted file systems. In the third article, I will build out the development and run-time environments for MPI applications, and in the fourth article, I will add a number of classic cluster tools, such as Torque and Ganglia, that make clusters easier to operate and administer.

To begin the journey of installing and using Warewulf on production clusters, I’ll start with the prerequisites for the master node.

Prerequisites

If you are interested in HPC or any sort of technical computing or application development, then your master node is likely already almost completely configured for using Warewulf. Warewulf doesn’t have many prerequisites, and with a package manager such as Yum, you can easily configure the master node.

Warewulf is developed on Red Hat-based distributions of Linux, and although nothing stops it from being used on other distributions, I will focus this article on Red Hat and its clones. Warewulf also supports Ubuntu, and Ubuntu-like distributions should behave much like Ubuntu itself. Some work has been done on using Debian on the master node, but it might not be fully tested and supported. A variety of distributions is also supported for the compute nodes, but if you use a distribution different from the one on the master node, some of the burden of making sure they’re configured correctly will fall on you.

The basic list of packages you need on the master node is:

- MySQL

- Apache (or similar web server)

- dhcp

- tftp-server

- Perl

- mod_perl

and that is about it. I typically also install the Development Tools package group on the master node because I want to develop, build, and test applications on the cluster, but that is not strictly necessary (e.g., if you have interactive login systems separate from your master node).

In the rest of the article, I’ll walk through six major steps: preparing the master node, configuring MySQL, installing and then configuring Warewulf, creating the image that will be used for booting nodes, and finally, booting the nodes.

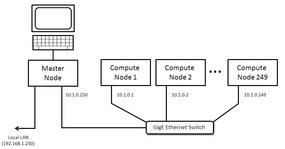

Before jumping into the first step, I want to describe the test system I will be using so you can refer back to it. Figure 1 illustrates the basic layout, which I use throughout this article.

On the left is the master node, which has a monitor/keyboard/mouse and two Ethernet ports. The first port goes to what is sometimes called the “outside world” – that is, the network outside of the cluster. In this example, it has the IP address 192.168.1.250 and can access the Internet through a firewall (which is needed for building the master node).

The second Ethernet port on the master node connects to a private network built around a Gigabit Ethernet (GigE) switch. On this network, the master node has the IP address 10.1.0.250. For the sake of this article, all nodes on this private network will have address 10.1.0.x, where x goes from 1 to 250 (250 being the master node). You can use other networking schemes for the private network; just make sure you know what you’re doing.

From the GigE switch, I have a number of compute nodes that begin with the IP address 10.1.0.1 and continue to 10.1.0.249, giving me a maximum of 249 compute nodes for this example. You can have more compute nodes if you like (or need) by using additional network addresses, such as 10.1.1.x and 10.1.2.x, with a netmask wider than 255.255.255.0 (e.g., 255.255.0.0); you just have to pay attention to how your nodes are labeled and how the network is configured.
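If you keep name resolution for the private network in /etc/hosts, the address plan above can be generated rather than typed by hand. The following is a minimal sketch that is not part of Warewulf: the function name and the node names (n0001 through n0249) are a hypothetical naming scheme, and the function assumes a single /24 network like the one in this example.

```shell
#!/bin/sh
# Sketch: print /etc/hosts-style lines for compute nodes on a single /24
# private network, e.g. 10.1.0.1 through 10.1.0.249 as in this article.
# The node names (n0001, n0002, ...) are a hypothetical naming scheme.

# cluster_hosts PREFIX FIRST LAST
#   PREFIX  first three octets of the private network, e.g. 10.1.0
#   FIRST   host number of the first compute node
#   LAST    host number of the last compute node
cluster_hosts() {
    local prefix="$1" first="$2" last="$3" i
    # Host numbers must fit in one /24 (1-254) and be in ascending order
    if [ "$first" -lt 1 ] || [ "$last" -gt 254 ] || [ "$first" -gt "$last" ]; then
        echo "invalid host range: $first-$last" >&2
        return 1
    fi
    i="$first"
    while [ "$i" -le "$last" ]; do
        # Zero-pad the node number so names sort in numeric order
        printf '%s.%d\tn%04d\n' "$prefix" "$i" "$i"
        i=$((i + 1))
    done
}
```

For example, cluster_hosts 10.1.0 1 249 prints 249 lines, from 10.1.0.1 n0001 to 10.1.0.249 n0249; the master node’s private address (10.1.0.250) would be added separately. Whether you append the output to /etc/hosts or feed it to another tool depends on how you manage name resolution on your cluster.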

The Warewulf configuration for this article was run on my home test cluster system:

- Scientific Linux 6.2

- 2.6.32-220.4.1.el6.x86_64 kernel

- GigaByte MAA78GM-US2H motherboard

- AMD Phenom II X4 920 CPU (four cores)

- 8GB of memory (DDR2-800)

- The OS and boot drive are on an IBM DTLA-307020 (20GB drive at Ultra ATA/100)

- /home is on a Seagate ST1360827AS

- ext4 is used as the file system with the default options.

- eth0 is connected to the LAN and has an IP address of 192.168.1.250

- eth4 is connected to the GigE switch and has an IP address of 10.1.0.250

Step 1 – Prepping the Master Node

If you have done a fairly typical installation of Red Hat or a Red Hat-like distribution (such as CentOS or Scientific Linux), then you likely already have most of the tools you need. If you are interested in HPC, then you have probably installed compilers and other development tools, but you need to install some additional tools as well, and you need to make some small configuration changes to the master node.

Turning Off SELinux

SELinux can be very useful for many situations, but HPC isn’t always one of them. More precisely, in Warewulf, it can interfere with the chroot environment for creating the images for our compute nodes. It isn’t strictly a Warewulf issue, but a chroot issue. So to make things easy, it is best to turn off SELinux.

Disabling SELinux is fairly easy but requires a reboot to take effect. To disable it, edit the file /etc/selinux/config and change the SELINUX option to disabled. Then, when you reboot the master node, you should be ready to go.
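The edit described above can also be scripted. This is a minimal sketch assuming the standard SELINUX=... line format of /etc/selinux/config; the function name is hypothetical, and it keeps a .bak copy of the original file.

```shell
#!/bin/sh
# Sketch: set SELINUX=disabled in an SELinux config file.
# Defaults to /etc/selinux/config; the change takes effect only after a reboot.
disable_selinux_config() {
    local conf="${1:-/etc/selinux/config}"
    if [ ! -f "$conf" ]; then
        echo "no such file: $conf" >&2
        return 1
    fi
    # Keep a copy of the original next to it
    cp -p "$conf" "$conf.bak" || return 1
    if grep -q '^SELINUX=' "$conf"; then
        # Only the SELINUX= line matches; SELINUXTYPE= is left untouched
        sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
    else
        # No active SELINUX= line: add one
        echo 'SELINUX=disabled' >> "$conf"
    fi
}
```

Run it as root with no argument to edit /etc/selinux/config, then reboot; afterward, getenforce should report Disabled. Note that setenforce 0 only switches a running system to permissive mode; it does not disable SELinux.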

After disabling SELinux, the next step in prepping the master node is to install some packages you are likely to need. Because I’m creating HPC clusters, I might want to build and use applications, so it’s probably appropriate to install the development tools for Scientific Linux 6.2. Although you don’t have to do this step, it’s recommended if you are going to build and run applications.

The next step involves Yum; I won’t show all of the output (Listing 1) because it can be quite long, but I at least wanted to show you what was installed on my test system so you have an idea of what comes with the Development Tools group. Also, notice that you have to do this as root because you’ll be installing packages.

Listing 1: Installing the Development Tools

    [root@test1 ~]# yum groupinstall "Development tools"
    Loaded plugins: refresh-packagekit, security
    Setting up Group Process
    epel/group_gz                                   | 214 kB     00:00
    sl/group_gz                                     | 212 kB     00:00
    Package flex-2.5.35-8.el6.x86_64 already installed and latest version
    Package gcc-4.4.6-3.el6.x86_64 already installed and latest version
    Package autoconf-2.63-5.1.el6.noarch already installed and latest version
    Package redhat-rpm-config-9.0.3-34.el6.sl.noarch already installed and latest version
    Package rpm-build-4.8.0-19.el6_2.1.x86_64 already installed and latest version
    Package 1:make-3.81-19.el6.x86_64 already installed and latest version
    Package 1:pkgconfig-0.23-9.1.el6.x86_64 already installed and latest version
    Package gettext-0.17-16.el6.x86_64 already installed and latest version
    Package patch-2.6-6.el6.x86_64 already installed and latest version
    Package bison-2.4.1-5.el6.x86_64 already installed and latest version
    Package libtool-2.2.6-15.5.el6.x86_64 already installed and latest version
    Package automake-1.11.1-1.2.el6.noarch already installed and latest version
    Package gcc-c++-4.4.6-3.el6.x86_64 already installed and latest version
    Package binutils-2.20.51.0.2-5.28.el6.x86_64 already installed and latest version
    Package byacc-1.9.20070509-6.1.el6.x86_64 already installed and latest version
    Package indent-2.2.10-5.1.el6.x86_64 already installed and latest version
    Package systemtap-1.6-5.el6_2.x86_64 already installed and latest version
    Package elfutils-0.152-1.el6.x86_64 already installed and latest version
    Package cvs-1.11.23-11.el6_2.1.x86_64 already installed and latest version
    Package rcs-5.7-37.el6.x86_64 already installed and latest version
    Package subversion-1.6.11-2.el6_1.4.x86_64 already installed and latest version
    Package gcc-gfortran-4.4.6-3.el6.x86_64 already installed and latest version
    Package patchutils-0.3.1-3.1.el6.x86_64 already installed and latest version
    Package git-1.7.1-2.el6_0.1.x86_64 already installed and latest version
    Package swig-1.3.40-6.el6.x86_64 already installed and latest version
    Resolving Dependencies
    --> Running transaction check
    ---> Package cscope.x86_64 0:15.6-6.el6 will be installed
    ---> Package ctags.x86_64 0:5.8-2.el6 will be installed
    ---> Package diffstat.x86_64 0:1.51-2.el6 will be installed
    ---> Package doxygen.x86_64 1:1.6.1-6.el6 will be installed
    ---> Package intltool.noarch 0:0.41.0-1.1.el6 will be installed
    --> Processing Dependency: gettext-devel for package: intltool-0.41.0-1.1.el6.noarch
    --> Processing Dependency: perl(XML::Parser) for package: intltool-0.41.0-1.1.el6.noarch
    --> Running transaction check
    ---> Package gettext-devel.x86_64 0:0.17-16.el6 will be installed
    --> Processing Dependency: gettext-libs = 0.17-16.el6 for package: gettext-devel-0.17-16.el6.x86_64
    --> Processing Dependency: libasprintf.so.0()(64bit) for package: gettext-devel-0.17-16.el6.x86_64
    --> Processing Dependency: libgettextpo.so.0()(64bit) for package: gettext-devel-0.17-16.el6.x86_64
    ---> Package perl-XML-Parser.x86_64 0:2.36-7.el6 will be installed
    --> Processing Dependency: perl(LWP) for package: perl-XML-Parser-2.36-7.el6.x86_64
    --> Running transaction check
    ---> Package gettext-libs.x86_64 0:0.17-16.el6 will be installed
    ---> Package perl-libwww-perl.noarch 0:5.833-2.el6 will be installed
    --> Processing Dependency: perl-HTML-Parser >= 3.33 for package: perl-libwww-perl-5.833-2.el6.noarch
    --> Processing Dependency: perl(HTML::Entities) for package: perl-libwww-perl-5.833-2.el6.noarch
    --> Running transaction check
    ---> Package perl-HTML-Parser.x86_64 0:3.64-2.el6 will be installed
    --> Processing Dependency: perl(HTML::Tagset) >= 3.03 for package: perl-HTML-Parser-3.64-2.el6.x86_64
    --> Processing Dependency: perl(HTML::Tagset) for package: perl-HTML-Parser-3.64-2.el6.x86_64
    --> Running transaction check
    ---> Package perl-HTML-Tagset.noarch 0:3.20-4.el6 will be installed
    --> Finished Dependency Resolution

The overall installation didn’t take too long and didn’t add too much space to the master node.

After installing the Development Tools packages, you need to install MySQL, which Warewulf uses for storing the images that get pushed to the nodes and the relationships between “objects” (which are basically capabilities or files). Again, you’ll take advantage of Yum for this step (Listing 2), and I won’t show all of the output, but you’ll get an idea of what is installed with the MySQL Database Server group. Also, notice that you need to do this step as root, too.

Listing 2: Installing MySQL Database Server

    [root@test1 ~]# yum groupinstall "MySQL Database server"
    Loaded plugins: refresh-packagekit, security
    Setting up Group Process
    Resolving Dependencies
    --> Running transaction check
    ---> Package mysql-server.x86_64 0:5.1.61-1.el6_2.1 will be installed
    --> Processing Dependency: mysql = 5.1.61-1.el6_2.1 for package: mysql-server-5.1.61-1.el6_2.1.x86_64
    --> Processing Dependency: perl-DBD-MySQL for package: mysql-server-5.1.61-1.el6_2.1.x86_64
    --> Running transaction check
    ---> Package mysql.x86_64 0:5.1.61-1.el6_2.1 will be installed
    ---> Package perl-DBD-MySQL.x86_64 0:4.013-3.el6 will be installed
    --> Finished Dependency Resolution
    Dependencies Resolved

Once again, the installation didn’t use too much disk space or take too much time.

The final set of Warewulf dependencies consists of the packages that deliver the image to the compute nodes: httpd (Apache here), dhcp, tftp-server (used for sending images to compute nodes), and mod_perl (used by the Warewulf tools).

Again, use Yum as root (Listing 3).

Listing 3: Warewulf Dependencies (partial output)

    [root@test1 ~]# yum install httpd dhcp tftp-server mod_perl
    Loaded plugins: refresh-packagekit, security
    Setting up Install Process
    Package httpd-2.2.15-15.sl6.1.x86_64 already installed and latest version
    Resolving Dependencies
    --> Running transaction check
    ---> Package dhcp.x86_64 12:4.1.1-25.P1.el6_2.1 will be installed
    ---> Package mod_perl.x86_64 0:2.0.4-10.el6 will be installed
    --> Processing Dependency: perl(BSD::Resource) for package: mod_perl-2.0.4-10.el6.x86_64
    ---> Package tftp-server.x86_64 0:0.49-7.el6 will be installed
    --> Running transaction check
    ---> Package perl-BSD-Resource.x86_64 0:1.29.03-3.el6 will be installed
    --> Finished Dependency Resolution
    Dependencies Resolved

Once again, the installation didn’t use too much disk space or take too much time.

Step 2 – Configuring MySQL

Now that MySQL is installed, you need to configure it before installing the Warewulf RPMs (this makes life a bit easier). First, you need to start the MySQL service (Listing 4).

Listing 4: Starting the MySQL Service

    [root@test1 ~]# service mysqld start
    Initializing MySQL database: WARNING: The host 'test1' could not be looked up with resolveip.
    This probably means that your libc libraries are not 100 % compatible
    with this binary MySQL version. The MySQL daemon, mysqld, should work
    normally with the exception that host name resolving will not work.
    This means that you should use IP addresses instead of hostnames
    when specifying MySQL privileges !
    Installing MySQL system tables...
    OK
    Filling help tables...
    OK
    To start mysqld at boot time you have to copy
    support-files/mysql.server to the right place for your system
    PLEASE REMEMBER TO SET A PASSWORD FOR THE MySQL root USER !
    To do so, start the server, then issue the following commands:
    /usr/bin/mysqladmin -u root password 'new-password'
    /usr/bin/mysqladmin -u root -h test1 password 'new-password'
    Alternatively you can run:
    /usr/bin/mysql_secure_installation
    which will also give you the option of removing the test
    databases and anonymous user created by default. This is
    strongly recommended for production servers.
    See the manual for more instructions.
    You can start the MySQL daemon with:
    cd /usr ; /usr/bin/mysqld_safe &
    You can test the MySQL daemon with mysql-test-run.pl
    cd /usr/mysql-test ; perl mysql-test-run.pl
    Please report any problems with the /usr/bin/mysqlbug script!
    [ OK ]
    Starting mysqld: [ OK ]

After starting the MySQL service, you need to set the password for the MySQL root user with mysqladmin:

    [root@test1 ~]# mysqladmin -u root password 'junk123'
    [root@test1 ~]#

A dummy password, junk123, is used in this example; make sure your own password is fairly strong. After setting the password, you need to create the warewulf database:

    [root@test1 ~]# mysqladmin create warewulf -p
    Enter password:

This is where you use the password you just created for MySQL.
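Rather than a dummy value such as junk123, you can generate a random password before running mysqladmin. This is a minimal sketch, assuming GNU coreutils (head and base64) as shipped with Scientific Linux; the function name is hypothetical. Eighteen random bytes encode to exactly 24 base64 characters.

```shell
#!/bin/sh
# Sketch: print a random password for the MySQL root account.
# 18 random bytes from /dev/urandom encode to exactly 24 base64 characters;
# tr removes the trailing newline that base64 adds.
gen_password() {
    head -c 18 /dev/urandom | base64 | tr -d '\n'
}
```

For example, pw=$(gen_password) followed by mysqladmin -u root password "$pw". Keep the value somewhere safe, because you will need it again when configuring Warewulf. Note that base64 output can contain + and / characters, which matters if you later substitute the password into a file with sed.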

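The next step is not strictly necessary at this point, but because you’re configuring the master node, you might as well do it now. This step configures tftp so the compute nodes can fetch their boot images from the master node. To do so, edit the file /etc/xinetd.d/tftp to look like Listing 5. The key change is setting disable = no, because the service is off by default (as the file’s own “default: off” comment notes).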

Listing 5: Configuring tftp

    # default: off
    # description: The tftp server serves files using the trivial file transfer \
    # protocol. The tftp protocol is often used to boot diskless \
    # workstations, download configuration files to network-aware printers, \
    # and to start the installation process for some operating systems.
    service tftp
    {
        socket_type = dgram
        protocol    = udp
        wait        = yes
        user        = root
        server      = /usr/sbin/in.tftpd
        server_args = -s /var/lib/tftpboot
        disable     = no
        per_source  = 11
        cps         = 100 2
        flags       = IPv4
    }

In a subsequent step, you’ll restart services to make sure the tftp server picks up the modified configuration file.
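Before restarting services, you may want to confirm that the edited file actually enables the service. The sketch below is a hypothetical helper, not an xinetd tool: It ignores comment lines, reads the disable attribute from an xinetd service file (default /etc/xinetd.d/tftp), and succeeds only if the value is no.

```shell
#!/bin/sh
# Sketch: succeed if an xinetd service file sets "disable = no".
# Defaults to /etc/xinetd.d/tftp; comment lines are ignored and the last
# "disable" attribute found wins.
tftp_enabled() {
    local conf="${1:-/etc/xinetd.d/tftp}"
    if [ ! -f "$conf" ]; then
        echo "no such file: $conf" >&2
        return 2
    fi
    awk -F '=' '
        /^[ \t]*#/ { next }
        {
            key = $1
            gsub(/[ \t]/, "", key)      # strip indentation and padding
        }
        key == "disable" {
            value = $2
            gsub(/[ \t]/, "", value)
        }
        END { exit (value == "no" ? 0 : 1) }
    ' "$conf"
}
```

One way to call it is tftp_enabled && echo 'tftp enabled in xinetd'. It checks only the file, not whether xinetd has reloaded it; that still requires the service restart.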

Step 3 – Installing Warewulf

Now the master node is prepped for installing the Warewulf RPMs, which is fairly easy to do if you first add the Warewulf repo to your Yum configuration. To do so, enter:

[root@test1 ~]# wget http://warewulf.lbl.gov/downloads/releases/warewulf-rhel6.repo -O /etc/yum.repos.d/warewulf-rhel6.repo

For this article, version 3.1.1 of Warewulf was installed (it was the latest and greatest when the testing was performed). Because the Warewulf repo has been added to the Yum configuration on the master node, it’s very easy to install the RPMs (warewulf-common, warewulf-provision, warewulf-vnfs, and warewulf-provision-server). The following commands install warewulf-common,

[root@test1 ~]# yum install warewulf-common

warewulf-provision,

[root@test1 ~]# yum install warewulf-provision

warewulf-vnfs,

[root@test1 ~]# yum install warewulf-vnfs

and warewulf-provision-server:

[root@test1 ~]# yum install warewulf-provision-server

Step 4 – Configuring Warewulf

Now that the Warewulf RPMs are installed, you need to configure Warewulf. As with the other aspects of Warewulf, this is not difficult and really only needs to be done once. The first task is to run the Warewulf SQL script that sets up the warewulf database:

    [root@localhost ~]# mysql warewulf < /usr/share/warewulf/setup.sql -p
    Enter password:

The password is the MySQL root password you set earlier with mysqladmin.

The next task is to edit the configuration file /etc/warewulf/database.conf and insert your MySQL root password. The following example uses the dummy password (junk123):

    [root@localhost ~]# more /etc/warewulf/database.conf
    # Database configuration
    database type = sql
    database driver = mysql
    # Database access
    database server = localhost
    database name = warewulf
    database user = root
    database password = junk123
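If you script the master-node setup, the password edit can be automated. This is a sketch assuming the key = value layout shown above; the function name is hypothetical. The password travels through an environment variable into awk so characters such as /, &, or \ need no escaping, and the original file’s contents are overwritten in place so its ownership and permissions are kept.

```shell
#!/bin/sh
# Sketch: set the "database password" entry in Warewulf's database.conf.
# Defaults to /etc/warewulf/database.conf. The password is passed to awk via
# the environment (ENVIRON), so characters such as / & \ need no escaping.
set_ww_db_password() {
    local password="$1" conf="${2:-/etc/warewulf/database.conf}" tmp rc
    if [ ! -f "$conf" ]; then
        echo "no such file: $conf" >&2
        return 1
    fi
    tmp=$(mktemp) || return 1
    if WW_PW="$password" awk '
        /^[ \t]*database password[ \t]*=/ {
            print "database password = " ENVIRON["WW_PW"]
            found = 1
            next
        }
        { print }
        END { if (!found) print "database password = " ENVIRON["WW_PW"] }
    ' "$conf" > "$tmp"; then
        # Overwrite the original contents in place so its owner and mode are kept
        cat "$tmp" > "$conf"
        rc=$?
    else
        rc=1
    fi
    rm -f "$tmp"
    return "$rc"
}
```

For example, set_ww_db_password 'junk123' (substituting your real password) rewrites the existing line; if no database password line exists, the function appends one.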

To define which interface on the master node will be used for sending the images to the compute nodes and the location of the tftp root directory, edit the /etc/warewulf/provision.conf file. Remember that for this example, one network is private and goes from the master node to the compute nodes. The master node also has a public network that goes from the master node to the “outside” world. For my test system, the provision.conf file looks like this:

    [root@test1 ~]# more /etc/warewulf/provision.conf
    # What is the default network device that the nodes will be used to
    # communicate to the nodes?
    network device = eth4
    # What DHCP server implementation should be used to interface with?
    dhcp server = isc
    # What is the TFTP root directory that should be used to store the
    # network boot images? By default Warewulf will try and find the
    # proper directory. Just add this if it can't locate it.
    tftpdir = /var/lib/tftpboot

In this case, I used eth4 as the interface to the cluster. Be sure you use the correct Ethernet interface in provision.conf or Warewulf won’t be able to boot nodes. For example, if I had used eth0, Warewulf would have tried to boot nodes that are networked to the public interface (outside world). For this article, the cluster is on a private network, which the master node connects to via eth4.
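Because a wrong interface name here is an easy mistake, a quick check can help. The following sketch uses hypothetical helper names (they are not Warewulf commands): It reads the network device entry from provision.conf, skipping comment lines, and confirms that the interface exists under /sys/class/net. The SYSFS_NET variable is an assumption added only so the check can be tested against a scratch directory.

```shell
#!/bin/sh
# Sketch: read the "network device" entry from Warewulf's provision.conf
# and check that the interface exists on this host.
# SYSFS_NET (default /sys/class/net) is a hypothetical override for testing.

# provision_device [CONF]: print the configured device name
provision_device() {
    local conf="${1:-/etc/warewulf/provision.conf}"
    awk -F '=' '
        /^[ \t]*#/ { next }
        $1 ~ /^[ \t]*network device[ \t]*$/ {
            value = $2
            gsub(/^[ \t]+|[ \t]+$/, "", value)   # trim surrounding blanks
            print value
            exit
        }
    ' "$conf"
}

# check_provision_device [CONF]: print the device if it exists, else fail
check_provision_device() {
    local conf="${1:-/etc/warewulf/provision.conf}" dev
    dev=$(provision_device "$conf")
    if [ -z "$dev" ]; then
        echo "no 'network device' entry in $conf" >&2
        return 1
    fi
    if [ ! -e "${SYSFS_NET:-/sys/class/net}/$dev" ]; then
        echo "interface $dev not found" >&2
        return 1
    fi
    echo "$dev"
}
```

On the test system, check_provision_device should print eth4. It confirms only that the interface exists, not that it is the one cabled to the cluster switch.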

Step 5 – Creating the VNFS

Warewulf uses the Virtual Node File System (VNFS) concept. The VNFS is the image sent to the compute node, where it is either loaded into memory on a tmpfs file system or installed to disk. Creating a VNFS is very, very easy: It starts with installing an operating system into a chroot directory. Warewulf comes with a simple shell script for creating a chroot directory for Red Hat-based distributions. The script, mkchroot-rh.sh, is easy to edit and execute. I suggest you first run the script as is and build a VNFS before you start editing it.

First, execute the script with an argument that specifies where you want to locate the chroot. Listing 6 shows the top and bottom parts of the output from the script.

Listing 6: Creating a chroot Directory

    mkchroot-rh.sh /var/chroots/sl6.2
    Dependencies Resolved
    ==============================================================================
     Package         Arch      Version                    Repository       Size
    ==============================================================================
    Installing:
     basesystem      noarch    10.0-4.el6                 sl              3.6 k
     bash            x86_64    4.1.2-8.el6                sl              901 k
     chkconfig       x86_64    1.3.47-1.el6               sl              157 k
     coreutils       x86_64    8.4-16.el6                 sl              3.0 M
     cronie          x86_64    1.4.4-7.el6                sl               70 k
     crontabs        noarch    1.10-33.el6                sl              9.3 k
     dhclient        x86_64    12:4.1.1-25.P1.el6_2.1     sl-security     315 k
     e2fsprogs       x86_64    1.41.12-11.el6             sl              549 k
    ...
    Installed:
      basesystem.noarch 0:10.0-4.el6  bash.x86_64 0:4.1.2-8.el6  chkconfig.x86_64 0:1.3.47-1.el6
      coreutils.x86_64 0:8.4-16.el6  cronie.x86_64 0:1.4.4-7.el6  crontabs.noarch 0:1.10-33.el6
      dhclient.x86_64 12:4.1.1-25.P1.el6_2.1  e2fsprogs.x86_64 0:1.41.12-11.el6  ethtool.x86_64 2:2.6.33-0.3.el6
      filesystem.x86_64 0:2.4.30-3.el6  findutils.x86_64 1:4.4.2-6.el6  gawk.x86_64 0:3.1.7-6.el6
      grep.x86_64 0:2.6.3-2.el6  gzip.x86_64 0:1.3.12-18.el6  initscripts.x86_64 0:9.03.27-1.el6
      iproute.x86_64 0:2.6.32-17.el6  iputils.x86_64 0:20071127-16.el6  less.x86_64 0:436-10.el6
      mingetty.x86_64 0:1.08-5.el6  module-init-tools.x86_64 0:3.9-17.el6  net-tools.x86_64 0:1.60-109.el6
      nfs-utils.x86_64 1:1.2.3-15.el6  openssh-clients.x86_64 0:5.3p1-70.el6  openssh-server.x86_64 0:5.3p1-70.el6
      pam.x86_64 0:1.1.1-10.el6  passwd.x86_64 0:0.77-4.el6  pciutils.x86_64 0:3.1.4-11.el6
      procps.x86_64 0:3.2.8-21.el6  psmisc.x86_64 0:22.6-15.el6_0.1  rdate.x86_64 0:1.4-16.el6
      rpcbind.x86_64 0:0.2.0-8.el6  sed.x86_64 0:4.2.1-7.el6  setup.noarch 0:2.8.14-13.el6
      shadow-utils.x86_64 2:4.1.4.2-13.el6  sl-release.x86_64 0:6.2-1.1  strace.x86_64 0:4.5.19-1.10.el6
      tar.x86_64 2:1.23-3.el6  tcp_wrappers.x86_64 0:7.6-57.el6  tzdata.noarch 0:2012b-3.el6
      udev.x86_64 0:147-2.40.el6  upstart.x86_64 0:0.6.5-10.el6  util-linux-ng.x86_64 0:2.17.2-12.4.el6
      vim-minimal.x86_64 2:7.2.411-1.6.el6  which.x86_64 0:2.19-6.el6  words.noarch 0:3.0-17.el6
      zlib.x86_64 0:1.2.3-27.el6
    Dependency Installed:
      MAKEDEV.x86_64 0:3.24-6.el6  audit-libs.x86_64 0:2.1.3-3.el6  binutils.x86_64 0:2.20.51.0.2-5.28.el6
      bzip2-libs.x86_64 0:1.0.5-7.el6_0  ca-certificates.noarch 0:2010.63-3.el6_1.5  coreutils-libs.x86_64 0:8.4-16.el6
      cpio.x86_64 0:2.10-9.el6  cracklib.x86_64 0:2.8.16-4.el6  cracklib-dicts.x86_64 0:2.8.16-4.el6
      cronie-noanacron.x86_64 0:1.4.4-7.el6  cyrus-sasl-lib.x86_64 0:2.1.23-13.el6  db4.x86_64 0:4.7.25-16.el6
      dbus-libs.x86_64 1:1.2.24-5.el6_1  dhcp-common.x86_64 12:4.1.1-25.P1.el6_2.1  e2fsprogs-libs.x86_64 0:1.41.12-11.el6
      expat.x86_64 0:2.0.1-9.1.el6  fipscheck.x86_64 0:1.2.0-7.el6  fipscheck-lib.x86_64 0:1.2.0-7.el6
      gamin.x86_64 0:0.1.10-9.el6  gdbm.x86_64 0:1.8.0-36.el6  glib2.x86_64 0:2.22.5-6.el6
      glibc.x86_64 0:2.12-1.47.el6_2.9  glibc-common.x86_64 0:2.12-1.47.el6_2.9  gmp.x86_64 0:4.3.1-7.el6
      groff.x86_64 0:1.18.1.4-21.el6  hwdata.noarch 0:0.233-7.6.el6  info.x86_64 0:4.13a-8.el6
      iptables.x86_64 0:1.4.7-4.el6  keyutils-libs.x86_64 0:1.4-3.el6  krb5-libs.x86_64 0:1.9-22.el6_2.1
      libacl.x86_64 0:2.2.49-6.el6  libattr.x86_64 0:2.4.44-7.el6  libblkid.x86_64 0:2.17.2-12.4.el6
      libcap.x86_64 0:2.16-5.5.el6  libcap-ng.x86_64 0:0.6.4-3.el6_0.1  libcom_err.x86_64 0:1.41.12-11.el6
      libedit.x86_64 0:2.11-4.20080712cvs.1.el6  libevent.x86_64 0:1.4.13-1.el6  libffi.x86_64 0:3.0.5-3.2.el6
      libgcc.x86_64 0:4.4.6-3.el6  libgssglue.x86_64 0:0.1-11.el6  libidn.x86_64 0:1.18-2.el6
      libnih.x86_64 0:1.0.1-7.el6  libselinux.x86_64 0:2.0.94-5.2.el6  libsepol.x86_64 0:2.0.41-4.el6
      libss.x86_64 0:1.41.12-11.el6  libstdc++.x86_64 0:4.4.6-3.el6  libtirpc.x86_64 0:0.2.1-5.el6
      libusb.x86_64 0:0.1.12-23.el6  libuser.x86_64 0:0.56.13-4.el6_0.1  libutempter.x86_64 0:1.1.5-4.1.el6
      libuuid.x86_64 0:2.17.2-12.4.el6  logrotate.x86_64 0:3.7.8-12.el6_0.1  ncurses.x86_64 0:5.7-3.20090208.el6
      ncurses-base.x86_64 0:5.7-3.20090208.el6  ncurses-libs.x86_64 0:5.7-3.20090208.el6  nfs-utils-lib.x86_64 0:1.1.5-4.el6
      nspr.x86_64 0:4.8.9-3.el6_2  nss.x86_64 0:3.13.1-7.el6_2  nss-softokn.x86_64 0:3.12.9-11.el6
      nss-softokn-freebl.x86_64 0:3.12.9-11.el6  nss-sysinit.x86_64 0:3.13.1-7.el6_2  nss-util.x86_64 0:3.13.1-3.el6_2
      openldap.x86_64 0:2.4.23-20.el6  openssh.x86_64 0:5.3p1-70.el6  openssl.x86_64 0:1.0.0-20.el6_2.3
      pciutils-libs.x86_64 0:3.1.4-11.el6  pcre.x86_64 0:7.8-3.1.el6  popt.x86_64 0:1.13-7.el6
      python.x86_64 0:2.6.6-29.el6  python-libs.x86_64 0:2.6.6-29.el6  readline.x86_64 0:6.0-3.el6
      rsyslog.x86_64 0:4.6.2-12.el6  sqlite.x86_64 0:3.6.20-1.el6  ssmtp.x86_64 0:2.61-15.el6
      sysvinit-tools.x86_64 0:2.87-4.dsf.el6  tcp_wrappers-libs.x86_64 0:7.6-57.el6
    Complete!
    Creating default fstab
    Creating SSH host keys
    Setting root password...
    Changing password for user root.
    New password:
    Retype new password:
    passwd: all authentication tokens updated successfully.
    Done.

Notice that I created the chroot in /var/chroots/sl6.2. In general, you can put the chroot environment anywhere you want, as long as the file system has enough capacity. For this article, I will use /var for storing the chroots and the VNFS images, so be careful that /var has enough capacity (some people and some distributions create a separate partition for /var). I recommend using a meaningful name for the specific chroot, which in my case is sl6.2, short for Scientific Linux 6.2 (sorry, I’m a bit uncreative). You can also see how big the chroot is:

    [root@test1 ~]# du -s /var/chroots/sl6.2/
    371524  /var/chroots/sl6.2/

So, the chroot is not very large at all: du reports sizes in 1KB blocks by default, so this is roughly 363MB. As part of the mkchroot script, you are prompted for the password of the root account inside the chroot environment. I recommend using a password that is different from the root password on the master node.
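Since the chroot and the VNFS images both live under /var here, it can be worth checking free space before you build. This is a minimal sketch with a hypothetical function name; it relies on the POSIX output format of df (-P), where the fourth column of the second line is the available space, reported in 1KB blocks with -k.

```shell
#!/bin/sh
# Sketch: succeed if the file system holding DIR has at least NEED_KB
# kilobytes available. Uses POSIX df output (-P), where the fourth column
# of the second line is the available space in 1KB blocks (-k).
has_free_kb() {
    local dir="$1" need_kb="$2" avail_kb
    avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
    [ -n "$avail_kb" ] && [ "$avail_kb" -ge "$need_kb" ]
}
```

For example, has_free_kb /var $((2 * 371524)) || echo 'not enough space on /var' asks for twice the size of this chroot; the factor of two is an arbitrary safety margin for the VNFS image and temporary files, not a Warewulf requirement.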

The next task is to convert the chroot into a VNFS that Warewulf can use. Fortunately, Warewulf has a nice utility to do this named wwvnfs.

    [root@localhost ~]# wwvnfs --chroot /var/chroots/sl6.2
    Using 'sl6.2' as the VNFS name
    Creating VNFS image for sl6.2
    Building template VNFS image
    Excluding files from VNFS
    Building and compressing the final image
    Cleaning temporary files
    Importing new VNFS Object: sl6.2
    Done.

You just tell the wwvnfs command which chroot you want to convert into a VNFS, and it does everything for you. The cool part of the command is that it imports the VNFS into MySQL and defines it as a VNFS that can be used for booting nodes. In this case, the image is called sl6.2.

The next task is to create what is called a bootstrap image. During the PXE boot process, the compute node downloads a bootstrap image from the master node with tftp. This image contains a Linux kernel, device drivers, and a small set of programs used to complete the rest of the provisioning. Once the bootstrap image boots the node, it calls back to the master node, gets the VNFS, and provisions the file system. Then it calls init on the new file system to bring up the desired Linux system. A key to Warewulf is that the same kernel is used in the bootstrap as in the provisioned system, making the whole boot process simpler. At the same time, it is important to make sure the bootstrap kernel and the device drivers in the bootstrap image match those in the VNFS. To facilitate this, Warewulf can create a bootstrap image from a specific kernel, customizing it for use with a specific VNFS. The method is encapsulated in the command wwbootstrap, which creates these bootstrap images, bundling the device drivers, firmware, and provisioning software with the kernel.

Another cool feature of Warewulf that results from the bootstrap concept is that you can create bootstrap images using kernels installed on the master node or kernels present in a chroot. Although this might sound unimportant, it allows you to create a special kernel for the compute nodes in the chroot environment and boot the nodes with it, without having to change the master node. It also means you can provision a Linux distribution different from the one on the master node. For example, you can easily create a chroot using Scientific Linux 5.8 on a Scientific Linux 6.2 master node, or, even more interesting, you can provision a Debian compute node from a Scientific Linux master node. However, this is a subject for another article.
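Whether the kernel comes from the master node or from a chroot, its modules need to be present for the bootstrap to pick up drivers. The sketch below is a hypothetical pre-flight check; the assumption that wwbootstrap draws drivers from lib/modules/<kernel version> is inferred from its “Number of drivers included” output rather than taken from Warewulf documentation.

```shell
#!/bin/sh
# Sketch: succeed if ROOT/lib/modules/KVER exists, where ROOT is "/" for a
# kernel installed on the master node or a chroot such as /var/chroots/sl6.2.
# KVER defaults to the running kernel (uname -r).
kernel_modules_present() {
    local root="${1:-/}" kver="${2:-$(uname -r)}"
    # ${root%/} drops a trailing slash so "/" does not become "//lib/..."
    if [ -d "${root%/}/lib/modules/$kver" ]; then
        return 0
    fi
    echo "no modules for kernel $kver under ${root%/}/lib/modules" >&2
    return 1
}
```

On the master node, kernel_modules_present / "$(uname -r)" && wwbootstrap "$(uname -r)" runs wwbootstrap only if /lib/modules/$(uname -r) exists. For a chroot kernel, pass the chroot path (e.g., /var/chroots/sl6.2) as the first argument; note that the package list in Listing 6 shows no kernel package in this particular chroot.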

To return to the test system, create a bootstrap image to match the VNFS. The syntax for the wwbootstrap command is simple:

[root@test1 ~]# wwbootstrap `uname -r`
Number of drivers included in bootstrap: 458
Number of firmware images included in bootstrap: 47
Building and compressing bootstrap
Creating new Bootstrap Object: 2.6.32-220.el6.x86_64
Imported 2.6.32-220.el6.x86_64 into a new object
Done.

This command creates a bootstrap image that matches the kernel running on the master node, which is the same kernel used in the VNFS. To make things easy, I passed it the kernel version reported by the uname -r command. The wwbootstrap command also imports the bootstrap image into MySQL and makes it available for booting nodes.

Step 6 – Booting Compute Nodes

This final step boots the compute nodes. To get ready for it, you need to restart a few services. Recall that Warewulf uses tftp, httpd, and MySQL to manage provisioning and boot nodes. As a precaution before booting the first compute node, restart these services so they pick up their latest configuration files (Listing 7). On this system, tftp runs under xinetd, which is why xinetd is restarted; the listing also stops iptables so the firewall does not block the compute nodes' DHCP, TFTP, and HTTP requests.

Listing 7: Restarting Services

[root@test1 ~]# service iptables stop
iptables: Flushing firewall rules: [ OK ]
iptables: Setting chains to policy ACCEPT: filter [ OK ]
iptables: Unloading modules: [ OK ]
[root@test1 ~]#
[root@test1 ~]# service mysqld restart
Stopping mysqld: [ OK ]
Starting mysqld: [ OK ]
[root@test1 ~]#
[root@test1 laytonjb]# service httpd restart
Stopping httpd: [ OK ]
Starting httpd: [Sun Apr 15 12:47:28 2012] [warn] module perl_module is already loaded, skipping
httpd: apr_sockaddr_info_get() failed for test1
httpd: Could not reliably determine the server's fully qualified domain name, using 127.0.0.1 for ServerName
[ OK ]
[root@test1 ~]#
[root@test1 ~]# /etc/init.d/xinetd restart
Stopping xinetd: [ OK ]
Starting xinetd: [ OK ]

Although not strictly necessary, the next task is to have Warewulf rebuild its DHCP and PXE configurations:

[root@test1 ~]# wwsh dhcp update
Rebuilding the DHCP configuration
Done.
[root@test1 ~]#
[root@test1 ~]# wwsh pxe update
[root@test1 ~]#
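If you rebuild or add nodes often, the service restarts from Listing 7 and the two wwsh updates can be collected into one small script. This is a minimal sketch, assuming the SL6-era SysV service command and the service names used in Listing 7; the function names maybe_run and prepare_provisioning and the DRY_RUN variable are hypothetical conveniences, and with DRY_RUN=1 the commands are only printed, not executed.

```shell
# Hypothetical wrapper: run a command, or just print it when DRY_RUN=1.
maybe_run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: repeat the steps from Listing 7, then regenerate
# the DHCP and PXE configuration from the Warewulf database.
prepare_provisioning() {
    # Stop the firewall so it does not block DHCP, TFTP, and HTTP from nodes.
    maybe_run service iptables stop || return 1
    # Restart MySQL, the web server, and xinetd (which runs tftp here).
    for pp_svc in mysqld httpd xinetd; do
        maybe_run service "$pp_svc" restart || return 1
    done
    maybe_run wwsh dhcp update || return 1
    maybe_run wwsh pxe update
}
```

Running DRY_RUN=1 prepare_provisioning first lets you review the six commands before running them for real as root.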

At this point, you are ready to start booting nodes, but you still need to make sure a few things are in place on the compute node. First, make sure PXE (network) boot comes first in the compute node's boot order (check the BIOS). Next, make sure the compute nodes can boot without a keyboard or mouse attached and that they are plugged in. Finally, be sure the compute node(s) use a wired network connection rather than a wireless one.

Now you can boot some nodes. The next step is either to define the node(s) you are going to boot in the Warewulf database or to have Warewulf scan, with the wwnodescan command, for nodes that are booting and requesting an address over DHCP. I chose the first method because I knew exactly which node I was going to boot first, along with the MAC address of the NIC it would be using. The following commands seem a little long, but I’ll walk you through the details:

[root@test1 ~]# wwsh
Warewulf> node new n0001 --netdev=eth0 --hwaddr=00:00:00:00:00:00 --ipaddr=10.1.0.1 --groups=newnodes
Warewulf> provision set --lookup groups newnodes --vnfs=sl6.2 --bootstrap=2.6.32-220.el6.x86_64
Are you sure you want to make the following changes to 1 node(s):
SET: BOOTSTRAP = 2.6.32-220.el6.x86_64
SET: VNFS = sl6.2
Yes/No> y
Warewulf> quit

In this example, I’m using the wwsh command to interact with the Warewulf database. The first command defines a new node named n0001 and sets its properties. The --netdev option says the node will use its eth0 network interface for booting. The --hwaddr option gives the MAC address of that interface (I have used all zeros for this example, but you would fill in the real MAC address here). The --ipaddr option sets the IP address of node n0001 to 10.1.0.1. With the final option, --groups, I added the node to a group called newnodes, but you could name the group anything you want.
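Because Warewulf recognizes a booting node by the MAC address stored with --hwaddr, a typo there means the node will not be matched when it PXE boots. A minimal sanity check, sketched under the assumption that you write MACs as six colon-separated hex pairs; valid_mac is a hypothetical helper that also rejects the all-zeros placeholder used above. On a running Linux system, an interface's address can be read from /sys/class/net/<device>/address (e.g., /sys/class/net/eth0/address), although for a brand-new compute node you will more likely read it from the BIOS screen or the NIC label.

```shell
# Hypothetical helper: succeed if $1 looks like a usable Ethernet MAC
# address (six colon-separated hex pairs), rejecting the all-zero placeholder.
valid_mac() {
    case $1 in
        00:00:00:00:00:00) return 1 ;;   # the example placeholder
        *[!0-9A-Fa-f:]*) return 1 ;;     # any character that is not hex or ':'
    esac
    printf '%s\n' "$1" | grep -Eqi '^([0-9a-f]{2}:){5}[0-9a-f]{2}$'
}
```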

Next, wwsh defines the provisioning properties for node n0001. The second command starts with provision set. The --lookup groups newnodes option selects the nodes to change by group, in this case newnodes (this has to match the group you used in the previous command), and --vnfs sets the VNFS for that group (here, sl6.2, which matches the VNFS I created earlier). Finally, --bootstrap sets the bootstrap image used to boot the node, which in this case is 2.6.32-220.el6.x86_64. Notice that wwsh asks you to confirm the changes to the node in the Warewulf database. This is important because, if you make a mistake, you will change the “properties” of that node and how it boots.
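Typing one node new per machine does not scale past a handful of nodes. Because wwsh also accepts a command on its own command line (as with wwsh dhcp update earlier), the two commands above can be generated for a batch of nodes. This is a minimal sketch, assuming the naming (n0001, n0002, and so on), the 10.1.0.x addressing, and the eth0 boot interface from the example; gen_node_cmds and its one-MAC-per-line input are hypothetical. It only prints the commands so you can review them first; note that provision set may still ask for confirmation when you run it.

```shell
# Hypothetical generator: read one MAC address per line on stdin and print
# a "wwsh node new" command for each, numbering nodes n0001, n0002, ...
# with addresses 10.1.0.1, 10.1.0.2, ... (only the last octet varies, so
# at most 254 nodes). One "provision set" for the group is printed last.
gen_node_cmds() {
    gnc_group=$1 gnc_vnfs=$2 gnc_bootstrap=$3
    gnc_i=0
    # The "|| [ -n ... ]" also handles a final line without a newline.
    while read -r gnc_mac || [ -n "$gnc_mac" ]; do
        [ -n "$gnc_mac" ] || continue          # skip blank lines
        gnc_i=$((gnc_i + 1))
        if [ "$gnc_i" -gt 254 ]; then
            echo "more than 254 nodes; adjust the address scheme" >&2
            return 1
        fi
        printf 'wwsh node new n%04d --netdev=eth0 --hwaddr=%s --ipaddr=10.1.0.%d --groups=%s\n' \
            "$gnc_i" "$gnc_mac" "$gnc_i" "$gnc_group"
    done
    printf 'wwsh provision set --lookup groups %s --vnfs=%s --bootstrap=%s\n' \
        "$gnc_group" "$gnc_vnfs" "$gnc_bootstrap"
}
```

For example, gen_node_cmds newnodes sl6.2 2.6.32-220.el6.x86_64 < macs.txt (macs.txt being a hypothetical file of MAC addresses) prints one node new line per address plus the provision set line.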

Now that you have defined the VNFS and the bootstrap for the group, you are ready to power up your compute node! For my setup, I plugged a monitor, keyboard, and mouse into the compute node and turned it on. Because Warewulf is tied in to DHCP and is monitoring for new nodes, it will recognize the compute node with the MAC address defined in the Warewulf database via wwsh and send it the appropriate bootstrap image; then, the boot process starts. To make sure everything works, I always like to log in to the compute node from the master node:

[root@test1 ~]# ssh 10.1.0.1
The authenticity of host '10.1.0.1 (10.1.0.1)' can't be established.
RSA key fingerprint is a6:8e:89:6e:b8:7b:7a:98:d2:dc:f4:aa:ae:2d:62:c4.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '10.1.0.1' (RSA) to the list of known hosts.
root@10.1.0.1's password:
Last login: Sun Apr 29 19:16:14 2012
-bash-4.1# ls -s
total 0

And The Eagle has Landed – or, in this case, Warewulf is working.
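Once more than a few nodes are booting, logging in to each one by hand gets tedious. A minimal reachability check, sketched with standard OpenSSH options; check_nodes is a hypothetical helper, and the 5-second timeout is an arbitrary choice. Note that the login above prompted for a password and a host-key confirmation: BatchMode=yes makes ssh fail instead of prompting, so this check reports a node as UP only once root can log in without a password (e.g., with SSH keys) and the node's host key is already known.

```shell
# Hypothetical helper: try a trivial remote command on each address and
# report UP or DOWN. BatchMode=yes makes ssh fail rather than prompt for a
# password or host-key confirmation; ConnectTimeout bounds the wait.
check_nodes() {
    cn_down=0
    for cn_ip in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$cn_ip" true </dev/null >/dev/null 2>&1; then
            echo "UP   $cn_ip"
        else
            echo "DOWN $cn_ip"
            cn_down=$((cn_down + 1))
        fi
    done
    [ "$cn_down" -eq 0 ]   # non-zero exit status if any node is down
}
```

For example, check_nodes 10.1.0.1 10.1.0.2 prints one status line per node.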

Summary

I know this might seem like a lot of work, but the vast majority of it has to be done only once, and only on the master node. Besides the packages needed by Warewulf, the master node needed some tools for building and managing applications. Then, Warewulf itself was installed in the form of five RPMs (not too difficult). Next, the master node was configured so everything worked together, at which point Warewulf was ready to be configured.

Within Warewulf, you built the VNFS and bootstrap images for booting nodes and ran a few wwsh commands to make sure everything was ready to boot. Then, you defined the first compute node in Warewulf, including its VNFS and bootstrap image, and booted it. At this point, you can start booting more compute nodes if you like.

Now you can boot compute nodes into a stateless configuration that uses a ramdisk to store the image. A common complaint about booting into a ramdisk is that the OS image consumes memory; however, log in to a compute node and take a look at how much memory is actually used. It is relatively little, and, believe it or not, memory is pretty cheap these days, so using a couple hundred megabytes for an OS image to gain stateless capabilities is a very nice trade-off.
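To put the trade-off in numbers, you can compare the memory the OS image takes with the node's total memory. A minimal sketch: mem_fraction is a hypothetical helper that reads MemTotal from /proc/meminfo (reported in kB) unless a total in MB is passed explicitly; the image size in MB is something you supply from your own measurement on the node.

```shell
# Hypothetical helper: print the percentage of total RAM taken by an OS
# image of IMAGE_MB megabytes. TOTAL_MB is optional; if omitted, it is
# derived from MemTotal in /proc/meminfo (which is given in kB).
mem_fraction() {
    mf_image_mb=$1
    mf_total_mb=${2:-$(awk '/^MemTotal:/ { printf "%d", $2 / 1024 }' /proc/meminfo)}
    awk -v img="$mf_image_mb" -v tot="$mf_total_mb" \
        'BEGIN { printf "%.1f%%\n", 100 * img / tot }'
}
```

With an illustrative (not measured) 200MB image on a node with 8GB of RAM, mem_fraction 200 8192 prints 2.4%.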

In the coming articles, I plan to talk about how to enhance the image you have created and start adding common cluster tools to your Warewulf cluster. Stay tuned, it’s going to be fun.