Setting Up an HPC Cluster

Setting up a real-world HPC cluster with Kickstart, SSH, Son of Grid Engine, and other free tools.

High-Performance Computing (HPC) clusters are characterized by many cores and processors, lots of memory, high-speed networking, and large data stores – all shared across many rack-mounted servers. User programs that run on a cluster are called jobs, and they are typically managed through a queueing system for optimal utilization of all available resources. An HPC cluster is made of many separate servers, called nodes, possibly filling an entire data center with dozens of power-hungry racks. HPC typically involves simulation of numerical models or analysis of data from scientific instrumentation. At the core of HPC is manageable hardware and systems software wrangled by systems programmers, which allow researchers to devote their energies to their code. This article describes a simple software stack that could be the starting point for your own HPC cluster.

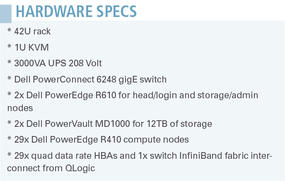

What does an HPC cluster look like? A typical cluster for a research group might contain a rack full of 1U servers (Figure 1). The cluster might be as small as four nodes, or it could fill an entire rack with equipment. (See the box titled “Hardware Specs” for more on the hardware configuration used for this article.)

Before You Begin

Before delving into the setup steps, I’ll start with a look at some planning and purchasing issues. Being a systems programmer with eyes on the code, I am often surprised at the number of real-world logistical issues in need of consideration.

Before you order the hardware, you’d better give some thought to a few basic questions. Why do you need a cluster? What are your peers doing? Is a cluster the correct solution? Have system requirements been collected and base specifications decided? Start with an initial quote from a choice vendor. From there, move on to obtain at least two other comparable competing vendor quotes. Even with comparable quotes in-hand, go the extra mile. Ask if you can run a representative job on proposed hardware in a vendor lab for benchmarking. What are the application bottlenecks, and can the budget be applied to maximize those metrics?

Before issuing a purchase order, consider the space and logistical issues. Will the hardware be assembled, cabled, and verified by the vendor? On- or off-site? Have you reserved an adequate rack footprint to house the equipment? A cluster generates excessive heat, so server room footprint placement is key. The space must have adequate cooling capacity. Are you interleaving the hottest racks in the server room? With a space decided, move on to scheduling an electrician and securing budget codes for billing. Don’t forget to put in all requests for required networking, inventory, and card/key access for primary systems administrators. No matter how much planning you do, you’re bound to be surprised by something.

Be sure to consider the backup scheme and include it in your budgeting process. Backup can double storage costs and will blow your budget if it is left for last. Always make sure you have the correct rack configuration, correct voltage PDUs, and correct plugs, with only needed systems on UPS. Make sure you acquire all software licensing and installation media ahead of time. If possible, request vendor off-site assembly and integration. The vendor must provide a properly trained, equipped, and insured delivery service for inside delivery to the designated footprint with a lift-gate truck and debris removal. A rack of equipment can literally weigh a ton. If the rack falls off the delivery truck, you do not want to be the one responsible for or underneath it. And there will be NO unscheduled deliveries directly to the server room.

The Configuration

Once you have made all the necessary plans, chosen a preferred vendor, and had the system delivered, it’s time for the on-site software installation and configuration. For something as complicated as an HPC cluster, all configurations will vary, but the example configuration described in this article will give you an idea of the kind of choices you’ll face if you try this on your own.

For this article, I assume you have some familiarity with managing and configuring Linux systems. Most of the commands mentioned in this article are documented in a relevant man page, or you can find additional information through the Red Hat Enterprise Linux [1] or CentOS [2] documentation. In particular, the Red Hat Enterprise Linux Installation Guide [3] and the section on Kickstart installations [4] are important reading.

The basic steps for getting your HPC cluster up and running are as follows:

- Create the admin node and configure it to act as an installation server for the compute nodes in the cluster. This includes configuring the system to receive PXE client connections as well as setting it up to support automated Kickstart installations.

- Boot the compute nodes one by one, connecting to the admin server and launching the installation.

- When all your nodes are all up and running, install a job queue system to get them working together as a high performance cluster.

At this point, I’ll assume you have a full rack of equipment, neatly wired (Figure 2) and plugged into power, housed in a hosting facility. Let’s call the whole system Fiji, a short and pleasant name to type. Before you go to the server room, the first step is to download and burn the CentOS install DVD media for 64-bit [5], currently version 5.6.

Once you have the media in-hand, begin by installing the admin node, which will act as a kickstart installation server to automate the install of all the compute nodes that fill out the rest of the cluster. Even though this article is targeted toward installing a cluster on enterprise-grade rack hardware, there is no reason one cannot follow along at home with a few beige boxes and an 10Mb hub, as long as the servers are capable of booting from the network with PXE [6]. PXE (which stands for Preboot eXecution Environment”) lets you install and configure the compute nodes without having to stop and boot each one from a CD.

When deploying a multi-user cluster, where users log in via SSH, VNC or NX, it is also a good idea to have a separate head node for the interactive logins. For a small to medium-sized cluster, it is okay to combine what is often called the storage node with the admin node. In this article, I assume the cluster consists of one full rack of equipment, but you can extend the techniques described here to replicate this process N times for a larger cluster.

The admin/storage node and the head/login node both have multiple Ethernet interfaces. The first interface (eth0) connects to the private, internal gigabit switch and 192.168.1.0 network, where all internal communication between nodes and management interfaces will occur. The second Ethernet interface (eth1) will connect to the public network for remote user and admin login. This is important to note because, in this case, the baseboard management controller (BMC/DRAC) boards, which are controllable via IPMI [7], are configured to share the first Ethernet port. This means you might see two different MAC addresses and two different IPs on the eth0 port; you want both facing internally.

Begin by hooking up the keyboard-video-mouse (KVM) switch to the admin node and powering on. Check BIOS settings, set a password, and choose the boot order. Then configure the BMC, setting an admin username and password and configuring an internal IP address for the device.

Everything should be redundant on the admin/storage and head/login nodes, including the root drives, which will hold the OS. Configure a RAID 1 mirror for the internal root drive through the RAID BIOS.

At this point, you also need to configure any large external data storage enclosures. Our lab has 10TB, which we configure as RAID 6 plus one hot spare. I recommend letting storage fully initialize before continuing. After full RAID initialization, power off the node and unplug the external RAID enclosure before the OS install because the OS install partitioner can have difficulty with very big disks.

Setting Up the Admin Node

The first step is to set up the admin node that will also act as a storage and Kickstart installation server for the cluster. To get started installing the admin node, insert the CentOS installation DVD into the system that will act as the admin node. Boot the installer and select mostly all defaults. The only steps that differ from the defaults are setting a bootloader password, configuring the two network interfaces, and switching SELinux to permissive mode. In this example, I will call the admin/storage node fijistor with internal IP 192.168.1.2. For the package selection, do not change the defaults. Note that the cluster packages in the CentOS anaconda installer do not refer to HPC clustering in this context.

Once the installer completes, shut down the system, hook the external RAID back up, remove the “boot from DVD” option from the BIOS and power on. Admire your fresh login screen. Note that you can accomplish most of the rest of this build via remote login through SSH. The only time you need to be physically in the server room is when you are messing with the BIOS and powering the nodes for the first time (Figure 3).

Because SSH is the main entry to this system, I need to lock down sshd. Install and test a personal SSH key for the root user, then limit admin node logins to admin group-only and key-only. You only need to add a few lines to the sshd configuration file /etc/ssh/sshd_config:

PermitRootLogin without‑password AllowGroups fijiadmin

Then, add the admin group and restart sshd:

# groupadd fijiadmin # usermod ‑G fijiadmin root # service sshd restart

Generate an SSH key for the fijistor root account, which you can then provide to all compute nodes:

# ssh‑keygen # just hit enter, no passphrase # cp /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys.fijistor # chmod ‑R go= /root/.ssh

If you don’t plan to access the admin node through the console GUI, you can disable the graphical login by commenting out the relevant line in the/etc/inittab file. This will free up some memory:

# Run xdm in runlevel 5 # x:5:respawn:/etc/X11/prefdm ‑nodaemon

Now perform a first full-system package update – installing the development tools – and reboot into the new kernel:

# yum ‑y update # yum ‑y install @development‑tools # reboot

It is important to keep system logs, so don't forget to extend the retention time for logfiles by changing a few settings in the /etc/logrotate.conf file:

# keep 4 weeks worth of backlogs #rotate 4 rotate 999

Enable network forwarding on the admin node, so that all the exec nodes can resolve public services when needed. To set up IP forwarding on the admin node, add the following to the /etc/sysctl.conf file:

net.ipv4.ip_forward = 1

The next step is to tweak the iptables firewall settings, or nothing will get through the firewall. In this case, the speed at which SSH may be attempted is limited, to curtail SSH brute forcing. All traffic from the internal cluster network is allowed. Dropped packets are logged for debug purposes – a setting you can always comment out and disable if you need to later. Finally, all outbound traffic from the internal network is put through NAT. Listing 1 has some critical settings for the /etc/sysconfig/iptables file.

Listing 1: /etc/sysconfig/iptables

*filter :INPUT ACCEPT [0:0] :FORWARD ACCEPT [0:0] :OUTPUT ACCEPT [0:0] :RH-Firewall-1-INPUT - [0:0] -A INPUT -j RH-Firewall-1-INPUT -A FORWARD -j RH-Firewall-1-INPUT -A RH-Firewall-1-INPUT -i lo -j ACCEPT -A RH-Firewall-1-INPUT -p icmp --icmp-type any -j ACCEPT -A RH-Firewall-1-INPUT -p 50 -j ACCEPT -A RH-Firewall-1-INPUT -p 51 -j ACCEPT -A RH-Firewall-1-INPUT -p udp --dport 5353 -d 224.0.0.251 -j ACCEPT #-A RH-Firewall-1-INPUT -p udp -m udp --dport 631 -j ACCEPT #-A RH-Firewall-1-INPUT -p tcp -m tcp --dport 631 -j ACCEPT -A RH-Firewall-1-INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT ### begin ssh #-A RH-Firewall-1-INPUT -m state --state NEW -m tcp -p tcp --dport 22 -j ACCEPT -A RH-Firewall-1-INPUT -p tcp --dport 22 --syn -s 192.168.1.0/255.255.255.0 -j ACCEPT -A RH-Firewall-1-INPUT -p tcp --dport 22 --syn -m limit --limit 6/m --limit-burst 5 -j ACCEPT ### end ssh ### begin cluster -A RH-Firewall-1-INPUT -i eth0 -s 192.168.1.0/255.255.255.0 -j ACCEPT -A RH-Firewall-1-INPUT -i eth0 -d 255.255.255.255 -j ACCEPT -A RH-Firewall-1-INPUT -i eth0 -p udp --dport 67:68 -j ACCEPT -A RH-Firewall-1-INPUT -i eth0 -p tcp --dport 67:68 -j ACCEPT ### end cluster ### begin log -A RH-Firewall-1-INPUT -m limit --limit 10/second -j LOG ### end log -A RH-Firewall-1-INPUT -j REJECT --reject-with icmp-host-prohibited COMMIT ### begin nat *nat :PREROUTING ACCEPT [0:0] :POSTROUTING ACCEPT [0:0] :OUTPUT ACCEPT [0:0] -A POSTROUTING -o eth1 -j MASQUERADE #-A PREROUTING -i eth1 -p tcp --dport 2222 -j DNAT --to 192.168.1.200:22 COMMIT ### end nat

Now reload the kernel sysctl parameters and restart the firewall:

# service iptables restart

The next step in the process is to format the external 10TB RAID storage on /dev/sdx, obviously substituting in the correct device for sdx. If your configuration will not include any additional external storage, you can skip this step. Notice that you can overwrite the pre-existing partition information on the device and then use LVM directly (Listing 2). I also specify the metadata size to account for 128KB alignment. The pvs command will reveal that requesting 250KB actually results in a 256KB setting.

Listing 2: Configuring Partitions

# cat /proc/partitions # dd if=/dev/urandom of=/dev/sdx bs=512 count=64 # pvcreate --metadatasize 250k /dev/sdx # pvs -o pe_start # vgcreate RaidVolGroup00 /dev/sdx # lvcreate --extents 100%VG --name RaidLogVol00 RaidVolGroup00 # mkfs -t ext3 -E stride=32 -m 0 -O dir_index,filetype,has_journal,sparse_super /dev/RaidVolGroup00/RaidLogVol00 # echo "/dev/RaidVolGroup00/RaidLogVol00 /data0 ext3 noatime 0 0" >>/etc/fstab # mkdir /data0 ; mount /data0 ; df -h

Creating a File Repository

With storage ready, it is time to build a file repository, which will feed all system Yum updates and Kickstart-based node installs. Create a directory structure with the base installer packages from the CentOS install DVD and then pull all the latest updates from a local rsync file mirror (Listing 3). I strongly urge you to find your own local mirror [8] that provides rsync. The commands in Listing 3 also pull the EPEL repository [9] for some additional packages.

Listing 3: Setting Up the Install System

# mkdir -p /data0/repo/CentOS/5.6/iso/x86_64

# ln -s /data0/repo /repo

# cd /repo/CentOS

# ln -s 5.6 5

# wget http://mirrors.gigenet.com/centos/RPM-GPG-KEY-CentOS-5

# cd /repo/CentOS/5.6/iso/x86_64

# cat /dev/dvd > CentOS-5.6-x86_64-bin-DVD-1of2.iso

# wget http://mirror.nic.uoregon.edu/centos/5.6/isos/x86_64/sha1sum.txt

# sha1sum -c sha1sum.txt

# mount -o loop CentOS-5.6-x86_64-bin-DVD-1of2.iso /mnt

# mkdir -p /repo/CentOS/5.6/os/x86_64

# rsync -avP /mnt/CentOS /mnt/repodata /repo/CentOS/5.6/os/

# mkdir -p /repo/CentOS/5.6/updates/x86_64

# rsync --exclude='debug' --exclude='*debug info*' --exclude='repoview' \

--exclude='headers' -irtCO --delete-excluded --delete \

rsync://rsync.gtlib.gatech.edu/centos/5.6/updates/x86_64 /repo/CentOS/5.6/updates/

# mkdir -p /repo/epel/5/x86_64

# cd /repo/epel

# wget http://download.fedora.redhat.com/pub/epel/RPM-GPG-KEY-EPEL

# rsync --exclude='debug' --exclude='*debuginfo*' --exclude='repoview' --exclude='headers' \

-irtCO --delete-excluded --delete rsync://archive.linux.duke.edu/fedora-epel/5/x86_64 /repo/epel/5/

# mv /etc/yum.repos.d/* /usr/src/

# cat /dev/null >/etc/yum.repos.d/CentOS-Base.repo

# cat /dev/null >/etc/yum.repos.d/CentOS-Media.repoNow create a repo configuration for yum in the /etc/yum.repos.d/fiji.repo file (Listing 4). Then create a script to update the file repo (Listing 5).

Listing 4: /etc/yum.repos.d/fiji.repo

### CentOS base from installation media [base] name=CentOS-$releasever - Base #mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=os #baseurl=http://mirror.centos.org/centos/$releasever/os/$basearch/ baseurl=file:///repo/CentOS/$releasever/os/$basearch/ gpgcheck=1 gpgkey=http://mirror.centos.org/centos/RPM-GPG-KEY-CentOS-5 protect=1 ### CentOS updates via rsync mirror [update] name=CentOS-$releasever - Updates #mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=updates #baseurl=http://mirror.centos.org/centos/$releasever/updates/$basearch/ baseurl=file:///repo/CentOS/$releasever/updates/$basearch/ gpgcheck=1 gpgkey=http://mirror.centos.org/centos/RPM-GPG-KEY-CentOS-5 protect=1 ### Extra Packages for Enterprise Linux (EPEL) [epel] name=Extra Packages for Enterprise Linux 5 - $basearch #baseurl=http://download.fedora.redhat.com/pub/epel/5/$basearch #mirrorlist=http://mirrors.fedoraproject.org/mirrorlist?repo=epel-5&arch=$basearch baseurl=file:///repo/epel/$releasever/$basearch enabled=1 protect=0 failovermethod=priority gpgcheck=1 gpgkey=http://download.fedora.redhat.com/pub/epel/RPM-GPG-KEY-EPEL

Listing 5: /root/bin/update_repo.sh

#!/bin/bash

OPTS='-vrtCO --delete --delete-excluded \

--exclude=i386* \

--exclude=debug \

--exclude=*debuginfo* \

--exclude=repoview \

--exclude=headers'

rsync $OPTS rsync://rsync.gtlib.gatech.edu/centos/5.6/updates/x86_64 /repo/CentOS/5.6/updates/

rsync $OPTS rsync://archive.linux.duke.edu/fedora-epel/5/x86_64 /repo/epel/5/Now that I have a file repository for all RPMs, I can share it out via NFS, along with the /data0 and /usr/global/ directories. On this cluster, user home directories happen to be in the /data0/home/directory. I will use /usr/global/ just like /usr/local/ except it is mounted on all nodes via NFS. The script global.sh (Listing 6) is symlinked on all nodes to /etc/profile.d/global.sh to set user environment variables.

Listing 6: /usr/global/etc/profile.d/global.sh

# Grid Engine export SGE_ROOT=/usr/global/sge . /usr/global/sge/default/common/settings.sh alias rsh='ssh' alias qstat='qstat -u "*"' # Intel compilers . /usr/global/intel/Compiler/11.1/064/bin/iccvars.sh intel64 . /usr/global/intel/Compiler/11.1/064/bin/ifortvars.sh intel64 . /usr/global/intel/Compiler/11.1/064/mkl/tools/environment/mklvars64.sh export INTEL_LICENSE_FILE=/usr/global/intel/licenses:$INTEL_LICENSE_FILE

The NFS /etc/exports file describes which directories are exported to remote hosts (Listing 7).

Listing 7: /etc/exports

/data0 fiji(rw,async,no_root_squash) 192.168.1.0/255.255.255.0(rw,async,no_root_squash) /usr/global 192.168.1.0/255.255.255.0(rw,async,no_root_squash) /kickstart 192.168.1.0/255.255.255.0(ro) /repo 192.168.1.0/255.255.255.0(ro)

When the configuration is complete, start the NFS server:

# chkconfig nfs on # service nfs start

Enable remote syslog logging from nodes by adding the following options in the /etc/sysconfig/syslog file:

SYSLOGD_OPTIONS="‑m 0 ‑r ‑s fiji.baz.edu"

Then restart syslog:

# service syslog restart

All node hostnames and IPs for the cluster should be listed in the /etc/hosts file (Listing 8).

Listing 8: /etc/hosts

127.0.0.1 localhost.localdomain localhost ::1 localhost6.localdomain6 localhost6 192.168.1.1 fiji.baz.edu fiji 192.168.1.2 fijiistor.baz.edu fijistor 192.168.100.9 ib 192.168.1.101 node01 192.168.1.102 node02 192.168.1.103 node03 192.168.1.104 node04 192.168.1.200 fiji-bmc 192.168.1.201 node01-bmc 192.168.1.202 node02-bmc 192.168.1.203 node03-bmc 192.168.1.204 node04-bmc

Don’t forget to enable network time synchronization for consistent logging:

# ntpdate ‑u ‑b ‑s 1.centos.pool.ntp.org # hwclock ‑‑utc ‑‑systohc # chkconfig ntpd on ; service ntpd start

Starting Kickstart

The next step is to install all the services needed for a Kickstart installation server (Listing 9) and build a file structure for network booting via PXE, TFTP, and NFS. This step will allow a new compute node to boot via the network in a manner similar to booting from a CD or DVD.

Listing 9: Setting Up Kickstart

# yum install dhcp xinetd tftp tftp-server syslinux # mkdir -p /usr/global/tftpboot ; ln -s /usr/global/tftpboot /tftpboot # mkdir -p /tftpboot/pxelinux.cfg /tftpboot/images/centos/x86_64/5.6 # cd /tftpboot/images/centos/x86_64/ ; ln -s 5.6 5 # rsync -avP /mnt/isolinux/initrd.img /mnt/isolinux/vmlinuz /tftpboot/images/centos/x86_64/5.6/ # cd /usr/lib/syslinux # rsync -avP chain.c32 mboot.c32 memdisk menu.c32 pxelinux.0 /tftpboot/ # mkdir -p /usr/global/kickstart ; ln -s /usr/global/kickstart /kickstart ; cd /kickstart # mkdir -p /kickstart/fiji/etc ; cd /kickstart/fiji/etc # mkdir -p rc.d/init.d profile.d ssh yum/pluginconf.d yum.repos.d # touch rescue.cfg ks-fiji.cfg ; ln -s ks-fiji.cfg ks.cfg

First, edit the PXE menu in the /tftpboot/pxelinux.cfg/default file (Listing 10).

Listing 10: /tftpboot/pxelinux.cfg/default

DEFAULT menu.c32 PROMPT 0 TIMEOUT 100 ONTIMEOUT local NOESCAPE 1 ALLOWOPTIONS 0 MENU TITLE Fiji Cluster PXE Menu LABEL local MENU LABEL Boot local hard drive LOCALBOOT 0 LABEL centos MENU LABEL CentOS 5 Fiji Node Install KERNEL images/centos/x86_64/5/vmlinuz APPEND ks=nfs:192.168.1.2:/kickstart/ks.cfg initrd=images/centos/x86_64/5/initrd.img ramdisk_size=100000 ksdevice=eth0 ip=dhcp LABEL rescue MENU PASSWD $4$XXXXXX MENU LABEL CentOS 5 Rescue KERNEL images/centos/x86_64/5/vmlinuz APPEND initrd=images/centos/x86_64/5/initrd.img ramdisk_size=10000 text rescue ks=nfs:192.168.1.2:/kickstart/rescue.cfg

Next, generate your own password to replace the stub in Listing 10. SHA-1 encrypted passwords start with $4$:

# sha1pass password $4$gS+7mITP$y3s1L4Z+5Udp2vlZHChNXd8lhAg$

Copy in all the files that will sync to all nodes:

# cp /etc/hosts /kickstart/fiji/etc/ # cp /root/.ssh/id_rsa.pub /kickstart/fiji/authorized_keys # cp /etc/yum.repos.d/*.repo /kickstart/fiji/etc/yum.repos.d/

Then you can proceed to the next step in the process, which is to edit the /kickstart/fiji/etc/ntp.conf file and change the server to the admin node:

#server 0.centos.pool.ntp.org #server 1.centos.pool.ntp.org #server 2.centos.pool.ntp.org server 192.168.1.2

When you get to booting the compute nodes, you will be able to paste each MAC addresses into the /etc/dhcpd.conf file (Listing 11) and then restart the dhcpd process.

Listing 11: /etc/dhcpd.conf

ddns-update-style interim;

ignore client-updates;

option option-128 code 128 = string;

option option-129 code 129 = text;

subnet 192.168.1.0 netmask 255.255.255.0 {

option routers 192.168.1.2;

option subnet-mask 255.255.255.0;

option nis-domain "fiji.baz.edu";

option domain-name "fiji.baz.edu";

option domain-name-servers 123.123.123.123;

option time-offset -18000; # Eastern

option ntp-servers 192.168.1.2;

default-lease-time 21600;

max-lease-time 43200;

allow booting;

allow bootp;

next-server 192.168.1.2;

filename "/pxelinux.0";

host node01 {

hardware ethernet 00:11:22:33:44:a0;

fixed-address 192.168.1.101;

}

host node01-bmc {

hardware ethernet 00:11:22:33:44:8d;

fixed-address 192.168.1.201;

}

host node02 {

hardware ethernet 00:11:22:33:44:83;

fixed-address 192.168.1.102;

}

host node02-bmc {

hardware ethernet 00:11:22:33:44:61;

fixed-address 192.168.1.202;

}The node will then boot off the network with the configured IP address. Booting each of the compute nodes one at a time and pasting each address into the /etc/dhcpd.conf file is a bit of a tedious process, which surely could be automated with more advanced management techniques.

Set the disabled option in /etc/xinetd.d/tftp to no (as shown in Listing 12).

Listing 12: /etc/xinetd.d/tftp

service tftp

service tftp

{

socket_type = dgram

protocol = udp

wait = yes

user = root

server = /usr/sbin/in.tftpd

server_args = -s -v /tftpboot

disable = no

per_source = 11

cps = 100 2

flags = IPv4

}Now load the newly configured services:

# service xinetd restart # chkconfig dhcpd on # service dhcpd restart

With a complete PXE file structure in place, I can move on to the major step of preparing Kickstart configuration files. Kickstart configuration files are flat text files that specify all of the options the installer can accept, along with all of the pre- and post-install scripting you care to add. Listing 13 is a very simple Kickstart config file that only boots into the rescue mode of the installer. This file is good to have ready to debug the inevitable failed node.

Listing 13: /kickstart/rescue.cfg

lang en_US keyboard us mouse none nfs --server=192.168.1.2 --dir=/repo/CentOS/5/iso/x86_64 network --bootproto=dhcp

A full compute node Kickstart file /kickstart/ks.cfg is a available for download at the Linux Magazine website [10]. I recommend pasting in this beginner kickstart configuration file and continually test installing the first compute node. You can edit the Kickstart file again and again, making small adjustments with each step, then completely re-install the first node until the installation is perfected. After the first node is correct, move on to power up each additional node in the cluster.

Don’t forget to generate and replace the dummy password stubs with your own password. Encrypted passwords in MD5 format start with $1$:

$ grub‑md5‑crypt Password: Retype password: $1$d6oPa/$iUemCR50qSyvGSVTX9NrX1

With the admin node installed and the Kickstart configuration set up and ready for action, the next step is to install and configure all compute nodes that will execute the jobs submitted to the cluster.

Setting Up the Compute Nodes

Once the whole infrastructure is in place, you are ready to start installing compute nodes. The exact steps will vary depending on your configuration, but the key is to be methodical and minimize the time spent at each node.

Please note that the head/login node, having hostname fiji, can be installed manually or automated in the same manner as the compute nodes, with the additional step of configuring eth1 as a public-facing network interface. A sample compute node installation procedure might include the following steps:

- Cable the KVM switch and hit the power button.

- F12 for PXE boot.

- Ctrl+S to get the system Ethernet MAC address.

- Ctrl+E to get the BMC Ethernet MAC address

- Set LAN parameters: IPv4 IP Address Source: DHCP

- Set LAN user configuration: enter and confirm password

- Hit Esc; save changes and exit.

- Add MAC addresses to fijistor‘s /etc/dhcpd.conf, then enter service dhcpd restart.

- Hit Enter on Fiji Cluster Node Install PXE menu.

- Wait for a package dependency check.

- Go to the next node.

You might want to use a management tool like IPMI to control the DRAC/BMC, remotely power-cycling locked nodes:

# yum ‑y install OpenIPMI‑tools # ipmitool ‑H 192.168.1.2XX ‑U root ‑P PASSWORD ‑I lanplus chassis status # ipmitool ‑H 192.168.1.2XX ‑U root ‑P PASSWORD ‑I lanplus chassis power cycle

With a cluster of Linux servers, it is desirable to run the same commands across all the compute nodes. You can run these common commands with the use of a simple for loop script, or you can use a cluster-enabled shell like Dancer’s Shell [11] or ClusterSSH [12]. To execute the commands, create a file called /etc/machines.list comprising a list of all nodes, one per line, then use the simple/root/bin/ssh_loop.sh script (Listing 14).

Listing 14: /root/bin/ssh_loop.sh

#!/bin/sh for I in `grep -v "\#" /etc/machines.list`; do echo -n "$I " ; ssh $I "$@" done

You must keep user authentication in sync across all nodes. Although you have better ways to do this, for the sake of brevity, I will do a simple sync of auth files from the head/login node to all other nodes with the script /root/bin/update_etc.sh (Listing 15).

Listing 15: /root/bin/update_etc.sh

rsync -a fiji:/etc/passwd /root/etc/

rsync -a fiji:/etc/shadow /root/etc/

rsync -a fiji:/etc/group /root/etc/

rsync -a /root/etc/passwd /root/etc/shadow /root/etc/group /etc/

rsync -a /root/etc/passwd /root/etc/shadow /root/etc/group /kickstart/fiji/etc/

for NODE in `cat /etc/machines.list | grep -v "^#"`; do

rsync -a /kickstart/fiji/etc $NODE:/

doneGetting on the Grid

The final step is to enable a job queue to manage the workload. I will use Son of Grid Engine [13] from the BioTeam courtesy binaries [14]. Son of Grid Engine is a community-based project that evolved from Sun’s Grid Engine project. When Oracle bought Sun and phased out the free version of Grid Engine, the Son of Grid Engine developers stepped in to maintain a free version. For more information on working with Son of Grid Engine, see the how-to documents at the project website [15].

Remember that the user home directories and /usr/global/ are exported to the entire cluster via NFS. Users will have SSH keys without passwords for remote shell commands from the head node to all compute nodes.

To get started with Son of Grid Engine, follow the steps in Listing 16.

Listing 16: Setting up Son of Grid Engine

# mkdir /usr/global/sge-6.2u5_PLUS_3-26-11 # ln -s /usr/global/sge-6.2u5_PLUS_3-26-11 /usr/global/sge # export SGE_ROOT=/usr/global/sge # cd /usr/global/sge # adduser -u 186 sgeadmin # mkdir src ; cd src # wget http://bioteam.net/dag/gridengine-courtesy-binaries/sge-6.2u5_PLUS_3-26-11-common.tar.gz # wget http://bioteam.net/dag/gridengine-courtesy-binaries/sge-6.2u5_PLUS_3-26-11-bin-lx26-amd64.tar.gz # cd /usr/global/sge # tar xzvf src/sge-6.2u5_PLUS_3-26-11-common.tar.gz # tar xzvf src/sge-6.2u5_PLUS_3-26-11-bin-lx26-amd64.tar.gz # chown -R sgeadmin.sgeadmin .

You can interactively install the master host on the admin node, with all settings per default except the following: install as sgeadmin user, set network ports with environment, sge_qmaster port 6444,sge_execd port 6445, cell name default, cluster name p6444, say no to pkgadd and yes to verify permissions, say no to JMX MBean server, select classic spooling method, GID range 20000-20100, enter each hostname, and set no shadow host.

# cd /usr/global/sge/ # ./install_qmaster

Then we set the user environment:

. /usr/global/sge/default/common/settings.sh

The SGE logs are found at: /usr/global/sge/default/spool/qmaster/messages, /tmp/qmaster_messages (during qmaster startup), /usr/global/sge/default/spool/HOSTNAME/messages, and/tmp/execd_messages (during execd startup).

You need to install SGE on all compute nodes. This step is most easily done with an automated install config. Listing 17 shows an example of an SGE cluster.conf file, stripped of comments.

Listing 17: Example SGE cluster.conf

SGE_ROOT="/usr/global/sge" SGE_QMASTER_PORT="6444" SGE_EXECD_PORT="6445" SGE_ENABLE_SMF="false" SGE_ENABLE_ST="true" SGE_CLUSTER_NAME="p6444" SGE_JMX_PORT="6666" SGE_JMX_SSL="false" SGE_JMX_SSL_CLIENT="false" SGE_JMX_SSL_KEYSTORE="/tmp" SGE_JMX_SSL_KEYSTORE_PW="/tmp" SGE_JVM_LIB_PATH="/tmp" SGE_ADDITIONAL_JVM_ARGS="-Xmx256m" CELL_NAME="default" ADMIN_USER="sgeadmin" QMASTER_SPOOL_DIR="/usr/global/sge/default/spool/qmaster" EXECD_SPOOL_DIR="/usr/global/sge/default/spool" GID_RANGE="20000-20100" SPOOLING_METHOD="classic" DB_SPOOLING_SERVER="" DB_SPOOLING_DIR="spooldb" PAR_EXECD_INST_COUNT="20" ADMIN_HOST_LIST="fijistor" SUBMIT_HOST_LIST="fiji" EXEC_HOST_LIST="node01 node02 node03 node04" EXECD_SPOOL_DIR_LOCAL="" HOSTNAME_RESOLVING="true" SHELL_NAME="ssh" COPY_COMMAND="scp" DEFAULT_DOMAIN="none" ADMIN_MAIL="none" ADD_TO_RC="true" SET_FILE_PERMS="true" RESCHEDULE_JOBS="wait" SCHEDD_CONF="1" SHADOW_HOST="" EXEC_HOST_LIST_RM="" REMOVE_RC="true" WINDOWS_SUPPORT="false" WIN_ADMIN_NAME="Administrator" WIN_DOMAIN_ACCESS="false" CSP_RECREATE="true" CSP_COPY_CERTS="false" CSP_COUNTRY_CODE="DE" CSP_STATE="Germany" CSP_LOCATION="Building" CSP_ORGA="Organisation" CSP_ORGA_UNIT="Organisation_unit" CSP_MAIL_ADDRESS="name@yourdomain.com"

To set up an automated installation:

# cd /usr/global/sge/ # cp util/install_modules/inst_template.conf cluster.conf # vim cluster.conf # ./inst_sge ‑x ‑auto /usr/global/sge/cluster.conf automated install log sge_root/spool/install_HOSTNAME_TIMESTAMP.log

After a successful install run, verify that Son of Grid Engine is working and check the configuration with:

$ ps ax | grep sge $ qconf ‑sconf

A good first step is to submit a simple job test to the cluster as a normal user:

# adduser testuser # update_etc.sh # su ‑ testuser $ qsub /usr/global/sge/examples/jobs/simple.sh $ qstat

Conclusion

Now that you have a functional super computer cluster with job and resource management, what next? Should you install Blender [16] and start rendering a feature-length movie? Will you install MPI and explore parallel computing [17]? Maybe you can run the Linpack benchmark and compete in the Top 500 [18]? Well, that all depends on your chosen discipline, but I imagine that your group of researchers will have enough work to keep the system busy. nnn

Info

[1] Red Hat Enterprise Linux documentation:

http://docs.redhat.com/docs/en-US/Red_Hat_Enterprise_Linux/index.html

[2] CentOS-5 documentation:

http://www.centos.org/docs/5/

[3] RHEL install guide:

http://docs.redhat.com/docs/en-US/Red_Hat_Enterprise_Linux/5/html/Installation_Guide/index.html

[4] Kickstart installations:

http://docs.redhat.com/docs/en-US/Red_Hat_Enterprise_Linux/5/html/Installation_Guide/ch-kickstart2.html

[5] CentOS ISO mirrors:

http://isoredirect.centos.org/centos/5/isos/x86_64/

[6] Preboot execution environment:

http://en.wikipedia.org/wiki/Preboot_Execution_Environment

[7] Intelligent platform management interface:

http://en.wikipedia.org/wiki/Intelligent_Platform_Management_Interface

[8] CentOS North American mirrors:

http://www.centos.org/modules/tinycontent/index.php?id=30

[9] Fedora EPEL public active mirrors:

http://mirrors.fedoraproject.org/publiclist/EPEL/

[10] Code for this article (choose issue 132):

http://www.linux-magazine.com/Resources/Article-Code

[11] Dancer’s shell:

http://www.netfort.gr.jp/~dancer/software/dsh.html.en

[12] ClusterSSH:

http://clusterssh.sourceforge.net/

[13] Son of Grid Engine:

https://arc.liv.ac.uk/trac/SGE

[14] BioTeam grid engine courtesy binaries:

http://bioteam.net/dag/gridengine-courtesy-binaries/

[15] Grid engine how-to:

http://arc.liv.ac.uk/SGE/howto/howto.html

[16] Blender:

http://www.blender.org/

[17] Introduction to parallel computing:

https://computing.llnl.gov/tutorials/parallel_comp/

[18] The Linpack benchmark:

http://www.top500.org/project/linpack

The Author

Gavin W. Burris is a Senior Systems Programmer with the University of Pennsylvania School of Arts and Sciences. In his position with the Information Security and Unix Systems group, he works with HPC clusters, server room management, research computing desktops, web content management, and large data storage systems. He maintains a blog at: http://idolinux.blogspot.com