The LattePanda Mu low-power HPC compute module puts an HPC system on your desktop.

Desktop Blades (of Glory)

A small club of people, of which I'm the president and a member, is interested in personal clusters you can have in your office, and even on your desktop. I've made several home clusters from various components, ranging from the small Cluster HAT, to a small Raspberry Pi cluster, to an eight-node x86 stack. In my previous home I had three 20A circuits installed for clusters. I really like great performance with low power usage, so I can get four nodes on a normal 15 or 20A circuit.

These low-power systems come in all shapes and sizes, but most of them are born from the single-board computer (SBC) market, a number of which originated in makerspaces, where people started making their own systems. The applications of these systems span the breadth of uses for computers in general. If you also add the ability to make add-on cards, the varieties are endless. In general, these cards are not PCI Express (PCIe) cards, but HAT (hardware attached on top) boards that plug into the general-purpose input/output (GPIO) pins (more on that later). You can then stack these boards to make something like a sandwich of compute and sensor capabilities. Some of these boards have even been to space, although they aren't designed for that purpose.

The SBC world has even started developing systems for HPC (or close to it) with SBCs such as a Raspberry Pi Compute Module 4, blades, chassis, or a combination of devices that allow you to create very dense systems. To begin, I'll quickly review the system that arguably started the SBC movement, the Raspberry Pi.

Raspberry Pi

Most people reading this article will know about the Raspberry Pi (Pi) that was introduced in 2012. Hobbyists, including me, got excited by the prospect of having a very low power, inexpensive SBC that runs Linux on their desk. The Pi had a CPU, a reasonable amount of RAM (sort of), an Ethernet port, several USB ports, SD card storage, and, perhaps most importantly, a set of GPIO pins that could be controlled with software and programmed for almost anything you could create.

Very quickly, people created small boards or HATs that attached to the pins for cameras and various sensors (think edge computing) or anything they could dream and code. People were putting them into self-contained weather stations, sensor packages for atmospheric balloons, and a wide variety of robotics. It really was a huge revolution for the makerspace. You can even imagine people making HPC-oriented HATs.

If you are in HPC, you can easily imagine people building clusters of Pis. I don't know who built the first one (it could have been for Kubernetes or web serving), but Pi clusters for HPC quickly appeared. The annual supercomputing conferences had lots of them on the show floor. At first, many of them were used for learning about clusters, the components you needed to build them, the networking you needed, the concepts for managing and monitoring them, how to program them, how to debug and identify bottlenecks, and so on; however, some were being used for very serious computing.

The first Pis were only 32-bit, which limited their applicability to current HPC that uses 64-bit (multiprecision was not yet "a thing"). However, people built them and used them regardless. An individual user could afford to buy a few of them along with switches, flash cards, and USB cables for power and control. Yours truly even built one.

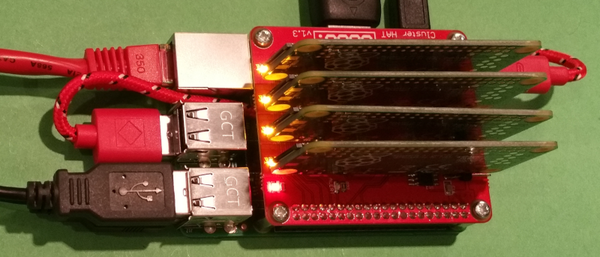

Commercial companies started selling Pi clusters (e.g., PicoClusters). The Raspberry Pi Zero boards also gained some interest with the Cluster HAT, which comprises a base Raspberry Pi with a HAT that uses the GPIO pins and has four slots to plug in Pi Zero boards (Figure 1). I built and tested a Raspberry Pi Cluster HAT in 2017. It was a fun build, and I got some HPC code to run on it, but ultimately, the Pi Zeros just did not have enough performance to make it interesting to me.

Starting with the Raspberry Pi 3, the CPU was now 64 bits, and all of the peripheral chips accommodated this development, which immediately brought the Pi to the level of “serious” HPC, although I still thought 32-bit was good enough for many applications. What was lacking was the operating system (OS).

By far the most popular OS for the Pi, even today, is the Raspberry Pi OS. You have several other options, but because of the Pi's limited memory and modest computational power, the Raspberry Pi OS was the OS of choice. However, it wasn’t for another six years that Raspberry Pi OS became 64-bit. Concerns about backward compatibility kept everyone waiting for what was, in my opinion, an inexcusably long time, and the delay held back a huge number of projects. Now with a full 64-bit system, the Pi became, in some eyes, a real HPC system. Moreover, systems could now address more than 4GB of memory, a limit that many felt was a serious impediment to using the Pi.
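
The 4GB ceiling follows from pointer width: a flat 32-bit address can name 2^32 distinct bytes. The following is a minimal Python sketch of that arithmetic only. The function name is hypothetical, and the calculation ignores real-world details such as memory-mapped I/O regions or address extensions (e.g., LPAE on Arm) that change what a system or a single process can actually use.

```python
GIB = 2 ** 30  # bytes in one gibibyte


def addressable_bytes(pointer_bits: int) -> int:
    """Distinct byte addresses a flat pointer of this width can name."""
    return 2 ** pointer_bits


# Compare the flat address space of 32-bit and 64-bit pointers.
for bits in (32, 64):
    gib = addressable_bytes(bits) // GIB
    print(f"{bits}-bit pointers: {gib:,} GiB of flat address space")
```

For 32 bits, the result is exactly 4GiB, which is where the familiar limit comes from.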

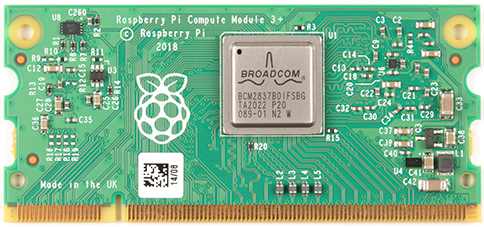

Raspberry Pi Compute Module

In 2014, Raspberry Pi announced the Compute Module, a device that is like (and looks like) a 200-pin DDR2 SO-DIMM (Figure 2). Several versions of the Compute Module came out before the Raspberry Pi Compute Module 4 (CM4, launched in 2020). The CM4 is more like a small credit card and includes a quad-core processor, memory, Ethernet controller, flash storage, USB controllers, and other components. The CM4 units don't have connectors for peripherals, so you “mount” the Compute Module onto a carrier board that has all of the connectors – kind of like a bare motherboard with just connectors.

The initial carrier board was called the Compute Module 4 IO Board and had connectors for HDMI, Gigabit Ethernet, USB, microSD, PCI Express Gen 2 one-lane (x1), a 40-pin set of GPIO pins, a camera connector, and so on. All of these connectors were linked to the chips on the Compute Module 4. The CM4 measured 55x40x4.7mm (i.e., about 2.2x1.6x0.19 inches), which is smaller than a credit card.

In essence, the CM4 had become the ultimate compute blade, with all the chips that would be needed to create a full system on a very small board. Rather than shrinking the entire board and removing things HPC really doesn't need (e.g., sound connectors), the designers retained all the components and connected the module to a carrier board, which could then be anything you wanted (including blades). That approach was ingenious.

These carrier boards could be designed by anyone, including other companies, and they have been. The famous Jeff Geerling has created a page that lists production carrier boards, where you will find a huge number of boards for almost any type of system you want to create.

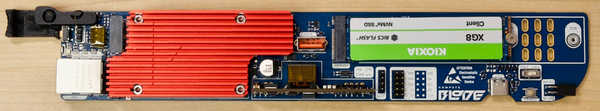

Raspberry Pi Compute Blade

The carrier board for the CM4 can be created for any purpose, including HPC, as you can see with the Compute Blade (Figure 3). Ivan Kuleshov started work on CM4-based carrier boards designed to fit in standard rack structures (1U), and he created a Kickstarter campaign that raised more than EUR1 million (>$840K). The blades themselves are 255x42.5x17.5mm (length x height x thickness, or roughly 10x1.67x0.7 inches).

Each blade can accommodate a Raspberry Pi CM4 or CM4 Lite and can hold an M.2 NVMe card up to type 22110. Power is provided by USB-C or Power over Ethernet. The blade has a Gigabit Ethernet port on the back, an HDMI port at the front, and a USB-C port on the bottom of the blade near the back (on the right in Figure 3). A USB-A port is present in case you want to plug in to debug the blade.

The blade by itself is really cool, but Kuleshov has gone a step further and designed a 1U server chassis (19 inches), the BladeRunner, that can accommodate up to 20 Compute Blades. For good measure, he has also addressed cooling with a four-node chassis that has integrated fans, along with a two-node chassis design you can 3D print.

The Compute Blade is a really, really interesting combination of features that are perfect for HPC. If you have Kuleshov's talent, the CM4 is a great approach to creating custom systems without having to create pricey custom motherboards. By starting with a simple carrier board with various connectors, you can keep costs very low, as shown in Kuleshov's wonderful Compute Blade, which has the basics and a few extras that an HPC system might need.

What does this capability do for HPC? It creates a high-density, low-cost HPC cluster that you can put on your desk or in your home rack (come on, admit you have one of those); plus, it looks like an HPC system! To me, this is putting cool-looking HPC cluster technology in the hands of individual users, so they can learn and use real computing.

A Change of Focus

During the pandemic, Raspberry Pis, particularly CM4s, were in great demand. Prices went up, demand went up, availability went down, and people got frustrated. One of the reasons demand was so high was that commercial companies were using the CM4 and other Raspberry Pi boards in their products. The Raspberry Pi Foundation decided that they could not let down the companies because people would be out of work during a very difficult time, so they chose to let down home users, makers, and others. It was a difficult choice, and its effects continue today.

At this point I want to express my opinion. I understand the choice they made, but I quickly grew tired of looking for CM4s anywhere on the planet, even after the lockdown was over. You could maybe find some on eBay, but the prices were ridiculous. Therefore, I started purchasing small used x86 systems from eBay for HPC testing. The price of these systems was well below that of a Pi, the performance was much better, and the availability was millions of times better. Of course, they weren't as compact as the Pi and they used more power, but for my usage, they worked very well. In the end, I gave up on the Raspberry Pi, but I still watch for interesting developments, such as the Compute Blade I mentioned previously.

But all is not lost.

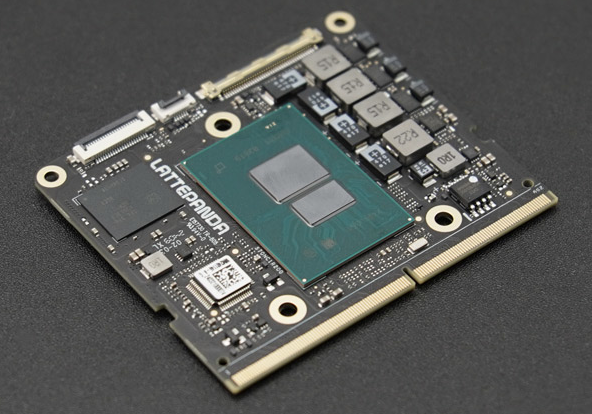

x86 SBC Compute Module

What has reinvigorated my interest in low-power HPC designs, especially blades, is an x86-based Compute Module-like board. The LattePanda Mu is a compute module with an Intel N100, 8GB of low-power double data rate (LPDDR) memory, up to 64GB of eMMC flash storage, up to nine PCIe 3.0 lanes, up to four USB 3.2 ports, eight USB 2.0 ports, 64 GPIO pins (different from Raspberry Pi), and 2.5 or 10 Gigabit Ethernet (GbE). The card is 60x69.6mm (about 2.4x2.74 inches), which makes it a little taller than a credit card and a bit over 1U (Figure 4).
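
To make the "a bit over 1U" comparison concrete, here is a minimal Python sketch that converts the board dimensions quoted in this article to inches and checks whether each board's shorter edge fits within one rack unit (1U is 1.75 inches, or 44.45mm). The names `BOARDS_MM` and `fits_upright_in_1u` are hypothetical, and the check assumes a bare board standing on edge with no allowance for chassis walls, connectors, or a carrier board.

```python
MM_PER_INCH = 25.4
RACK_UNIT_MM = 1.75 * MM_PER_INCH  # 1U is 1.75 inches, i.e. 44.45 mm

# Two edge lengths per board in mm, as quoted in the article (thickness ignored).
BOARDS_MM = {
    "Raspberry Pi CM4": (55.0, 40.0),
    "Compute Blade": (255.0, 42.5),
    "LattePanda Mu": (60.0, 69.6),
}


def mm_to_inches(mm: float) -> float:
    """Convert millimeters to inches."""
    return mm / MM_PER_INCH


def fits_upright_in_1u(dims_mm) -> bool:
    """True if the shorter edge is no taller than 1U (no clearance margin)."""
    return min(dims_mm) <= RACK_UNIT_MM


for name, dims in BOARDS_MM.items():
    inches = " x ".join(f"{mm_to_inches(d):.2f}" for d in dims)
    verdict = "fits" if fits_upright_in_1u(dims) else "does not fit"
    print(f"{name}: {inches} in, {verdict} upright in 1U")
```

Under these assumptions, the CM4 and the Compute Blade stay within 1U, whereas the Mu's shorter 60mm edge does not, which is why a Mu-based blade would need a taller chassis or a different orientation.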

The Intel N100 peaks around 22-23W under load, although DFRobot says up to 35W. The Raspberry Pi 5 under load peaks around 12W, so the power draw is definitely different. (Note that these numbers are just the best estimates I could find. Your mileage may vary.) The N100 is estimated to have higher performance than a Raspberry Pi 5, which is already two to three times faster than the Pi 4. Who in HPC doesn't like more performance?
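
For a rough feel of what these power numbers mean for a desktop cluster, the sketch below estimates how many nodes a single household circuit could feed at peak draw. Everything beyond the wattages quoted above is an assumption made for illustration: 120V mains, a budget of 80 percent of the breaker rating for continuous load, and no allowance for power supply losses, storage, switches, or cooling. The function names are hypothetical.

```python
import math


def usable_watts(breaker_amps: float, volts: float = 120.0,
                 derate: float = 0.8) -> float:
    """Continuous power budget for one circuit (assumed voltage and derating)."""
    return breaker_amps * volts * derate


def max_nodes(node_watts: float, breaker_amps: float) -> int:
    """Whole nodes that fit in the circuit's budget at the given peak draw."""
    return math.floor(usable_watts(breaker_amps) / node_watts)


# Peak draw per node in watts, taken from the estimates quoted in the text.
PEAK_WATTS = {
    "Raspberry Pi 5": 12.0,
    "Intel N100 (observed estimate)": 23.0,
    "Intel N100 (DFRobot figure)": 35.0,
}

for name, watts in PEAK_WATTS.items():
    print(f"{name}: {max_nodes(watts, 15)} nodes on 15A, "
          f"{max_nodes(watts, 20)} nodes on 20A")
```

Even with the most pessimistic N100 figure, the per-node draw is small enough that the circuit is unlikely to be the limiting factor for a desktop-sized cluster. Treat the results as upper bounds rather than a wiring plan.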

Right now, two carrier boards can accommodate the LattePanda Mu N100 compute module. One is a relatively small "Lite" carrier board (Figure 5) that has a Gigabit Ethernet port, USB 3.0 ports, an HDMI port, an M.2 M-key slot for SSDs, an M.2 E-key slot for WiFi, and – drum roll please – a PCIe 3.0 x4 expansion slot! The board is the standard 3.5 inches (width) used for embedded motherboards.

The full-function evaluation carrier board is a larger ITX-size board that has two 2.5GbE, two HDMI 2.0, a USB-C, four USB 3.0, and two USB 2.0 ports; a SIM card slot; standard PCIe 3.0 x1 and PCIe 3.0 x4 slots; and M.2 E-key and M.2 B-key slots (Figure 6).

The LattePanda Mu full-feature carrier board has a great deal of potential. You could design your own carrier board if you have the skills (sadly, I do not), and although you cannot make a 1U blade with it, you perhaps could mount several of them on a single 2U blade to gain back some density. Having two 2.5GbE ports per Mu would be wonderful for HPC. I hope Kuleshov is reading this. By the way, LattePanda provides KiCad templates so you can design your own board.

The LattePanda Mu N100 x86-based compute module hits the sweet spot for me. The Intel N100 is a powerful CPU, the module offers great network connectivity and lots of USB ports, and the pricing is pretty good. If you want basically the same thing with more cores, an Intel i3-N300/N305 CPU doubles the core count.