« Previous 1 2 3 Next »

Desktop Supercomputers: Past, Present, and Future

Present

Since the Cray CX-1, companies have offered two- and four-socket deskside workstations that can be used to run supercomputing applications. However, the early “four-way” (four-socket) systems tended to be expensive, and their cache snoop traffic could occupy a significant portion of interprocessor communication. Today's four-ways have gotten better, but they have lots of DIMM memory and storage drive capability and are not really designed for HPC. Rather, they were designed for something else like databases or graphics processing.

NVIDIA DGX Station A100

Among large workstations, Nvidia has recently upped the ante on by offering a deskside solution with very high performance GPUs that focuses on artificial intelligence (AI) and HPC. AI applications have had a major effect on HPC workloads because they require massive amounts of compute to train the models. Additionally, a large percentage of AI workloads, particularly for deep learning (DL) training, is accomplished with interactive Jupyter notebooks.

As the demand for AI applications grows, more and more people are developing and training models. Learning how to do this is a perfect workload for a workstation that the user controls and that can easily run interactive notebooks. These systems are also powerful enough for training what are now considered small to medium-sized models.

Just a few years ago, Nvidia introduced an AI workstation that used their powerful V100 GPUs. The DGX Station had a 20-core Intel processor, 256GB of memory, three 1.92TB NVMe solid-state drives (SSDs), and four very powerful Tesla V100 GPUs, each with 16 or 32GB. It used a maximum of 1,500W (20A circuit), and you could plug it into a standard 120V outlet. It had an additional graphics card, so you could plug in a monitor. This system sold for $69,000.

The DGX Station has now been replaced with the DGX Station A100 that includes the Nvidia A100 Tensor Core GPU. It now uses an AMD 7742 CPU with 64 cores, along with 512GB of memory, 7.68TB of cache (NVMe drives), a video GPU for connecting to a monitor, and four A100 GPUs, each with 40 or 80GB of memory. This deskside system also uses up to 1,500W and standard power outlets (120V). The system price is $199,000.

These deskside systems are a kind of pinnacle of desktop/deskside supercomputers that use standard power outlets while providing a huge amount of processing capability for individuals; however, they are pushing the power envelope of standard 120V home and office power. Although powerful, they are also out of the price range of the typical HPC user.

The DGX Station A100 represents an important development because right now, several AI-specific processors are being developed, including Google’s tensor processing unit (TPU) and field-programmable gate arrays (FPGAs). If any of these processors gain a foothold in the market, putting them in workstations is an inexpensive way to get them to developers.

SBC Clusters

At the other end of the spectrum from the DGX Station A100 are single-board computers (SBCs), which have become a phenomenon in computing courtesy of the Raspberry Pi. SBCs contain a processor (CPU), memory, graphics processing (usually integrated with the CPU), network ports, and possibly some other I/O capability. Inexpensive SBCs are less than $35 and most of the time cost less than $100; some SBCs that cost only $10 even include a quad-core processor, an Ethernet port, and WiFi. Moreover, the general purpose I/O (GPIO) pins that many of these systems offer allow the SBCs to be expanded with additional features, such as NVMe drives, RAID cards, and a myriad of sensors.

Lately, SBCs have started to give low-end desktops a run for their money, but an important feature is always low power, much lower than any other desktop. For example, the Raspberry Pi 4 Model B under extreme load only uses about 6.4W.

The CPU types for these SBCs are mostly ARM architecture, but some use x86 or other processors. Many are 64-bit, and some have a reasonable amount of memory (e.g., 8GB or more). Others have very high performance GPUs for the low-power envelope, such as the Nvidia Jetson Nano. As you can imagine, given the low cost and low power, people have built clusters from SBCs. Like many other people, I built a cluster of Raspberry Pi 2 modules (Figure 4).

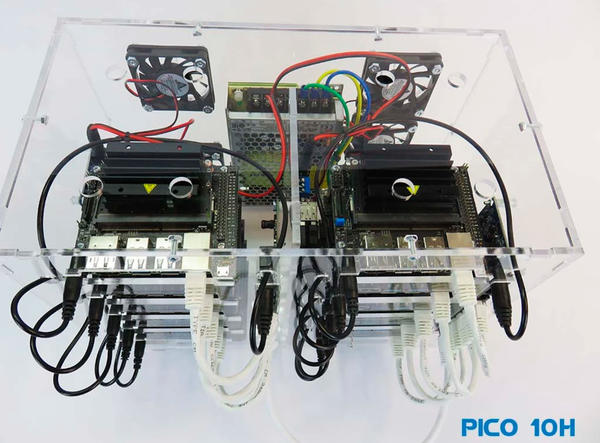

PicoCluster LLC has taken home-built SBC clusters, effectively Beowulf clusters, to the next level: They build cases along with ancillary hardware from a variety of SBCs. Their Starter Kit includes a custom case for the SBCs, power, cooling fans, and networking. However, the kit does not include the SBCs and their SD cards. An Advanced Kit builds on the Starter Kit but adds the SBCs. Finally, an Assembled Cube is fully equipped with everything – SBCs, SD cards – and is burned in for four hours. This kind of system is perfect for a desktop supercomputer (Figure 5). Under load, the Jetson Nano uses a maximum of about 10W, so the Pico 10H uses a bit over 100W (including the switch and other small components).

Figure 5: PicoCluster 10H cluster of 10 Jetson Nano SBCs in an assembled cube (by permission ofPicoCluster LLC for this article).

Figure 5: PicoCluster 10H cluster of 10 Jetson Nano SBCs in an assembled cube (by permission ofPicoCluster LLC for this article).

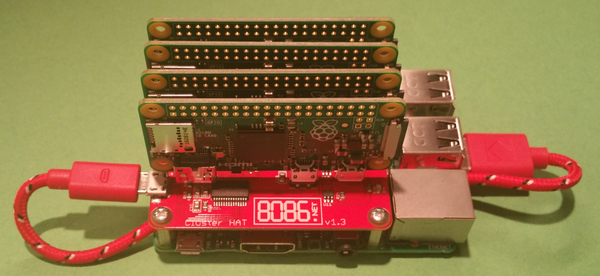

An add-on board (Cluster HAT; hardware attached on top) to a standard Raspberry Pi allows you to add up to four Raspberry Pi Zero boards to create a very small cluster. A while ago, I wrote an article about the first-generation Cluster HAT (Figure 6). Newer versions of the Cluster HAT allow you to scale to a larger number of Cluster HATs per Raspberry Pi.

Limulus

Doug Eadline, a luminary in the Beowulf community, has applied the Beowulf principles to desktop supercomputing with a system he calls Limulus. Limulus takes consumer-grade standard motherboards, processors, memory, drives, and cases and creates a deskside Beowulf. To do this, he has created mounting brackets with specific 3D-printed components to create blades with micro-ATX motherboards that are mounted in a standard case (Figure 7).

The Limulus systems also include built-in internal networking ranging from 1 to 25GigE and can include various storage solutions, including the stateless compute nodes (i.e., no local storage). Warewulf, a computer cluster implementation toolkit, boots the cluster, and Eadline has coupled it with the Slurm scheduler so that if no jobs are in the queue, the compute nodes are turned off. Conversely, with jobs in the queue, compute nodes that are turned off will be powered on and used to run jobs.

Limulus comes in different flavors, and an HPC-focused configuration targets individual users, although a larger version is appropriate to support small workgroups. A Hadoop/Spark configuration comes with more drives, primarily spinning disks; a deep learning configuration (edge computing) can include up to two GPUs.

Limulus systems range in size and capability. You can differentiate them by the computer case. The thin case (Figure 7) usually accommodates four motherboards, whereas the large case can accommodate up to eight motherboards (Figure 8). The Limulus motherboards are single-socket systems typically running lower power processors. You can pick from Intel or AMD processors, depending upon your needs.

Limulus is very power efficient and plugs into a standard wall socket (120V). Depending on the configuration, the system can have as little as a few hundred watts or up to 1,500W for systems with eight nodes and two GPUs.

A true personal workstation with four motherboards, 24 cores, 64GB of memory, and 48TB of main storage costs just under $5,000. The largest deskside system has 64 cores, 1TB of memory, 64TB of SSD storage, and 140TB of hard drive space and goes for less than $20,000. Compare these two prices to the prices of the three previous desktop supercomputers discussed.

Future

Pontificating on the future is always a difficult task. Most of the time, I would be willing to bet that the prediction will be wrong, and I’d probably win many of those bets (over half). Nonetheless, I’ll not let that prevent me from having fun.

Supercomputing Desktop Demand Will Continue to Grow

One safe prediction is that the need for desktop supercomputers will continue to grow. Regardless of the technology, supercomputing systems grow and end up becoming centralized, shared resources. The argument for this is that it theoretically costs less when everything is consolidated (economy of scale). Plus, it does allow the handful of applications that truly need the massive scale of a TOP500 system to access it.

However, centralized systems take away compute resources from the user’s hands, and the user must operate asynchronously, submitting their job to a centralized resource manager and waiting for the results. All the jobs are then queued according to some sort of priority. The queue ordering is subject to competing forces that are not under the control of the user. A simple, single-node job that takes less than five minutes to run could get pushed down the queue to a point that it takes days to run, and interactive applications would be impossible to run.

While some applications definitely need very large systems, surprisingly, the number of applications that need one to four nodes dominate the workload. Having the ability to run an application when needed is valuable for users, and this is what desktop supercomputers do. They allow a large group of users to run jobs when they need to (interactive), rather than being stuck in the queue. Depending on the size of user jobs, desktop systems can also serve as the only resource for specific users.

For those applications and users that need extremely large systems, desktop supercomputers are also advantageous because they take over the small node count jobs, moving them off the centralized system. The sum of the resources freed up for the larger jobs could be quite extensive (as always, it depends).

Cloud-Based Personal Clusters

The cloud offers possibilities for desktop supercomputers, albeit in someone else’s data center. The process of configuring a cluster in the cloud is basically the same for all major cloud providers. The first step is to create a head node image. It need only happen once:

- Start up a head node Linux virtual machine (VM), probably with a desktop such as Xfce. If needed, this VM can include a GPU for building graphics applications. If so, the GPU tools can be installed into the VM.

- The head node should then have cluster tools installed. A good example is OpenFlight HPC, which has environment modules, many HPC tools, a job scheduler, and a way to build and install new applications. You could even install Open OnDemand for a GUI experience.

- The head node can also host data and share it with the compute nodes over something like NFS. If the cloud provider has a managed NFS service, then this need not be included with the head node. However, if more performance than NFS is needed, a parallel filesystem such as Lustre or BeeGFS can be configured outside of the head node.

- At this point, you can save the head node image for later use.

A user then can start up a head node when needed with this saved image and a simple command from the command line or called from a script. The user then logs in to the head node and submits a job to the scheduler. Tools such as OpenFlightHPC will start up compute nodes as they are needed. For example, if the job needs four compute nodes and none are running, then four compute nodes are started. Once the compute nodes are ready to run applications, the scheduler runs the job(s). If the compute nodes are idle for a period of time, the head node can spin down the compute node instances, saving money.

Many of the large cloud providers have the concept of preemptible instances (VMs) that can be taken from you, interrupting your use of that node. This results when another customer is willing to pay more for the instance. In the world of AWS, these are called Spot Instances. Although the use of Spot Instances for compute nodes can save a great deal of money compared with on-demand instances, the most expensive instance type, the user must be willing to have their applications interrupted and possibly restarted.

After the job is finished, if no jobs are waiting to be run, the compute nodes are shutdown. If the head node is not needed either, it too can be shut down, but before doing so, any new data should be saved to the cloud or copied back to the user’s desktop system, or the head node image can be updated.

Of course, you will recognize the need for additional infrastructure and tools to make this process easy and to ensure that the user has limits to how much money can be spent on cloud resources.

« Previous 1 2 3 Next »