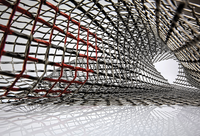

Lead Image by Ricardo Gomez Angel on Unsplash.com

Service mesh for Kubernetes microservices

Mesh Design

The ease with which microservices can be deployed, upgraded, and scaled makes them a compelling way to build an application, but as a microservice application grows in complexity and scale, so does the demand on the underlying network that is its lifeblood. A service mesh offers a straightforward way to implement a number of common and useful microservice patterns with no development effort, as well as providing advanced routing and telemetry functions for your microservice application straight out of the box.

Service Mesh Concepts

Unlike a monolithic application, in which the network is only a matter of concern at a limited number of ingress and egress points, a microservices application depends on IP-based networking for all its internal communications, and the more the application scales, the less "ideal" the behavior of that internal network becomes. As interactions between services become more complex, more voluminous, and more difficult to visualize, it's no longer safe for microservice app developers to assume that those interactions will happen transparently and reliably. It can become difficult to see whether a bug in the application is caused by one of the microservices (and if so, which one) or by the configuration of the network.

One way to mitigate non-ideal behavior in the microservice network is to build services that make allowances for it. Developers can carefully calculate how long each request timeout should be, allowing for dependencies on other requests that cascade down the call chain. Another approach is for a service's developers to publish a client library that interacts with the service's API in a resilient manner, for use by the developers of dependent services. Both approaches have serious drawbacks. The first causes a lot of duplicated effort: each team spends resources trying to solve essentially the same problem. The second approach runs

...
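To make the two hand-rolled approaches described above concrete, here is a minimal Python sketch of what each team would otherwise have to write and maintain. The function names (`required_timeout`, `call_with_retries`), the default values, and the choice of `ConnectionError` as the "transient failure" signal are all assumptions made for illustration; they do not come from the article or from any particular library.

```python
import time


def required_timeout(own_work_s, downstream_timeouts_s, attempts=1):
    """Hypothetical helper: the timeout a caller needs so that its sequential
    downstream calls, each tried up to `attempts` times, can all run to their
    own timeouts before the caller itself gives up."""
    return own_work_s + attempts * sum(downstream_timeouts_s)


def call_with_retries(operation, attempts=3, base_delay_s=0.1, deadline_s=2.0,
                      sleep=time.sleep, clock=time.monotonic):
    """Hypothetical resilient-client helper: call `operation` until it
    succeeds, the attempts run out, or the next backoff would pass the deadline."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    start = clock()
    last_error = None
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError as exc:  # assumed to mean "transient failure"
            last_error = exc
        delay = base_delay_s * (2 ** attempt)  # exponential backoff
        out_of_attempts = attempt == attempts - 1
        out_of_time = clock() - start + delay > deadline_s
        if out_of_attempts or out_of_time:
            break
        sleep(delay)
    raise TimeoutError(f"gave up after {attempt + 1} attempt(s)") from last_error
```

A dependent service would wrap each outbound request in `call_with_retries` and use something like `required_timeout` to size its own timeout from those of the services it calls. Every team that depends on the network has to get logic of this kind right, which illustrates the duplicated effort noted above.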