Secure and seamless server access

Lightning

Deploying Tunnel on a Host

Once created, a tunnel can be deployed on a host. To do so, you install a lightweight daemon (cloudflared) and run it as a service. If you pass the tunnel's token to the startup command, the daemon fetches its configuration from Cloudflare.

Listing 4 first fetches the package. Because the example runs on a Debian server, it fetches the .deb package; other systems need their respective packages. The package is installed, and cloudflared service install then sets up the service with the configuration for a specific tunnel. Finally, the listing enables and starts the service, so it provides a gateway onto the server, and installs NGINX to test connectivity.

Listing 4

Install and Run Application

curl -L --output cloudflared.deb https://github.com/cloudflare/cloudflared/releases/latest/download/cloudflared-linux-amd64.deb

sudo dpkg -i cloudflared.deb

sudo cloudflared service install "${CF_TUNNEL_TOKEN}"

sudo systemctl enable cloudflared

sudo systemctl start cloudflared

# install nginx and test connectivity

sudo apt-get update

sudo apt-get install -y nginx

sudo systemctl start nginx

If everything is configured as expected, you can open ${var.hostname}.${var.cf_tunnel_primary_domain} in a browser and see the NGINX welcome page (Figure 3).
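The same check can be scripted from any machine outside the server's network. The following is a minimal sketch, not part of the original listing: the host name gitlab and the domain vizards.it are placeholder defaults, and the function names are made up for illustration. It assumes no access policy has been attached to the endpoint yet; once one is in place, the endpoint typically answers with a redirect to a login page instead of HTTP 200.

```shell
#!/bin/sh
# Placeholder values (assumptions); substitute the host name and domain of your tunnel.
TUNNEL_HOST="${TUNNEL_HOST:-gitlab}"
TUNNEL_DOMAIN="${TUNNEL_DOMAIN:-vizards.it}"

# Build the public URL that is served through the tunnel.
tunnel_url() {
  printf 'https://%s.%s/\n' "$1" "$2"
}

# Return 0 if the endpoint answers with HTTP 200, non-zero otherwise.
check_tunnel() {
  status=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$1")
  [ "$status" = "200" ]
}
```

After sourcing the file, a call such as `check_tunnel "$(tunnel_url "$TUNNEL_HOST" "$TUNNEL_DOMAIN")" && echo "tunnel is up"` reports whether the NGINX welcome page is reachable through the tunnel.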

Limiting Access

Cloudflare Tunnel only removes the need to open inbound connectivity to the hosts; it just provides a unique endpoint maintained by Cloudflare that, when reached, tunnels to them. The next step should be to restrict access to that endpoint. Cloudflare provides the Application Auth tool for this purpose, in which you define rules that allow or deny access to a given endpoint on the basis of the user's IP address, email address, or location (Listing 5 and Figure 4).

Listing 5

Cloudflare App with Terraform

resource "cloudflare_access_application" "gitlab" {

zone_id = data.cloudflare_zone.tunnel.id

name = "Internal GitLab"

domain = "${var.hostname}.${var.cf_tunnel_primary_domain}"

type = "self_hosted"

session_duration = "24h"

}

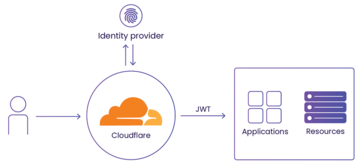

Figure 4: Cloudflare can work as an identity provider to your internal apps, resources, and third-party services. It can also rely on third-party identity providers, such as Okta, G Suite, Facebook, Azure AD, and others (JWT = JSON web token).


Cloudflare Access is based on the concept of policies. Each policy comprises:

- An Action: Allow, Block, or Bypass. Allow and Block are self-explanatory; Bypass policies are checked first, and if one is satisfied, further evaluation is skipped.

- Rule types: Include, Exclude, Require. The rule type specifies how the conditions are combined: Include rules are ORed, Require rules are ANDed, and Exclude rules act as a NOT. Every policy must have at least one Include rule.

- Selectors. The condition and a value (e.g., a list of enabled IPs or email addresses).

Only users who positively match specified policies will have access to configured applications.
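The way rule types combine inside one policy can be modeled locally. The sketch below is only an illustration of the logic described above, not Cloudflare's implementation: the function name and its count-based arguments are hypothetical, and it treats Exclude as a NOT (a user who matches any Exclude rule does not match the policy).

```shell
#!/bin/sh
# Hypothetical local model of how the rule types of one policy combine.
# Arguments (counts of rules):
#   $1  Include rules that matched
#   $2  Require rules defined in the policy
#   $3  Require rules that matched
#   $4  Exclude rules that matched
policy_matches() {
  [ "$1" -ge 1 ] || return 1      # Include: at least one rule must match (OR)
  [ "$3" -eq "$2" ] || return 1   # Require: every rule must match (AND)
  [ "$4" -eq 0 ] || return 1      # Exclude: no rule may match (NOT)
  return 0
}
```

For example, `policy_matches 1 0 0 0` succeeds (one Include rule matched, nothing else defined), whereas `policy_matches 2 2 1 0` fails because one of the two Require rules did not match.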

For example, the policy in Table 1 only allows access to users with email addresses from the vizards.it domain (Listing 6).

Table 1

Sample Policy

| Action | Rule Type | Selector | Value |
|---|---|---|---|
| Allow | Include | Emails ending in | @vizards.it |

Listing 6

Access by Domain

resource "cloudflare_access_policy" "test_policy" {

application_id = cloudflare_access_application.gitlab.id

zone_id = data.cloudflare_zone.tunnel.id

name = "Employees access"

precedence = "1"

decision = "allow"

include {

email_domain = ["vizards.it"]

}

}

For one application, you can specify several policies, which are evaluated in a specific order: first Bypass rules, then Allow and Block in the order specified.
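The evaluation order can be illustrated with a small local model. This is a sketch under assumptions, not Cloudflare's code: the function name and the input format (one policy per line as "action matched", in configured order) are invented for the example, and a user who matches no policy is treated as denied, in line with the statement that only positively matching users get access.

```shell
#!/bin/sh
# Hypothetical model of policy ordering.
# Reads policies from stdin, one per line, as "<action> <matched>",
# where <action> is allow, block, or bypass and <matched> is yes or no.
evaluate_policies() {
  policies=$(cat)
  # Bypass policies are checked first, regardless of their position.
  if printf '%s\n' "$policies" | grep -q '^bypass yes$'; then
    echo bypass
    return 0
  fi
  # Otherwise the first matching Allow or Block policy decides.
  decision=$(printf '%s\n' "$policies" | grep -E '^(allow|block) yes$' | head -n 1 | cut -d' ' -f1)
  # No matching policy at all: access is denied.
  echo "${decision:-deny}"
}
```

Feeding it `printf 'block yes\nallow yes\n' | evaluate_policies` prints block, because the Block policy comes first in the configured order.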

A policy can also be assigned to a software-as-a-service (SaaS) application hosted by a third party, such as Salesforce; in that case, Cloudflare has to be set as the single sign-on (SSO) source. More often, however, it will be used to protect internal applications, either on a fully qualified hostname (e.g., gitlab.vizards.it), on a wildcard name (e.g., *.vizards.it), or on a path level (e.g., museum.vizards.it/internal).

Access for Non-Web Apps

As mentioned previously, HTTP(S) services do not require any software on the client side. Non-web applications are more complicated to protect, because clients have to use cloudflared to reach them.

For administrators, I'll focus on SSH and kubectl. SSH is quite easy because it only requires a proxy connection through the cloudflared client. First, install cloudflared on the client and add the following entry to ~/.ssh/config:

Host example-host-ssh.vizards.it

  ProxyCommand cloudflared access ssh --hostname %h

Now it is possible to log in and perform the usual:

ssh <USER>@example-host-ssh.vizards.it

If authentication is required, a web browser opens, and the user is asked to log in to Cloudflare.
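If several administrators need the same SSH client setup, the config entry can be added by a small helper. The function below is a sketch with a made-up name; it appends a Host block with the cloudflared ProxyCommand to an SSH config file (by default ~/.ssh/config) unless an identical Host line is already present.

```shell
#!/bin/sh
# Hypothetical helper: add a cloudflared ProxyCommand entry for one host.
#   $1  hostname protected by Cloudflare
#   $2  SSH config file (optional, defaults to ~/.ssh/config)
add_ssh_proxy_entry() {
  host=$1
  config=${2:-$HOME/.ssh/config}
  # Do nothing if an identical Host line already exists.
  if grep -qxF "Host $host" "$config" 2>/dev/null; then
    return 0
  fi
  # %%h prints a literal %h, which ssh expands to the target hostname.
  printf 'Host %s\n  ProxyCommand cloudflared access ssh --hostname %%h\n' "$host" >> "$config"
}
```

Calling `add_ssh_proxy_entry example-host-ssh.vizards.it` twice leaves a single entry in the file, so the helper is safe to rerun.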

Things get more complicated when you want to use kubectl [5] to manage a Kubernetes cluster behind Tunnel and Application Auth, because kubectl cannot launch cloudflared itself the way SSH does with ProxyCommand.

First, you have to establish a connection:

cloudflared access tcp --hostname k8s.vizards.it --url 127.0.0.1:7777

Then, before each kubectl call, you have to prepend HTTPS_PROXY, for example:

HTTPS_PROXY=socks5://127.0.0.1:7777 kubectl

Creating an alias,

alias kcf="HTTPS_PROXY=socks5://127.0.0.1:7777 kubectl"

simplifies the process.
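Aliases are normally expanded only in interactive shells, so scripts may be better served by a shell function. The following sketch wraps kubectl in the same way; the variable name CF_K8S_PROXY is an assumption introduced here, and its default matches the address used in the cloudflared access tcp command.

```shell
#!/bin/sh
# Address of the local cloudflared listener; override it if you chose another port.
CF_K8S_PROXY="${CF_K8S_PROXY:-socks5://127.0.0.1:7777}"

# Run kubectl with HTTPS_PROXY set for this one invocation only.
kcf() {
  HTTPS_PROXY="$CF_K8S_PROXY" kubectl "$@"
}
```

With the connection established, `kcf get nodes` then behaves like the alias, and all arguments are passed through to kubectl unchanged.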
