Lead Image © Ringizzz, 123RF.com

Service discovery, monitoring, load balancing, and more with Consul

Cloud Nanny

The cloud revolution has brought some form of cloud presence to most companies. With this transition, infrastructure has become dynamic, and automation is a necessity. HashiCorp's powerful tool Consul, described on its website as "… a distributed service mesh to connect, secure, and configure services across any runtime platform and public or private cloud" [1], performs a number of tasks for a busy network. Consul offers three main features to manage application and software configurations: service discovery, health checks, and a key-value (KV) store.
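To make the three features more concrete, the following minimal Python sketch shows how each one maps onto Consul's HTTP API. It only builds request bodies and URLs and decodes a KV response; it does not contact an agent. The agent address, the service name, the `/health` path, and all helper names are assumptions chosen for illustration.

```python
import base64
import json

# Assumption: a local Consul agent listening on the default HTTP port.
CONSUL_ADDR = "http://127.0.0.1:8500"


def service_registration(name, port, health_path="/health", interval="10s"):
    """Build a JSON body for PUT /v1/agent/service/register.

    The body registers a service (service discovery) together with an
    HTTP health check that the agent runs at the given interval.
    """
    return {
        "Name": name,
        "Port": port,
        "Check": {
            "HTTP": f"http://127.0.0.1:{port}{health_path}",
            "Interval": interval,
        },
    }


def healthy_instances_url(name):
    """URL that lists only the instances whose health checks are passing."""
    return f"{CONSUL_ADDR}/v1/health/service/{name}?passing"


def decode_kv(entries):
    """Decode the result of GET /v1/kv/<key>; values arrive base64-encoded."""
    return {
        e["Key"]: base64.b64decode(e["Value"] or "").decode("utf-8")
        for e in entries
    }


if __name__ == "__main__":
    # Hypothetical service "web" on port 8080.
    print(json.dumps(service_registration("web", 8080), indent=2))
    print(healthy_instances_url("web"))
```

In practice, the registration body would be sent to the agent with an HTTP `PUT`, and the KV decoder would be applied to the JSON list returned by a `GET` on `/v1/kv/<key>`.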

Consul Architecture

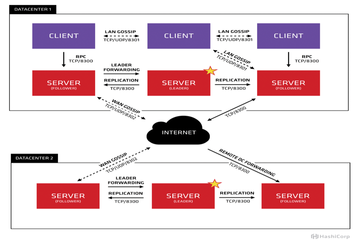

As shown in Figure 1, each data center has its own set of servers and clients. All nodes within a data center are part of the LAN gossip pool, which has several advantages. For example, you do not need to configure clients with server addresses, because server detection is automatic, and failure detection is distributed among all nodes, so the burden does not fall on the servers alone.

Figure 1: Consul's high-level architecture within and between data centers (image source: HashiCorp [2]).
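Because every node takes part in the LAN gossip pool, any agent can report the current membership, including which members are servers. The sketch below assumes member records shaped like the output of the agent's `/v1/agent/members` endpoint, where a `role` tag of `consul` marks a server and a `Status` of `1` marks a live member. The sample records and the helper name are hypothetical.

```python
ALIVE = 1  # gossip-layer status code for a live member


def split_members(members):
    """Split live gossip-pool members into (servers, clients) by role tag."""
    servers, clients = [], []
    for member in members:
        if member.get("Status") != ALIVE:
            continue  # skip members that have left or been marked failed
        role = member.get("Tags", {}).get("role")
        if role == "consul":
            servers.append(member["Name"])
        else:
            clients.append(member["Name"])
    return servers, clients


# Hypothetical sample data; a real list would come from a local agent.
SAMPLE_MEMBERS = [
    {"Name": "server-1", "Addr": "10.0.0.1", "Status": 1,
     "Tags": {"role": "consul", "dc": "dc1"}},
    {"Name": "client-1", "Addr": "10.0.0.11", "Status": 1,
     "Tags": {"role": "node", "dc": "dc1"}},
    {"Name": "client-2", "Addr": "10.0.0.12", "Status": 4,
     "Tags": {"role": "node", "dc": "dc1"}},
]

if __name__ == "__main__":
    servers, clients = split_members(SAMPLE_MEMBERS)
    print("servers:", servers)
    print("clients:", clients)
```

Here `client-2` is filtered out because its status marks it as failed, which reflects the result of the distributed failure detection rather than a check performed by the servers.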


The gossip pool is also used as a messaging layer between nodes ...