
Five Kubernetes alternatives

Something Else

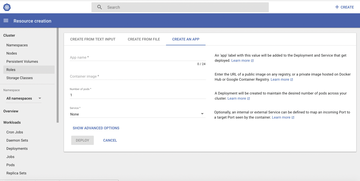

Kubernetes [1] is king of the hill in the orchestration universe. Invented by Google and now under the auspices of the Linux Foundation, Kubernetes has not only blossomed quickly but has also led to a kind of monoculture not typically seen in the open source world. Reinforcing this impression, many observers see Kubernetes (Figure 1) as a legitimate successor to OpenStack, although the two solutions address different target groups.

Against this background, it is not surprising that Kubernetes' prominence sometimes obscures other solutions that might be much better suited to your own use case. In this article, I aim to remedy this situation by introducing five Kubernetes alternatives (Docker Swarm, Nomad, Kontena, Rancher, and Azk) and discussing their most important characteristics.

Docker Swarm

Docker Swarm [2] (Figure 2) has to be at the top of any list of Kubernetes alternatives. Swarm can be seen as a panic reaction from Docker once the company realized that more and more users were combining Docker with Kubernetes.
