
Clustering with the Nutanix Community Edition

The Right Track

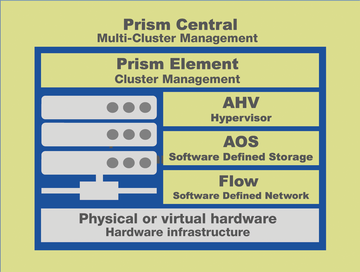

To be clear, the Community Edition of Nutanix was developed for testing purposes only; it is not a replacement for the production version, and it does not offer the full feature set of the commercial version. For example, the Community Edition only supports two hypervisors: Acropolis (AHV) by Nutanix and ESXi by VMware. The basic setup of a private enterprise cloud from Nutanix built on the Community Edition includes the hypervisor, the Controller Virtual Machine (CVM) and associated cloud management system, Prism Element for single-cluster management, and Prism Central for higher-level multicluster management (Figure 1).

With the Community Edition, you can set up a one-, three-, or four-node cluster. All other cluster sizes are reserved exclusively for the commercial version. The individual components of the Community Edition, such as AHV; the AOS cloud operating system, which is based on the individual CVMs in the cluster; and the cloud management system, cannot be mixed with components of the production version. Therefore, you cannot manage a Community Edition cluster with Prism Central from the production version. Conversely, you cannot use Prism
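The limits described so far (two supported hypervisors, clusters of one, three, or four nodes, and no mixing with production components) can be captured in a small planning check. The following Python sketch is purely illustrative: `ClusterPlan` and `check_plan` are hypothetical names invented for this example, not part of any Nutanix tool or API, and the values encode only what the text above states.

```python
from dataclasses import dataclass

# Limits as stated in the article for the Community Edition.
SUPPORTED_HYPERVISORS = {"AHV", "ESXi"}
SUPPORTED_NODE_COUNTS = {1, 3, 4}


@dataclass(frozen=True)
class ClusterPlan:
    """Hypothetical description of a planned cluster (not a Nutanix object)."""
    hypervisor: str
    node_count: int
    # Set to False if any production-version component is part of the plan.
    community_edition_only: bool = True


def check_plan(plan: ClusterPlan) -> list[str]:
    """Return a list of problems; an empty list means the plan fits the limits."""
    problems = []
    if plan.hypervisor not in SUPPORTED_HYPERVISORS:
        problems.append(f"unsupported hypervisor: {plan.hypervisor}")
    if plan.node_count not in SUPPORTED_NODE_COUNTS:
        problems.append(f"unsupported node count: {plan.node_count}")
    if not plan.community_edition_only:
        problems.append("Community Edition and production components cannot be mixed")
    return problems


if __name__ == "__main__":
    print(check_plan(ClusterPlan("AHV", 3)))
    print(check_plan(ClusterPlan("Hyper-V", 2)))
```

A three-node AHV plan returns an empty list, whereas a two-node plan on an unsupported hypervisor reports both problems. Such a check is only a sanity test for a lab plan before installation; it does not query a real cluster.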
