The state of OpenStack in 2022

Hangover

The Big Six

Most of the changes are found in the six core platform components that together form an OpenStack instance. For example, the storage manager Cinder can now overwrite an existing virtual volume with the contents of a hard drive image (reimaging). Until now, this was only possible when a virtual machine (VM) was created. Now, however, an existing volume can be reset to a fresh image without deleting and re-creating the entire VM or disk.
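To give an idea of what reimaging looks like at the API level, the following is a minimal sketch that only builds the request and sends nothing. It assumes the `os-reimage` volume action and Block Storage API microversion 3.68, which is where this feature appears to have landed in Yoga. The endpoint, the IDs, and the helper name `build_reimage_request` are hypothetical placeholders, and the authentication token header is deliberately left out.

```python
import json


def build_reimage_request(endpoint, project_id, volume_id, image_id,
                          reimage_reserved=False):
    """Return (url, headers, body) for a volume reimage call; nothing is sent."""
    # Volume actions are POSTed to the volume's /action resource.
    url = f"{endpoint.rstrip('/')}/v3/{project_id}/volumes/{volume_id}/action"
    headers = {
        "Content-Type": "application/json",
        # Assumption: reimaging needs Block Storage microversion 3.68 or later.
        "OpenStack-API-Version": "volume 3.68",
    }
    body = json.dumps({
        "os-reimage": {
            "image_id": image_id,
            # Assumption: True would allow reimaging a volume in "reserved" state.
            "reimage_reserved": reimage_reserved,
        }
    })
    return url, headers, body


if __name__ == "__main__":
    # All values below are made-up placeholders for illustration.
    url, headers, body = build_reimage_request(
        "https://cloud.example.com:8776", "demo-project", "vol-1234", "img-5678")
    print(url)
    print(body)
```

In a real deployment, the same request would normally be issued through the OpenStack client or SDK rather than assembled by hand; the sketch is only meant to show that reimaging is an action on an existing volume, not a new create call.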

Moreover, Cinder now has support for new hardware back ends. It can address LightOS storage over NVMe by TCP and Netstor devices from Toyou over Fibre Channel. NEC V series storage is also supported over both Fibre Channel and iSCSI.

The Glance image service has always been one of the components with a more relaxed pace of development; consequently, it offers little that is new. Quota information about the stored images can now be displayed, a few new API operations have been added (e.g., for editing metadata tags), and more details are available about virtual images residing on RADOS block devices (RBDs) in Ceph.

Horizon, Nova, Keystone

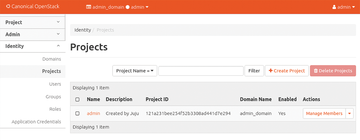

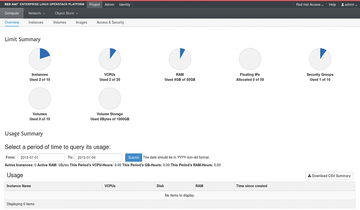

The Horizon graphical front end has been massively overhauled by the OpenStack developers over the past two years and now handles translation into other languages and theming far better than before. Canonical and Red Hat placed great emphasis on theming the dashboard: After all, Canonical wants its Ubuntu OpenStack to impress in orange (Figure 3), whereas Red Hat OpenStack accents its interface with red (Figure 4). Functionally, the Horizon dashboard can now create and delete quality of service (QoS) rules.

Figure 3: The developers have successively improved the theming options for the OpenStack dashboard. Horizon on Ubuntu appears today in the typical Canonical orange. © Ubuntu

Figure 4: The Red Hat OpenStack Platform (RHOP) uses the typical Red Hat look found in other products. © Red Hat

The most important innovation in Yoga for the Nova virtualizer is the ability to pass smart network interface cards (SmartNICs) directly through to a VM by way of network back ends. Traditionally, a software-defined network (SDN) in the OpenStack context works by creating a virtual switch on the host that takes care of packet delivery. However, this approach has the major disadvantage that packet processing consumes a large share of the host CPU. For this reason, practically all major network manufacturers now offer NICs whose chips can handle Open vSwitch packet processing themselves. Control over network packet delivery is thus offloaded from the host to the NIC, where it is handled more efficiently and no longer burdens the host CPU.

Previously, Nova could use NICs by selecting the appropriate driver for the emulator in use. Now, however, Nova has a setting on the back end for the virtual network card, so the feature has been standardized and can theoretically be used by any network back-end driver in Nova.
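From the user's point of view, an instance receives such an offloaded interface through the Neutron port it is attached to. The following is a minimal sketch that only builds the body of a port-create request. It assumes that SmartNIC ports are requested with the `remote-managed` VNIC type, as the Yoga integration between Nova and Neutron appears to do; the helper name `build_smartnic_port`, the network ID, and the port name are hypothetical.

```python
import json

# Assumption: Neutron marks ports backed by an off-path SmartNIC with this
# VNIC type; ordinary virtual ports use "normal" instead.
REMOTE_MANAGED = "remote-managed"


def build_smartnic_port(network_id, name):
    """Return the body of a Neutron port-create request for a SmartNIC port."""
    return {
        "port": {
            "network_id": network_id,
            "name": name,
            "binding:vnic_type": REMOTE_MANAGED,
        }
    }


if __name__ == "__main__":
    # Placeholder values; a real call would POST this body to the Neutron
    # ports endpoint and then hand the resulting port ID to Nova at boot time.
    print(json.dumps(build_smartnic_port("net-1234", "smartnic-port"), indent=2))
```

The point of the sketch is that the choice is made per port on the network side, which fits the article's description of a standardized setting rather than a driver-specific one.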

You can see how established OpenStack considers itself to be by looking for Keystone in the OpenStack release highlights. The component is responsible for user and project management and is therefore absolutely essential; however, the developers consider nothing in Yoga relevant enough to warrant a special changelog entry. The developers themselves would probably not call Keystone feature complete, but the intent is to protect admins against too many changes in Keystone in the foreseeable future.

Kubernetes on the Horizon

Kubernetes on OpenStack (actually Kubernetes on OpenStack on Kubernetes) is a fairly common deployment scenario for the free cloud environment today. Accordingly, it is important to the developers to optimize OpenStack integration with Kubernetes to the best possible extent. More recently, a number of components have been added to OpenStack for this purpose, including Kuryr.

Like OpenStack, Kubernetes is known to come with its own SDN implementation. It used to be quite common to run the virtualized Kubernetes network on top of the OpenStack virtual network – much to the chagrin of many admins, because if anything went wrong in this layer cake, it was difficult or impossible to even guess the source of the problem.

Kuryr remedies this conundrum by creating virtual network ports in OpenStack so that Kubernetes can use them natively and dispense with its own SDN. This solution implicitly enables several features that would otherwise go unused, such as the previously described outsourcing of tasks to NICs, including offloading support, or the seamless integration of as-a-service services from OpenStack in Kubernetes environments.

Octavia, the load balancer as a service, plays a prominent role in the OpenStack universe today but can only be meaningfully used for Kubernetes if you have Kuryr. In this case, meaningful means without stacking several balancer instances on top of each other.
