TCP Fast Open

In mid-2011, Sivasankar Radhakrishnan, Arvind Jain, Yuchung Cheng, and Jerry Chu of Google’s “Make the web faster” team presented a draft for reducing avoidable latency. Their technique, called TCP Fast Open (TFO), streamlines the process of opening a TCP session.

The idea itself is not new: RFC1379 (1992) and RFC1644 (1994) specified the conceptually similar Transactional TCP (T/TCP). Unfortunately, an analysis published in September 1996 revealed serious security issues with T/TCP, and the technique never caught on widely. Building on this experience, the Google team refined the approach for TFO, most notably by adding a server-issued cookie. Linux kernel 3.6 implements the necessary client-side infrastructure, and 3.7 will add support for TFO on the server side, so the era of faster TCP connections might be just around the corner.

The Time Thieves

The lion’s share of today’s Internet traffic consists of relatively short-lived data streams. A website, for example, briefly opens multiple simultaneous TCP sessions to transfer many small elements (e.g., HTML code, small graphics, and JavaScript) that together amount to relatively little data. Because repeatedly establishing TCP connections is expensive, browsers often try to keep connections open after first contacting a site so they can reuse them (HTTP persistent connections). On high-traffic servers, however, administrators configure very short timeouts for idle connections to avoid tying up resources unnecessarily. As a result, the time required to open and re-open TCP connections remains a source of performance problems.

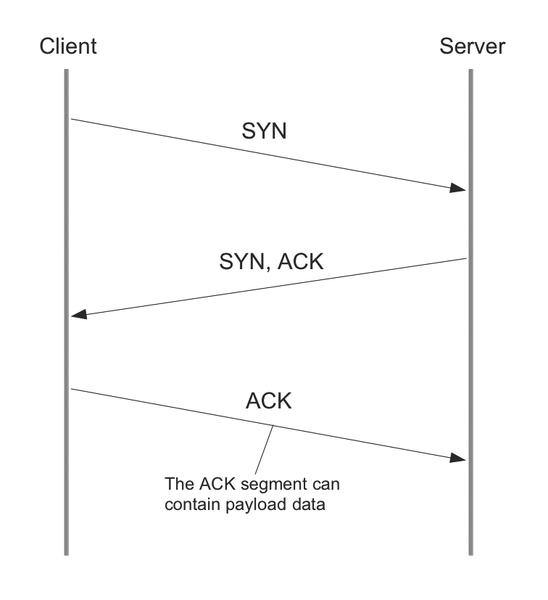

For readers unfamiliar with the elaborate ritual for starting TCP connections, here is a quick recap of the process, which is known as the three-way handshake (Figure 1).

First, the client sends a connection request (SYN, synchronize) to the target server. If the server accepts the request, it responds with a SYN ACK, which confirms the client’s connection request and carries the server’s own SYN. In this phase, the endpoints exchange parameters such as the maximum segment size (MSS, the maximum number of payload bytes allowed in a single TCP segment on the connection) and the initial sequence number (ISN, the starting point from which each side numbers its bytes, so that the receiver can put data in the correct order and detect duplicates). No payload data is exchanged yet.
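To make the sequence-number bookkeeping concrete, the following Python sketch models the three segments as plain data. It is a toy model, not a TCP implementation: the `Segment` class and `three_way_handshake` function are hypothetical names, the ISNs are simply drawn at random, and 1460 bytes is only an illustrative MSS value (typical for Ethernet).

```python
import random
from dataclasses import dataclass
from typing import List, Optional

SEQ_MOD = 2 ** 32  # TCP sequence numbers wrap around at 32 bits


@dataclass
class Segment:
    sender: str
    flags: str
    seq: int
    ack: Optional[int] = None
    mss: Optional[int] = None  # announced only in SYN segments
    payload: bytes = b""


def three_way_handshake(client_mss: int = 1460, server_mss: int = 1460,
                        rng: Optional[random.Random] = None) -> List[Segment]:
    rng = rng or random.Random()
    isn_c = rng.getrandbits(32)  # client's initial sequence number
    isn_s = rng.getrandbits(32)  # server's initial sequence number
    # 1. SYN: the client announces its ISN and MSS.
    syn = Segment("client", "SYN", isn_c, mss=client_mss)
    # 2. SYN ACK: the server acknowledges the client's SYN (a SYN consumes
    #    one sequence number) and announces its own ISN and MSS.
    syn_ack = Segment("server", "SYN ACK", isn_s,
                      ack=(isn_c + 1) % SEQ_MOD, mss=server_mss)
    # 3. ACK: the client acknowledges the server's SYN; still no payload.
    ack = Segment("client", "ACK", (isn_c + 1) % SEQ_MOD,
                  ack=(isn_s + 1) % SEQ_MOD)
    return [syn, syn_ack, ack]


for seg in three_way_handshake(rng=random.Random(1)):
    print(seg.sender, seg.flags, seg.seq, seg.ack, seg.mss)
```

Each acknowledgment number is the peer’s ISN plus one, which is how both sides confirm that they agree on where the byte count starts.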

In principle, the TCP specifications (RFC793 and RFC1323) allow payload data to be carried in a SYN, but the receiver must not deliver that data to the application before the connection is established. In the third step, the client sends an ACK to complete the connection; only now is the payload, such as the request for the page the browser wants to open, transferred to the server. In other words, before the first payload reaches the server in step three, packets have already traveled from the client to the server and back. This costs one full round-trip time (RTT), often several dozen milliseconds, which TFO can avoid.
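The cost of that extra round trip can be expressed as simple arithmetic. This idealized Python sketch ignores transmission time, packet loss, and congestion control and counts only round trips until the first response byte arrives; the function name and the 50 ms/5 ms figures are assumptions for illustration.

```python
def time_to_first_response_ms(rtt_ms: float, server_ms: float, tfo: bool) -> float:
    """Idealized time from the client's SYN until the first response byte arrives."""
    # Legacy TCP: one RTT for SYN / SYN ACK before the request can be sent.
    # TFO with a valid cookie: the request travels in the SYN, so this RTT vanishes.
    handshake_rtts = 0 if tfo else 1
    # One more RTT for the request to reach the server and the response to return,
    # plus the server's processing time.
    return (handshake_rtts + 1) * rtt_ms + server_ms


# Hypothetical numbers: 50 ms RTT, 5 ms server processing time.
for tfo in (False, True):
    print("TFO" if tfo else "legacy", time_to_first_response_ms(50, 5, tfo), "ms")
```

With these assumed values, the first response arrives after 105 ms without TFO and after 55 ms with it. The saving is always exactly one RTT, so it matters most on long-distance links.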

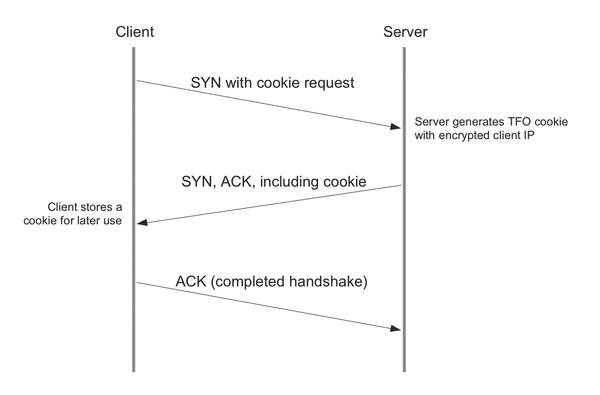

TFO speeds up session startup by introducing a TFO cookie. When a TFO-enabled client first connects to a server, its SYN carries a request for a cookie; the server generates the cookie and returns it in the second step (SYN ACK) for use in subsequent connections (see Figure 2). Apart from the cookie, no additional data is exchanged, and the connection otherwise proceeds as a normal three-way handshake. Among other things, the cookie contains the client’s IP address, encrypted with a secret key known only to the server.
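The article only states that the cookie is built from the client’s IP address using a server-side secret; the actual algorithm is implementation specific. As a stand-in, this Python sketch uses HMAC-SHA256 from the standard library, which likewise binds the cookie to both the address and the key. The function names and the 8-byte cookie length are assumptions.

```python
import hashlib
import hmac
import ipaddress
import os

COOKIE_LEN = 8  # assumed cookie length in bytes


def make_cookie(server_key: bytes, client_ip: str) -> bytes:
    """Derive a cookie that only the holder of server_key can compute for client_ip."""
    addr = ipaddress.ip_address(client_ip).packed  # 4 bytes (IPv4) or 16 bytes (IPv6)
    return hmac.new(server_key, addr, hashlib.sha256).digest()[:COOKIE_LEN]


def cookie_is_valid(server_key: bytes, client_ip: str, cookie: bytes) -> bool:
    """Recompute the cookie for the SYN's source address and compare in constant time."""
    return hmac.compare_digest(make_cookie(server_key, client_ip), cookie)


server_key = os.urandom(16)  # secret that never leaves the server
cookie = make_cookie(server_key, "192.0.2.10")
print(cookie_is_valid(server_key, "192.0.2.10", cookie))    # True
print(cookie_is_valid(server_key, "198.51.100.1", cookie))  # False: different client
```

Because the server can recompute the cookie from the source address at any time, it does not need to store per-client state.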

Requesting, generating, and exchanging cookies is handled entirely by the TCP stack, so application developers do not need to worry about it. Note, however, that TCP has no mechanism for detecting duplicate SYNs that carry data, so the same request can reach the application more than once. Google seems to either trust that the requested operation is idempotent (e.g., serving static pages) or rely on the application detecting duplicate requests from the same client.
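If an application cannot rely on idempotence, it has to recognize repeated requests itself. A minimal Python sketch of such a filter, assuming the application protocol supplies some per-request identifier (the `request_id` here is hypothetical, as are the `ReplayFilter` name and the 30-second window):

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class ReplayFilter:
    """Remembers (client, request ID) pairs for `window` seconds."""

    def __init__(self, window: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.window = window
        self.clock = clock
        self._seen: Dict[Tuple[str, Hashable], float] = {}

    def first_time(self, client_ip: str, request_id: Hashable) -> bool:
        """Return True for a new request, False for a duplicate within the window."""
        now = self.clock()
        # Forget entries older than the window.
        self._seen = {k: t for k, t in self._seen.items() if now - t < self.window}
        key = (client_ip, request_id)
        if key in self._seen:
            return False
        self._seen[key] = now
        return True
```

Injecting the clock makes the expiry logic easy to test; a production version would also need a bound on memory.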

Life in the Fast Lane

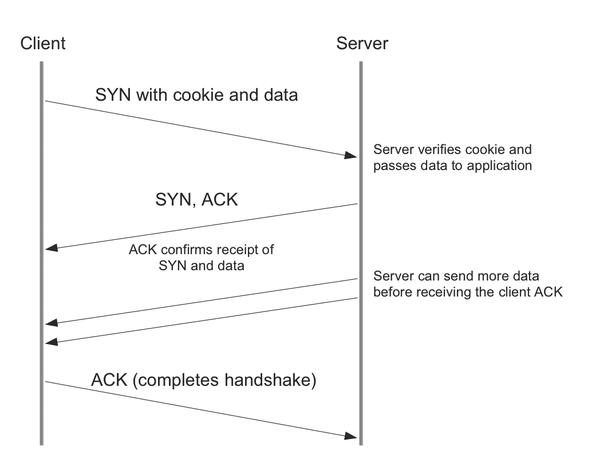

After this first connection, which uses the three steps of legacy TCP, the client holds a TFO cookie that lets it send data during the handshake of future connections instead of waiting for the handshake to complete. In its next SYN, the client sends the TFO cookie, carried in a newly introduced TCP option, together with the initial payload data, such as a URL. The server validates the cookie, checking that the client IP address encoded in it matches the address of the requesting client. If validation succeeds, the server can pass the data to the application immediately and, in step 2, return payload data before it has received the client’s ACK. Compared with the traditional approach, this saves a complete RTT (Figure 3).

Figure 3: TFO connection setup with cookie and payload data transport in the first step of the handshake.
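On Linux, a client uses TFO by replacing the usual `connect()`/`send()` pair with a single `sendto()` call that carries the `MSG_FASTOPEN` flag; the kernel caches the cookie and decides whether the data can travel in the SYN. The Python sketch below assumes Linux (0x20000000 is the Linux value of `MSG_FASTOPEN`, used only if Python does not export the constant) and falls back to an ordinary connection if the flag is rejected. The `tfo_request` helper is a hypothetical name.

```python
import errno
import socket

# Linux value of MSG_FASTOPEN, used only if this Python build lacks the constant.
MSG_FASTOPEN = getattr(socket, "MSG_FASTOPEN", 0x20000000)

# Errors meaning "TFO not available here", after which a plain connect is tried.
_FALLBACK_ERRNOS = {errno.EOPNOTSUPP, errno.EINVAL, errno.ENOTCONN, errno.EPIPE}


def tfo_request(host: str, port: int, payload: bytes, timeout: float = 5.0) -> bytes:
    """Send payload (in the SYN if the kernel has a cookie) and return the first reply chunk."""
    addr = (host, port)
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        # A blocking sendto() with MSG_FASTOPEN performs connect + send in one call.
        # Without a cached cookie, the kernel requests one and sends the data
        # after an ordinary handshake.
        sent = sock.sendto(payload, MSG_FASTOPEN, addr)
    except OSError as exc:
        sock.close()
        if exc.errno not in _FALLBACK_ERRNOS:
            raise
        # Client-side TFO disabled or unsupported: use the legacy handshake.
        sock = socket.create_connection(addr, timeout=timeout)
        sent = 0
    try:
        sock.sendall(payload[sent:])  # no-op if everything already went out
        sock.settimeout(timeout)
        return sock.recv(4096)
    finally:
        sock.close()
```

On the very first call to a server, the kernel has no cookie yet, so the data simply follows the normal handshake; later calls to the same server can carry it in the SYN. Either way the application code is identical.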

If the server cannot validate the cookie, it returns no payload data and discards the data carried in the SYN. Instead, a fallback mechanism kicks in and the traditional three-way handshake is used: The server responds with a SYN ACK that acknowledges only the SYN, and the client retransmits its data once the handshake is complete.
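The server’s choice between the fast path and the fallback fits in a few lines. This Python sketch is a simplified model rather than kernel code: `handle_syn`, `SynReply`, and the convention that `None` means “no TFO option” and `b""` means “cookie request” are assumptions, and the cookie check is passed in as a function. It also assumes that the server hands out a fresh cookie whenever a presented cookie fails validation.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SynReply:
    deliver_now: bytes              # SYN data handed to the application immediately
    data_acked: bool                # does the SYN ACK acknowledge the SYN data?
    cookie: Optional[bytes] = None  # cookie carried in the SYN ACK, if any


def handle_syn(client_ip: str,
               cookie: Optional[bytes],
               data: bytes,
               is_valid: Callable[[str, bytes], bool],
               mint: Callable[[str], bytes]) -> SynReply:
    """Model a TFO server's reaction to an incoming SYN.

    cookie is None -> the SYN carries no TFO option (plain TCP)
    cookie == b""  -> the client requests a cookie
    otherwise      -> the client presents a cookie, possibly with data
    """
    if cookie is None:
        # No TFO at all: ordinary three-way handshake.
        return SynReply(deliver_now=b"", data_acked=False)
    if cookie and is_valid(client_ip, cookie):
        # Fast path: accept the data before the handshake completes.
        return SynReply(deliver_now=data, data_acked=True)
    # Cookie request or invalid cookie: ignore any SYN data, acknowledge only
    # the SYN, and hand out a fresh cookie. The client resends its data after
    # the handshake completes.
    return SynReply(deliver_now=b"", data_acked=False, cookie=mint(client_ip))
```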

Because a client with a valid TFO cookie can make the server consume resources before the handshake has completed, TFO exposes the server to resource exhaustion attacks. As a preventive measure modeled on a well-known defense against SYN flooding, admins can define a limited pool of “floating connections,” that is, connections that carried a valid TFO cookie but have not yet completed the handshake. Once this pool is exhausted, the server falls back to the legacy handshake.
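On Linux, this pool corresponds to the value an application passes with the `TCP_FASTOPEN` socket option on its listening socket: It caps the number of pending TFO connections, and requests beyond the cap fall back to the regular handshake. A Python sketch, assuming Linux (23 is the Linux value of `TCP_FASTOPEN`, used only if Python does not export the constant) and that the server bit of `tcp_fastopen` is set; the `tfo_listener` name and the limit of 16 are illustrative.

```python
import socket
from typing import Tuple

# Linux value of TCP_FASTOPEN, used only if this Python build lacks the constant.
TCP_FASTOPEN = getattr(socket, "TCP_FASTOPEN", 23)


def tfo_listener(host: str = "127.0.0.1", port: int = 0,
                 pending_limit: int = 16,
                 backlog: int = 128) -> Tuple[socket.socket, bool]:
    """Return a listening socket and whether the TCP_FASTOPEN option was accepted.

    True only means the kernel accepted the option; TFO is still inactive
    if the server bit of net.ipv4.tcp_fastopen is not set.
    """
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind((host, port))
    try:
        # Cap on pending TFO connections: SYNs whose data was accepted but whose
        # handshake is not yet complete. Beyond it, the kernel reverts to the
        # regular three-way handshake.
        srv.setsockopt(socket.IPPROTO_TCP, TCP_FASTOPEN, pending_limit)
        tfo_enabled = True
    except OSError:
        # Option unsupported: serve clients with the legacy handshake only.
        tfo_enabled = False
    srv.listen(backlog)
    return srv, tfo_enabled
```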

A practical implementation of the new mechanism has already found its way into the Linux kernel, and fine-tuning it will likely spark discussion on various mailing lists in the near future. For example, the algorithm for generating the TFO cookie is implementation specific, and depending on the server profile, it could be chosen to favor either energy savings or high performance. Much thought also needs to go into the security of the implementation, such as regularly replacing the server-side keys used to encrypt client IP addresses.
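One way to rotate keys without invalidating every cookie at once, assumed here rather than taken from the article, is to keep the previous key valid for one rotation period. The `CookieKeyring` class below sketches this in Python, again using HMAC-SHA256 as a stand-in for the implementation-specific cookie algorithm; all names and the 8-byte cookie length are hypothetical.

```python
import hashlib
import hmac
import ipaddress
import os
from typing import Optional

COOKIE_LEN = 8  # assumed cookie length in bytes


class CookieKeyring:
    """Issues cookies under the current key; accepts the current or previous key."""

    def __init__(self, key: Optional[bytes] = None) -> None:
        self.current = key or os.urandom(16)
        self.previous: Optional[bytes] = None

    @staticmethod
    def _mac(key: bytes, client_ip: str) -> bytes:
        addr = ipaddress.ip_address(client_ip).packed
        return hmac.new(key, addr, hashlib.sha256).digest()[:COOKIE_LEN]

    def issue(self, client_ip: str) -> bytes:
        return self._mac(self.current, client_ip)

    def validate(self, client_ip: str, cookie: bytes) -> bool:
        for key in (self.current, self.previous):
            if key is not None and hmac.compare_digest(self._mac(key, client_ip), cookie):
                return True
        return False

    def rotate(self, new_key: Optional[bytes] = None) -> None:
        # Demote the current key; cookies it issued stay valid for one more period.
        self.previous = self.current
        self.current = new_key or os.urandom(16)
```

A client whose cookie has outlived both keys is not locked out: Its SYN data is ignored, it completes a normal handshake, and it receives a new cookie.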

On the Edge

Adventurous users with a recent kernel can already try out TCP Fast Open by running the following command as root:

echo 1 > /proc/sys/net/ipv4/tcp_fastopen

Setting bit 0 (value 1) enables TFO for client operations, and setting bit 1 (value 2) enables it for server operations; setting both bits (value 3) enables both modes.
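The bitmask can also be decoded programmatically, for example to check a host’s configuration before enabling TFO in an application. A minimal Python sketch (function names hypothetical) that interprets only the two bits described above; later kernels define further flag bits, which it ignores.

```python
from pathlib import Path
from typing import Dict, Optional

TFO_SYSCTL = Path("/proc/sys/net/ipv4/tcp_fastopen")


def decode_tcp_fastopen(value: int) -> Dict[str, bool]:
    """Interpret bit 0 (client) and bit 1 (server) of the tcp_fastopen value."""
    return {"client": bool(value & 0x1), "server": bool(value & 0x2)}


def read_tcp_fastopen(path: Path = TFO_SYSCTL) -> Optional[Dict[str, bool]]:
    """Return the decoded setting, or None if it cannot be read (e.g., not Linux)."""
    try:
        return decode_tcp_fastopen(int(path.read_text().strip()))
    except (OSError, ValueError):
        return None


# On a host with tcp_fastopen set to 1: {'client': True, 'server': False}
print(read_tcp_fastopen())
```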

Besides implementations in various operating systems, TFO still needs an official TCP option number, which IANA will have to allocate in the near future. Until then, an experimental TCP option number is used to remain standards compliant.

From the application developer’s point of view, very little work is required. Web servers and browsers are the programs that stand to benefit most, while programs that do not use TFO, as well as devices such as routers and firewalls, simply keep working because of the automatic fallback to the traditional TCP handshake.

The Author

Timo Schöler is a senior system administrator with Inter.Net Germany GmbH (Snafu) in Berlin. He focuses on load balancing, high availability, and virtualization.


Support Our Work

ADMIN content is made possible with support from readers like you. Please consider contributing when you've found an article to be beneficial.