Lead Image © grafner, 123RF.com

Simple, small-scale Kubernetes distributions for the edge

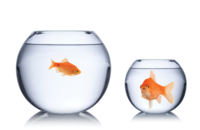

Right-Sized

Production Kubernetes clusters use several physical servers, whether the Kubernetes nodes run directly on the hardware or on a virtualization layer (although the latter is not actually needed today). Many articles about Kubernetes rely on single-node setups for their practical examples because that is what application developers primarily use. At the same time, the number of real-world scenarios in which single-node setups make sense is growing: More and more users are equipping edge devices with a simple Kubernetes environment.

This approach has two advantages. First, you can use a central cluster management system such as Open Cluster Management [1] to manage these devices. Second, you only need to develop and test your applications for a single platform: Kubernetes. A merchandise management system is one practical example. The components for warehousing, invoicing, and ordering run on the central Kubernetes clusters in the data center, whereas small edge servers running the point-of-sale (POS) application in a Kubernetes container are sufficient for the in-store POS systems.
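To illustrate the single-platform point, the following minimal Python sketch builds a Deployment manifest for the POS application. The name `pos`, the image `registry.example.com/pos:1.0`, and port 8080 are hypothetical placeholders, not details from the article. Because the manifest uses only standard `apps/v1` fields, the same file could in principle be applied both to a central cluster and to a single-node edge distribution.

```python
import json


def pos_deployment(image: str, replicas: int = 1) -> dict:
    """Build a minimal Deployment manifest for a hypothetical in-store POS app."""
    labels = {"app": "pos"}  # hypothetical label; the selector must match the pod template
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "pos", "labels": labels},
        "spec": {
            "replicas": replicas,  # one replica is typical on a single edge node
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "pos",
                            "image": image,
                            "ports": [{"containerPort": 8080}],  # hypothetical port
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Print the manifest as JSON; kubectl accepts JSON as well as YAML.
    print(json.dumps(pos_deployment("registry.example.com/pos:1.0"), indent=2))
```

Piping the script's output to `kubectl apply -f -` would create the Deployment on whichever cluster the current kubeconfig context points to, whether that is the data center cluster or an edge node.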

More powerful Kubernetes platforms, such as Rancher (SUSE) or OpenShift (Red Hat), are not easy to set up on a single node. Although it is technically feasible, doing so makes little sense because these full-fledged platforms run several dozen containers themselves. For this reason, various vendors offer lightweight Kubernetes distributions that need only a few containers for edge operation.
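One way to check how many containers a given distribution actually runs is to count them in the output of `kubectl get pods -A -o json`. The following Python sketch assumes that output has already been parsed into a dictionary. It counts only the regular containers declared in each pod spec (init containers are ignored), so it approximates a platform's footprint rather than measuring running processes.

```python
from collections import Counter


def count_containers(pod_list: dict) -> int:
    """Sum the regular containers declared across all pods in a kubectl pod List."""
    return sum(len(pod["spec"]["containers"]) for pod in pod_list.get("items", []))


def count_by_namespace(pod_list: dict) -> dict:
    """Group the container totals by namespace (e.g., kube-system vs. application namespaces)."""
    counts: Counter = Counter()
    for pod in pod_list.get("items", []):
        counts[pod["metadata"]["namespace"]] += len(pod["spec"]["containers"])
    return dict(counts)
```

For example, `count_by_namespace(json.loads(out))`, where `out` holds the kubectl output, shows how much of the total comes from system namespaces such as `kube-system`, which is where a platform's own components usually live.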

In this article, I look at three of these distributions: K3s (SUSE), MicroShift (Red Hat), and MicroK8s (Canonical). Of course, you will find other lean distributions, such as Kubernetes in Docker (KinD), Minikube, and k3d, but I do not look at those options here because they are primarily intended for use on developer desktops.

K3s

K3s [2] is
