Lucy Baldwin, 123RF

A closer look at hard drives

Disco Mania

In the previous issue, I covered some history and the basics of hard drive layout and operation as a prelude to diving fully into the subject in this second part of my series. Resuming where I left off, I'll try to find out everything that my laptop knows about its internal drive (Figure 1).

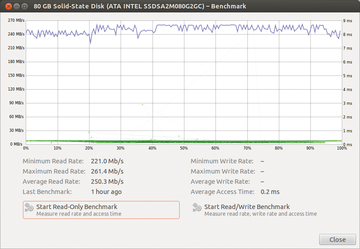

Figure 1: Intel SA2M080G2GC, an Intel 320 second-generation SSD, being tested on a 3Gbps SATA 2 bus.

I have an 80GB Intel 320 SSD, which performs remarkably close to its specified sequential read rating of 270MBps [1]. It is the second-generation drive's write performance, however, that demonstrates the significant benefits of the TRIM [2] extension, which lets an SSD distinguish a true overwrite from a write onto unallocated free space. Because a drive's logic has no insight into filesystem structure, the two operations were previously indistinguishable, which needlessly degraded SSD write performance. With TRIM, the filesystem notifies the disk of file deletions, which resolves the problem in most configurations; RAID setups are the exception and are still negatively affected.
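One common way to have a Linux filesystem send TRIM notifications as files are deleted is the ext4 `discard` mount option. As a rough illustration only, the following Python sketch scans fstab-formatted text and lists the mount points that carry this option. The function name `discard_mounts` and the sample entries (including the UUIDs) are hypothetical and are not taken from the laptop discussed here.

```python
def discard_mounts(fstab_text):
    """Return (mount_point, fs_type) pairs whose options include 'discard'."""
    found = []
    for line in fstab_text.splitlines():
        line = line.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        # A usable entry needs at least: device, mount point, type, options.
        if len(fields) < 4:
            continue
        _device, mount_point, fs_type, options = fields[:4]
        # Options are comma separated; match the exact word 'discard'.
        if "discard" in options.split(","):
            found.append((mount_point, fs_type))
    return found


# Hypothetical fstab contents, for illustration only.
SAMPLE = """\
# <file system>  <mount point>  <type>  <options>                  <dump> <pass>
UUID=aaaa-bbbb   /              ext4    errors=remount-ro,discard  0      1
UUID=cccc-dddd   /home          ext4    defaults                   0      2
"""

if __name__ == "__main__":
    for mount_point, fs_type in discard_mounts(SAMPLE):
        print(f"{mount_point} ({fs_type}) is mounted with discard")
```

To check a real system, the same function could be given the contents of `/etc/fstab`, for example `discard_mounts(open("/etc/fstab").read())`. An entry without `discard` does not prove that TRIM is unused, because trimming can also be triggered periodically by other means.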

The /etc/fstab file shows that this partition is installed with Ubuntu 12.04's default ext4 filesystem, which is
