Pansiri Pikunkaew, 123RF

Protecting your web application infrastructure with the Nginx Naxsi firewall

Fire Protection

Large web applications usually consist of several components, including a front end, back-end application servers, and a database. The front end handles a large part of the request processing. Properly configured and tuned, it can shorten access times, relieve the load on the underlying application servers, and protect against unauthorized access.

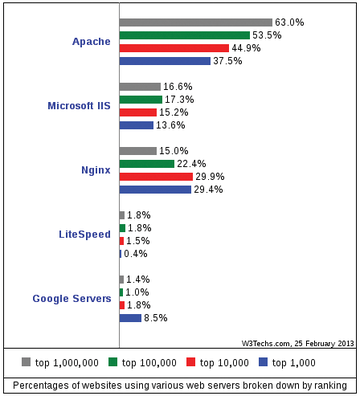

The Nginx web server [1] has quickly established itself in this ensemble. Nginx (pronounced "Engine X") can act as a reverse proxy, a load balancer, and a server for static content. It is known for high performance, stability, and frugal resource requirements. Although Apache is still king of the hill, approximately 30 percent of the top 10,000 websites already benefit from Nginx [2] (Figure 1).
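
The three roles mentioned above can be combined in a single configuration. The following is a minimal sketch rather than a setup taken from this article: the upstream name `app_servers`, the back-end addresses, the host name, and the file paths are all hypothetical placeholders that would need to be adapted to a real environment.

```nginx
# Minimal sketch; all names, addresses, and paths are hypothetical.
events {}

http {
    # Load balancer: requests are distributed across these back ends
    # (round-robin is the default method).
    upstream app_servers {
        server 10.0.0.11:8080;
        server 10.0.0.12:8080;
    }

    server {
        listen 80;
        server_name www.example.com;

        # Static content: files are served directly from disk, so a
        # request for /static/logo.png maps to
        # /var/www/example/static/logo.png.
        location /static/ {
            root /var/www/example;
        }

        # Reverse proxy: everything else is forwarded to the
        # application servers defined in the upstream block.
        location / {
            proxy_pass http://app_servers;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
        }
    }
}
```

In this sketch, the application servers never face clients directly; only the front end listens on the public port, which is also the natural place to add filtering later.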

Although Nginx is finding success with all sizes of networks, it is particularly known for its ability to scale to extremely high volumes. According to the project website, "Unlike traditional servers, Nginx doesn't rely on threads to handle requests. Instead it uses a much more scalable event-driven (asynchronous) architecture. This architecture uses

...