Lead Image © designpics, 123RF.com

LXC 1.0

Lean and Quick

Linux containers have been fully functional since kernel 2.6.29. Containers on Linux are older than that, in the form of Virtuozzo [1] and OpenVZ [2]; the difference is that the Linux kernel now has all the necessary components for operating containers and no longer requires patches. Kernel namespaces isolate containers from one another, and cgroups (control groups) limit resources and take care of priorities.
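To make the two building blocks more tangible, the following minimal sketch lists the namespaces and cgroups a process belongs to by reading the kernel's `/proc` interface. It assumes a Linux system that exposes `/proc/<pid>/ns` as symbolic links and `/proc/<pid>/cgroup` as a text file; the function names and the `PROC_ROOT` override are hypothetical helpers introduced only for this illustration and are not part of LXC.

```shell
#!/bin/sh
# Sketch: inspect the namespaces and cgroups of a process via /proc.
# PROC_ROOT is a hypothetical override (default /proc) for illustration only.

# Print one line per namespace the process belongs to.
list_namespaces() {
    pid="${1:-$$}"
    ns_dir="${PROC_ROOT:-/proc}/$pid/ns"
    if [ ! -d "$ns_dir" ]; then
        echo "no namespace information at $ns_dir" >&2
        return 1
    fi
    for entry in "$ns_dir"/*; do
        # Each entry is a symlink whose target looks like "pid:[4026531836]".
        printf '%s -> %s\n' "${entry##*/}" "$(readlink "$entry")"
    done
}

# Print the cgroup membership of the process, one hierarchy per line.
list_cgroups() {
    pid="${1:-$$}"
    cg_file="${PROC_ROOT:-/proc}/$pid/cgroup"
    if [ ! -r "$cg_file" ]; then
        echo "no cgroup information at $cg_file" >&2
        return 1
    fi
    cat "$cg_file"
}

# Inspect the current shell; fall back to a note where /proc is unavailable.
list_namespaces "$$" || echo "namespace listing not available here"
list_cgroups "$$" || echo "cgroup listing not available here"
```

Two processes that show the same identifier for a namespace type share that namespace; a process inside a container would typically show different identifiers than a shell on the host.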

Solid Foundation

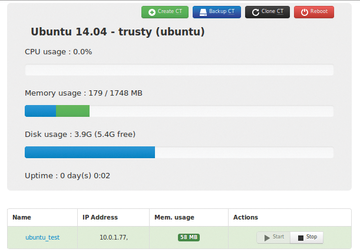

The first stable version of the LXC [3] userspace tools for managing containers has been available since February 2014. Ubuntu 14.04 has LXC 1.0 on board, which the developers recommend and will support until April 2019 (Figure 1). If you want to install LXC on Ubuntu Trusty Tahr, you are best off using the v14.04.1 [4] server images. Kernel 3.13 (used here) has five years of LTS support; this does not apply to the kernels shipped with later LTS point releases. After installing the system, you can get the LXC tools by entering:

apt-get install lxc

Among other things, the packages listed in Table 1 will land on your computer.
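After the installation, a quick check can confirm that the userspace tools are actually on the search path. The sketch below is one possible way to do this; the function name `check_lxc_tools` is a hypothetical helper, and the default tool list is an assumption about which LXC 1.0 commands you are likely to need first, not a complete inventory of the package.

```shell
#!/bin/sh
# Sketch: report which LXC command-line tools are available on PATH.
# The default tool list is an illustrative assumption, not a full inventory.
check_lxc_tools() {
    [ "$#" -gt 0 ] || set -- lxc-checkconfig lxc-create lxc-start lxc-ls
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "$tool: found"
        else
            echo "$tool: missing"
            missing=1
        fi
    done
    # Non-zero exit status if at least one tool could not be found.
    return "$missing"
}

check_lxc_tools || echo "Some LXC tools are missing; try: apt-get install lxc"
```

If all tools are found, running `lxc-checkconfig` is a sensible next step, because it reports whether the running kernel provides the namespace and cgroup features that containers depend on.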
