Lead Image © cepixx, 123RF.com

Safeguard and scale containers

Herding Containers

Since Docker [1] was released three years ago, containers have become a perennial favorite in the Linux universe, and the native ports for Windows and OS X are also attracting great interest. Developers were initially interested only in testing their applications in containers as microservices [2], but market players beyond Google and other major portals now have their first production experience with containers in large setups.

In this article, I look at how containers behave in large herds, what advantages arise from this, and what you need to watch out for.

Herd Animals

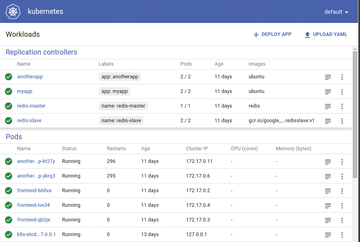

Admins clearly need a way to orchestrate Docker containers in bulk, and Kubernetes [3] (Figure 1), a system that is now two years old, does just that. As part of Google Infrastructure for Everyone Else (GIFEE), Kubernetes is written in Go and available under the Apache 2.0 license; at the time of writing, the stable version was 1.3.

The source code is available on GitHub
