
Questions about the present and future of OpenStack

Maturity Processes

ADMIN Magazine: The OpenStack Foundation has changed its name to the OpenInfra Foundation. Can you say something about the part OpenStack plays in this new setting?

Thierry Carrez: The foundation was originally created to host and promote the OpenStack project. In doing so over the last 10 years, we assembled a wide community of operators, organizations, and developers interested in using open source solutions to provide infrastructure. With such an audience, it is only natural that we support and host other projects relevant to the same audience.

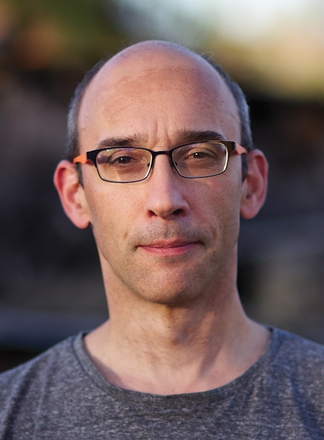

Thierry Carrez, General Manager at the OpenInfra Foundation, was involved in founding the OpenStack project as a systems engineer and still contributes to its governance and release management. A fellow of the Python Software Foundation, he previously worked as technical lead for Ubuntu Server at Canonical, operations lead for the Gentoo Linux Security team, and IT manager at various companies.

This is why we became the OpenInfra Foundation: to support other open infrastructure projects beyond OpenStack that are of interest to the group of infrastructure providers we assembled. OpenStack is still by far the largest project hosted at the Foundation, though, so it is central to all of our activities. With the change and the new project hosting offering we presented at the 2018 Summit in Berlin, we are ready to tackle the next decade of open infrastructure projects.

AM: OpenStack celebrates its 12th anniversary this year. Where do you see it in the coming years, and what will change?

TC: Today, OpenStack is the de facto open source standard for deploying cloud infrastructure services, whether to offer private resources to a given organization or public resources to anyone around the world with a credit card. Combined with the Linux kernel and Kubernetes for application orchestration, it forms a very popular open source framework, the Linux OpenStack Kubernetes Infrastructure (LOKI). Usage is growing significantly, driven by new requirements and new practices.

That said, OpenStack is functionally mature and its scope is now well defined, so I expect new development to slow down and focus on maintenance going forward. In the same way Linux kernel development is driven by new capabilities in computer hardware, I expect OpenStack development to be driven by new capabilities in data center hardware that need to be made available to the software.

AM: OpenStack is still a very successful project with big numbers, but some critics say the growth in installations comes more from existing customers than from new ones. Is that right?

TC: It's really both. In June we held our first in-person event since the pandemic, the OpenInfra Summit in Berlin [1]. We met one new user after another, many of them running OpenStack at an impressive scale of more than 100,000 compute cores. New requirements like digital sovereignty are driving a lot of adoption, especially in scientific use cases and the public cloud in Europe. The need to deploy new municipal and research systems rapidly for pandemic response also resulted in a lot of additional deployments worldwide. Existing implementations keep growing, as well. It's a healthy balance of energy and creativity for the community.

AM: When you take a look at possible competitors, why should users choose OpenStack and not AWS? What are the main differences?

TC: Users should always choose the solution that is the best for their specific needs. There are many reasons why someone would choose one, the other, or both. Our community tells us that they see OpenStack playing an important role to control their infrastructure costs, or to fulfill their regulatory requirements, or to answer very specific use cases for which hyperscalers can't provide a good solution. In those strategies, private OpenStack clouds are often used in combination with AWS, Azure, Google, or one of the many OpenStack-based public clouds in a multicloud or hybrid cloud deployment.

That said, the main difference between OpenStack and proprietary clouds is the ability to participate in an open, active community and directly influence the direction of the project through personal involvement. That's something proprietary solutions will never match.

AM: Companies are desperately looking for developers and IT experts. What steps are planned to make OpenStack easier to use to mitigate this staff shortage?

Jeremy Stanley has worked as a Unix and Linux sys admin for more than two decades, focusing on information security, Internet services, and data center automation. He is a core member of the OpenStack project's infrastructure team, serving on both the technical committee and the vulnerability management team.

Jeremy Stanley: It's funny: Every time I hear someone talk about how hard OpenStack is to deploy and manage, it turns out they have not touched it for years. The community has made operations a major focus of new development, and while infrastructure will always be hard, the stereotypical challenges of deploying and operating OpenStack are no longer issues.

AM: In detail, are there any plans regarding the development of OpenStack to make deployment less complicated?

JS: In recent years, the community has put a lot of energy into supporting installations for a variety of popular configuration management and orchestration solutions like Ansible, Chef, Helm, and Puppet, as well as focusing on both packaging for server distributions and container-based frameworks like Kubernetes.

AM: What do you think could make the installation more simple?

JS: Many new users don't realize that OpenStack isn't an all-or-nothing solution. It's a composable, modular suite of services, most of which are optional, and some can even be used entirely on their own. We're working to amplify this message, because a lot of the perceived complexity is really a result of people mistakenly installing more than they actually need.

AM: Any plans to make the upgrading process less complex and with less downtime?

TC: At this point, upgrading an OpenStack cluster is a well-established process, and it does not cause significant downtime. At the same time, deployments have grown significantly, with some now well past the million-core mark. That represents a lot of clusters, so keeping them all up to date with a release every six months is still significant work.

To reduce the pressure on established deployments to upgrade, starting with the OpenStack "Antelope" release in March 2023, OpenStack will offer the option to upgrade directly once a year instead of every six months. This should make life easier for OpenStack operators.
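The yearly option works by designating every other release a SLURP (Skip Level Upgrade Release Process) release, so an operator can jump directly from one SLURP release to the next. The Python sketch below plans an upgrade path under the assumption that, from 2023.1 ("Antelope") onward, the YYYY.1 releases are the SLURP ones; the `Release` class and `upgrade_path` function are hypothetical helpers for illustration, not part of any OpenStack tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class Release:
    year: int
    cycle: int  # 1 = first release of the year, 2 = second release

    @classmethod
    def parse(cls, name: str) -> "Release":
        year, cycle = name.split(".")
        return cls(int(year), int(cycle))

    def __str__(self) -> str:
        return f"{self.year}.{self.cycle}"

    def is_slurp(self) -> bool:
        # Assumption: starting with 2023.1 ("Antelope"), every .1 release is a SLURP.
        return self.cycle == 1 and self.year >= 2023

    def next(self) -> "Release":
        # Regular six-month step to the following release.
        return Release(self.year, 2) if self.cycle == 1 else Release(self.year + 1, 1)


def upgrade_path(current: str, target: str) -> list[str]:
    """Return the releases to upgrade through, in order, ending at target."""
    cur, tgt = Release.parse(current), Release.parse(target)
    if tgt < cur:
        raise ValueError("downgrades are not supported")
    path = []
    while cur < tgt:
        skip = Release(cur.year + 1, 1)
        # A SLURP release may jump straight to the next SLURP, one year later,
        # as long as that does not overshoot the target.
        if cur.is_slurp() and skip <= tgt:
            cur = skip
        else:
            cur = cur.next()
        path.append(str(cur))
    return path
```

For example, `upgrade_path("2023.1", "2025.1")` returns `["2024.1", "2025.1"]`: two yearly upgrades instead of four six-monthly ones.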

AM: Can you describe the modular architecture of OpenStack?

JS: The fundamental design principle across OpenStack projects is that services interact with each other through REST (i.e., HTTP-based) interfaces to coordinate shared resources. Services are written in a single programming language (Python) with a consistent coding style, avoiding significant duplication through central libraries and consensus around dependencies. The result is a pluggable suite of service options that organizations can tailor to their particular use cases by installing just what they need, while still being able to grow their solution by adding other services as their requirements change.
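To make the REST interaction concrete, the sketch below builds (but does not send) two HTTP requests with the Python standard library: a Keystone v3 password authentication against `/v3/auth/tokens`, and a Nova `GET /servers` call that passes the resulting token in the `X-Auth-Token` header. Keystone returns the token in the `X-Subject-Token` response header. The endpoint URLs, user, and project names are hypothetical placeholders; a real cloud publishes its own endpoints in the Keystone service catalog.

```python
import json
import urllib.request

# Hypothetical endpoints for illustration only.
KEYSTONE_URL = "https://keystone.example.com:5000/v3"
NOVA_URL = "https://nova.example.com:8774/v2.1"


def token_request(username: str, password: str, project: str,
                  domain_id: str = "default") -> urllib.request.Request:
    """Build a Keystone v3 password-authentication request scoped to a project."""
    body = {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"id": domain_id},
                        "password": password,
                    }
                },
            },
            "scope": {"project": {"name": project, "domain": {"id": domain_id}}},
        }
    }
    return urllib.request.Request(
        f"{KEYSTONE_URL}/auth/tokens",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def list_servers_request(token: str) -> urllib.request.Request:
    """Build a Nova request listing servers in the project the token is scoped to."""
    return urllib.request.Request(
        f"{NOVA_URL}/servers",
        headers={"X-Auth-Token": token, "Accept": "application/json"},
        method="GET",
    )
```

Against a real cloud, one would send the first request with `urllib.request.urlopen`, read the `X-Subject-Token` response header, and pass that value to `list_servers_request`. Every other service accepts the same token, which is what lets independently installed services cooperate.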

AM: What should change in terms of the OpenStack architecture to make management easier?

JS: A significant undertaking, already well underway, is the implementation of an extensible role-based access control model. With it, operators will be able to delegate permissions for some tasks to other users, reducing their own workload. Consumers of cloud resources will likewise be able to grant fine-grained control over specific actions to the relevant stakeholders in their organizations. This work is still in its early stages, starting with support for a read-only role that gives audit systems access without the risk of the user making changes; the ability to create more specific roles will follow in coming releases.
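The idea behind a read-only role can be illustrated with Keystone's default roles, where `admin` implies `member` and `member` implies `reader`. The toy model below expands a user's assigned roles through that implication chain and checks them against an action table. The action names and the action-to-role mapping are hypothetical; real services express such rules in oslo.policy files with check strings such as `role:reader`, and this sketch does not reproduce that library.

```python
# Keystone's default roles form an implication chain:
# admin implies member, and member implies reader.
IMPLIED_ROLES = {"admin": {"member"}, "member": {"reader"}, "reader": set()}

# Hypothetical action-to-role mapping, not any service's real policy.
REQUIRED_ROLE = {
    "server:list": "reader",      # read-only: safe for audit systems
    "server:show": "reader",
    "server:create": "member",    # changes state
    "server:delete": "member",
    "project:set_quota": "admin",
}


def effective_roles(assigned: set[str]) -> set[str]:
    """Expand assigned roles with every role they imply, transitively."""
    result: set[str] = set()
    stack = list(assigned)
    while stack:
        role = stack.pop()
        if role not in result:
            result.add(role)
            stack.extend(IMPLIED_ROLES.get(role, ()))
    return result


def is_allowed(assigned: set[str], action: str) -> bool:
    required = REQUIRED_ROLE.get(action)
    if required is None:
        return False  # deny unknown actions by default
    return required in effective_roles(assigned)
```

An auditor holding only `reader` can call `server:list` but not `server:delete`, while an `admin` inherits every lower role without having each one assigned explicitly.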

AM: What do you think are the key deployment challenges that organizations face with OpenStack?

JS: The hardest challenges, by far, are related to planning and sizing infrastructure. No two use cases are the same, and especially with the added complications in obtaining hardware these days, it's more important than ever to be as accurate as possible and not overspend. Unfortunately, sizing a deployment (of anything beyond trivial complexity, not just OpenStack) is more of an art than a science. If you haven't done it often and for a wide variety of solutions, it's hard to even know where to begin. That's really one of the biggest reasons to establish a relationship with an OpenStack distribution vendor: They have more experience than anyone else at estimating how much of what sort of hardware you're likely to need and how best to design the deployment to maximize efficiency and minimize cost.
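Even if sizing is more art than science, the first back-of-envelope step is simple arithmetic. The sketch below estimates how many compute hosts a projected workload needs, applying CPU and RAM overcommit ratios (roughly what Nova's `cpu_allocation_ratio` and `ram_allocation_ratio` options control), memory reserved for the host itself, growth headroom, and spare hosts for failures and maintenance. The function name and every default value are illustrative assumptions, not recommendations; real sizing also has to account for storage, networking, and control plane nodes.

```python
import math


def hosts_needed(vcpus: int, ram_gib: int, host_cores: int, host_ram_gib: int,
                 cpu_ratio: float = 4.0, ram_ratio: float = 1.0,
                 reserved_ram_gib: int = 8, headroom_pct: int = 20,
                 spare_hosts: int = 1) -> tuple[int, str]:
    """Estimate compute hosts needed and which resource is the bottleneck.

    All defaults are illustrative assumptions, not recommendations.
    """
    if host_ram_gib <= reserved_ram_gib:
        raise ValueError("host RAM must exceed the RAM reserved for the host")
    # Add growth headroom to the projected guest demand.
    cpu_demand = vcpus * (100 + headroom_pct) / 100
    ram_demand = ram_gib * (100 + headroom_pct) / 100
    # Capacity a single host offers to guests after overcommit and reservation.
    cpu_per_host = host_cores * cpu_ratio
    ram_per_host = (host_ram_gib - reserved_ram_gib) * ram_ratio
    cpu_hosts = math.ceil(cpu_demand / cpu_per_host)
    ram_hosts = math.ceil(ram_demand / ram_per_host)
    limiting = "ram" if ram_hosts >= cpu_hosts else "cpu"
    # Spare hosts absorb hardware failures and rolling maintenance.
    return max(cpu_hosts, ram_hosts) + spare_hosts, limiting
```

For 2,000 vCPUs and 8,000GiB of guest RAM on hosts with 64 cores and 512GiB each, `hosts_needed(2000, 8000, 64, 512)` returns `(21, "ram")`: CPU alone would need 10 hosts, but memory needs 20, plus one spare. Results like this show why the overcommit assumptions matter as much as the hardware choice.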

AM: Maybe you could give a special view on the "Yoga" release and how it helps make things easier.

JS: It's hard to single out a few features, but one good example is that Ironic [for provisioning bare metal machines] continued to evolve its defaults to align with more modern server infrastructures, switching from legacy BIOS to UEFI and emphasizing local boot workflows for deployed images. Another is Kolla [production-ready containers and deployment tools], which focused on a single set of container images rather than trying to maintain two different solutions in parallel, and simplified its design with a new Ansible collection that is shared across its components. Additionally, Nova added support for offloading the network control plane to a SmartNIC, freeing up more capacity for hypervisor workloads, and can now also provide processor architecture emulation for users who want to run software built for ARM, MIPS, PowerPC, or S/390 systems, all on the same host.

AM: In Yoga, Neutron has a new feature called Local IP. Can you explain its performance benefits?

TC: Local IP is primarily focused on high efficiency and performance of the networking data plane for very large scale clouds or clouds with high network throughput demands. Local IP is a virtual IP that can be shared across multiple ports or VMs, is only reachable within the same physical server or node boundaries, and is optimized for such performance use cases.

TC: It was a standing-room-only session, and it was great to see operators of large deployments at Ubisoft, Bloomberg, OVHcloud, CERN, Workday, and Adevinta share their experiences and pain points. They primarily discussed two classic scaling pain points in large OpenStack clusters: RabbitMQ and Neutron. In particular, they traded tips on how best to monitor and alleviate load issues.

This discussion was driven by the operators themselves within the OpenStack Large Scale SIG and is a great example of what open communities can achieve working together – something that is only truly possible with open source software.

Infos

- OpenInfra Summit Berlin: https://www.youtube.com/hashtag/openinfrasummit
