Photo by Quino Al on Unsplash

Analyzing tricky database problems

Set Theory

Troubleshooting complex cloud infrastructures can quickly turn into a search for the proverbial needle in a haystack. The effort can nevertheless pay off, as a practical example from the Swiss foundation Switch shows. For more than 30 years, the foundation has organized the networking of IT resources at all Swiss universities [1]. Besides the Swiss universities, the Switch community includes other institutions from the education and research sectors, as well as private organizations (e.g., financial institutions and industry-related research institutions).

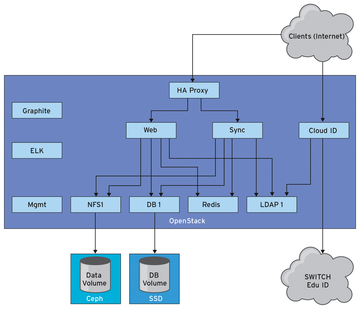

Because of its high degree of networking, Switch depends on a powerful cloud infrastructure. The foundation provides tens of thousands of users with the Switch Drive file-sharing service, which is based on ownCloud, is hosted 100 percent on Swiss servers, and is used mainly for synchronizing and sharing documents (Figure 1).

Switch Drive is essentially an ownCloud offering under the Switch brand; it serves 30,000 users and manages about 105 million files. The oc_filecache table alone contains about 100 million rows. To handle this volume, Switch runs six MariaDB servers [2] in a Galera cluster.
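With an even number of nodes, such as the six MariaDB servers here, it helps to keep Galera's majority-quorum rule in mind: after a network partition, only a component holding more than half of the previous primary component's weight stays primary and keeps accepting writes. The following is a minimal sketch of that rule, assuming equal node weights (Galera's default `pc.weight` of 1) and that all six nodes belonged to the last primary component. The function name `has_quorum` is hypothetical and not part of any Galera or MariaDB API.

```python
def has_quorum(reachable_nodes: int, total_nodes: int) -> bool:
    """Return True if a partition keeps a strict majority of the nodes.

    Assumption: every node has the same weight and all total_nodes were
    members of the last primary component. Exactly half is NOT enough.
    """
    return 2 * reachable_nodes > total_nodes


TOTAL = 6  # six MariaDB nodes, as in the Switch Drive setup

# Enumerate every possible two-way split of the cluster.
for side_a in range(TOTAL + 1):
    side_b = TOTAL - side_a
    print(f"{side_a}/{side_b} split: "
          f"A primary={has_quorum(side_a, TOTAL)}, "
          f"B primary={has_quorum(side_b, TOTAL)}")
```

In a 3/3 split, neither half keeps quorum, so both sides stop accepting writes; this protects against split-brain, but it is also why even-sized clusters are often paired with an arbitrator or adjusted node weights.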
