
Better monitoring for websites and certificates

Tick It Off

Detailed Monitoring of Certificates

The check_cert tool enters the stage just like the HTTP check: completely implemented in Rust and usable both as a plugin for Nagios-compatible systems and standalone on the command line. One small difference is that check_cert has no predecessor; the tool and its functions were developed from scratch.

In Checkmk, it is located right next to the HTTP check under Setup | Services | HTTP, TCP, email … and then Networking | Check certificates. A fairly comprehensive certificate check (still without using the full range of options) looks like this:

./check_cert --url checkmk.de --port 443 --not-after 3456000 1728000 --max-validity 90 --serial 05:0e:50:04:eb:b0:35:ad:e9:d7:6d:c1:0b:36:d6:0e:33:f1 --signature-algorithm 1.2.840.10045.4.3.2 --issuer-o "Let's Encrypt" --pubkey-algorithm rsa --pubkey-size 256
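To fill in --serial, you might read the certificate's serial number with your own tooling. The following Python sketch assumes that source delivers the serial as a plain hex string (for example, the serialNumber field of the dict returned by Python's ssl.SSLSocket.getpeercert()) and converts it into the colon-separated, lowercase byte notation used in the command above. The helper name is hypothetical, and whether check_cert also accepts other notations is not covered here.

```python
def to_colon_serial(serial_hex: str) -> str:
    """Turn a hex serial such as '050E50...' into '05:0e:50:...'."""
    # Accept input with or without colons, in any letter case.
    digits = serial_hex.strip().replace(":", "").lower()
    if len(digits) % 2:
        # Pad to whole bytes so every group has two hex digits.
        digits = "0" + digits
    return ":".join(digits[i:i + 2] for i in range(0, len(digits), 2))


print(to_colon_serial("050E5004EBB035ADE9D76DC10B36D60E33F1"))
# prints 05:0e:50:04:eb:b0:35:ad:e9:d7:6d:c1:0b:36:d6:0e:33:f1
```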

This check would give you a pretty good level of security; the exact serial number requirement alone leaves little room for doubt. Many options are self-explanatory; others, such as signature-algorithm and not-after, are not.

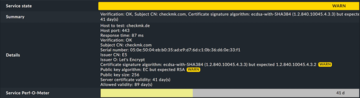

The signature algorithm is specified here by its object identifier (OID); the Checkmk web interface lists it in plain text (here, ecdsa-with-SHA256). You already know the values that follow not-after from earlier: They correspond to the 40 and 20 days of remaining validity at which the status changes to WARN and CRIT, respectively (--certificate-levels 40,20), but here they are specified in seconds.
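The conversion from days into the seconds expected after not-after, and the resulting state, can be sketched in Python as follows. The function names are hypothetical, and treating the thresholds as strict "less than" boundaries is an assumption, not documented check_cert behavior.

```python
from datetime import datetime, timedelta, timezone

SECONDS_PER_DAY = 86_400


def days_to_seconds(days: int) -> int:
    """Convert a threshold given in days into seconds."""
    return days * SECONDS_PER_DAY


def expiry_state(not_after: datetime, now: datetime, warn_s: int, crit_s: int) -> str:
    """Map the remaining validity to OK/WARN/CRIT (boundary handling is assumed)."""
    remaining = (not_after - now).total_seconds()
    if remaining < crit_s:
        return "CRIT"
    if remaining < warn_s:
        return "WARN"
    return "OK"


warn, crit = days_to_seconds(40), days_to_seconds(20)
print(warn, crit)  # prints 3456000 1728000

now = datetime(2025, 1, 1, tzinfo=timezone.utc)
print(expiry_state(now + timedelta(days=30), now, warn, crit))  # prints WARN
```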

In the web interface, the corresponding options for check_httpv2 and check_cert have the same names and, for simplicity, are configured in days. However, check_cert can also check the maximum remaining lifetime or accept self-signed certificates without ignoring certificate validity altogether.

How does this comprehensive check show up in monitoring? Although the serial number matches exactly, you see a warning that the signature and public key algorithms do not match. Either this is a configuration error, or it simply demonstrates how warnings for individual options are displayed in the web interface (Figure 6).
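The idea behind per-option warnings can be illustrated with a small Python sketch: each expected value is compared with the value observed in the certificate, and every mismatch becomes its own warning line, with known OIDs rendered by name. The option keys, the observed values, and the message format are invented for illustration; this is not check_cert's internal logic or output.

```python
from __future__ import annotations

# Small, illustrative OID-to-name table (not exhaustive).
OID_NAMES = {
    "1.2.840.10045.4.3.2": "ecdsa-with-SHA256",
    "1.2.840.10045.4.3.3": "ecdsa-with-SHA384",
    "1.2.840.113549.1.1.11": "sha256WithRSAEncryption",
}


def display(value: object) -> str:
    """Render OIDs by name when known, everything else as-is."""
    return OID_NAMES.get(str(value), str(value))


def compare_options(expected: dict, observed: dict) -> list[str]:
    """Return one warning per expected option whose observed value differs."""
    warnings = []
    for option, want in expected.items():
        got = observed.get(option)
        if got != want:
            warnings.append(f"{option}: expected {display(want)}, got {display(got)}")
    return warnings


expected = {
    "serial": "05:0e:50:04:eb:b0:35:ad:e9:d7:6d:c1:0b:36:d6:0e:33:f1",
    "signature_algorithm": "1.2.840.10045.4.3.2",
    "pubkey_algorithm": "rsa",
    "pubkey_size": 256,
}
# Hypothetical observed values, made up for this illustration only.
observed = {
    "serial": "05:0e:50:04:eb:b0:35:ad:e9:d7:6d:c1:0b:36:d6:0e:33:f1",
    "signature_algorithm": "1.2.840.10045.4.3.3",
    "pubkey_algorithm": "ec",
    "pubkey_size": 256,
}

for line in compare_options(expected, observed):
    print("WARN", line)
```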

So much for the basics of using the two checks for individual websites and certificates. Now it's time for a little more practice and complexity.

Monitoring Many Websites

An important question in practice is how Checkmk can be used to monitor a large number of websites. This raises an issue that has not played a role so far: On which host(s) do the checks actually run?

The classic approach with the old check plugin is to create one rule per website and assign all the resulting services to a virtual host that exists solely for this purpose. With three sites to monitor, this is no problem; with 200 sites, it certainly is. Checkmk offers two alternatives: multiple endpoints and macros.

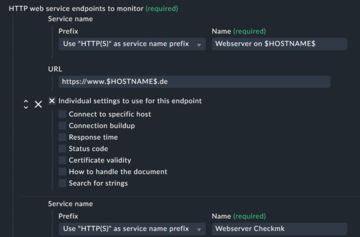

Multiple endpoints are very straightforward: In the configuration of check_httpv2, you can specify any number of URLs as endpoints, all of which share a base configuration. Additionally, each endpoint can be given its own options, which override the base configuration. The end result is one rule, one host, and many endpoints.
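The relationship between the base configuration and per-endpoint options boils down to "endpoint settings win." A minimal Python sketch of that merge follows; the setting names (method, timeout_s, cert_levels_days) and the shop.example.com endpoint are hypothetical and do not reflect Checkmk's actual rule fields.

```python
# Hypothetical base settings shared by all endpoints of one rule.
BASE = {"method": "GET", "timeout_s": 10, "cert_levels_days": (40, 20)}

# Each endpoint names its URL and, optionally, settings that override BASE.
ENDPOINTS = [
    {"url": "https://example.com/"},
    {"url": "https://www.example.com/imprint", "timeout_s": 30},
    {"url": "https://shop.example.com/", "method": "HEAD"},
]


def effective_configs(base: dict, endpoints: list) -> list:
    """Merge each endpoint over the shared base; endpoint keys take precedence."""
    return [{**base, **endpoint} for endpoint in endpoints]


for cfg in effective_configs(BASE, ENDPOINTS):
    print(cfg["url"], cfg["method"], cfg["timeout_s"])
```

Building a new dict per endpoint leaves the shared base untouched, so one endpoint's overrides cannot leak into another.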

Alternatively, you can use macros. Instead of a URL, you specify the hostname macro ($HOSTNAME$) as the endpoint (Figure 7). If the rule is then restricted to a specific folder for website hosts, for example, the check runs for each host in that folder against the website of the same name; in pseudocode:

./check_httpv2 --url https://$HOSTNAME$

If you later want to monitor the website example.com, all you have to do is create a host named example.com in the correct folder, and the check will run against this address. The end result is one rule, one endpoint, and many hosts.
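Conceptually, the macro method expands one command template once per host in the folder. The Python sketch below mimics that substitution; the folder contents and helper names are hypothetical, and in Checkmk the monitoring core performs the macro replacement itself. Because the template is a plain Python string, no shell ever sees (or expands) $HOSTNAME$.

```python
import shlex

# Hypothetical folder layout: the rule is restricted to "websites".
HOSTS_BY_FOLDER = {
    "websites": ["example.com", "example.org"],
    "servers": ["db01"],
}

TEMPLATE = ["./check_httpv2", "--url", "https://$HOSTNAME$"]


def expand(template: list, hostname: str) -> list:
    """Replace the $HOSTNAME$ macro in every argument."""
    return [arg.replace("$HOSTNAME$", hostname) for arg in template]


def commands_for_folder(folder: str) -> list:
    """One command per host in the folder the rule applies to."""
    return [expand(TEMPLATE, host) for host in HOSTS_BY_FOLDER.get(folder, [])]


for cmd in commands_for_folder("websites"):
    print(shlex.join(cmd))
```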

Both variants have their advantages, and both work for check_cert. With the macro method, you manage multiple hosts instead of multiple rules or endpoints, which offers significantly more options for structuring, is clearer, and neatly covers a standard case: Is the host's website accessible under the host's name? The endpoint method is more flexible when individual websites need different configurations, and it bundles everything into one rule. This approach is particularly advantageous if a website and its various subpages (imprint, with or without www, etc.) are to be monitored. In practice, you will usually end up with a mix of both approaches.

For the next practical exercise, I return to the command line.

Websites Reachable via Load Balancers

Nowadays, requesting a website usually involves redirects. The check_httpv2 tool follows these by default and reports the redirect in the output. In the following example, it stops at a redirect whose target has a different IP address:

URL to test: https://example.com/ Stopped on redirect to: https://www.example.com/ (changed IP) (!) Status: 301 Moved Permanently
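The "(changed IP)" note suggests a redirect policy that compares the IP address of each redirect target with that of the current URL. The Python sketch below illustrates such a policy with a hard-coded redirect map and resolver table (all hypothetical, using documentation addresses); it is not check_httpv2's implementation, and the tool's actual behavior depends on its redirect settings.

```python
from urllib.parse import urlsplit

# Hypothetical redirect map and DNS answers (documentation IPs).
REDIRECTS = {"https://example.com/": "https://www.example.com/"}
RESOLVE = {"example.com": "192.0.2.10", "www.example.com": "192.0.2.20"}


def follow(url: str, redirects: dict, resolve: dict, max_hops: int = 10):
    """Follow redirects, but stop when the next target resolves to another IP."""
    for _ in range(max_hops):
        target = redirects.get(url)
        if target is None:
            return url, "final"
        current_ip = resolve[urlsplit(url).hostname]
        target_ip = resolve[urlsplit(target).hostname]
        if target_ip != current_ip:
            return target, "stopped on redirect (changed IP)"
        url = target
    return url, "stopped (too many redirects)"


print(follow("https://example.com/", REDIRECTS, RESOLVE))
```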

However, if a load balancer distributes requests for a domain name across several back-end servers with different IP addresses, a performance problem raises the question of where exactly it occurs. In the simplest case, separate checks are recommended:

- A check on the domain name example.com, including the load balancer

- Checks directly against the IP addresses of the web servers, bypassing the load balancer

The --server option is important here; a call could look like this:

./check_httpv2 --url example.com --server 192.0.2.73

In this case, availability is not checked via the address the URL resolves to, but directly at the forwarding destination, that is, the specific IP address. In practice, you could use a rule with multiple endpoints to check both the URL and each individual server behind the load balancer. In Checkmk, you will find this option under Connect to physical host.
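For a handful of back ends, the set of checks described above (one via the load balancer, one per back-end server with --server) can be generated programmatically. The Python sketch below only builds the command lines; the helper name and the second back-end address (192.0.2.74) are invented, and in Checkmk you would model the same thing as endpoints of one rule rather than as a script.

```python
import shlex


def lb_check_commands(domain: str, backends: list) -> list:
    """One check through the load balancer plus one check per back-end IP."""
    commands = [["./check_httpv2", "--url", domain]]
    for ip in backends:
        # --server sends the request to this IP instead of the resolved address.
        commands.append(["./check_httpv2", "--url", domain, "--server", ip])
    return commands


for cmd in lb_check_commands("example.com", ["192.0.2.73", "192.0.2.74"]):
    print(shlex.join(cmd))
```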

By the way, don't confuse this option with the proxy option! You can route a check through a proxy server with --proxy-url or, in Checkmk, with HTTP proxy in the Connection buildup section. That placement also makes it clear that the --server option has nothing to do with how the connection is established, but rather defines an alternative destination.

It should now be clear how the check and its options work, but I still need to mention one feature separately because it goes beyond pure log data: content checking.

