Coordinating distributed systems with ZooKeeper

Relaxed in the Zoo

Admins who manage a compute cluster with a certain number of nodes and high-availability (HA) requirements will at some point need a central management tool that takes care of, for example, the naming, grouping, or configuration of the menagerie. Thanks to ZooKeeper [1], which is available under the Apache 2.0 license, not every cluster has to provide its own synchronization service. The software can be integrated into existing systems, for example, a Hadoop cluster.

Server and Clients

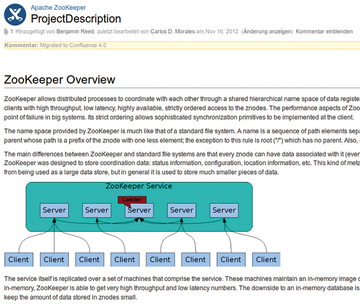

A ZooKeeper server keeps track of the status of all system nodes. In larger decentralized systems, multiple replicating servers can be used (Figure 1). They then synchronize node status information among themselves, making sure that system tasks run in a fixed order and that no inconsistencies occur.
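To illustrate why a fixed order matters, the following Python toy model may help. It is not ZooKeeper's actual replication protocol: a hypothetical `Leader` stamps every status update with an increasing sequence number, and each hypothetical `Replica` applies updates strictly in that order, buffering any that arrive early. All class and method names are assumptions made for this sketch.

```python
class Leader:
    """Assigns a strictly increasing sequence number to every update."""

    def __init__(self):
        self._next_seq = 1

    def stamp(self, node, status):
        update = (self._next_seq, node, status)
        self._next_seq += 1
        return update


class Replica:
    """Applies updates in sequence order, buffering those that arrive early."""

    def __init__(self):
        self.state = {}      # node name -> last known status
        self._applied = 0    # sequence number of the last applied update
        self._pending = {}   # early arrivals, keyed by sequence number

    def receive(self, update):
        seq, node, status = update
        self._pending[seq] = (node, status)
        # Apply every buffered update that is next in line.
        while self._applied + 1 in self._pending:
            node, status = self._pending.pop(self._applied + 1)
            self.state[node] = status
            self._applied += 1


leader = Leader()
updates = [
    leader.stamp("node-a", "up"),
    leader.stamp("node-a", "down"),
    leader.stamp("node-b", "up"),
]

in_order, shuffled = Replica(), Replica()
for u in updates:
    in_order.receive(u)
for u in reversed(updates):   # simulate out-of-order delivery
    shuffled.receive(u)

print(in_order.state == shuffled.state)
```

Because both replicas apply the same updates in the same order, they end up with the same view of the cluster even though the messages reached them in a different sequence.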

You can imagine ZooKeeper as a distributed filesystem, because it organizes its information analogously to a filesystem. It is headed by a root directory (/). ZooKeeper nodes, or znodes, are maintained below this; the name is intended to distinguish them from computer nodes.
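The filesystem analogy can be sketched as a small in-memory tree in Python. This is only an illustration, not the ZooKeeper client API: the `ZNode` and `ZTree` classes and their methods are hypothetical, and the sketch assumes that each znode can carry a small byte payload as well as child znodes.

```python
class ZNode:
    """Toy znode: holds a byte payload and may also have child znodes."""

    def __init__(self, data=b""):
        self.data = data
        self.children = {}   # child name -> ZNode


class ZTree:
    """Toy hierarchical namespace rooted at '/'."""

    def __init__(self):
        self.root = ZNode()

    def _walk(self, path):
        node = self.root
        for part in path.strip("/").split("/"):
            if part:
                node = node.children[part]   # KeyError if a znode is missing
        return node

    def create(self, path, data=b""):
        parent_path, _, name = path.rstrip("/").rpartition("/")
        if not name:
            raise ValueError("cannot create the root znode")
        parent = self._walk(parent_path)
        if name in parent.children:
            raise ValueError(f"{path} already exists")
        parent.children[name] = ZNode(data)

    def get(self, path):
        return self._walk(path).data

    def get_children(self, path):
        return sorted(self._walk(path).children)


tree = ZTree()
tree.create("/app", b"v1")
tree.create("/app/config", b"timeout=30")
tree.create("/app/workers")

print(tree.get("/app"))            # b'v1'
print(tree.get_children("/app"))   # ['config', 'workers']
```

In this sketch, `/app` stores data of its own and at the same time serves as the parent of `/app/config` and `/app/workers`, which is where the analogy with an ordinary filesystem starts to bend.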

A znode acts both as a binary file and a directory for more znodes, ...
