Controlling Amazon Cloud with Boto

The Amazon Cloud offers a range of services for dynamically scaling your own server-based services, including the Elastic Compute Cloud (EC2), which is the core service, various storage offerings, load balancers, and DNS. It makes sense to control these via the web front end if you only manage a few services and the configuration rarely changes. The command-line tool, which is available from Amazon for free, is better suited for more complex setups.

If you want to write your own scripts to control your own cloud infrastructure, however, Boto provides an alternative in the form of an extensive Python module that largely covers the Amazon API.

Python Module

Boto was written by Mitch Garnaat, who lists a number of application examples in his blog. Boto is available from GitHub under the MIT license. You can install the software using the Python package manager, pip:

pip install boto

To use the library, you first need to generate a connection object that maps the connection to the respective Amazon service:

from boto.ec2 import EC2Connection
conn = EC2Connection(access_key, secret_key)

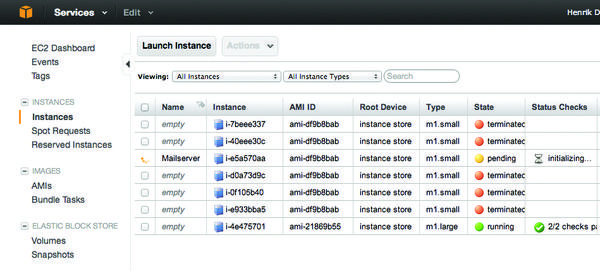

As you can see, the EC2Connection constructor expects two keys, an access key and a secret key, which you can manage via Amazon Identity and Access Management (IAM). In the Users section of the IAM Management Console, you can go to User Actions and select Manage Access Keys to generate a new access key (Figure 1).

Figure 1: Amazon Identity and Access Management (IAM) is used to generate the access credentials needed to use the web service.


The secret key can be downloaded immediately after it is generated but cannot be retrieved later, for security reasons. In other words, you want to download the CSV file and store it in a safe place where you can find it again even six months later.
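Rather than pasting the keys from the CSV file into a script, you can keep them out of the source code. The following is a minimal sketch, assuming the keys have been exported as the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY; the helper names load_credentials() and connect() are made up for this example and are not part of Boto.

```python
import os


def load_credentials(env=None):
    """Return (access_key, secret_key) read from environment variables."""
    env = os.environ if env is None else env
    try:
        return env["AWS_ACCESS_KEY_ID"], env["AWS_SECRET_ACCESS_KEY"]
    except KeyError as missing:
        raise RuntimeError("missing environment variable %s" % missing)


def connect(env=None):
    """Open an EC2 connection with keys taken from the environment."""
    # Imported here so load_credentials() works even without boto installed.
    from boto.ec2 import EC2Connection
    access_key, secret_key = load_credentials(env)
    return EC2Connection(access_key, secret_key)
```

Calling connect() then yields the same kind of connection object as the explicit EC2Connection(access_key, secret_key) call shown earlier.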

The preceding call connects to the default region of the Amazon Web Service, which is located in the United States. To select a different region, Boto provides a connect_to_region() call. For example, the following command connects to the Amazon datacenter in Ireland:

conn = boto.ec2.connect_to_region("eu-west-1")

Thanks to the connection object, users can typically use all the features a service provides. In the case of EC2, this equates to starting an instance, which is similar to a virtual machine. To do this, you need an image (AMI) containing the data for the operating system. Amazon itself offers a number of images, including free systems as well as commercial offerings, such as Windows Server or Red Hat Enterprise Linux. Depending on the license, use is billed at a correspondingly higher hourly rate.

AWS users have also created images that are preconfigured for web services and just about any other application you can imagine. For the time being, you can discover the identifier you need to start an image instance in the web console, which also includes a search function. The following call launches a small instance (type m1.small) using the ami-df9b8bab image:

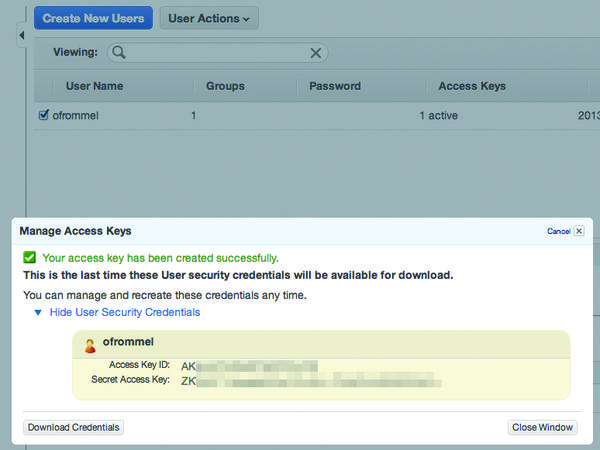

conn.run_instances('ami-df9b8bab', instance_type='m1.small')

If you take a look at the EC2 front end, you can see the instance launch (Figure 2).

That’s it basically; you can now use the newly created virtual server. Amazon points out that users themselves are responsible for ensuring that the selected instance type and the AMI match. The cloud provider does not perform any checks.
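A freshly launched instance starts out in the pending state, so a script usually has to wait before it can use the server. The following is a minimal sketch of such a wait loop; it relies on the state attribute and the update() method of Boto's instance objects, whereas the function name wait_for_state() and its poll and timeout defaults are assumptions chosen for this example.

```python
import time


def wait_for_state(instance, target="running", poll=5, timeout=300,
                   sleep=time.sleep):
    """Poll instance until it reaches target.

    Returns True on success and False if timeout seconds pass first.
    """
    waited = 0
    while instance.state != target:
        if waited >= timeout:
            return False
        sleep(poll)
        waited += poll
        instance.update()  # refreshes instance.state from the EC2 API
    return True
```

The sleep parameter exists only so that the loop can be tried out without real delays; in a normal script, you would call wait_for_state(instance) with the defaults.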

Reservation

To stop an instance you have started, it would be useful to have direct access to it in Boto. This access can be achieved by storing the return value of the above call in a variable: run_instances() returns a reservation object whose instances attribute is a list of all the instances started by that call. Listing 1 shows the complete script for starting and terminating an instance.

Listing 1: launch.py

import boto.ec2

# Connect to the Ireland region and launch one instance
conn = boto.ec2.connect_to_region("eu-west-1")
reservation = conn.run_instances('ami-df9b8bab', instance_type='m1.small')
instance = reservation.instances[0]

# raw_input() is Python 2; use input() on Python 3
raw_input("Press ENTER to stop instance")

# terminate() shuts the instance down and deletes it
instance.terminate()

For better orientation with a large number of instances, the Amazon Cloud provides tags. Thus, you can introduce a name tag that you assign when you create the instance:

instance.add_tag("Name", "Mailserver")

The instance object’s tags attribute then stores the tags as a dictionary.
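Because tags is an ordinary dictionary, picking out instances by name takes only a few lines. The following is a minimal sketch; the function name instances_named() is made up for this example, and the function expects a list of instance objects such as reservation.instances.

```python
def instances_named(instances, name):
    """Return the instances whose Name tag equals name."""
    # tags.get() returns None for instances that have no Name tag at all.
    return [inst for inst in instances if inst.tags.get("Name") == name]
```

A call such as instances_named(reservation.instances, "Mailserver") would then return only the instances tagged as shown above.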

To help you stop multiple instances, the EC2 module provides the terminate_instances() method, which expects a list of instance IDs as its parameter. If you did not store the reservations at the start, you can now retrieve them with the somewhat misleadingly named get_all_instances(). Again, each reservation includes a list of instances, even the ones that have been terminated in the meantime:

>>> reservations = conn.get_all_instances()
>>> reservations[0].instances[0].id
u'i-1df79851'
>>> reservations[0].instances[0].state
u'terminated'
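Because get_all_instances() also returns instances that are already gone, it makes sense to filter by state before calling terminate_instances(). The following is a minimal sketch of that combination; the helper names live_instance_ids() and terminate_all() are assumptions made for this example.

```python
def live_instance_ids(reservations):
    """Collect the IDs of all instances that are not already terminated."""
    return [
        instance.id
        for reservation in reservations
        for instance in reservation.instances
        if instance.state != "terminated"
    ]


def terminate_all(conn):
    """Terminate every live instance visible through conn (use with care)."""
    ids = live_instance_ids(conn.get_all_instances())
    if ids:  # skip the API call when there is nothing to terminate
        conn.terminate_instances(instance_ids=ids)
    return ids
```

Note that terminate_all() affects every instance in the connected region, so a real script would probably narrow the list further, for example by tag.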

If you add a few lines, like those in Listing 2, to a script, you can call the script with python -i.

Listing 2: interact.py

# call with python -i
import boto.ec2

conn = boto.ec2.connect_to_region("eu-west-1")
reservations = conn.get_all_instances()

for r in reservations:
    for i in r.instances:
        print r.id, i.id, i.state

You then end up in an interactive Python session in which you can further explore the EC2 functions, for example, using the dir(<object>) command:

$ python -i interact.py
r-72ffb53e i-1df79851 terminated
r-9b6b61d4 i-3021247f terminated
>>>

To save yourself the trouble of repeatedly typing the region and the authentication information, Boto gives you the option of storing these variables in a configuration file, either for each user as ~/.boto or globally as /etc/boto.cfg.

The configuration file is divided into sections that usually correspond to a specific Amazon service. Access credentials are stored in the Credentials section. Setting the region is not really convenient because, in addition to the region name, you also need to enter the matching endpoint; however, you only need to do this work the first time. You can retrieve the required data interactively, as shown in Listing 3.

Listing 3: Regions

>>> import boto.ec2
>>> regions = boto.ec2.regions()
>>> regions
[RegionInfo:ap-southeast-1, RegionInfo:ap-southeast-2, \
RegionInfo:us-west-2, RegionInfo:us-east-1, RegionInfo:us-gov-west-1, \
RegionInfo:us-west-1, RegionInfo:sa-east-1, RegionInfo:ap-northeast-1, \
RegionInfo:eu-west-1]
>>> regions[-1].name
'eu-west-1'
>>> regions[-1].endpoint
'ec2.eu-west-1.amazonaws.com'

A complete configuration file containing a region, an endpoint, and access credentials is shown in Listing 4.

Listing 4: ~/.boto

[Boto]
ec2_region_name = eu-west-1
ec2_region_endpoint = ec2.eu-west-1.amazonaws.com

[Credentials]
aws_access_key_id=AKIABCDEDFGEHIJ
aws_secret_access_key=ZSDSLKJSDSDLSKDJTAJEm+7fjU23223131VDXCXC+

As mentioned before, the regional data is service specific; after all, you might want to run the services in different regions. The region for EC2 in this example is thus ec2_<region_name>, whereas the S3 storage service uses s3_<region_name>, the load balancer uses elb_<region_name>, and so on.
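Because the configuration file uses the familiar INI format, you can check it with the configparser module from the Python 3 standard library before Boto ever reads it. The following is a minimal sketch that parses a file laid out like Listing 4; the key values are placeholders, and the function name read_boto_settings() is an assumption made for this example.

```python
import configparser

# Placeholder values; a real file would contain your own keys.
SAMPLE = """\
[Boto]
ec2_region_name = eu-west-1
ec2_region_endpoint = ec2.eu-west-1.amazonaws.com

[Credentials]
aws_access_key_id = EXAMPLEKEYID
aws_secret_access_key = EXAMPLESECRET
"""


def read_boto_settings(text):
    """Parse Boto-style configuration text and return the EC2 settings."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return {
        "region": parser.get("Boto", "ec2_region_name"),
        "endpoint": parser.get("Boto", "ec2_region_endpoint"),
        "has_credentials": parser.has_option("Credentials",
                                             "aws_access_key_id"),
    }


settings = read_boto_settings(SAMPLE)
```

To check a real file, you would pass in the contents of ~/.boto instead of SAMPLE; a missing section or option then shows up as a configparser exception.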

The API is a little confusing because some functions are assigned to individual services and can be found in the matching Python modules, whereas others that do the same job are located in the top-level boto module. For example, to set up a connection, you have both boto.ec2.connect_to_region() and boto.connect_ec2(). Although boto.connect_s3() follows the same pattern, the corresponding class in the S3 module is named S3Connection. The API documentation provides all the details.

Load Balancers

To set up a load balancer in the Amazon Cloud with Boto, you first need a Connection object, which you can retrieve with the boto.ec2.elb.connect_to_region() or boto.connect_elb() calls. Another function creates a load balancer, to which you can assign individual nodes:

lb = conn.create_load_balancer('lb', zones, ports)
lb.register_instances(['i-4f8cf126'])

Alternatively, you can use the Autoscaling module, which automatically adds nodes to the load balancer or removes them depending on the load.
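In the create_load_balancer() call shown above, zones is a list of availability zone names, and ports is a list of listener tuples of the form (load balancer port, instance port, protocol). The following is a minimal sketch of how these arguments could be put together; the helper names, the port numbers, and the zone names in the usage note are assumptions chosen for illustration.

```python
def build_listeners(port_map, protocol="http"):
    """Turn {lb_port: instance_port} into listener tuples."""
    return [(lb_port, instance_port, protocol)
            for lb_port, instance_port in sorted(port_map.items())]


def create_web_balancer(conn, name, zones, instance_ids):
    """Create a load balancer that forwards port 80 to port 8080."""
    ports = build_listeners({80: 8080})
    lb = conn.create_load_balancer(name, zones, ports)
    lb.register_instances(instance_ids)
    return lb
```

With a connection from boto.ec2.elb.connect_to_region("eu-west-1"), a call could look like create_web_balancer(conn, 'lb', ['eu-west-1a', 'eu-west-1b'], ['i-4f8cf126']).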

The Boto library includes modules for nearly every service Amazon offers. Thus, besides EC2, it covers the basic services, such as Secure Virtual Network, Autoscaling, DNS, and Load Balancing. For simple web applications with greater scalability and availability requirements, these tools will take you quite a long way. Additionally, Amazon offers a wide range of possibilities for storing data, including the DynamoDB distributed database, the Elastic Block Store, a relational database, and a large-scale “data warehouse” system.

Of course, you can always install a MySQL, PostgreSQL, or NoSQL database on a number of Linux instances and replicate it to other instances. For load distribution, you would then use the load balancing service, possibly in cooperation with the Amazon CloudFront content delivery network.

When it comes to managing your own cloud installation, you have too many choices, rather than too few. In addition to autoscaling, your options include Elastic Beanstalk, OpsWorks, and CloudFormation, all of which offer similar features. This list of services, however, is not complete; the PDF on Amazon’s website provides an overview.

Conclusions

Boto lets you write scripts that manage complex setups in the Amazon Cloud. Even if you’re not a Python expert, you should have little difficulty acquiring the necessary knowledge. The documentation for the Python module is usable, although many of the API’s methods are fairly complex, with their multitude of parameters. Finally, don’t forget that cloud computing frameworks already provide some of the functionality that you can program with Boto, so take care not to reinvent the wheel. More information about Boto can be found in the documentation. In printed form, check out the Python and AWS Cookbook, which is also by the Boto author.
