Lead Image © Lucy Baldwin, 123RF.com

Filesystem Murder Mystery

Hunting down a performance problem

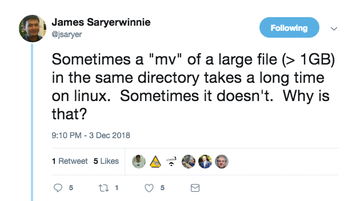

As I was generating results for one of last fall's columns, James Saryerwinnie of the AWS team was chasing an interesting performance problem and relaying his progress live on Twitter (Figure 1) [1], giving me yet another unnecessary opportunity to procrastinate. What followed was one of the most interesting performance-tuning threads on Twitter in recent memory and a great practical demonstration of the investigative nature of performance problems.

Saryerwinnie was observing occasional unexplained delays of a few seconds while renaming some gigabyte-sized files [2] on a fast cloud instance [3]. I was able to reproduce his result on an AWS EC2 m5d.large instance running Ubuntu Server 18.04 (Figure 2). I used an 8GB EBS gp2 volume, which showed remarkable performance in this particular test (including a throughput of 175MBps), but similar results are achievable at other scales with some lucky timing.
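To get a feel for this kind of measurement, the following minimal Python sketch writes a large file and times the rename that follows. It is an illustration only, not the test Saryerwinnie or I actually ran: the function name `timed_rename`, the file names, the 1GiB size, and the five attempts are all assumptions chosen for the example, and whether any delay shows up will depend on the filesystem, the storage, and timing.

```python
import os
import tempfile
import time


def timed_rename(directory, size_bytes, chunk_bytes=1 << 20):
    """Write size_bytes of zeros to a file, rename it, and return
    the time spent in the rename call, in seconds.

    Hypothetical helper for illustration; file names are arbitrary.
    """
    src = os.path.join(directory, "rename-test.src")
    dst = os.path.join(directory, "rename-test.dst")
    chunk = b"\0" * chunk_bytes

    # Write the file in chunks so memory use stays small.
    remaining = size_bytes
    with open(src, "wb") as f:
        while remaining > 0:
            n = min(remaining, chunk_bytes)
            f.write(chunk[:n])
            remaining -= n

    # Time only the rename itself, not the write.
    start = time.perf_counter()
    os.rename(src, dst)
    elapsed = time.perf_counter() - start

    os.remove(dst)
    return elapsed


if __name__ == "__main__":
    # Assumed parameters: 1GiB files in the current directory, 5 attempts.
    with tempfile.TemporaryDirectory(dir=".") as workdir:
        for attempt in range(5):
            seconds = timed_rename(workdir, 1 << 30)
            print(f"attempt {attempt}: rename took {seconds:.3f} s")
```

Run from a directory on the volume under test, the script prints one timing per attempt; an occasional outlier among otherwise near-instant renames would be the kind of symptom described above.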
