Ceph object store innovations

Renovation

Help On the Way

SUSE has also noticed that Calamari is hardly suitable for mass deployment. SUSE was a Ceph pioneer, especially in Europe: Long before Red Hat acquired Inktank, the company was experimenting with the object store. Although SUSE has enough developers to build a new GUI itself, it instead decided to cooperate with it-novum, the company behind openATTIC [5], a kind of appliance solution for managing storage based on open source software.

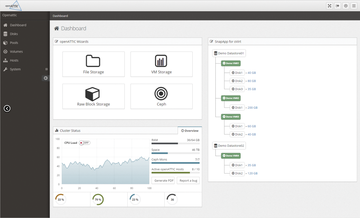

For some time, the openATTIC [6] beta branch has contained a plugin that can be used for basic Ceph cluster management, which is exactly the part of openATTIC in which SUSE is interested (Figure 3). In the future collaboration with it-novum, SUSE is looking to improve the Ceph plugin for openATTIC and extend it to include additional features. In the long term, the idea is to build a genuine competitor to Calamari. If you want, you can take a closer look at the Ceph functions in openATTIC.

Figure 3: openATTIC is setting out to become the new GUI of choice for Ceph administration, thanks to a collaboration between SUSE and it-novum.

It is not known when the first results of the collaboration between SUSE and it-novum can be expected; the press releases do not cover this topic. However, the two companies are unlikely to take long to present something, because there is clearly genuine demand right now, and openATTIC provides a solid foundation.

Disaster Recovery

In the unanimous opinion of all admins with experience of the product, one of the biggest drawbacks of Ceph is the lack of support for asynchronous replication, which is necessary to build a disaster recovery setup. A Ceph cluster could be set up to span multiple data centers if the connections between the sites are fast enough.

Then, however, the latency problem rears its ugly head again: In Ceph, a client receives confirmation of a successful write only after the object has been copied to the number of OSDs defined by the replication policy. Even really good links between data centers have a higher latency than write access to filesystems can tolerate. If you want to build a multi-data-center Ceph cluster in this way, you have to accept very high latencies for write access to the virtual disk on the client side.
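The effect of waiting for every replica can be illustrated with a small model. The following Python sketch is a deliberate simplification, not Ceph code: it assumes the replicas are written in parallel and that the acknowledgment is sent once the slowest one has answered. The function name and all latency figures are hypothetical.

```python
def write_ack_latency_ms(replica_rtts_ms, local_commit_ms=1.0):
    """Time until the client sees the write acknowledgment (simplified model).

    Assumption: all replicas are written in parallel, and the ack is
    sent only after the slowest replica has confirmed the write.
    """
    if not replica_rtts_ms:
        raise ValueError("at least one replica is required")
    return local_commit_ms + max(replica_rtts_ms)


# Hypothetical round-trip times in milliseconds:
# all three replicas in the same data center ...
same_site = write_ack_latency_ms([0.2, 0.3, 0.25])
# ... versus one replica behind a 20 ms link to a second site.
stretched = write_ack_latency_ms([0.2, 0.3, 20.0])

print(f"same site: {same_site:.1f} ms, stretched: {stretched:.1f} ms")
```

In this model, a single remote replica determines the latency of every write, no matter how fast the local OSDs are.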

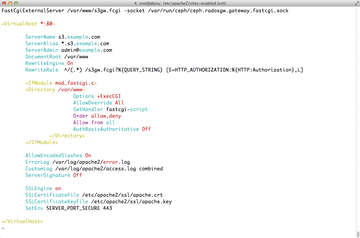

Currently, you have several approaches to solving the problem in Ceph. Replication between two Ceph clusters with the federated RADOS gateway (Figure 4) is suitable for classic storage services in the style of Dropbox. An agent that is part of the RADOS gateway synchronizes data between the pools of two RADOS clusters at regular intervals. The only drawback: The solution is not suitable for RBDs, and it is exactly these devices that are regularly used in OpenStack installations.

Figure 4: The RADOS gateway offers Dropbox-like services for Ceph. In Jewel, the developers have improved off-site replication in the RADOS gateway.

A different solution is thus needed, and the developers laid the foundation for it in the Jewel version of Ceph: In future, the RBD driver will keep a journal that records incoming write operations, so you can track which changes are taking place on each RBD. This eliminates the need for synchronous replication across links between data centers: If the content of the journal is regularly replicated between sites, a consistent state can always be ensured on both sides of the link.

If worst comes to worst, it's possible that not all the writes from the active data center reach the standby data center if the former is hit by a meteorite. However, the provider will probably prefer this possibility to day-long failure of all services.
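The journal idea can be sketched in a few lines of Python. This is an illustrative model only, not the RBD implementation: the class names, the in-memory journal, and the replay method are all assumptions made for the example.

```python
class JournaledImage:
    """Toy block device that records each write in a journal first."""

    def __init__(self, size):
        self.data = bytearray(size)
        self.journal = []  # ordered (offset, payload) entries

    def write(self, offset, payload):
        if offset < 0 or offset + len(payload) > len(self.data):
            raise ValueError("write outside of image")
        self.journal.append((offset, bytes(payload)))
        self.data[offset:offset + len(payload)] = payload


class StandbyImage:
    """Copy at the second site, updated only by replaying the journal."""

    def __init__(self, size):
        self.data = bytearray(size)
        self.applied = 0  # number of journal entries replayed so far

    def replay(self, journal):
        # Apply the entries in their original order, starting where
        # the previous replay stopped.
        for offset, payload in journal[self.applied:]:
            self.data[offset:offset + len(payload)] = payload
        self.applied = len(journal)


primary = JournaledImage(8)
standby = StandbyImage(8)

primary.write(0, b"AAAA")
primary.write(2, b"BB")
standby.replay(primary.journal)  # periodic transfer of the journal
primary.write(4, b"CCCC")        # not yet transferred if the site fails now

print(bytes(primary.data))
print(bytes(standby.data))
```

Because entries are replayed in order, the standby copy always corresponds to a state the primary image actually had at some point; it merely lags behind by whatever has not yet been transferred.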

Incidentally, the developers have again given the previously mentioned multisite replication feature in the RADOS gateway a major upgrade in Jewel. The setup can now be run in active-active mode. The agent used thus far will, in future, be an integral part of the RADOS gateway itself, thus removing the need to run an additional service.

Sys Admin Everyday Life

In addition to the major construction projects, some minor changes in the Jewel release also attracted attention. Although unit files for operation with systemd have officially been around in Ceph since mid-2014, the developers enhanced them again for Jewel. A unit file now exists for each Ceph service, and individual instances such as OSDs can be started or stopped in a targeted way on systems with systemd.
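Addressing a single instance could look like the following Python sketch, which only assembles the systemctl command line and does not run it. It assumes the template naming scheme ceph-<type>@<id>.service for the units; the helper names are hypothetical, and you should check the unit names on your own system (e.g., with systemctl list-units) before relying on them.

```python
ALLOWED_ACTIONS = {"start", "stop", "restart", "status"}


def ceph_unit(daemon, instance):
    """Build the systemd unit name for one daemon instance.

    Assumption: units follow the template scheme ceph-<type>@<id>.service.
    """
    return f"ceph-{daemon}@{instance}.service"


def systemctl_cmd(action, daemon, instance):
    """Return the argument list for systemctl without executing it."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    return ["systemctl", action, ceph_unit(daemon, instance)]


# Stop only OSD 3 and leave all other OSDs on the host running.
cmd = systemctl_cmd("stop", "osd", 3)
print(" ".join(cmd))
```

The resulting list can be handed to subprocess.run() on a host where the units exist.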

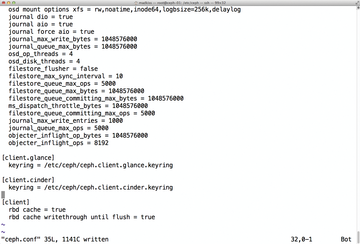

The same applies to integration with the SELinux security framework. Ubuntu users who would rather rely on AppArmor, the Ubuntu default, will need to put in some manual work if things go wrong. The developers have also looked into the topic of security for Jewel: Ceph uses its own authentication system, CephX, when users or individual services such as OSDs, MDSs, or MONs want to log in to the cluster (Figure 5).

Figure 5: CephX will be better protected in future against brute force attacks. However, it is unclear whether this function will make it into Jewel before the feature freeze.

For protection against brute force attacks, a client that tries to log in to the cluster with false credentials too often will be added automatically to a blacklist. Ceph then refuses all further login attempts from that client, regardless of the validity of the username and password combination. When this issue went to press, this function still did not appear in Jewel.
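The mechanism described can be sketched as follows. This is not CephX code: the class, the threshold of three failed attempts, and the plain-text secrets are assumptions chosen purely to illustrate the behavior.

```python
class LoginGuard:
    """Toy login check that blacklists clients after repeated failures."""

    def __init__(self, credentials, max_failures=3):
        self.credentials = dict(credentials)  # user -> secret
        self.max_failures = max_failures      # hypothetical threshold
        self.failures = {}                    # client -> failed attempts
        self.blacklist = set()

    def login(self, client, user, secret):
        # A blacklisted client is refused even with valid credentials.
        if client in self.blacklist:
            return False
        if user in self.credentials and self.credentials[user] == secret:
            self.failures.pop(client, None)
            return True
        self.failures[client] = self.failures.get(client, 0) + 1
        if self.failures[client] >= self.max_failures:
            self.blacklist.add(client)
        return False


guard = LoginGuard({"client.admin": "s3cret"})

for guess in ("a", "b", "c"):
    guard.login("10.0.0.5", "client.admin", guess)

# Correct secret, but the client is already on the blacklist.
print(guard.login("10.0.0.5", "client.admin", "s3cret"))
# A different client is not affected.
print(guard.login("10.0.0.9", "client.admin", "s3cret"))
```

A real implementation would also need a way to expire or remove blacklist entries; otherwise, a legitimate client with a misconfigured key would stay locked out.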
