Lead Image © THISSATAN KOTIRAT, 123RF.com

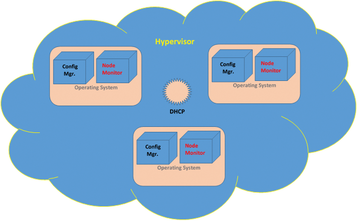

A self-healing VM system

Server, Heal Thyself

A common definition of a self-healing system is a set of servers that can detect a malfunction in their own operations and then repair the error without outside intervention. In this article, "repair" specifically means replacing the problematic node entirely. In the example discussed here, I used Monit [1] to monitor the state of each virtual machine and Ansible to replace faulty nodes. A DHCP server was also configured to assign network addresses to new nodes and to reclaim addresses that are no longer in use.
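The detect-and-replace cycle described above can be sketched as a toy simulation in Python. This is not the author's Monit/Ansible setup: the class names (`AddressPool`, `Cluster`), the node names, and the 192.168.10.x address range are hypothetical. The `healthy` flags stand in for Monit's checks, `_provision` stands in for the Ansible run that builds a replacement VM, and `AddressPool` stands in for the DHCP server that leases and reclaims addresses.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class AddressPool:
    """Hypothetical stand-in for the DHCP server: leases and reclaims addresses."""
    free: list[str]
    leased: dict[str, str] = field(default_factory=dict)  # node name -> address

    def lease(self, node: str) -> str:
        # Hand out the first free address to a newly provisioned node
        addr = self.free.pop(0)
        self.leased[node] = addr
        return addr

    def release(self, node: str) -> None:
        # Reclaim the address of a removed node so it can be reused later
        self.free.append(self.leased.pop(node))


class Cluster:
    """Hypothetical cluster whose faulty nodes are replaced, never repaired in place."""

    def __init__(self, pool: AddressPool, size: int) -> None:
        self.pool = pool
        self._ids = itertools.count(1)
        self.healthy: dict[str, bool] = {}  # node name -> health flag (Monit's role)
        for _ in range(size):
            self._provision()

    def _provision(self) -> str:
        # Stand-in for an Ansible run that creates a fresh VM
        name = f"node{next(self._ids)}"
        self.pool.lease(name)
        self.healthy[name] = True
        return name

    def heal(self) -> list[tuple[str, str]]:
        """Replace every unhealthy node; return (old, new) name pairs."""
        replaced = []
        # Snapshot the failed nodes first so the dict is not modified while iterating
        for name in [n for n, ok in self.healthy.items() if not ok]:
            del self.healthy[name]   # destroy the faulty node
            self.pool.release(name)  # give its address back to the pool
            replaced.append((name, self._provision()))
        return replaced
```

A short usage example: three nodes are built, one is marked as failed (as Monit might report), and `heal()` swaps it for a new node with a freshly leased address.

```python
pool = AddressPool(free=[f"192.168.10.{i}" for i in range(100, 104)])
cluster = Cluster(pool, size=3)
cluster.healthy["node2"] = False  # simulate a detected malfunction
print(cluster.heal())  # [('node2', 'node4')]
```

Replacing the node outright, rather than trying to fix it, keeps the logic simple: the only state that must be cleaned up is the node's entry and its address lease.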

This article does not provide an in-depth tutorial on the technologies used in the examples; readers can consult their documentation as needed. Figure 1 shows an overview of the setup.

Clouds and Hypervisors

The term "hypervisor" in this article means any platform or program that manages virtual machines so that they share the underlying hardware resources of a cluster of host servers. Under this definition, Amazon Web Services and Azure also count as hypervisors. Traditional examples, such as Red Hat KVM, VMware ESXi, and Xen, are more suitable for this
