
AI and the BooM Stack

Conclusion

The Intelligence BooM reference stack is a starting point for visualizing the openEuler AI environment. The powerful tools of the openEuler application layer rest on a full stack of open source components tailored and tuned for AI workloads. In addition to the tools described in this article, openEuler includes drivers and software development kits (SDKs) that support out-of-the-box operation in a wide range of AI scenarios. See the box entitled “Performance Matters” for more on the performance tuning tools included with openEuler. The “openEuler Intelligence: A Case Study” box shows how openEuler Intelligence can bring the power of AI to real-world problems.

openEuler runs on a variety of hardware and is supported by a large community of users, including many AI specialists employed in both academia and industry. If you’re interested in learning more about AI in openEuler, contact the openEuler support team at contact@openeuler.io.

This article was made possible by support from openEuler by OpenAtom Foundation through Linux New Media's Topic Subsidy Program.

Performance Matters

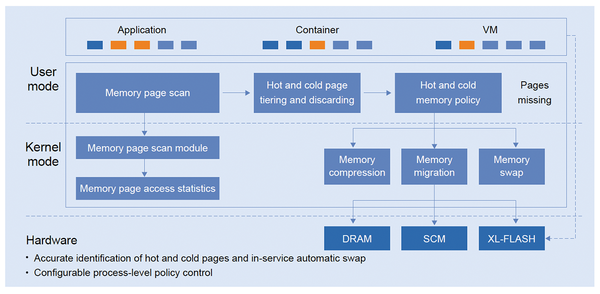

AI applications place significant demands on performance. The openEuler team has years of experience tuning HPC systems, cloud environments, and server rooms, and it brings that expertise to the challenge of tuning systems for AI. openEuler includes several tools for improving and optimizing performance. For instance, A-Tune (Figure 4) is an AI-powered tuning engine that uses configuration data and performance metrics to tune the system automatically, then continues to iterate with a parameter search algorithm to achieve optimum performance. Another tool, etmem (Figure 5), organizes memory into tiers, automatically routing hot (frequently accessed) data to high-speed DRAM and cold data to low-speed media. The Hybrid Storage Acceleration Kit (HSAK) speeds up I/O for NVMe devices. Also included are custom versions of the GCC and LLVM compilers optimized for the openEuler environment.
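A-Tune's internals are beyond the scope of this article, but the basic idea of iterating on configuration parameters against a performance metric can be illustrated with a short Python sketch. The example below is a simple random-perturbation hill climb, not A-Tune's actual search algorithm. The parameter names, their ranges, and the `synthetic_benchmark()` function are hypothetical stand-ins for real system tunables and a real workload measurement.

```python
import random
from typing import Callable, Dict, Tuple

# Hypothetical tunable parameters and their allowed ranges (illustrative only;
# these are NOT A-Tune's actual parameter definitions).
SEARCH_SPACE: Dict[str, Tuple[int, int]] = {
    "io_queue_depth": (1, 256),
    "worker_threads": (1, 64),
    "readahead_kb": (0, 4096),
}


def synthetic_benchmark(cfg: Dict[str, int]) -> float:
    """Hypothetical stand-in for a performance measurement (higher is better).

    A real tuner would apply cfg to the system and run a workload; this
    made-up function simply peaks at depth 64, 16 threads, 512 KB readahead.
    """
    return -(
        ((cfg["io_queue_depth"] - 64) / 256) ** 2
        + ((cfg["worker_threads"] - 16) / 64) ** 2
        + ((cfg["readahead_kb"] - 512) / 4096) ** 2
    )


def tune(
    benchmark: Callable[[Dict[str, int]], float],
    baseline: Dict[str, int],
    iterations: int = 200,
    seed: int = 0,
) -> Tuple[Dict[str, int], float]:
    """Perturb one parameter at a time; keep a candidate only if it scores better."""
    rng = random.Random(seed)
    best_cfg = dict(baseline)  # copy so the caller's baseline is not modified
    best_score = benchmark(best_cfg)
    for _ in range(iterations):
        candidate = dict(best_cfg)
        # Pick one parameter and nudge it, clamped to its allowed range.
        name = rng.choice(list(SEARCH_SPACE))
        lo, hi = SEARCH_SPACE[name]
        step = max(1, (hi - lo) // 8)
        candidate[name] = min(hi, max(lo, candidate[name] + rng.randint(-step, step)))
        score = benchmark(candidate)
        if score > best_score:
            best_cfg, best_score = candidate, score
    return best_cfg, best_score


if __name__ == "__main__":
    start = {"io_queue_depth": 8, "worker_threads": 4, "readahead_kb": 128}
    cfg, score = tune(synthetic_benchmark, start)
    print("baseline score:", round(synthetic_benchmark(start), 4))
    print("tuned config:  ", cfg, "score:", round(score, 4))
```

Because the loop only ever accepts improvements, the result can never score worse than the starting configuration. A production tuner would typically use a smarter search strategy and real measurements, which are noisy, so it would also need repeated runs before trusting a gain.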
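The hot/cold split that etmem performs can be pictured with an equally simple model. The following Python sketch is not etmem's implementation. It assumes a hypothetical trace of page IDs recorded during one sampling window, counts the accesses per page, and assigns the most frequently used pages (up to a hypothetical DRAM capacity and hot threshold) to the fast tier and everything else to the slow tier.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def classify_pages(
    accesses: Iterable[int],
    dram_capacity: int,
    hot_threshold: int,
) -> Tuple[List[int], List[int]]:
    """Split page IDs into a fast (DRAM) tier and a slow tier.

    accesses: page IDs observed during one sampling window (hypothetical trace).
    dram_capacity: maximum number of pages the fast tier can hold.
    hot_threshold: minimum access count for a page to count as hot.
    """
    counts = Counter(accesses)
    # Rank pages from most to least accessed; break ties by page ID.
    ranked = sorted(counts, key=lambda page: (-counts[page], page))
    # Hot pages: above the threshold, limited by DRAM capacity.
    hot = [page for page in ranked if counts[page] >= hot_threshold][:dram_capacity]
    hot_set = set(hot)
    # Everything else that was seen goes to the slow tier.
    cold = sorted(page for page in counts if page not in hot_set)
    return hot, cold


if __name__ == "__main__":
    trace = [1, 1, 1, 2, 3, 3, 3, 3, 4, 1, 5, 3]
    hot, cold = classify_pages(trace, dram_capacity=2, hot_threshold=3)
    print("DRAM tier:", hot)   # DRAM tier: [3, 1]
    print("slow tier:", cold)  # slow tier: [2, 4, 5]
```

With the sample trace, page 3 (five accesses) and page 1 (four accesses) land in DRAM, and the remaining pages go to slow media. A real tiering system would also have to handle pages that were not touched at all during the window, which this sketch never sees.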

openEuler Intelligence: A Case Study

The Intelligence BooM platform is designed to support custom solutions for practical problems. Sangfor Technologies, for example, recently implemented a patch management solution that leverages the ready-to-use AI components built into openEuler Intelligence.

All enterprise Linux developers face a common problem. The Linux mainline kernel releases an update every 9-10 weeks, with each update containing 2,000-3,000 patches. Most enterprise organizations, however, don’t want the disruption of a full kernel update every 10 weeks, so developers must manually select the relevant patches and integrate them into production branches. This manual process leads to inefficiencies and bottlenecks: one update can require up to 80 hours of a senior engineer’s time, and error rates often exceed 10 percent.

Sangfor built its patch management solution around the advanced components in openEuler Intelligence. The solution uses Qwen and other LLMs. Standardized templates generate reports, and a syntax analyzer translates code and patch submissions into natural language suited for LLM comprehension. Deployed as an eight-pipeline workflow, the AI-based solution reduced the analysis time for 100 patches from 200 minutes to 30 minutes. The direct patch application success rate rose from 90 percent to 98 percent, and patch analysis speed improved 50-fold. Overall, the solution reduced the workload for an update from one person-week to one to two hours.

Reducing update time is particularly important in today’s age of heightened security awareness. Sangfor’s AI-based patch management solution reduces the response time for critical vulnerabilities from 14 days to less than 72 hours. By keeping an AI-based development environment close at hand, openEuler enabled a smarter solution to an age-old problem.
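The case study doesn't describe Sangfor's code, but the general idea of turning a raw patch into structured, LLM-friendly text can be sketched briefly. The Python example below is hypothetical throughout: the `TEMPLATE` wording, the sample diff, and the file and function names in it are invented for illustration. It reads a unified diff, collects the changed files and the function context that git prints after each `@@` hunk header, counts added and removed lines, and fills in a fixed report template that could then be handed to a model.

```python
import re
from typing import List

# Matches hunk headers such as "@@ -100,3 +100,4 @@ static int foo_probe(...)";
# group 1 captures the optional function context git prints after the header.
HUNK_RE = re.compile(r"^@@ -\d+(?:,\d+)? \+\d+(?:,\d+)? @@ ?(.*)$")

# Hypothetical standardized template (not Sangfor's actual format).
TEMPLATE = (
    "Patch: {subject}\n"
    "Files changed: {files}\n"
    "Functions touched: {functions}\n"
    "Lines added: {added}, lines removed: {removed}\n"
    "Question: Should this patch be backported to the production branch? Explain why."
)


def summarize_patch(subject: str, diff: str) -> str:
    """Turn a unified diff into a plain-language report using TEMPLATE.

    Simplification: assumes no content line itself begins with "++" or "--",
    which would be mistaken for a file header.
    """
    files: List[str] = []
    functions: List[str] = []
    added = removed = 0
    for line in diff.splitlines():
        if line.startswith("+++ "):
            path = line[4:]  # new-file header, e.g. "+++ b/drivers/example/foo.c"
            files.append(path[2:] if path.startswith("b/") else path)
            continue
        if line.startswith("--- "):
            continue  # old-file header
        match = HUNK_RE.match(line)
        if match:
            context = match.group(1).strip()
            if context and context not in functions:
                functions.append(context)
        elif line.startswith("+"):
            added += 1
        elif line.startswith("-"):
            removed += 1
    return TEMPLATE.format(
        subject=subject,
        files=", ".join(files) or "none",
        functions=", ".join(functions) or "unknown",
        added=added,
        removed=removed,
    )


# Invented sample patch for illustration only.
SAMPLE_DIFF = """\
diff --git a/drivers/example/foo.c b/drivers/example/foo.c
--- a/drivers/example/foo.c
+++ b/drivers/example/foo.c
@@ -100,3 +100,4 @@ static int foo_probe(struct device *dev)
 int ret;
-ret = foo_init(dev);
+ret = foo_init_checked(dev);
+if (ret) goto err;
 return ret;
"""

if __name__ == "__main__":
    print(summarize_patch("foo: check foo_init() result", SAMPLE_DIFF))
```

Keeping the template fixed means every patch reaches the model in the same shape, which is one plausible reason standardized formats help in a workflow like this. A real syntax analyzer would go much further, for example by resolving which subsystems and call paths a change affects.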


Joe Casad

Joe Casad is the editor in chief of Linux Magazine.
