Lead Image © lassedesignen, 123RF

Building a virtual NVMe drive

Pretender

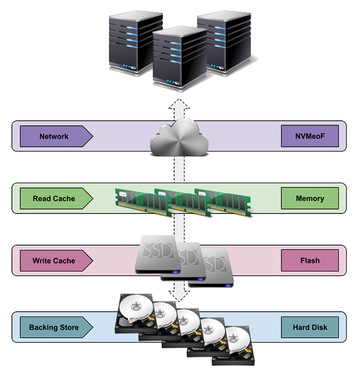

Often, older or slower hardware remains in place while the rest of the environment or world updates to the latest and greatest technologies; take, for example, Non-Volatile Memory Express (NVMe) solid state drives (SSDs) instead of spinning magnetic hard disk drives (HDDs). Even though NVMe drives deliver the performance desired, the capacities (and prices) are not comparable to those of traditional HDDs, so, what to do? Create a hybrid NVMe SSD and export it across an NVMe over Fabrics (NVMeoF) network to one or more hosts that use the drive as if it were a locally attached NVMe device (Figure 1).

The implementation will leverage a large pool of HDDs at your disposal – or, at least, what is connected to your server – and place them into a fault-tolerant MD RAID implementation, making a single large-capacity volume. Also, within MD RAID, a small-capacity and locally attached NVMe drive will act as a write-back cache for the RAID volume. The use of RapidDisk modules [1] to set up local RAM as a small read cache, although not necessary, can sometimes help with repeatable random reads. This entire hybrid block device will then be exported across your standard network, where a host will be able to attach to it and access it as if it were a locally attached

...Buy this article as PDF

(incl. VAT)

Buy ADMIN Magazine

Subscribe to our ADMIN Newsletters

Subscribe to our Linux Newsletters

Find Linux and Open Source Jobs

Most Popular

Support Our Work

ADMIN content is made possible with support from readers like you. Please consider contributing when you've found an article to be beneficial.