
Management improvements, memory scaling, and EOL for FileStore

Refreshed

In the early days, Ceph was considered new, hip, and innovative because it promised scalable storage without ties to established storage solutions. If you were fed up with SANs, Fibre Channel, and complicated management tools, Ceph offered a refreshingly different solution that required no special, expensive hardware. Since then, Ceph has become a commodity, that is, an established solution for specific areas of application, with a market for training as well as for specialists with Ceph knowledge. It's therefore hardly surprising that, unlike in the past, new Ceph releases no longer arrive with masses of new features but instead take things a little easier.

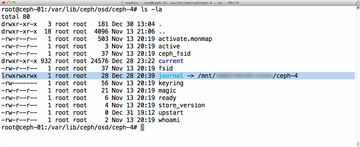

The newly released Ceph version 17.2 [1] bears witness to that trend: Instead of making sweeping changes, the developers have focused on fine-tuning, and existing clusters see relatively few changes. Only if you operate a very old Ceph cluster that still relies on the legacy FileStore on-disk format (Figure 1) will you need to take immediate action. In this article, I explain why this is the case and what else you can and should expect from Ceph 17.2.
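If you are unsure whether your cluster still contains FileStore OSDs, a short script can tell you. The following is a minimal sketch in Python, assuming that `ceph osd metadata --format json` prints a JSON array in which each OSD entry carries an `id` and an `osd_objectstore` field (`"filestore"` or `"bluestore"`). The function names `filestore_osds` and `fetch_osd_metadata` are hypothetical helpers, not part of any Ceph tooling.

```python
import json
import subprocess


def filestore_osds(metadata_json: str) -> list[int]:
    """Return the sorted IDs of OSDs that report the FileStore backend.

    Assumes the input is the JSON array printed by
    `ceph osd metadata --format json`, with an "id" and an
    "osd_objectstore" field per OSD entry.
    """
    entries = json.loads(metadata_json)
    return sorted(
        int(entry["id"])
        for entry in entries
        if entry.get("osd_objectstore") == "filestore"
    )


def fetch_osd_metadata() -> str:
    """Query a live cluster (requires the ceph CLI and admin credentials)."""
    result = subprocess.run(
        ["ceph", "osd", "metadata", "--format", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout
```

On an admin node, `filestore_osds(fetch_osd_metadata())` would list the OSDs that still need migrating. Keeping the parsing separate from the CLI call lets you test the logic offline; for a quick summary without any scripting, `ceph osd count-metadata osd_objectstore` reports how many OSDs use each backend.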
