Best practices for KVM on NUMA servers

Tuneup

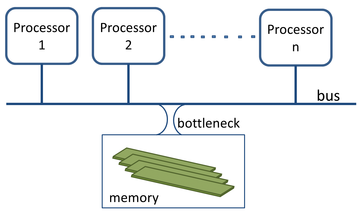

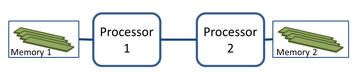

Non-uniform memory access (NUMA) [1] systems have existed for a long time. Makers of supercomputers could not increase the number of CPUs without creating a bottleneck on the bus connecting the processors to the memory (Figure 1). To solve this issue, they changed the traditional monolithic memory approach of symmetric multiprocessing (SMP) servers and spread the memory among the processors to create the NUMA architecture (Figure 2).

The NUMA approach has both advantages and drawbacks. A significant advantage is that it allows more processors with a corresponding increase in performance; when the number of CPUs doubles, performance is

...